Automatic Detection of Uprooted Orchards Based on Orthophoto Texture Analysis

Abstract

1. Introduction

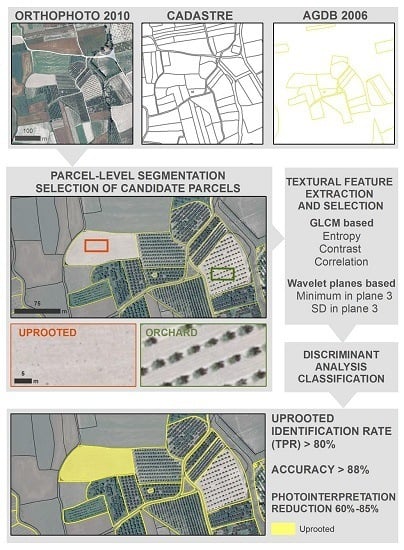

2. Materials and Methods

2.1. Study Area

2.2. Data Preparation

2.3. Parcel-Level Image Segmentation

2.4. Textural Feature Extraction

2.4.1. Textural Features Based on GLCM

2.4.2. Plane-Based Wavelet Features

2.5. Feature Selection

2.6. Parcel-Level Classification

2.7. Performance Evaluation for Uprooted Parcel Identification

3. Results

3.1. Feature Selection

3.1.1. Evaluation of GLCM-Based Textural Features

3.1.2. Evaluation of Textural Features Based on Wavelet Planes

3.2. Parcel-Level Classification

3.3. Effectiveness of Textural Feature Selection

3.4. Comparison of Textural Feature Performance

4. Discussion

4.1. Optimisation of the Uprooted Orchard Detection Process

4.2. Error Sources

4.3. Automatic Identification of Uprooted Parcels as an Aid to Photointerpretation

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AGDBs | Agricultural geographical databases |
| SIFT | Scale-Invariant Feature Transform |
| GLCM | Grey-Level Co-occurrence Matrix |
| VHR | Very High Resolution |
| UAV | Unmanned Aerial Vehicle |
| GIS | Geographic Information System |
| RS | Remote Sensing |
| PNOA | National Plan of Aerial Orthophotography |
| DMC | Digital Mapping Camera |
| U | Uprooted class |
| O | Orchard class |
| GT | Ground Truth |
| H | GLCM-based features |
| W | Wavelet-plane-based features |
| ASM | Angular Second Moment |
| PCA | Principal Component Analysis |
| PC | Principal Component |
| TPR | True Positive Rate |
| TNR | True Negative Rate |
| A | Accuracy |
| P | Precision |

| GLCM-Based Feature | Equation | Direction (°) | Name |
|---|---|---|---|
| Homogeneity | | 0 | h_h_0 |
| | | 45 | h_h_45 |
| | | 90 | h_h_90 |
| | | 135 | h_h_135 |
| | | All | h_h_All |
| Dissimilarity | | 0 | h_d_0 |
| | | 45 | h_d_45 |
| | | 90 | h_d_90 |
| | | 135 | h_d_135 |
| | | All | h_d_All |
| Contrast | | 0 | h_cn_0 |
| | | 45 | h_cn_45 |
| | | 90 | h_cn_90 |
| | | 135 | h_cn_135 |
| | | All | h_cn_All |
| Entropy | | 0 | h_e_0 |
| | | 45 | h_e_45 |
| | | 90 | h_e_90 |
| | | 135 | h_e_135 |
| | | All | h_e_All |
| Angular second moment | | 0 | h_a_0 |
| | | 45 | h_a_45 |
| | | 90 | h_a_90 |
| | | 135 | h_a_135 |
| | | All | h_a_All |
| Mean | | 0 | h_m_0 |
| | | 45 | h_m_45 |
| | | 90 | h_m_90 |
| | | 135 | h_m_135 |
| | | All | h_m_All |
| Standard deviation | | 0 | h_s_0 |
| | | 45 | h_s_45 |
| | | 90 | h_s_90 |
| | | 135 | h_s_135 |
| | | All | h_s_All |
| Correlation | | 0 | h_cr_0 |
| | | 45 | h_cr_45 |
| | | 90 | h_cr_90 |
| | | 135 | h_cr_135 |
| | | All | h_cr_All |

Where $N$ is the number of grey levels, $P$ is the normalized symmetric GLCM of dimension $N \times N$, and $P_{i,j}$ is the $(i,j)$th element of $P$.
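The eight statistics above, each computed for four directions plus "All", give the 40 GLCM features reported in the results table. The following is a minimal, illustrative sketch rather than the study's implementation. It uses common textbook (Haralick-style) definitions, which may differ in detail from the equations used in the study. It also assumes: a parcel already quantised to integer grey levels 0..N−1; a co-occurrence distance of one pixel; a natural logarithm for entropy; and an "All" direction formed by pooling the four directional count matrices before normalising. An optional boolean mask restricts the pairs to pixels inside an irregular parcel. Dictionary keys such as `contrast_45` are descriptive names chosen here, not the `h_*` names from the table.

```python
import math

# (d_row, d_col) offsets at distance 1. Assumption: rows grow downwards,
# so 45 degrees points up and to the right.
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}


def glcm_counts(img, levels, d_row, d_col, mask=None):
    """Symmetric co-occurrence counts for one offset; pairs leaving `mask` are skipped."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(img), len(img[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + d_row, c + d_col
            if not (0 <= r2 < rows and 0 <= c2 < cols):
                continue
            if mask is not None and not (mask[r][c] and mask[r2][c2]):
                continue
            a, b = img[r][c], img[r2][c2]
            counts[a][b] += 1
            counts[b][a] += 1  # count each pair in both orders -> symmetric GLCM
    return counts


def normalise(counts):
    """Divide by the total count so that the entries of P sum to 1."""
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def glcm_features(P):
    """Haralick-style statistics of a normalised symmetric GLCM P (N x N)."""
    n = len(P)
    cells = [(i, j, P[i][j]) for i in range(n) for j in range(n)]
    mean = sum(i * p for i, _, p in cells)  # mu_i == mu_j because P is symmetric
    var = sum(p * (i - mean) ** 2 for i, _, p in cells)
    cov = sum(p * (i - mean) * (j - mean) for i, j, p in cells)
    return {
        "homogeneity": sum(p / (1 + (i - j) ** 2) for i, j, p in cells),
        "dissimilarity": sum(p * abs(i - j) for i, j, p in cells),
        "contrast": sum(p * (i - j) ** 2 for i, j, p in cells),
        "entropy": -sum(p * math.log(p) for _, _, p in cells if p > 0),
        "asm": sum(p * p for _, _, p in cells),
        "mean": mean,
        "std": math.sqrt(var),
        "correlation": cov / var if var > 0 else 1.0,  # constant patch: set to 1
    }


def parcel_glcm_features(img, levels, mask=None):
    """40 features: 8 statistics x (0, 45, 90, 135 degrees, All)."""
    feats = {}
    pooled = [[0] * levels for _ in range(levels)]
    for angle, (dr, dc) in OFFSETS.items():
        counts = glcm_counts(img, levels, dr, dc, mask)
        for name, value in glcm_features(normalise(counts)).items():
            feats[f"{name}_{angle}"] = value
        pooled = [[x + y for x, y in zip(pr, cr)] for pr, cr in zip(pooled, counts)]
    # Assumption: "All" pools the four directional counts before normalising.
    for name, value in glcm_features(normalise(pooled)).items():
        feats[f"{name}_All"] = value
    return feats
```

On the classic 4 × 4 test image `[[0,0,1,1],[0,0,1,1],[0,2,2,2],[2,2,3,3]]` with four grey levels, the 0° symmetric count matrix is `[[4,2,1,0],[2,4,0,0],[1,0,6,1],[0,0,1,2]]`, so the 0° contrast is 14/24.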

| Wavelet Feature | Equation | Plane | Name |
|---|---|---|---|
| Standard deviation | | 1 | w_s_1 |
| | | 2 | w_s_2 |
| | | 3 | w_s_3 |
| | | 4 | w_s_4 |
| Maximum | | 1 | w_mx_1 |
| | | 2 | w_mx_2 |
| | | 3 | w_mx_3 |
| | | 4 | w_mx_4 |
| Minimum | | 1 | w_mn_1 |
| | | 2 | w_mn_2 |
| | | 3 | w_mn_3 |
| | | 4 | w_mn_4 |
| Range | | 1 | w_r_1 |
| | | 2 | w_r_2 |
| | | 3 | w_r_3 |
| | | 4 | w_r_4 |

Where $L$ is the number of pixels of the parcel and $x_i$ is the value of each pixel of the parcel.
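Four statistics over four wavelet planes give the 16 wavelet features reported in the results table. The table does not state which decomposition produces the planes. As a hedged illustration only, the sketch below assumes an undecimated à trous (additive) decomposition with a B3-spline kernel and clamped borders, in which the image equals the sum of the planes plus a smooth residual. The four statistics are then computed over the parcel pixels selected by an optional mask. Two further choices are assumptions of this sketch: the standard deviation divides by $L$, and the range is stored under the key `w_r_j`.

```python
import statistics

# B3-spline kernel commonly used with the a trous algorithm (assumption).
B3 = [1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16]


def _smooth_1d(line, step):
    """Convolve one row or column with B3 dilated by `step`, clamping at the borders."""
    n = len(line)
    out = []
    for k in range(n):
        acc = 0.0
        for t, w in enumerate(B3):
            idx = min(max(k + (t - 2) * step, 0), n - 1)
            acc += w * line[idx]
        out.append(acc)
    return out


def _smooth_2d(img, step):
    """Separable smoothing: along rows first, then along columns."""
    rows = [_smooth_1d(row, step) for row in img]
    cols = [_smooth_1d(list(col), step) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]


def a_trous_planes(img, n_planes=4):
    """Undecimated additive decomposition: img == sum(planes) + residual."""
    current = [[float(v) for v in row] for row in img]
    planes = []
    for j in range(n_planes):
        smooth = _smooth_2d(current, 2 ** j)  # hole spacing grows as 1, 2, 4, 8 pixels
        planes.append([[a - b for a, b in zip(ra, rb)]
                       for ra, rb in zip(current, smooth)])
        current = smooth
    return planes, current


def plane_features(planes, mask=None):
    """Standard deviation, maximum, minimum and range of each plane over the parcel."""
    feats = {}
    for j, plane in enumerate(planes, start=1):
        values = [v for r, row in enumerate(plane) for c, v in enumerate(row)
                  if mask is None or mask[r][c]]
        feats[f"w_s_{j}"] = statistics.pstdev(values)  # divides by L (assumption)
        feats[f"w_mx_{j}"] = max(values)
        feats[f"w_mn_{j}"] = min(values)
        feats[f"w_r_{j}"] = max(values) - min(values)
    return feats
```

Because each plane is the difference between two successive smoothings, a perfectly uniform parcel yields planes that are identically zero. Periodic patterns such as tree rows, by contrast, concentrate energy in the plane whose scale matches the row spacing.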

| | Classified as Uprooted | Classified as Orchard |
|---|---|---|
| GT Uprooted | True Positive (TP) | False Negative (FN) |
| GT Orchard | False Positive (FP) | True Negative (TN) |

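| Accuracy | Precision | True Positive Rate | True Negative Rate |
|---|---|---|---|
| $\frac{TP + TN}{TP + TN + FP + FN}$ | $\frac{TP}{TP + FP}$ | $\frac{TP}{TP + FN}$ | $\frac{TN}{TN + FP}$ |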
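With uprooted parcels as the positive class, as in the confusion matrix above, the four indicators follow directly from the counts. Below is a minimal sketch using the standard definitions of accuracy, precision, true positive rate and true negative rate. It assumes per-parcel labels coded "U" and "O" as in the abbreviations list; the `Confusion`, `metrics` and `confusion_from_labels` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Counts for the binary uprooted (positive) vs orchard (negative) problem."""
    tp: int  # ground truth uprooted, classified as uprooted
    fn: int  # ground truth uprooted, classified as orchard
    fp: int  # ground truth orchard, classified as uprooted
    tn: int  # ground truth orchard, classified as orchard


def confusion_from_labels(truth, predicted, positive="U"):
    """Tally per-parcel ground-truth and predicted labels into a Confusion."""
    if len(truth) != len(predicted):
        raise ValueError("truth and predicted must have the same length")
    tp = fn = fp = tn = 0
    for t, p in zip(truth, predicted):
        if t == positive:
            if p == positive:
                tp += 1
            else:
                fn += 1
        elif p == positive:
            fp += 1
        else:
            tn += 1
    return Confusion(tp, fn, fp, tn)


def metrics(c: Confusion) -> dict:
    """Accuracy (A), precision (P), true positive rate and true negative rate."""
    total = c.tp + c.tn + c.fp + c.fn
    return {
        "A": (c.tp + c.tn) / total,
        "P": c.tp / (c.tp + c.fp),
        "TPR": c.tp / (c.tp + c.fn),
        "TNR": c.tn / (c.tn + c.fp),
    }
```

For made-up counts, `metrics(Confusion(tp=8, fn=2, fp=1, tn=9))` gives A = 0.85, P = 8/9, TPR = 0.8 and TNR = 0.9. When uprooted parcels are a small minority, even a low false positive rate among the many orchard parcels can pull precision well below TNR.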

| Feature Set | Texture | Number of Features | Name | A | P | TPR | TNR |
|---|---|---|---|---|---|---|---|
| All features | GLCM | 40 | Tex_Total_H | 0.876 | 0.504 | 0.741 | 0.896 |
| | Wavelet | 16 | Tex_Total_W | 0.838 | 0.416 | 0.722 | 0.855 |
| | GLCM+Wavelet | 56 | Tex_Total_H+W | 0.871 | 0.489 | 0.672 | 0.900 |
| Selected features | GLCM | 3 | Tex_Sel_H | 0.866 | 0.477 | 0.757 | 0.881 |
| | Wavelet | 2 | Tex_Sel_W | 0.789 | 0.355 | 0.849 | 0.780 |
| | GLCM+Wavelet | 5 | Tex_Sel_H+W | 0.881 | 0.516 | 0.803 | 0.892 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ciriza, R.; Sola, I.; Albizua, L.; Álvarez-Mozos, J.; González-Audícana, M. Automatic Detection of Uprooted Orchards Based on Orthophoto Texture Analysis. Remote Sens. 2017, 9, 492. https://doi.org/10.3390/rs9050492

Chicago/Turabian style: Ciriza, Raquel, Ion Sola, Lourdes Albizua, Jesús Álvarez-Mozos, and María González-Audícana. 2017. "Automatic Detection of Uprooted Orchards Based on Orthophoto Texture Analysis" Remote Sensing 9, no. 5: 492. https://doi.org/10.3390/rs9050492
