Spatial and Spectral Hybrid Image Classification for Rice Lodging Assessment through UAV Imagery

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Site

2.2. Image-Based Modeling

2.3. Texture Analysis

2.4. Single Feature Probability

2.5. Image Classification

2.5.1. Maximum Likelihood Classification

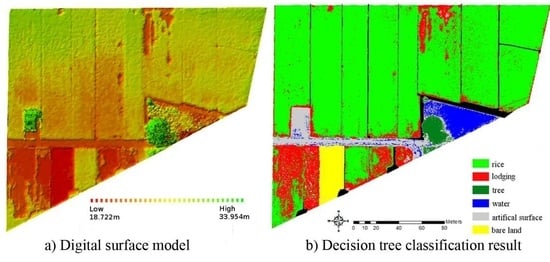

2.5.2. Decision Tree Classification

3. Results

3.1. Image-Based Modeling

3.2. Texture Analysis

3.3. Single Feature Probability

3.4. Image Classification

3.5. Rice Lodging Interpretation

4. Discussion

5. Conclusions

- The results reveal that UAVs are viable platforms for agricultural land classification because of their ability to be deployed quickly and to rapidly generate comprehensive high-resolution images. The resulting high-resolution UAV images can serve as scientific evidence of the impacts of agricultural disasters. With appropriate image classification techniques, UAV images have great potential to improve the current manual rice lodging assessment techniques.

- Based on the SFP results, the contribution of DSM and texture information to the classification accuracy can be estimated for each land cover type. Texture information could significantly improve the classification accuracy of rice and water. The DSM was more suitable for lodging and tree classification. The simultaneous addition of DSM and texture information exerted positive effects on the classification accuracy of artificial surface and bare land.

- For accuracy assessment, DTC using SFP values as the decision threshold values outperformed MLC, with a classification OA of 96.17% and Kappa value of 0.94.

- The inclusion of DSM information alone, texture information alone, and both DSM and texture information had varied positive effects on the classification accuracy of MLC (from 86.24% to 93.84%, 88.14%, and 90.76%, respectively).

- This study incorporated seven rice paddies in the study site that were reported for agricultural disaster relief compensation. Through the proposed classification technique, paddies E, F, and G had a >20% lodging rate (67.09%, 75.23%, and 50.50%, respectively); therefore, these paddies were eligible for disaster relief compensation. The proposed classification technique can effectively interpret lodging and provide the government with quantitative and objective data to be used as a reference for compensation. In addition, these data may serve as a valuable reference for various applications such as agricultural mapping/monitoring, agricultural insurance, yield estimation, and biomass estimation.

- To fulfill realistic conditions and accelerate the disaster relief compensation process, two additional image processing steps, extracting paddy field boundaries and thresholding a minimum lodging area of 1 m², were executed to identify lodged rice within cadastral units. These steps minimized the commission error associated with rice/lodging identification and reduced scattering noise in paddy fields.

- In addition to rice lodging interpretation, future research can further examine the disaster-related loss of rice according to its growth stages (e.g., yellow leaves caused by cold damage and loss or mildew of rice straws caused by heavy rain or the Asian monsoon rainy season). Moreover, disaster assessment of other crops can be incorporated into future research.
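The minimum-area step mentioned in the conclusions can be illustrated with a small sketch. This is not the authors' implementation: the 4-connected patch definition, the function name `remove_small_patches`, and the 0.25 m² pixel footprint used in the toy example are all assumptions made for illustration. Only the 1 m² threshold comes from the text.

```python
from collections import deque


def remove_small_patches(mask, pixel_area_m2, min_area_m2=1.0):
    """Return a copy of a binary lodging mask with small patches cleared.

    mask is a list of rows of 0/1 values. A patch is a 4-connected group of
    1-pixels (an assumption; the paper does not state the connectivity).
    """
    rows, cols = len(mask), len(mask[0])
    out = [row[:] for row in mask]
    seen = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill collects one connected patch.
            patch, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                patch.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Clear the patch if its ground area is below the threshold.
            if len(patch) * pixel_area_m2 < min_area_m2:
                for y, x in patch:
                    out[y][x] = 0
    return out


# Toy mask: one 4-pixel patch and two isolated pixels.
toy_mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0],
]
# Hypothetical pixel footprint of 0.25 m^2, so 4 pixels cover exactly 1 m^2.
cleaned = remove_small_patches(toy_mask, pixel_area_m2=0.25)
```

With these assumed values the 4-pixel patch is kept and the two isolated pixels are removed, which is the kind of scattered noise the thresholding step is meant to suppress.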

Acknowledgments

Author Contributions

Conflicts of Interest

| Control Point | E (m) | N (m) | Z (m) |
|---|---|---|---|
| 1 | 0.51 | 0.22 | 0.42 |
| 2 | 0.20 | 0.11 | 0.45 |
| 3 | 0.30 | 0.11 | 0.83 |
| 4 | 0.21 | 0.22 | 0.28 |
| 5 | 0.00 | 0.22 | 0.65 |
| 6 | 0.10 | 0.22 | 0.03 |
| 7 | 0.20 | 0.33 | 0.10 |
| 8 | 0.72 | 0.55 | 0.05 |
| 9 | 0.41 | 0.22 | 0.28 |
| Average error | 0.29 | 0.24 | 0.34 |
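As a quick consistency check, the "Average error" row can be reproduced from the nine control-point rows above. The sketch below assumes that the row is a plain arithmetic mean of each column; the variable and function names are illustrative.

```python
# Control-point errors (E, N, Z) in metres, copied from the table above.
control_point_errors = [
    (0.51, 0.22, 0.42),
    (0.20, 0.11, 0.45),
    (0.30, 0.11, 0.83),
    (0.21, 0.22, 0.28),
    (0.00, 0.22, 0.65),
    (0.10, 0.22, 0.03),
    (0.20, 0.33, 0.10),
    (0.72, 0.55, 0.05),
    (0.41, 0.22, 0.28),
]


def column_means(rows):
    """Arithmetic mean of each column, rounded to two decimals."""
    n = len(rows)
    return tuple(round(sum(row[i] for row in rows) / n, 2) for i in range(3))


mean_e, mean_n, mean_z = column_means(control_point_errors)
print(mean_e, mean_n, mean_z)
```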

| Class | RGB + Texture: ASM | RGB + Texture: Entropy | RGB + Texture: Contrast | RGB + Texture + DSM: ASM | RGB + Texture + DSM: Entropy | RGB + Texture + DSM: Contrast |
|---|---|---|---|---|---|---|
| rice | 0.930 | 0.903 | 0.924 | 0.900 | 0.900 | 0.910 |
| lodging | 0.628 | 0.628 | 0.636 | 0.687 | 0.687 | 0.673 |
| tree | 0.292 | 0.292 | 0.612 | 0.371 | 0.371 | 0.631 |
| water | 0.903 | 0.821 | 0.827 | 0.873 | 0.818 | 0.813 |
| artificial surface | 0.822 | 0.810 | 0.816 | 0.833 | 0.824 | 0.823 |
| bare land | 0.862 | 0.855 | 0.862 | 0.901 | 0.883 | 0.894 |

| Class | RGB | RGB + Texture | RGB + DSM | RGB + Texture + DSM |
|---|---|---|---|---|
| rice | 0.462 | 0.930 | 0.899 | 0.900 |
| lodging | 0.619 | 0.628 | 0.698 | 0.687 |
| tree | 0.391 | 0.292 | 0.672 | 0.371 |
| water | 0.821 | 0.903 | 0.802 | 0.873 |
| artificial surface | 0.063 | 0.822 | 0.811 | 0.833 |
| bare land | 0.432 | 0.862 | 0.879 | 0.901 |
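The statements in the conclusions about which information helps which land cover type can be read directly off the SFP table above. The short sketch below picks, for each class, the band combination with the highest SFP value; the dictionary layout and variable names are illustrative and not part of the original study.

```python
# SFP values per class and band combination, copied from the table above.
sfp = {
    "rice": {"RGB": 0.462, "RGB + Texture": 0.930,
             "RGB + DSM": 0.899, "RGB + Texture + DSM": 0.900},
    "lodging": {"RGB": 0.619, "RGB + Texture": 0.628,
                "RGB + DSM": 0.698, "RGB + Texture + DSM": 0.687},
    "tree": {"RGB": 0.391, "RGB + Texture": 0.292,
             "RGB + DSM": 0.672, "RGB + Texture + DSM": 0.371},
    "water": {"RGB": 0.821, "RGB + Texture": 0.903,
              "RGB + DSM": 0.802, "RGB + Texture + DSM": 0.873},
    "artificial surface": {"RGB": 0.063, "RGB + Texture": 0.822,
                           "RGB + DSM": 0.811, "RGB + Texture + DSM": 0.833},
    "bare land": {"RGB": 0.432, "RGB + Texture": 0.862,
                  "RGB + DSM": 0.879, "RGB + Texture + DSM": 0.901},
}

# Band combination with the highest SFP for each class.
best_bands = {cls: max(scores, key=scores.get) for cls, scores in sfp.items()}

for cls, bands in best_bands.items():
    gain = sfp[cls][bands] - sfp[cls]["RGB"]
    print(f"{cls}: best = {bands} (gain over RGB = {gain:.3f})")
```

The result agrees with the summary in the conclusions: texture gives the highest values for rice and water, the DSM for lodging and tree, and the combination of both for artificial surface and bare land.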

| Classification | Band | Overall Accuracy | Kappa |
|---|---|---|---|
| MLC | RGB | 86.24% | 0.799 |
| MLC | RGB + DSM | 93.84% | 0.906 |
| MLC | RGB + Texture | 88.14% | 0.825 |
| MLC | RGB + DSM + Texture | 90.76% | 0.861 |
| DTC | RGB + DSM + Texture | 96.17% | 0.941 |

| DTC classification (rows) vs. ground truth (columns) | Rice | Lodging | Tree | Water | Artificial Surface | Bare Land | Total | User’s Accuracy (%) |
|---|---|---|---|---|---|---|---|---|
| rice | 44,314 | 269 | 0 | 0 | 0 | 0 | 44,583 | 99.4 |
| lodging | 625 | 12,489 | 86 | 198 | 1723 | 4 | 15,125 | 82.6 |
| tree | 0 | 0 | 5181 | 0 | 0 | 0 | 5181 | 100.0 |
| water | 0 | 0 | 0 | 7528 | 76 | 0 | 7604 | 99.0 |
| artificial surface | 0 | 0 | 0 | 120 | 5425 | 0 | 5545 | 97.8 |
| bare land | 0 | 0 | 0 | 0 | 0 | 2984 | 2984 | 100.0 |
| total | 44,939 | 12,758 | 5267 | 7846 | 7224 | 2988 | 81,022 | |
| Producer’s accuracy (%) | 98.6 | 97.9 | 98.4 | 95.9 | 75.1 | 99.9 | | |
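The overall accuracy (OA), Kappa, and per-class accuracies reported for DTC follow from the confusion matrix above using the standard definitions. The sketch below recomputes them; the matrix orientation (rows are the DTC result, columns are ground truth) is taken from the table, and the variable names are illustrative.

```python
# Confusion matrix from the table above.
# Rows: DTC classification; columns: ground truth.
# Order: rice, lodging, tree, water, artificial surface, bare land.
matrix = [
    [44314, 269, 0, 0, 0, 0],
    [625, 12489, 86, 198, 1723, 4],
    [0, 0, 5181, 0, 0, 0],
    [0, 0, 0, 7528, 76, 0],
    [0, 0, 0, 120, 5425, 0],
    [0, 0, 0, 0, 0, 2984],
]

k = len(matrix)
row_totals = [sum(row) for row in matrix]
col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
total = sum(row_totals)
diagonal = [matrix[i][i] for i in range(k)]

# Overall accuracy: share of pixels on the diagonal.
overall_accuracy = sum(diagonal) / total

# Cohen's kappa: agreement corrected for chance agreement.
expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
kappa = (overall_accuracy - expected) / (1 - expected)

# User's accuracy uses row totals; producer's accuracy uses column totals.
users_accuracy = [d / r for d, r in zip(diagonal, row_totals)]
producers_accuracy = [d / c for d, c in zip(diagonal, col_totals)]

print(f"OA = {overall_accuracy:.2%}, Kappa = {kappa:.3f}")
```

The lowest values in the table, the user's accuracy for lodging and the producer's accuracy for artificial surface, both trace back to the 1723 artificial-surface pixels classified as lodging.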

| Rice Paddy | A | B | C | D | E | F | G |
|---|---|---|---|---|---|---|---|
| Lodging (pixels) | 3255 | 6136 | 23,913 | 121,628 | 549,495 | 162,627 | 150,942 |
| Paddy (pixels) | 1,412,833 | 1,433,273 | 1,380,834 | 1,374,845 | 819,077 | 216,185 | 298,923 |
| Lodging proportion (%) | 0.23 | 0.43 | 1.73 | 8.85 | 67.09 | 75.23 | 50.50 |
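The lodging proportions above are the ratio of lodged pixels to paddy pixels, and the conclusions apply a >20% lodging rate as the eligibility criterion for compensation. A minimal sketch of that arithmetic, with illustrative variable names:

```python
# (lodging pixels, paddy pixels) per rice paddy, copied from the table above.
paddies = {
    "A": (3255, 1412833),
    "B": (6136, 1433273),
    "C": (23913, 1380834),
    "D": (121628, 1374845),
    "E": (549495, 819077),
    "F": (162627, 216185),
    "G": (150942, 298923),
}

# Lodging rate above which a paddy qualifies, as stated in the conclusions.
THRESHOLD_PERCENT = 20.0

lodging_rate = {
    name: 100.0 * lodged / paddy for name, (lodged, paddy) in paddies.items()
}
eligible = [name for name, rate in lodging_rate.items()
            if rate > THRESHOLD_PERCENT]

for name, rate in lodging_rate.items():
    status = "eligible" if name in eligible else "not eligible"
    print(f"Paddy {name}: {rate:.2f}% lodging ({status})")
```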

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yang, M.-D.; Huang, K.-S.; Kuo, Y.-H.; Tsai, H.P.; Lin, L.-M. Spatial and Spectral Hybrid Image Classification for Rice Lodging Assessment through UAV Imagery. Remote Sens. 2017, 9, 583. https://doi.org/10.3390/rs9060583
