Deep Learning Based HPV Status Prediction for Oropharyngeal Cancer Patients

Simple Summary

Abstract

1. Introduction

2. Material and Methods

2.1. Data

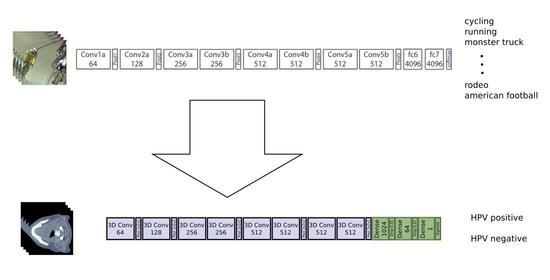

2.2. Deep Neural Network

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| HPV | human papillomavirus |
| OPC | oropharyngeal cancer |
| CT | computed tomography |
| AUC | area under the receiver operating characteristic curve |
| CNN | convolutional neural network |
| FDG-PET | fluorodeoxyglucose positron emission tomography |
| GTV | gross tumor volume |
| IHC | immunohistochemistry |
| DNA | deoxyribonucleic acid |
| ROC | receiver operating characteristic |
| AJCC | American Joint Committee on Cancer |

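The AUC defined above has two equivalent readings: the area under the ROC curve traced by sweeping the decision threshold, and the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one, with ties counted as one half (the Mann-Whitney formulation). The minimal sketch below computes both on hypothetical toy scores, not data from this study, to show that they agree; the function names are illustrative only.

```python
"""Two equivalent ways to compute the AUC of a binary classifier."""
from itertools import product


def auc_pairwise(scores, labels):
    """Mann-Whitney form: P(score of a positive > score of a negative), ties count 1/2."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p, n in product(pos, neg))
    return wins / (len(pos) * len(neg))


def roc_points(scores, labels):
    """(FPR, TPR) points from sweeping the threshold down through every distinct score."""
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("ROC needs at least one positive and one negative case")
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Cases sharing a score cross the threshold together, giving a diagonal step.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc_trapezoid(points):
    """Area under a piecewise-linear ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2.0 for (x0, y0), (x1, y1) in zip(points, points[1:]))


if __name__ == "__main__":
    # Hypothetical toy data: 1 = HPV-positive, 0 = HPV-negative.
    scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2]
    labels = [1, 1, 0, 1, 0, 0, 1, 0]
    print(auc_pairwise(scores, labels), auc_trapezoid(roc_points(scores, labels)))
```

Both calls return 0.75 for the toy data. In practice a library routine such as scikit-learn's `roc_auc_score` would normally be used; the quadratic pairwise loop is only for illustration.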
Appendix A

| Clinical Variable | Training Set (OPC) | Training Set (HNSCC) | Validation Set (HN PET-CT) | Test Set (HN1) |
|---|---|---|---|---|
| Number of patients | 412 | 263 | 90 | 80 |
| HPV: pos/neg | 290/122 | 223/40 | 71/19 | 23/57 |
| Age |  |  |  |  |
| HPV pos | 58.81 (52.00–64.75) | 57.87 (52.00–64.00) | 62.32 (58.00–66.00) | 57.52 (52.00–62.50) |
| HPV neg | 64.82 (58.00–72.75) | 60.02 (54.50–67.25) | 59.11 (49.50–69.50) | 60.91 (56.00–66.00) |
| Sex: Female/Male |  |  |  |  |
| HPV pos | 47/243 | 32/191 | 14/56 | 5/18 |
| HPV neg | 34/88 | 15/25 | 4/15 | 12/45 |
| T-stage: T1/T2/T3/T4 |  |  |  |  |
| HPV pos | 46/93/94/57 | 60/93/41/29 | 10/37/15/9 | 4/8/9/8 |
| HPV neg | 9/35/43/35 | 6/12/12/10 | 3/4/8/4 | 9/16/9/23 |
| N-stage: N0/N1/N2/N3 |  |  |  |  |
| HPV pos | 33/22/215/20 | 19/30/170/4 | 11/10/47/3 | 6/2/15/0 |
| HPV neg | 36/16/62/8 | 5/2/31/2 | 2/1/13/3 | 14/10/31/2 |
| Tumor size [cm³] |  |  |  |  |
| HPV pos | 29.35 (10.52–37.78) | 11.78 (3.94–14.04) | 34.63 (14.91–41.77) | 23.00 (10.83–34.29) |
| HPV neg | 36.99 (15.72–45.35) | 23.57 (5.80–22.85) | 35.09 (17.32–47.82) | 40.19 (11.77–54.42) |
| Transversal voxel spacing [mm] | 0.97 (0.98–0.98) | 0.59 (0.49–0.51) | 1.06 (0.98–1.17) | 0.98 (0.98–0.98) |
| Longitudinal voxel spacing [mm] | 2.00 (2.00–2.00) | 1.53 (1.00–2.50) | 2.89 (3.00–3.27) | 2.99 (3.00–3.00) |
| Manufacturer |  |  |  |  |
| GE Med. Sys. | 272 | 238 | 45 | 0 |
| Toshiba | 138 | 3 | 0 | 0 |
| Philips | 2 | 12 | 45 | 0 |
| CMS Inc. | 0 | 0 | 0 | 43 |
| Siemens | 0 | 4 | 0 | 37 |
| Other | 0 | 6 | 0 | 0 |

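The clinical-characteristics table above implies that the share of HPV-positive patients differs sharply between the data splits. The sketch below pools the "HPV: pos/neg" counts per split and checks each cohort against the "Number of patients" row. It assumes, as the column layout suggests, that the training set comprises the OPC and HNSCC cohorts; the names `COHORTS`, `REPORTED_N`, `SPLITS` and `split_summary` are hypothetical and not taken from the study's code.

```python
"""Pool the per-cohort HPV counts from the clinical-characteristics table by data split."""

# (HPV-positive, HPV-negative) counts per cohort, copied from the "HPV: pos/neg" row.
COHORTS = {
    "OPC": (290, 122),
    "HNSCC": (223, 40),
    "HN PET-CT": (71, 19),
    "HN1": (23, 57),
}

# "Number of patients" row, used as a consistency check.
REPORTED_N = {"OPC": 412, "HNSCC": 263, "HN PET-CT": 90, "HN1": 80}

# Assumption: the training set spans the OPC and HNSCC cohorts.
SPLITS = {
    "training": ("OPC", "HNSCC"),
    "validation": ("HN PET-CT",),
    "test": ("HN1",),
}


def split_summary(cohorts, splits):
    """Return {split: (n_patients, n_pos, n_neg, hpv_positive_fraction)}."""
    summary = {}
    for split, names in splits.items():
        pos = sum(cohorts[name][0] for name in names)
        neg = sum(cohorts[name][1] for name in names)
        summary[split] = (pos + neg, pos, neg, pos / (pos + neg))
    return summary


if __name__ == "__main__":
    # Each cohort's pos + neg should equal its reported patient count.
    for name, (pos, neg) in COHORTS.items():
        assert pos + neg == REPORTED_N[name], name
    for split, (n, pos, neg, frac) in split_summary(COHORTS, SPLITS).items():
        print(f"{split:<10} n={n:<4} HPV+={pos:<4} HPV-={neg:<4} HPV+ fraction={frac:.2f}")
```

Under this split assignment, about 76% of the pooled training patients and 79% of the validation patients are HPV-positive, but only about 29% of the HN1 test patients. Because predictive values depend on this fraction, PPV and NPV measured on the test cohort are not directly comparable with values measured on the other cohorts.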
| Metric | 3D Video Pre-Trained | 3D from Scratch | 2D ImageNet Pre-Trained |
|---|---|---|---|
| AUC | 0.81 (0.02) | 0.64 (0.05) | 0.73 (0.02) |
| Sensitivity | 0.75 (0.06) | 0.67 (0.12) | 0.84 (0.07) |
| Specificity | 0.72 (0.09) | 0.49 (0.09) | 0.40 (0.13) |
| PPV | 0.53 (0.07) | 0.35 (0.03) | 0.37 (0.04) |
| NPV | 0.88 (0.02) | 0.79 (0.05) | 0.87 (0.03) |
| F1 score | 0.62 (0.02) | 0.45 (0.05) | 0.51 (0.03) |

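Sensitivity and specificity do not depend on class balance, whereas PPV and NPV do. The sketch below recomputes PPV and NPV from the mean sensitivity and specificity in the results table above via Bayes' rule, and recomputes the last row as the harmonic mean of PPV and sensitivity (the F1 score). It rests on assumptions the text does not confirm: that the metrics were measured on the HN1 test cohort (23 HPV-positive of 80 patients) with HPV-positive as the positive class, and that the last row is the F1 score. Because the table reports a spread in parentheses, the entries appear to be aggregates, so only approximate agreement should be expected. All names in the code are hypothetical.

```python
"""Check the reported PPV, NPV and score against sensitivity, specificity and prevalence."""

# Assumption: metrics come from the HN1 test cohort, HPV-positive = positive class.
PREVALENCE = 23 / 80

# Mean values from the results table: (sensitivity, specificity, PPV, NPV, score).
REPORTED = {
    "3D Video Pre-Trained": (0.75, 0.72, 0.53, 0.88, 0.62),
    "3D from Scratch": (0.67, 0.49, 0.35, 0.79, 0.45),
    "2D ImageNet Pre-Trained": (0.84, 0.40, 0.37, 0.87, 0.51),
}


def ppv(sens, spec, prev):
    """Positive predictive value from Bayes' rule: P(HPV+ | predicted +)."""
    true_pos = sens * prev
    false_pos = (1.0 - spec) * (1.0 - prev)
    return true_pos / (true_pos + false_pos)


def npv(sens, spec, prev):
    """Negative predictive value from Bayes' rule: P(HPV- | predicted -)."""
    true_neg = spec * (1.0 - prev)
    false_neg = (1.0 - sens) * prev
    return true_neg / (true_neg + false_neg)


def f1(precision, recall):
    """Harmonic mean of precision (PPV) and recall (sensitivity)."""
    return 2.0 * precision * recall / (precision + recall)


def consistency_table(reported, prev):
    """Return {model: ((ppv_calc, ppv_rep), (npv_calc, npv_rep), (f1_calc, score_rep))}."""
    rows = {}
    for model, (sens, spec, ppv_rep, npv_rep, score_rep) in reported.items():
        rows[model] = (
            (ppv(sens, spec, prev), ppv_rep),
            (npv(sens, spec, prev), npv_rep),
            (f1(ppv_rep, sens), score_rep),
        )
    return rows


if __name__ == "__main__":
    names = ("PPV", "NPV", "F1")
    for model, pairs in consistency_table(REPORTED, PREVALENCE).items():
        parts = [f"{name} {calc:.2f} vs {rep:.2f}" for name, (calc, rep) in zip(names, pairs)]
        print(f"{model}: " + ", ".join(parts))
```

Under these assumptions every recomputed value falls within 0.02 of the reported mean. This is consistent with, though not proof of, the assumed cohort, positive class and F1 reading. It also shows why PPV stays low: with under 30% HPV-positive patients, even a specificity of 0.72 yields almost as many false positives as true positives.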
Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lang, D.M.; Peeken, J.C.; Combs, S.E.; Wilkens, J.J.; Bartzsch, S. Deep Learning Based HPV Status Prediction for Oropharyngeal Cancer Patients. Cancers 2021, 13, 786. https://doi.org/10.3390/cancers13040786
