The Case of a Multiplication Skills Game: Teachers’ Viewpoint on MG’s Dashboard and OSLM Features

Abstract

1. Introduction

“to take in information from many sources and make decisions quickly; to deduce a game’s rules from playing rather than by being told; to create strategies for overcoming obstacles; to understand complex systems through experimentation”

2. Digital Games and DGBL

3. Teachers’ Attitude toward Digital Games

- Teachers’ perceptions of the value of incorporating digital games in the classroom. More specifically, according to teachers’ beliefs, the main reasons to use digital games in the classroom are the following:

  - To offer students a safe learning environment where the consequences of failure are milder [49],

  - To empower students’ activeness [48],

  - To offer students feedback on their learning skills and actions [48],

  - To visualize students’ progress for them to watch [48],

  - To propose additional learning material or a reward [37],

  - To entertain students [37],

  - To keep pace with developments, as digital games are considered ‘the future’ (teachers believe that the adoption rate of game-based learning will continue to accelerate in the immediate future [37]).

- Teachers’ acceptance that games play an important role in their teaching practice. Acceptance of digital games by teachers depends on many factors:

  - Teachers who play games in their spare time are interested in using digital games in their teaching [45,50,51,52], whereas teachers’ ability to deal effectively with new technologies does not necessarily imply that they support the use of digital games in the classroom [37,45],

  - The usefulness and learning opportunities offered by the game [37],

  - Teachers’ own experience, which has convinced them of the positive consequences of technology [48].

- Teachers’ perceptions of barriers to using games in the classroom. The main factors that discourage teachers from using digital games may be the following:

  - Perceived negative effects of gaming, e.g., addiction, emotional suppression, repetitive stress injuries, relationship issues, social disconnection [32],

  - Unprepared students [32], who delay teaching: without adequate preparation at home, students cannot cope with the subject and its concepts, so either the class loses the opportunity to play games or the teacher must spend teaching time repeating the same material,

  - Limited budgets [32] that lead to poorly equipped school computer labs, etc.,

  - Classroom management issues [41], which vary but leave the teacher little opportunity to deal with matters such as the adoption of digital games,

  - Teachers’ own low efficacy in using the technologies, combined with anxiety about failure scenarios [52].

4. Adaptiveness and Open Learner Modeling

5. OLM Visualization Options

- Bar Charts and Histograms usually represent learner performance, competency level, and activity level (posts to forums, access frequency, content view durations, etc.).

- Radar Plots [71] support easy comparisons and identification of outliers. A radar plot can be used to represent multiple learners and compare them on various dimensions (e.g., performance, activity level, topics covered), or it can also depict a single user.

- Scatterplots [72] can represent either a single learner (performance, activity level, topics covered, etc.) or compare multiple learners using different colors or point shapes.

- Tables are used to represent either a single learner (performance, activity level, topics covered, etc.) or multiple learners (each one in a different row or column).

- Network Diagrams [70,71,76] are used to represent learner associations (learners are nodes and associations are edges) or content associations along with learner performance (topics are nodes and the way nodes are coded in color/size/shape denotes learner performance on each topic). When clicking on a node of the network, it may be expanded or collapsed to present more or less information [77].

- Skill Meters offer a simple overview of the learner model contents and are typically found as a group of several skill meters, each assigned to a topic or concept. In addition, separate skill meters can be used for sub-topics, allowing a simpler structure of the model presentation [79]. Most skill meters [71,76,80] display the learner’s level of knowledge, understanding, or skill compared to a subset of expert knowledge [79,81] as the proportion of the meter that is filled [76,82].

- Sunbursts are multi-level pie charts, which visualize hierarchical data, depicted by concentric circles [83]. The circle in the center represents the root node, with the hierarchy moving outward from the center. A segment of the inner circle bears a hierarchical relationship to those segments of the outer circle that lie within the angular sweep of the parent segment [84]. In OLMs, sunburst charts can present the complete learner model in concentric sectors. In some implementations, sunbursts are interactive, and the user can get more information describing the sources that support the current values, or the learner can access other sections related to a specific concept [85].

- Concept Maps depict ideas and information (concepts) as boxes or circles that are connected with labeled arrows in a downward-branching hierarchical structure [86,87]. Concept maps [78] can be pre-structured to reflect the domain, with the nodes indicating the strength of knowledge or understanding of a concept [88].

- Bullet Charts can be interactive and provide details concerning the way individual scores are calculated, and learners can view the tasks that contribute to a specific learning outcome and identify tasks that can improve their level. Bullet charts are typically unstructured OLM visualizations.

- Treemaps depict hierarchies by dividing the rectangular screen space into regions, which are subdivided again for each level in the hierarchy [91]. Cell size indicates the strength of each topic, and typically a learner can click on a cell to view the next layer in the hierarchical structure [77].

- Gauges depict learner skills on specific topics and can also integrate a scalar set of values representing discrete ranges, as in [64]. Each gauge represents a specific learner.

- Grids use color to indicate level of understanding [92] on a specific topic. They may display a single learner or multiple learners as in MasteryGrids [61] that use cells of different color saturation to show knowledge progress of the target student, reference group, and other students over multiple kinds of educational content organized by topics. Grids are unstructured OLM visualizations.

- Burn Down Charts graphically represent the remaining work versus time. In OLMs, learners can use burn down charts to track their own progress, with the chart showing the target and projected completion time and the percentage of assignments submitted and completed [66]. Thus, they are self-assessment tools that keep learners motivated and focused on their objectives.

- Task lists and focus lists (tasks to be focused on to stay on track) support student engagement with frequent formative feedback. The task list uses different colors in order to keep learners informed about the status of their task [66].
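As a rough illustration of the skill meter idea described in the list above (the filled proportion of the meter reflects the learner’s level relative to expert knowledge), the following Python sketch renders text-based meters. The function name `skill_meter`, the topic labels, and the sample values are hypothetical; they are not taken from MG or from any of the cited systems.

```python
def skill_meter(label: str, learner_level: float,
                expert_level: float = 1.0, width: int = 20) -> str:
    """Render one text skill meter; the filled share is learner_level / expert_level."""
    if expert_level <= 0 or width <= 0:
        raise ValueError("expert_level and width must be positive")
    # Clamp the ratio so an estimate outside the expected range cannot
    # overflow or underflow the meter.
    proportion = max(0.0, min(1.0, learner_level / expert_level))
    filled = round(proportion * width)
    bar = "#" * filled + "." * (width - filled)
    return f"{label:<12} [{bar}] {proportion:.0%}"


if __name__ == "__main__":
    # Hypothetical learner model: one estimated skill level per topic.
    learner_model = {"Table of 2": 0.9, "Table of 7": 0.5, "Table of 9": 0.3}
    for topic, level in learner_model.items():
        print(skill_meter(topic, level))
```

Grouping one meter per topic, as in the loop above, mirrors the typical arrangement of several skill meters shown side by side; a graphical OLM would draw the same proportion as a filled bar rather than as characters.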

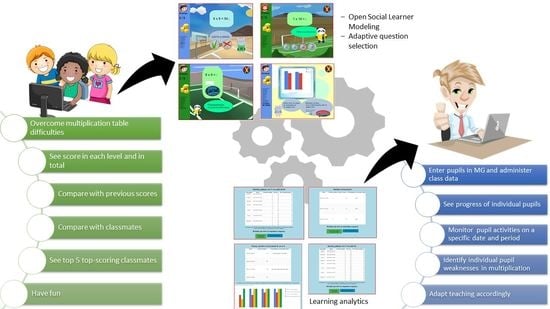

6. Multiplication Game Description

6.1. Brief Description of MG

6.2. Description of MG’s Teacher Dashboard

7. Survey Methodology and Analysis

- (a) To assess teachers’ perceptions of MG’s usefulness (MGU) in terms of their perceptions of/attitudes toward using digital games (ADG)

- (b) To assess teachers’ acceptance of MG (TA) and MG’s usefulness (MGU) according to gender, age, and teaching experience

- (c) To assess teachers’ acceptance of MG (TA) in terms of barriers in using digital games (BD)

- (d) To examine teachers’ acceptance of using MG (TA) in the classroom in terms of teachers’ attitude toward digital games (ADG)

- (e) To investigate teachers’ beliefs about the usefulness of MG in general (MGU)

- (f) To investigate teachers’ opinion about MG’s interface (I)

- (g) To investigate teachers’ opinion about the OSLM characteristics of MG.

7.1. Method

7.1.1. Hypothesis Testing

7.1.2. Research Participants

7.1.3. Design of the Instrument

7.1.4. Methodology

7.1.5. Data Analysis

7.1.6. Results of Hypothesis Testing

8. Discussion

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Prensky, M. Digital game-based learning. Comput. Entertain. 2003, 1, 21.

- Hwang, G.-J.; Sung, H.-Y.; Hung, C.-M.; Huang, I.; Tsai, C.-C. Development of a personalized educational computer game based on students’ learning styles. Educ. Technol. Res. Dev. 2012, 60, 623–638.

- Sung, H.-Y.; Hwang, G.-J. A collaborative game-based learning approach to improving students’ learning performance in science courses. Comput. Educ. 2013, 63, 43–51.

- Papastergiou, M. Digital Game-Based Learning in high school Computer Science education: Impact on educational effectiveness and student motivation. Comput. Educ. 2009, 52, 1–12.

- Read, J.; Macfarlane, S.; Casey, C. Endurability, Engagement and Expectations: Measuring Children’s Fun. Interact. Des. Child. 2002, 2, 1–23.

- Spires, H.A. Digital Game-Based Learning. J. Adolesc. Adult Lit. 2015, 59, 125–130.

- Lampropoulos, G.; Anastasiadis, T.; Siakas, K. Digital Game-based Learning in Education: Significance of Motivating, Engaging and Interactive Learning Environments. In Proceedings of the 24th International Conference on Software Process Improvement-Research into Education and Training (INSPIRE 2019), Southampton, UK, 15–16 April 2019; pp. 117–127.

- Qian, M.; Clark, K.R. Game-based Learning and 21st century skills: A review of recent research. Comput. Hum. Behav. 2016, 63, 50–58.

- Lin, C.-H.; Chen, S.-K.; Chang, S.-M.; Lin, S.S. Cross-lagged relationships between problematic Internet use and lifestyle changes. Comput. Hum. Behav. 2013, 29, 2615–2621.

- Kuss, D.J.; Griffiths, M.D. Online gaming addiction in children and adolescents: A review of empirical research. J. Behav. Addict. 2012, 1, 3–22.

- Chang, S.-M.; Hsieh, G.M.; Lin, S.S. The mediation effects of gaming motives between game involvement and problematic Internet use: Escapism, advancement and socializing. Comput. Educ. 2018, 122, 43–53.

- All, A.; Castellar, E.P.N.; Van Looy, J. Assessing the effectiveness of digital game-based learning: Best practices. Comput. Educ. 2016, 92–93, 90–103.

- Iacovides, I.; Aczel, J.; Scanlon, E.; Woods, W. Investigating the relationships between informal learning and player involvement in digital games. Learn. Media Technol. 2011, 37, 321–327.

- Panoutsopoulos, H.; Sampson, D.G. A Study on Exploiting Commercial Digital Games into School Context. Educ. Technol. Soc. 2012, 15, 15–27.

- Schaaf, R. Does digital game-based learning improve student time-on-task behavior and engagement in comparison to alternative instructional strategies? Can. J. Action Res. 2012, 13, 50–64.

- Busch, C.; Conrad, F.; Steinicke, M. Management Workshops and Tertiary Education. Electron. J. e-Learn. 2013, 11, 3–15.

- Li, Q. Understanding enactivism: A study of affordances and constraints of engaging practicing teachers as digital game designers. Educ. Technol. Res. Dev. 2012, 60, 785–806.

- Sardone, N.B.; Devlin-Scherer, R. Teacher Candidate Responses to Digital Games. J. Res. Technol. Educ. 2010, 42, 409–425.

- Ott, M.; Pozzi, F. Digital games as creativity enablers for children. Behav. Inf. Technol. 2012, 31, 1011–1019.

- Bottino, R.M.; Ott, M.; Tavella, M. Serious Gaming at School. Int. J. Game-Based Learn. 2014, 4, 21–36.

- Pivec, M. Editorial: Play and learn: Potentials of game-based learning. Br. J. Educ. Technol. 2007, 38, 387–393.

- Hong, J.-C.; Cheng, C.-L.; Hwang, M.-Y.; Lee, C.-K.; Chang, H.-Y. Assessing the educational values of digital games. J. Comput. Assist. Learn. 2009, 25, 423–437.

- de Freitas, S.; Oliver, M. How can exploratory learning with games and simulations within the curriculum be most effectively evaluated? Comput. Educ. 2006, 46, 249–264.

- Franco, C.; Mañas, I.; Cangas, A.J.; Gallego, J. Exploring the Effects of a Mindfulness Program for Students of Secondary School. Int. J. Knowl. Soc. Res. 2011, 2, 14–28. Available online: https://econpapers.repec.org/RePEc:igg:jksr00:v:2:y:2011:i:1:p:14-28 (accessed on 3 August 2020).

- Robertson, D.; Miller, D. Learning gains from using games consoles in primary classrooms: A randomized controlled study. Procedia Soc. Behav. Sci. 2009, 1, 1641–1644.

- De Freitas, S.I. Using games and simulations for supporting learning. Learn. Media Technol. 2006, 31, 343–358.

- Hsiao, H.-S.; Chang, C.-S.; Lin, C.-Y.; Hu, P.-M. Development of children’s creativity and manual skills within digital game-based learning environment. J. Comput. Assist. Learn. 2014, 30, 377–395.

- Dabbagh, N.; Benson, A.D.; Denham, A.; Joseph, R. Learning Technologies and Globalization: Pedagogical Frameworks and Applications; Springer: Berlin/Heidelberg, Germany, 2015.

- Gee, J.P. What video games have to teach us about learning and literacy. Comput. Entertain. 2003, 1, 20.

- Boyle, E.A.; MacArthur, E.W.; Connolly, T.M.; Hainey, T.; Manea, M.; Kärki, A.; van Rosmalen, P. A narrative literature review of games, animations and simulations to teach research methods and statistics. Comput. Educ. 2014, 74, 1–14.

- Vogel, J.J.; Vogel, D.S.; Cannon-Bowers, J.; Bowers, C.A.; Muse, K.; Wright, M. Computer Gaming and Interactive Simulations for Learning: A Meta-Analysis. J. Educ. Comput. Res. 2006, 34, 229–243.

- Ketelhut, D.J.; Schifter, C.C. Teachers and game-based learning: Improving understanding of how to increase efficacy of adoption. Comput. Educ. 2011, 56, 539–546.

- Fullan, M. The New Meaning of Educational Change, 5th ed.; Routledge: London, UK, 2015.

- Bakar, A.; Inal, Y.; Cagiltay, K. Use of Commercial Games for Educational Purposes: Will Today’s Teacher. In EdMedia + Innovate Learning; Association for the Advancement of Computing in Education (AACE): Waynesville, NC, USA, 2006; pp. 1757–1762.

- Baek, Y.K. What Hinders Teachers in Using Computer and Video Games in the Classroom? Exploring Factors Inhibiting the Uptake of Computer and Video Games. Cyberpsychol. Behav. 2008, 11, 665–671.

- Teo, T. Pre-service teachers’ attitudes towards computer use: A Singapore survey. Australas. J. Educ. Technol. 2008, 24, 413–424.

- Bourgonjon, J.; De Grove, F.; De Smet, C.; Van Looy, J.; Soetaert, R.; Valcke, M. Acceptance of game-based learning by secondary school teachers. Comput. Educ. 2013, 67, 21–35.

- De Grove, F.; Bourgonjon, J.; Van Looy, J. Digital games in the classroom? A contextual approach to teachers’ adoption intention of digital games in formal education. Comput. Hum. Behav. 2012, 28, 2023–2033.

- Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340.

- Schifter, C. Infusing Technology into the Classroom; IGI Global: Hershey, PA, USA, 2008.

- Proctor, M.D.; Marks, Y. A survey of exemplar teachers’ perceptions, use, and access of computer-based games and technology for classroom instruction. Comput. Educ. 2013, 62, 171–180.

- Allsop, Y.; Yeniman, E.; Screpanti, M. Teachers’ Beliefs about Game Based Learning; A Comparative Study of Pedagogy, Curriculum and Practice in Italy, Turkey and the UK. In Proceedings of the 7th European Conference on Games Based Learning, Porto, Portugal, 3–4 October 2013; pp. 1–10.

- Dickey, M.D. K-12 teachers encounter digital games: A qualitative investigation of teachers’ perceptions of the potential of digital games for K-12 education. Interact. Learn. Environ. 2015, 23, 485–495.

- Can, G.; Cagiltay, K. Turkish Prospective Teachers’ Perceptions Regarding the Use of Computer Games with Educational Features. Educ. Technol. Soc. 2006, 9, 308–321.

- Schrader, P.G.; Zheng, D.; Young, M. Teachers’ Perceptions of Video Games: MMOGs and the Future of Preservice. Innov. J. Online Educ. 2006, 2, 3.

- Li, Q. Digital games and learning: A study of preservice teachers’ perceptions. Int. J. Play 2013, 2, 101–116.

- Ruggiero, D. Video Games in the Classroom: The Teacher Point of View. In Proceedings of the Games for Learning Workshop of the Foundations of Digital Games Conference, Chania, Greece, 14–17 May 2013.

- Huizenga, J.; Dam, G.T.; Voogt, J.; Admiraal, W. Teacher perceptions of the value of game-based learning in secondary education. Comput. Educ. 2017, 110, 105–115.

- Admiraal, W.; Huizenga, J.; Akkerman, S.; Dam, G.T. The concept of flow in collaborative game-based learning. Comput. Hum. Behav. 2011, 27, 1185–1194.

- Becker, K.; Jacobsen, M.D. Games for Learning: Are Schools Ready for What’s To Come? In DiGRA; University of Calgary: Calgary, AB, Canada, 2005; Available online: http://www.digra.org/wp-content/uploads/digital-library/06278.39448.pdf (accessed on 1 March 2021).

- Barbour, M.; Evans, M.; Toker, S. Making Sense of Video Games: Pre-Service Teachers Struggle with This New Medium. In Proceedings of the Society for Information Technology and Teacher Education International Conference, Charleston, SC, USA, 2–6 March 2009; pp. 1367–1372.

- Kenny, R.F.; McDaniel, R. The role teachers’ expectations and value assessments of video games play in their adopting and integrating them into their classrooms. Br. J. Educ. Technol. 2011, 42, 197–213.

- Kennedy-Clark, S. Pre-service teachers’ perspectives on using scenario-based virtual worlds in science education. Comput. Educ. 2011, 57, 2224–2235.

- Clark, D.B.; Tanner-Smith, E.E.; Killingsworth, S.S. Digital Games, Design, and Learning. Rev. Educ. Res. 2016, 86, 79–122.

- Kiili, K. Digital game-based learning: Towards an experiential gaming model. Internet High. Educ. 2005, 8, 13–24.

- Self, J. Bypassing the intractable problem of student modelling. Intell. Tutoring Syst. Crossroads Artif. Intell. Educ. 1990, 41, 107–123.

- Bull, S.; Kay, J. Student models that invite the learner in: The smili open learner modelling framework. Int. J. Artif. Intell. Educ. 2007, 17, 89–120.

- Hsiao, I.-H.; Sosnovsky, S.; Brusilovsky, P. Guiding students to the right questions: Adaptive navigation support in an E-Learning system for Java programming. J. Comput. Assist. Learn. 2010, 26, 270–283.

- Mitrovic, A.; Martin, B. Evaluating the effect of open student models on self-assessment. Int. J. Artif. Intell. Educ. 2007, 17, 121–144.

- Bull, S.; Mabbott, A.; Issa, A.S.A. UMPTEEN: Named and anonymous learner model access for instructors and peers. Int. J. Artif. Intell. Educ. 2007, 17, 227–253.

- Brusilovsky, P.; Somyurek, S.; Guerra, J.; Hosseini, R.; Zadorozhny, V.; Durlach, P.J. Open Social Student Modeling for Personalized Learning. IEEE Trans. Emerg. Top. Comput. 2015, 4, 450–461.

- Hsiao, I.-H.; Brusilovsky, P. Motivational Social Visualizations for Personalized E-Learning. In Transactions on Petri Nets and Other Models of Concurrency XV; Springer: Berlin/Heidelberg, Germany, 2012; Volume 19, pp. 153–165.

- Hsiao, I.-H.; Bakalov, F.; Brusilovsky, P.; König-Ries, B. Progressor: Social navigation support through open social student modeling. New Rev. Hypermedia Multimedia 2013, 19, 112–131.

- Brusilovsky, P. Intelligent Interfaces for Open Social Student Modeling. In Proceedings of the 2017 ACM Workshop on Intelligent Interfaces for Ubiquitous and Smart Learning, Limassol, Cyprus, 13 March 2017; p. 1.

- Guerra, J.; Hosseini, R.; Somyurek, S.; Brusilovsky, P. An Intelligent Interface for Learning Content. In Proceedings of the 21st International Conference on Evaluation and Assessment in Software Engineering, Karlskrona, Sweden, 15–16 June 2017; pp. 152–163.

- Law, C.-Y.; Grundy, J.; Cain, A.; Vasa, R.; Cummaudo, A. User Perceptions of Using an Open Learner Model Visualisation Tool for Facilitating Self-regulated Learning. In Proceedings of the Nineteenth Australasian Computing Education Conference on-ACE ’17, Geelong, Australia, 31 January–3 February 2017; pp. 55–64.

- Bull, S.; Kay, J. Smili: A Framework for Interfaces to Learning Data in Open Learner Models, Learning Analytics and Related Fields. Int. J. Artif. Intell. Educ. 2016, 26, 293–331.

- Bull, S.; Gakhal, I.; Grundy, D.; Johnson, M.; Mabbott, A.; Xu, J. Preferences in Multiple-View Open Learner Models. Lect. Notes Comput. Sci. 2010, 6383, 476–481.

- Jacovina, M.E.; Snow, E.L.; Allen, L.K.; Roscoe, R.D.; Weston, J.L.; Dai, J.; McNamara, D.S. How to Visualize Success: Presenting Complex Data in a Writing Strategy Tutor. In Proceedings of the 8th International Conference on Educational Data Mining, Madrid, Spain, 26–29 June 2015; Available online: www.jta.org (accessed on 5 May 2020).

- Tervakari, A.-M.; Silius, K.; Koro, J.; Paukkeri, J.; Pirttilä, O. Usefulness of information visualizations based on educational data. In Proceedings of the 2014 IEEE Global Engineering Education Conference (EDUCON), Istanbul, Turkey, 3–5 April 2014; pp. 142–151.

- Ferguson, R.; Shum, S.B. Social learning analytics. In Proceedings of the 2nd International Conference on Learning Analytics and Knowledge, Vancouver, BC, Canada, 29 April–2 May 2012; pp. 23–33.

- Park, Y.; Jo, I.-H. Development of the Learning Analytics Dashboard to Support Students’ Learning Performance. J. Univers. Comput. Sci. 2015, 21, 110.

- Dyckhoff, A.; Zielke, D.; Bültmann, M.; Chatti, M.; Schroeder, U. Design and implementation of a learning analytics toolkit for teachers. J. Educ. Technol. Soc. 2012, 15, 58–76. Available online: http://www.ifets.info/ (accessed on 5 May 2020).

- Leony, D.; Pardo, A.; Valentín, L.D.L.F.; De Castro, D.S.; Kloos, C.D. GLASS. In Proceedings of the 2nd International Conference on Digital Access to Textual Cultural Heritage, Göttingen, Germany, 1–2 June 2017; pp. 162–163.

- Santos, J.L.; Govaerts, S.; Verbert, K.; Duval, E. Goal-oriented visualizations of activity tracking. In Proceedings of the 2nd International Conference on Cryptography, Security and Privacy, Berlin, Germany, 3–4 November 2012; p. 143.

- Bull, S.; Johnson, M.D.; Masci, D.; Biel, C. Integrating and visualising diagnostic information for the benefit of learning. In Measuring and Visualizing Learning in the Information-Rich Classroom; Reimann, P., Bull, S., Kickmeier-Rust, M., Vatrapu, R.K., Wasson, B., Eds.; Routledge Taylor and Francis: London, UK, 2015; pp. 167–180.

- Bull, S.; Ginon, B.; Boscolo, C.; Johnson, M. Introduction of learning visualisations and metacognitive support in a persuadable open learner model. In Proceedings of the Sixth International Conference on Information and Communication Technologies and Development: Full Papers-Volume 1, Cape Town, South Africa, 7–10 December 2016; pp. 30–39.

- Mathews, M.; Mitrovic, A.; Lin, B.; Holland, J.; Churcher, N. Do Your Eyes Give It Away? Using Eye Tracking Data to Understand Students’ Attitudes towards Open Student Model Representations. In Proceedings of the International Conference on Intelligent Tutoring Systems, Chania, Greece, 14–18 June 2012; pp. 422–427.

- Weber, G.; Brusilovsky, P. ELM-ART: An adaptive versatile system for Web-based instruction. Int. J. Artif. Intell. Educ. (IJAIED) 2001, 12, 351–384.

- Duan, D.; Mitrovic, A.; Churcher, N. Evaluating the Effectiveness of Multiple Open Student Models in EER-Tutor. In Proceedings of the International Conference on Computers in Education, Asia-Pacific Society for Computers in Education, Selangor, Malaysia, 29 November–3 December 2010; pp. 86–88.

- Papanikolaou, K.A.; Grigoriadou, M.; Kornilakis, H.; Magoulas, G.D. Personalizing the Interaction in a Web-based Educational Hypermedia System: The case of INSPIRE. User Model. User Adapt. Interact. 2003, 13, 213–267.

- Bull, S.; Mabbott, A. 20,000 Inspections of a Domain-Independent Open Learner Model with Individual and Comparison Views. In Proceedings of the International Conference on Intelligent Tutoring Systems, Jhongli, Taiwan, 26–30 June 2006; Volume 4053, pp. 422–432.

- Clark, J. Multi-Level Pie Charts. 2006. Available online: http://www.neoformix.com/2006/MultiLevelPieChart.html (accessed on 5 May 2020).

- Webber, R.; Herbert, R.D.; Jiang, W. Space-filling Techniques in Visualizing Output from Computer Based Economic Models. Comput. Econ. Financ. 2006, 67. Available online: https://ideas.repec.org/p/sce/scecfa/67.html (accessed on 15 May 2021).

- Conejo, R.; Trella, M.; Cruces, I.; García, R. INGRID: A Web Service Tool for Hierarchical Open Learner Model Visualization. In Proceedings of the International Conference on User Modeling, Adaptation, and Personalization, Montreal, QC, Canada, 16–20 July 2012.

- Maries, A.; Kumar, A. The Effect of Open Student Model on Learning: A Study. In Proceedings of the 13th International Conference on Artificial Intelligence in Education, Los Angeles, CA, USA, 9–13 July 2007.

- Mabbott, A.; Bull, S. Student Preferences for Editing, Persuading, and Negotiating the Open Learner Model. In Proceedings of the International Conference on Intelligent Tutoring Systems, Jhongli, Taiwan, 26–30 June 2006.

- Mabbott, A.; Bull, S. Alternative Views on Knowledge: Presentation of Open Learner Models. In Proceedings of the International Conference on Intelligent Tutoring Systems, Alagoas, Brazil, 30 August–3 September 2004; Volume 3220, pp. 689–698.

- van Labeke, N.; Brna, P.; Morales, R. Opening up the interpretation process in an open learner model. Int. J. Artif. Intell. Educ. 2007, 17, 305–338.

- Dimitrova, V. STyLE-OLM: Interactive Open Learner Modelling. Int. J. Artif. Intell. Educ. 2003, 13, 35–78.

- Shneiderman, B. Discovering Business Intelligence Using Treemap Visualizations. B-EYE-Network-Boulder CO USA, 11 April 2006. Available online: https://www.cs.umd.edu/users/ben/papers/Shneiderman2011Discovering.pdf (accessed on 15 May 2021).

- Bull, S.; Brusilovsky, P.; Guerra, J.; Araujo, R. Individual and peer comparison open learner model visualisations to identify what to work on next. In CEUR Workshop Proceedings; University of Pittsburgh: Pittsburgh, PA, USA, 2016.

- Leonardou, A.; Rigou, M.; Garofalakis, J.D. Open Learner Models in Smart Learning Environments. In Cases on Smart Learning Environments; Darshan Singh, B.S.A., Bin Mohammed, H., Shriram, R., Robeck, E., Nkwenti, M.N., Eds.; IGI Global: Hershey, PA, USA, 2018; pp. 346–368.

- Xu, J.; Bull, S. Encouraging advanced second language speakers to recognise their language difficulties: A personalised computer-based approach. Comput. Assist. Lang. Learn. 2010, 23, 111–127.

- Johnson, M.; Bull, S. Belief Exploration in a Multiple-Media Open Learner Model for Basic Harmony. In Artificial Intelligence in Education: Building Learning Systems that Care: From Knowledge Representation to Affective Modelling; du Boulay, B., Dimitrova, A.G.V., Mizoguchi, R., Eds.; IOS Press: Amsterdam, The Netherlands, 2009; pp. 299–306.

- Brusilovsky, P.; Sosnovsky, S. Engaging students to work with self-assessment questions. In Proceedings of the 10th Annual SIGCSE Conference on Innovation and Technology in Computer Science Education-ITiCSE ’05, Caparica, Portugal, 27–29 June 2005; Volume 37, pp. 251–255.

- Bull, S.; McKay, M. An Open Learner Model for Children and Teachers: Inspecting Knowledge Level of Individuals and Peers. In Proceedings of the International Conference on Intelligent Tutoring Systems, Alagoas, Brazil, 30 August–3 September 2004; Volume 3220, pp. 646–655.

- Lee, S.J.H.; Bull, S. An Open Learner Model to Help Parents Help their Children. Technol. Instr. Cogn. Learn. 2008, 6, 29.

- Brusilovsky, P.; Hsiao, I.; Folajimi, Y. QuizMap: Open Social Student Modeling and Adaptive Navigation Support with TreeMaps. In European Conference on Technology Enhanced Learning; Springer: Berlin/Heidelberg, Germany, 2011; pp. 71–82.

- Linton, F.; Schaefer, H.-P. Recommender Systems for Learning: Building User and Expert Models through Long-Term Observation of Application Use. User Model. User Adapt. Interact. 2000, 10, 181–208.

- Leonardou, A.; Rigou, M. An adaptive mobile casual game for practicing multiplication. In Proceedings of the 20th Pan-Hellenic Conference on Informatics, Patras, Greece, 10–12 November 2016; Association for Computing Machinery (ACM): New York, NY, USA, 2016; p. 29.

- Leonardou, A.; Rigou, M.; Garofalakis, J. Opening User Model Data for Motivation and Learning: The Case of an Adaptive Multiplication Game. In Proceedings of the 11th International Conference on Computer Supported Education, Heraklion, Greece, 2–4 May 2019; pp. 383–390.

- Leonardou, A.; Rigou, M.; Garofalakis, J. Adding Social Comparison to Open Learner Modeling. In Proceedings of the 2019 10th International Conference on Information, Intelligence, Systems and Applications (IISA), Patras, Greece, 15–17 July 2019; pp. 1–7.

- Gomez, M.; Ruipérez-Valiente, J.; Martínez, P.; Kim, Y. Applying Learning Analytics to Detect Sequences of Actions and Common Errors in a Geometry Game. Sensors 2021, 21, 1025.

- Ware, C. Information Visualization-Perception for Design, 4th ed.; Elsevier: Amsterdam, The Netherlands, 2020.

- Cukier, K. Data, Data Everywhere: A Special Report on Managing. Economist 2010, 3–18. Available online: http://faculty.smu.edu/tfomby/eco5385_eco6380/The%20Economist-data-data-everywhere.pdf (accessed on 15 May 2021).

- Kelleher, C.; Wagener, T. Ten guidelines for effective data visualization in scientific publications. Environ. Model. Softw. 2011, 26, 822–827.

- Festinger, L. A Theory of Social Comparison Processes. Hum. Relat. 1954, 7, 117–140.

- Shepherd, M.M.; Briggs, R.; Reinig, B.A.; Yen, J.; Nunamaker, J.F. Invoking Social Comparison to Improve Electronic Brainstorming: Beyond Anonymity. J. Manag. Inf. Syst. 1995, 12, 155–170.

- Dijkstra, P.; Kuyper, H.; Van Der Werf, G.; Buunk, A.P.; Van Der Zee, Y.G. Social Comparison in the Classroom: A Review. Rev. Educ. Res. 2008, 78, 828–879.

- Chik, A. Digital gaming and social networking: English teachers’ perceptions, attitudes and experiences. Pedagog. Int. J. 2011, 6, 154–166.

| Variables | N (%) |
|---|---|
| Gender | |
| Male | 23 (11.1) |
| Female | 159 (76.8) |
| Age | |
| <30 | 30 (14.5) |
| 30–45 | 96 (46.4) |
| >45 | 56 (27.1) |
| Teaching experience in years | |
| <5 | 34 (16.4) |
| 5–15 | 67 (32.4) |
| >15 | 81 (39.1) |
| Teacher’s playing hours | |
| 0 | 57 (27.5) |
| >0 and ≤2 | 89 (43.0) |
| >2 and ≤5 | 28 (13.5) |
| >5 | 8 (3.9) |
| School area | |
| Urban | 113 (54.6) |
| Provincial | 69 (33.3) |

| Name of Variable (Acr.) | Min | Max | Mean Score | Std. Deviation |
|---|---|---|---|---|
| Teacher’s attitude (perception) toward digital games (ADG) | 2 | 5 | 3.97 | 0.672 |
| Teacher perception about MG’s usefulness (MGU) | 2 | 6 | 5.32 | 0.784 |
| Teachers’ acceptance of MG (TA) | 2 | 6 | 5.16 | 0.927 |
| Barriers in digital games (BD) | 2 | 6 | 4.52 | 1.066 |
| Interface of MG (I) | 3 | 6 | 5.10 | 0.759 |
| Social opening of MG (SG) | 2 | 6 | 5.07 | 0.890 |

| Constructs-Items | Mean | Standard Deviation | Cronbach’s α |
|---|---|---|---|
| Barriers (BD) | 4.52 | 1.066 | 0.764 |
| V30 | 4.42 | 1.520 | |
| V31 | 4.78 | 1.255 | |
| V32 | 4.29 | 1.447 | |
| V33 | 4.60 | 1.382 | |
| V34 | 4.68 | 1.198 | |
| MG Interface (I) | 5.10 | 0.759 | 0.741 |
| V36 | 5.04 | 0.891 | |
| V37 | 5.15 | 0.879 | |
| Social opening of MG (SG) | 5.07 | 0.890 | 0.940 |
| V39 | 5.02 | 1.022 | |
| V40 | 5.02 | 1.040 | |
| V41 | 4.98 | 1.005 | |
| V42 | 5.14 | 0.935 | |
| V43 | 5.18 | 0.947 | |
| Teacher’s Attitude (perception) toward digital games (ADG) | 3.97 | 0.890 | 0.854 |
| V14 | 4.94 | 0.953 | |
| V15 | 5.03 | 0.895 | |
| V16 | 5.15 | 0.937 | |
| V17 | 2.71 | 1.291 | |
| V19 | 4.55 | 1.250 | |
| V21 | 3.92 | 1.336 | |
| V22 | 4.96 | 1.179 | |
| V23 | 4.63 | 1.158 | |
| V24 | 3.68 | 1.468 | |
| V25 | 4.19 | 1.249 | |
| V26 | 4.08 | 1.311 | |
| Teacher perception about MG’s usefulness (MGU) | 5.32 | 0.784 | 0.848 |
| V34 | 5.27 | 0.814 | |
| V35 | 5.38 | 0.869 | |
| Teachers’ acceptance of MG (TA) | 5.16 | 0.927 | 0.828 |
| V36 | 5.03 | 1.090 | |
| V37 | 5.26 | 0.915 | |
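
The Cronbach’s α values reported per construct measure internal consistency, and the standard formula is α = k/(k − 1) · (1 − Σ item variances / variance of the summed score), where k is the number of items. The following is a minimal sketch of that calculation, not the authors’ analysis script: the function name `cronbach_alpha` and the response lists are hypothetical and invented purely for illustration, and they are not the study’s data.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for one construct.

    `items` is a list of per-item score lists (hypothetical layout):
    one inner list per questionnaire item, one score per respondent.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(scores) != n for scores in items):
        raise ValueError("every item needs one score per respondent")

    # Summed score of each respondent across all items of the construct.
    totals = [sum(row) for row in zip(*items)]

    # Sample variances; the alpha ratio is unchanged as long as the same
    # variance definition is used for the items and for the totals.
    item_variance_sum = sum(variance(scores) for scores in items)
    total_variance = variance(totals)

    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Invented 6-point Likert answers from five respondents to two items.
item_a = [5, 4, 6, 5, 3]
item_b = [5, 5, 6, 4, 3]
alpha = cronbach_alpha([item_a, item_b])
print(round(alpha, 3))
```

Two items that always move together push α toward 1, while unrelated items push it toward 0, which is why the constructs above are reported with α next to their item means.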

| Variables (Hypothesis Testing) | Correlation Coefficient | p-Value |
|---|---|---|
| MGU-ADG (H1) | 0.569 | 0.0001 |
| TA-BD (H3) | 0.374 | 0.0001 |
| TA-ADG (H4) | 0.594 | 0.0001 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Leonardou, A.; Rigou, M.; Panagiotarou, A.; Garofalakis, J. The Case of a Multiplication Skills Game: Teachers’ Viewpoint on MG’s Dashboard and OSLM Features. Computers 2021, 10, 65. https://doi.org/10.3390/computers10050065
