Transfer Learning-Based Lightweight SSD Model for Detection of Pests in Citrus

Abstract

1. Introduction

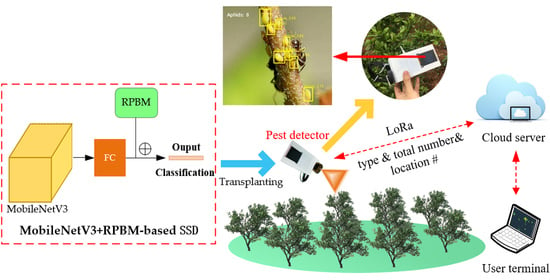

- We compare the MobileNet, GoogLeNet, ResNet, and VGGNet feature extraction networks and optimize the best-performing one to further improve the detection speed and accuracy of the SSD.

- Before prediction, we attach a miniaturized residual block built on a 1 × 1 convolution kernel to each feature map used for prediction; these blocks predict the category scores and bounding-box offsets.

- The modified MobileNetV3-SSD model is deployed on an embedded terminal that we developed, enabling rapid pest monitoring.
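To make the second point above concrete: the original SSD predicts class scores and box offsets with small 3 × 3 convolutions on each feature map, so replacing them with 1 × 1 kernels reduces the head weights roughly ninefold. The sketch below counts head parameters under assumed sizes (256 input channels, 6 anchors per location, 3 classes, all arbitrary choices) and shows a pure-Python 1 × 1 convolution with a residual connection. The exact layout of the authors' residual block is not given, so `residual_1x1` and the layer sizes are hypothetical.

```python
# Hypothetical sketch: parameter cost of SSD prediction heads and a
# 1x1 residual block. Layer sizes below are illustrative assumptions.

def conv_params(k, c_in, c_out, bias=True):
    """Number of weights (plus biases) in a k x k convolution layer."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def head_params(k, c_in, num_anchors, num_classes):
    """SSD-style head on one feature map: one conv for class scores,
    one conv for the 4 box offsets of every anchor at each location."""
    cls = conv_params(k, c_in, num_anchors * num_classes)
    box = conv_params(k, c_in, num_anchors * 4)
    return cls + box


def conv1x1(feature, weight, bias):
    """1x1 convolution on a C_in x H x W map stored as nested lists.
    Each output value is a linear combination of the input channels
    at the same spatial location, plus a bias."""
    c_in, h, w = len(feature), len(feature[0]), len(feature[0][0])
    return [
        [
            [bias[o] + sum(weight[o][c] * feature[c][y][x] for c in range(c_in))
             for x in range(w)]
            for y in range(h)
        ]
        for o in range(len(weight))
    ]


def residual_1x1(feature, weight, bias):
    """Hypothetical residual block y = x + conv1x1(x); needs C_out == C_in."""
    conv = conv1x1(feature, weight, bias)
    return [
        [[feature[c][y][x] + conv[c][y][x] for x in range(len(feature[0][0]))]
         for y in range(len(feature[0]))]
        for c in range(len(feature))
    ]


# Assumed: 256-channel feature map, 6 anchors per location, 3 classes.
print(head_params(3, 256, 6, 3))  # 96810 with 3x3 kernels
print(head_params(1, 256, 6, 3))  # 10794 with 1x1 kernels
```

The reduction comes purely from the kernel area (9 versus 1 weight per input-output channel pair). A 1 × 1 head no longer aggregates spatial context on its own, which may be one motivation for pairing it with an additional block.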

2. Materials and Methods

2.1. Datasets of Pests

2.2. Modified SSD

2.2.1. Optimization of Feature Extraction Networks

1. VGG16
2. ResNet
3. GoogLeNet
4. Modified MobileNetV3
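Of the backbones listed above, the MobileNet family is the lightest, mainly because it replaces standard convolutions with depthwise separable ones; MobileNetV3 also introduces the hard-swish activation. The sketch below counts weights for one 3 × 3 layer in both forms and defines hard-swish. The 256-channel layer size is an illustrative assumption, and the authors' specific modifications to MobileNetV3 are not reproduced here.

```python
# Illustrative sketch: why MobileNet-style backbones are light.
# Layer sizes are assumptions chosen for the example.

def standard_conv_weights(k, c_in, c_out):
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_weights(k, c_in, c_out):
    """Depthwise k x k conv (one filter per input channel)
    followed by a pointwise 1 x 1 conv that mixes channels."""
    return k * k * c_in + c_in * c_out


def relu6(x):
    """ReLU clipped at 6."""
    return min(max(x, 0.0), 6.0)


def hard_swish(x):
    """h-swish(x) = x * ReLU6(x + 3) / 6, the activation used in MobileNetV3."""
    return x * relu6(x + 3.0) / 6.0


std = standard_conv_weights(3, 256, 256)
sep = depthwise_separable_weights(3, 256, 256)
print(std, sep, round(std / sep, 2))  # 589824 67840 8.69
print([round(hard_swish(v), 3) for v in (-4.0, 0.0, 1.0, 3.0)])
```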

2.2.2. Predictive Convolution Kernel Miniaturization

2.2.3. Model Training Environment

2.2.4. Model Evaluation Indicators
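The result tables report mAP and AR. As a minimal sketch of one common way to compute mAP, the code below uses IoU to score box overlap and PASCAL VOC-style all-point interpolated AP per class, averaged across classes. The IoU threshold, the interpolation scheme, and the function names are assumptions for illustration; the matching of detections to ground-truth boxes is assumed to be done beforehand.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class (PASCAL VOC 2010+ style).

    scores: confidence of each detection.
    is_tp:  whether each detection was matched to a previously unmatched
            ground-truth box at the chosen IoU threshold (assumed precomputed).
    num_gt: number of ground-truth boxes of this class (must be > 0).
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for j in range(len(precisions) - 2, -1, -1):
        precisions[j] = max(precisions[j], precisions[j + 1])
    # Area under the envelope: precision times each recall step.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap):
    """mAP: unweighted mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # 1/7, about 0.1429
print(average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2))
```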

2.3. Pest Detection Embedded Mobile System

3. Results

3.1. Results of Citrus Pest Identification Model

3.1.1. Analysis of Model Training Process

3.1.2. Optimization Results and Analysis of Feature Network

3.1.3. Comparison with Other Frameworks

3.2. Analysis of the Practical Validation Results of Citrus Pest Identification Model

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Khanramaki, M.; Askari Asli-Ardeh, E.; Kozegar, E. Citrus pests classification using an ensemble of deep learning models. Comput. Electron. Agric. 2021, 186, 106192.

2. He, X.; Bonds, J.; Herbst, A.; Langenakens, J. Recent development of unmanned aerial vehicle for plant protection in East Asia. Int. J. Agric. Biol. Eng. 2017, 10, 18–30.

3. Yang, J.; Guo, X.; Li, Y.; Marinello, F.; Ercisli, S.; Zhang, Z. A survey of few-shot learning in smart agriculture: Developments, applications, and challenges. Plant Methods 2022, 18, 28.

4. Njoroge, A.W.; Mankin, R.W.; Smith, B.; Baributsa, D. Effects of hypoxia on acoustic activity of two stored-product pests, adult emergence, and grain quality. J. Econ. Entomol. 2019, 112, 1989–1996.

5. Zhang, R.R. PEDS-AI: A Novel Unmanned Aerial Vehicle Based Artificial Intelligence Powered Visual-Acoustic Pest Early Detection and Identification System for Field Deployment and Surveillance. In Proceedings of the 2023 IEEE Conference on Technologies for Sustainability (SusTech), Portland, OR, USA, 23 May 2023.

6. Zhou, B.; Dai, Y.; Li, C.; Wang, J. Electronic nose for detection of cotton pests at the flowering stage. Trans. Chin. Soc. Agric. Eng. 2020, 36, 194–200.

7. Zheng, Z.; Zhang, C. Electronic noses based on metal oxide semiconductor sensors for detecting crop diseases and insect pests. Comput. Electron. Agric. 2022, 197, 106988.

8. Wu, X.; Zhang, W.; Qiu, Z.; Cen, H.; He, Y. A novel method for detection of Pieris rapae larvae on cabbage leaves using NIR hyperspectral imaging. Appl. Eng. Agric. 2016, 32, 311–316.

9. Qin, Y.; Wu, Y.; Wang, Q.; Yu, S. Method for pests detecting in stored grain based on spectral residual saliency edge detection. Grain Oil Sci. Technol. 2019, 2, 33–38.

10. Yang, X.; Liu, M.; Xu, J.; Zhao, L.; Wei, S.; Li, W.; Chen, M.; Chen, M.; Li, M. Image segmentation and recognition algorithm of greenhouse whitefly and thrip adults for automatic monitoring device. Trans. Chin. Soc. Agric. Eng. 2018, 34, 164–170.

11. Song, L. Recognition model of disease image based on discriminative deep belief networks. Comput. Eng. Appl. 2017, 53, 32–36.

12. Martineau, M.; Conte, D.; Raveaux, R.; Arnault, I.; Munier, D.; Venturini, G. A survey on image-based pest classification. Pattern Recognit. 2017, 65, 273–284.

13. Deng, L.; Wang, Y.; Han, Z.; Yu, R. Research on insect pest image detection and recognition based on bio-inspired methods. Biosyst. Eng. 2018, 169, 139–148.

14. Mukhiddinov, M.; Muminov, A.; Cho, J. Improved Classification Approach for Fruits and Vegetables Freshness Based on Deep Learning. Sensors 2022, 22, 8192.

15. Lu, Y.; Yi, S.; Zeng, N.; Liu, Y.; Zhang, Y. Identification of rice diseases using deep convolutional neural networks. Neurocomputing 2017, 267, 378–384.

16. Liu, B.; Zhang, Y.; He, D.J.; Li, Y.X. Identification of Apple Leaf Diseases Based on Deep Convolutional Neural Networks. Symmetry 2018, 10, 11.

17. Li, H.; Long, C.; Zeng, M.; Shen, J. A detecting method for the rape pests based on deep convolutional neural network. J. Hunan Agric. Univ. (Nat. Sci.) 2019, 45, 560–564.

18. Kuzuhara, H.; Takimoto, H.; Sato, Y.; Kanagawa, A. Insect pest detection and identification method based on deep learning for realizing a pest control system. In Proceedings of the 2020 59th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), Chiang Mai, Thailand, 23–26 September 2020.

19. He, Y.; Zeng, H.; Fan, Y.Y.; Ji, S.; Wu, J. Application of deep learning in integrated pest management: A real-time system for detection and diagnosis of oilseed rape pests. Mob. Inf. Syst. 2019, 2019, 4570808.

20. Qiu, G.; Tao, W.; Huang, Y.; Zhang, F. Research on farm pest monitoring and early warning system under the NB-IoT Framework. J. Fuqing Branch Fujian Norm. Univ. 2020, 162, 1–7.

21. Luo, Q.; Huang, R.; Zhu, Y. Real-time monitoring and prewarning system for grain storehouse pests based on deep learning. J. Jiangsu Univ. (Nat. Sci. Ed.) 2019, 40, 203–208.

22. Ghazi, M.M.; Yanikoglu, B.; Aptoula, E. Plant identification using deep neural networks via optimization of transfer learning parameters. Neurocomputing 2017, 235, 228–235.

23. Khan, M.A.; Akram, T.; Sharif, M.; Awais, M.; Javed, K.; Ali, H.; Saba, T. CCDF: Automatic system for segmentation and recognition of fruit crops diseases based on correlation coefficient and deep CNN features. Comput. Electron. Agric. 2018, 155, 220–236.

24. Liu, Z.; Gao, J.; Yang, G.; Zhang, H.; He, Y. Localization and Classification of Paddy Field Pests using a Saliency Map and Deep Convolutional Neural Network. Sci. Rep. 2016, 6, 20410.

25. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

26. Al-Qizwini, M.; Barjasteh, I.; Al-Qassab, H.; Radha, H. Deep learning algorithm for autonomous driving using GoogLeNet. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017.

27. Wang, D.; Deng, L.; Ni, J.; Gao, J.; Zhu, H.; Han, Z. Recognition pest by image-based transfer learning. J. Sci. Food Agric. 2019, 99, 4524–4531.

28. Suh, H.K.; Ijsselmuiden, J.; Hofstee, J.W.; Henten, E.J. Transfer learning for the classification of sugar beet and volunteer potato under field conditions. Biosyst. Eng. 2018, 174, 50–65.

29. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the International Conference on Learning Representations 2015, San Diego, CA, USA, 7–9 May 2015.

30. Gavai, N.R.; Jakhade, Y.A.; Tribhuvan, S.A.; Bhattad, R. MobileNets for flower classification using TensorFlow. In Proceedings of the 2017 International Conference on Big Data, IoT and Data Science (BID), Pune, India, 20–22 December 2017.

31. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

32. Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019.

33. Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object Detection in 20 Years: A Survey. Proc. IEEE 2023, 111, 257–276.

34. Wang, L.; Lan, Y.; Liu, Z.; Yue, X.; Deng, S.; Guo, Y. Development and experiment of the portable real-time detection system for citrus pests. Trans. Chin. Soc. Agric. Eng. 2021, 37, 282–288.

35. Wang, S.; Han, Y.; Chen, J.; He, X.; He, X.; Zhang, Z.; Liu, X.; Zhang, K. Weed Density Extraction Based on Few-Shot Learning Through UAV Remote Sensing RGB and Multispectral Images in Ecological Irrigation Area. Front. Plant Sci. 2022, 12, 735230.

36. Karar, M.E.; Alsunaydi, F.; Albusaymi, S.; Alotaibi, S. A new mobile application of agricultural pests recognition using deep learning in cloud computing system. Alex. Eng. J. 2021, 60, 4423–4432.

37. Jin, X.; Sun, Y.; Che, J.; Bagavathiannan, M.; Yu, J.; Chen, Y. A novel deep learning-based method for detection of weeds in vegetables. Pest Manag. Sci. 2022, 78, 1861–1869.

38. Wang, R.J.; Li, X.; Ling, C.X. Pelee: A Real-Time Object Detection System on Mobile Devices. In Proceedings of the Advances in Neural Information Processing Systems 31 (NeurIPS 2018), Palais des Congrès de Montréal, Montréal, QC, Canada, 2–8 December 2018.

39. Wang, C.; Bochkovskiy, A.; Liao, H.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023.

40. Cao, G.; Xie, X.; Yang, W.; Liao, Q.; Shi, G.; Wu, J. Feature-fused SSD: Fast detection for small objects. Proc. SPIE 2018, 10615, 381–388.

| Feature Extraction Network | mAP/% | AR/% | Params/M | moL/ms |
|---|---|---|---|---|
| VGG16 | 89.22 | 91.34 | 584.179 | 679 |
| GoogLeNet | 85.80 | 90.18 | 37.864 | 459 |
| ResNet50 | 90.11 | 96.41 | 1478.179 | 1078 |
| MobileNetV3 | 86.40 | 91.07 | 15.147 | 286 |
| MobileNetV3+RPBM | 86.10 | 91.00 | 10.025 | 185 |
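The arithmetic below, using only the MobileNetV3 and MobileNetV3+RPBM rows of the table above, quantifies what adding RPBM changes. The moL/ms column is treated simply as a time in milliseconds because the abbreviation is not defined in the table.

```python
# Values copied from the MobileNetV3 and MobileNetV3+RPBM rows.
baseline = {"mAP": 86.40, "params_M": 15.147, "moL_ms": 286}
modified = {"mAP": 86.10, "params_M": 10.025, "moL_ms": 185}


def relative_reduction(before, after):
    """Fractional reduction from `before` to `after` (positive = smaller)."""
    return (before - after) / before


param_cut = relative_reduction(baseline["params_M"], modified["params_M"])
time_cut = relative_reduction(baseline["moL_ms"], modified["moL_ms"])
map_drop_pp = baseline["mAP"] - modified["mAP"]  # percentage points

print(f"params: -{param_cut:.1%}, moL: -{time_cut:.1%}, mAP: -{map_drop_pp:.2f} pp")
# params: -33.8%, moL: -35.3%, mAP: -0.30 pp
```

In other words, roughly a third fewer parameters and a third less time cost about 0.3 percentage points of mAP.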

| Framework | mAP/% | Params/M | moL/ms |
|---|---|---|---|
| YOLOv7-tiny [39] | 68.66 | 6.201 | 42 |
| Pelee [38] | 84.44 | 9.430 | 172 |
| FFSSD [40] | 78.98 | 13.550 | 252 |
| MobileNetV3+RPBM+SSD (our) | 86.10 | 10.025 | 185 |

| Type | RRC¹/% | RRWC²/% | TPR/% | CA/% |
|---|---|---|---|---|
| PCM | 82.0 | 9.0 | 91.0 | 90.1 |
| Aphids | 39.0 | 50.0 | 89.0 | 43.8 |
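The footnotes defining RRC and RRWC are missing from the table, but the reported numbers fit two relationships: TPR = RRC + RRWC, and CA = RRC / TPR expressed as a percentage. The check below treats these as inferred assumptions drawn from the data, not as the authors' stated definitions; `implied_metrics` is a hypothetical helper.

```python
# Rows copied from the pest table: (RRC, RRWC, TPR, CA), all in %.
rows = {
    "PCM": (82.0, 9.0, 91.0, 90.1),
    "Aphids": (39.0, 50.0, 89.0, 43.8),
}


def implied_metrics(rrc, rrwc):
    """Inferred (assumed) relationships: TPR = RRC + RRWC and
    CA = 100 * RRC / TPR, rounded to one decimal like the table."""
    tpr = rrc + rrwc
    ca = round(100 * rrc / tpr, 1)
    return tpr, ca


for name, (rrc, rrwc, tpr, ca) in rows.items():
    tpr_hat, ca_hat = implied_metrics(rrc, rrwc)
    print(f"{name}: TPR {tpr_hat} vs {tpr}, CA {ca_hat} vs {ca}")
```

If this reading is correct, most aphid detections (50 of 89 points) were assigned to the wrong class, which is what drives its low CA despite a TPR close to that of PCM.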

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, L.; Shi, W.; Tang, Y.; Liu, Z.; He, X.; Xiao, H.; Yang, Y. Transfer Learning-Based Lightweight SSD Model for Detection of Pests in Citrus. Agronomy 2023, 13, 1710. https://doi.org/10.3390/agronomy13071710

Wang L, Shi W, Tang Y, Liu Z, He X, Xiao H, Yang Y. Transfer Learning-Based Lightweight SSD Model for Detection of Pests in Citrus. Agronomy. 2023; 13(7):1710. https://doi.org/10.3390/agronomy13071710

Chicago/Turabian Style: Wang, Linhui, Wangpeng Shi, Yonghong Tang, Zhizhuang Liu, Xiongkui He, Hongyan Xiao, and Yu Yang. 2023. "Transfer Learning-Based Lightweight SSD Model for Detection of Pests in Citrus" Agronomy 13, no. 7: 1710. https://doi.org/10.3390/agronomy13071710

APA Style: Wang, L., Shi, W., Tang, Y., Liu, Z., He, X., Xiao, H., & Yang, Y. (2023). Transfer Learning-Based Lightweight SSD Model for Detection of Pests in Citrus. Agronomy, 13(7), 1710. https://doi.org/10.3390/agronomy13071710