Mapping of Olive Trees Using Pansharpened QuickBird Images: An Evaluation of Pixel- and Object-Based Analyses

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area and Satellite Image Acquisition

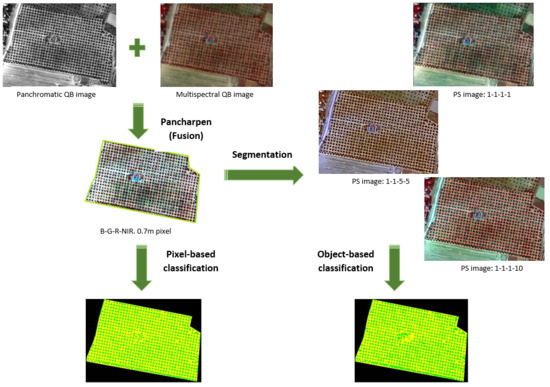

2.2. Data Fusion: Pansharpening of Multispectral Images

2.3. Segmentation

2.4. Classification and Accuracy Assessments

3. Results and Discussion

3.1. Data Fusion: Pansharpening of Multispectral Images

3.2. Segmentation

3.3. Olive Orchard Fields Classification

4. Conclusions

Funding

Acknowledgments

Conflicts of Interest

References

- Precision Agriculture in Europe. Legal, Social and Ethical Considerations. European Parliament (European Parliamentary Research Service). Available online: http://www.europarl.europa.eu/RegData/etudes/STUD/2017/603207/EPRS_STU(2017)603207_EN.pdf (accessed on 24 October 2018).

- The European Parliament and the Council of the European Union. Regulation 1306/2013 of the European Parliament and of the Council of 17 December 2013 on the financing, management and monitoring of the common agricultural policy and repealing Council Regulations (EEC) No 352/78, (EC) No 165/94, (EC) No 2799/98, (EC) No 814/2000, (EC) No 1290/2005 and (EC) No 485/2008 OJ L 347, 20.12.2013. Off. J. Eur. Union 2013, 347, 549–607. [Google Scholar]

- The European Parliament and the Council of the European Union. Regulation 1107/2009 of the European Parliament and of the Council of 21 October 2009 concerning the placing of plant protection products on the market and repealing Council Directives 79/117/EEC and 91/414/EEC. Off. J. Eur. Union 2009, 309, 1–50. [Google Scholar]

- de Castro, A.I.; Jiménez-Brenes, F.M.; Torres-Sánchez, J.; Peña, J.M.; Borra-Serrano, I.; López-Granados, F. 3-D characterization of vineyards using a novel UAV imagery-based OBIA procedure for precision viticulture applications. Remote Sens. 2018, 10, 584. [Google Scholar] [CrossRef]

- Fitzgerald, G.J.; Maas, S.J.; Detar, W.R. Spider mite detection and canopy component mapping in cotton using hyperspectral imagery and spectral mixture analysis. Precis. Agric. 2004, 5, 275–289. [Google Scholar] [CrossRef]

- Yang, C.; Everitt, J.H.; Bradford, J.M. Comparison of QuickBird satellite imagery and airborne imagery for mapping grain sorghum yield patterns. Precis. Agric. 2006, 7, 33–44. [Google Scholar] [CrossRef]

- López-Granados, F.; Torres-Sánchez, J.; Serrano-Pérez, A.; de Castro, A.I.; Mesas-Carrascosa, F.; Peña, J. Early season weed mapping in sunflower using UAV technology: Variability of herbicide treatment maps against weed thresholds. Precis. Agric. 2016, 17, 183–199. [Google Scholar] [CrossRef]

- Huang, Y.; Reddy, K.N.; Fletcher, R.S.; Pennington, D. UAV low-altitude remote sensing for precision weed management. Weed Technol. 2018, 32, 2–6. [Google Scholar] [CrossRef]

- López-Granados, F.; Torres-Sánchez, J.; De Castro, A.; Serrano-Pérez, A.; Mesas-Carrascosa, F.; Peña, J. Object-based early monitoring of a grass weed in a grass crop using high resolution UAV imagery. Agron. Sustain. Dev. 2016, 36, 67. [Google Scholar] [CrossRef]

- Du, Q.; Chang, N.; Yang, C.; Srilakshmi, K.R. Combination of multispectral remote sensing, variable rate technology and environmental modeling for citrus pest management. J. Environ. Manag. 2008, 86, 14–26. [Google Scholar] [CrossRef]

- Percival, D.C.; Gallant, D.; Harrington, T.; Brown, G. Potential for commercial unmanned aerial vehicle use in wild blueberry production. Acta Hortic. 2017, 1180, 233–240. [Google Scholar] [CrossRef]

- Rai, M.; Ingle, A. Role of nanotechnology in agriculture with special reference to management of insect pests. Appl. Microbiol. Biotechnol. 2012, 94, 287–293. [Google Scholar] [CrossRef] [PubMed]

- Herrmann, I.; Vosberg, S.K.; Ravindran, P.; Singh, A.; Chang, H.; Chilvers, M.I.; Conley, S.P.; Townsend, P.A. Leaf and canopy level detection of Fusarium virguliforme (sudden death syndrome) in soybean. Remote Sens. 2018, 10, 426. [Google Scholar] [CrossRef]

- Hillnhütter, C.; Mahlein, A.; Sikora, R.A.; Oerke, E. Remote sensing to detect plant stress induced by Heterodera schachtii and Rhizoctonia solani in sugar beet fields. Field Crops Res. 2011, 122, 70–77. [Google Scholar] [CrossRef]

- Santoso, H.; Gunawan, T.; Jatmiko, R.H.; Darmosarkoro, W.; Minasny, B. Mapping and identifying basal stem rot disease in oil palms in North Sumatra with QuickBird imagery. Precis. Agric. 2011, 12, 233–248. [Google Scholar] [CrossRef]

- Fisher, D.K.; Hinton, J.; Masters, M.H.; Aasheim, C.; Butler, E.S.; Reichgelt, H. Improving irrigation efficiency through remote sensing technology and precision agriculture in SE Georgia. In Proceedings of the ASAE Annual International Meeting 2004, Ottawa, ON, Canada, 1–4 August 2004; pp. 3461–3470. [Google Scholar]

- Gago, J.; Douthe, C.; Coopman, R.E.; Gallego, P.P.; Ribas-Carbo, M.; Flexas, J.; Escalona, J.; Medrano, H. UAVs challenge to assess water stress for sustainable agriculture. Agric. Water Manag. 2015, 153, 9–19. [Google Scholar] [CrossRef]

- Rossini, M.; Fava, F.; Cogliati, S.; Meroni, M.; Marchesi, A.; Panigada, C.; Giardino, C.; Busetto, L.; Migliavacca, M.; Amaducci, S.; et al. Assessing canopy PRI from airborne imagery to map water stress in maize. ISPRS J. Photogramm. Remote Sens. 2013, 86, 168–177. [Google Scholar] [CrossRef]

- Gonzalez-Dugo, V.; Zarco-Tejada, P.; Nicolás, E.; Nortes, P.A.; Alarcón, J.J.; Intrigliolo, D.S.; Fereres, E. Using high resolution UAV thermal imagery to assess the variability in the water status of five fruit tree species within a commercial orchard. Precis. Agric. 2013, 14, 660–678. [Google Scholar] [CrossRef]

- Launeau, P.; Kassouk, Z.; Debaine, F.; Roy, R.; Mestayer, P.G.; Boulet, C.; Rouaud, J.; Giraud, M. Airborne hyperspectral mapping of trees in an urban area. Int. J. Remote Sens. 2017, 38, 1277–1311. [Google Scholar] [CrossRef]

- Torres-Sánchez, J.; López-Granados, F.; Serrano, N.; Arquero, O.; Peña, J.M. High-throughput 3-D monitoring of agricultural-tree plantations with unmanned aerial vehicle (UAV) technology. PLoS ONE 2015, 10, e0130479. [Google Scholar] [CrossRef]

- Kim, C.; Hong, S. Identification of tree species from high resolution satellite imagery by using crown parameters. Presented at the SPIE—The International Society for Optical Engineering 2008, Cardiff, Wales, UK, 15–18 September 2008. [Google Scholar] [CrossRef]

- Molinier, M.; Astola, H. Feature selection for tree species identification in very high resolution satellite images. In Proceedings of the 2011 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Vancouver, BC, Canada, 24–29 July 2011; pp. 4461–4464. [Google Scholar]

- Arockiaraj, S.; Kumar, A.; Hoda, N.; Jeyaseelan, A.T. Identification and quantification of tree species in open mixed forests using high resolution QuickBird satellite imagery. J. Trop. For. Environ. 2015, 5. [Google Scholar] [CrossRef]

- Caughlin, T.T.; Rifai, S.W.; Graves, S.J.; Asner, G.P.; Bohlman, S.A. Integrating LiDAR-derived tree height and Landsat satellite reflectance to estimate forest regrowth in a tropical agricultural landscape. Remote Sens. Ecol. Conserv. 2016, 2, 190–203. [Google Scholar] [CrossRef] [Green Version]

- Hawryło, P.; Wezyk, P. Predicting growing stock volume of Scots pine stands using Sentinel-2 satellite imagery and airborne image-derived point clouds. Forests 2018, 9, 274. [Google Scholar] [CrossRef]

- Vierling, L.A.; Vierling, K.T.; Adam, P.; Hudak, A.T. Using satellite and airborne LiDAR to model woodpecker habitat occupancy at the landscape scale. PLoS ONE 2013, 8, e80988. [Google Scholar] [CrossRef] [PubMed]

- González-Audícana, M.; Saleta, J.L.; Catalán, R.G.; García, R. Fusion of multispectral and panchromatic images using improved IHS and PCA mergers based on wavelet decomposition. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1291–1299. [Google Scholar] [CrossRef] [Green Version]

- Otazu, X.; González-Audícana, M.; Fors, O.; Núñez, J. Introduction of sensor spectral response into image fusion methods. Application to wavelet-based methods. IEEE Trans. Geosci. Remote Sens. 2005, 43, 2376–2385. [Google Scholar] [CrossRef] [Green Version]

- Ma, L.; Li, M.; Ma, X.; Cheng, L.; Du, P.; Liu, Y. A review of supervised object-based land-cover image classification. ISPRS J. Photogramm. Remote Sens. 2017, 130, 277–293. [Google Scholar] [CrossRef]

- Smits, P.C.; Dellepiane, S.G.; Schowengerdt, R.A. Quality assessment of image classification algorithms for land-cover mapping: A review and a proposal for a cost-based approach. Int. J. Remote Sens. 1999, 20, 1461–1486. [Google Scholar] [CrossRef]

- Immitzer, M.; Atzberger, C.; Koukal, T. Tree species classification with random forest using very high spatial resolution 8-band WorldView-2 satellite data. Remote Sens. 2012, 4, 2661–2693. [Google Scholar] [CrossRef]

- Whiteside, T.G.; Boggs, G.S.; Maier, S.W. Comparing object-based and pixel-based classifications for mapping savannas. Int. J. Appl. Earth Obs. Geoinf. 2011, 13, 884–893. [Google Scholar] [CrossRef]

- Funck, J.W.; Zhong, Y.; Butler, D.A.; Brunner, C.C.; Forrer, J.B. Image segmentation algorithms applied to wood defect detection. Comput. Electron. Agric. 2003, 41, 157–179. [Google Scholar] [CrossRef]

- Yu, Q.; Gong, P.; Clinton, N.; Biging, G.; Kelly, M.; Schirokauer, D. Object-based detailed vegetation classification with airborne high spatial resolution remote sensing imagery. Photogramm. Eng. Remote Sens. 2006, 72, 799–811. [Google Scholar] [CrossRef]

- Kettig, R.L.; Landgrebe, D.A. Classification of multispectral image data by extraction and classification of homogeneous objects. IEEE Trans. Geosci. Electron. 1976, 14, 19–26. [Google Scholar] [CrossRef]

- Hulet, A.; Roundy, B.A.; Petersen, S.L.; Jensen, R.R.; Bunting, S.C. An object-based image analysis of pinyon and juniper woodlands treated to reduce fuels. Environ. Manag. 2014, 53, 660–671. [Google Scholar] [CrossRef] [PubMed]

- MacLean, M.G.; Congalton, R.G. Using object-oriented classification to map forest community types. In Proceedings of the American Society for Photogrammetry and Remote Sensing Annual Conference, Milwaukee, WI, USA, 1–4 May 2011. [Google Scholar]

- Jiménez-Brenes, F.M.; López-Granados, F.; Castro, A.I.; Torres-Sánchez, J.; Serrano, N.; Peña, J.M. Quantifying pruning impacts on olive tree architecture and annual canopy growth by using UAV-based 3D modelling. Plant Methods 2017, 13, 55. [Google Scholar] [CrossRef] [PubMed]

- International Olive Council (IOC). Olive Growing and Nursery Production. Available online: http://www.internationaloliveoil.org/projects/paginas/Section-a.htm (accessed on 26 November 2018).

- Ayerza, R.; Steven Sibbett, G. Thermal adaptability of olive (Olea europaea L.) to the arid Chaco of Argentina. Agric. Ecosyst. Environ. 2001, 84, 277–285. [Google Scholar] [CrossRef]

- DigitalGlobe. Information Products: Standard Imagery. Available online: https://dg-cms-uploads-production.s3.amazonaws.com/uploads/document/file/21/Standard_Imagery_DS_10-7-16.pdf (accessed on 20 November 2018).

- Song, C.; Woodcock, C.E.; Seto, K.C.; Lenney, M.P.; Macomber, S.A. Classification and change detection using Landsat TM data: When and how to correct atmospheric effects? Remote Sens. Environ. 2001, 75, 230–244. [Google Scholar] [CrossRef]

- Gonzalo, C.; Lillo-Saavedra, M. A directed search algorithm for setting the spectral–spatial quality trade-off of fused images by the wavelet à trous method. Can. J. Remote Sens. 2008, 34, 367–375. [Google Scholar] [CrossRef]

- Ranchin, T.; Wald, L. Fusion of high spatial and spectral resolution images: The ARSIS concept and its implementation. Photogramm. Eng. Remote Sens. 2000, 66, 49–61. [Google Scholar]

- Lillo-Saavedra, M.; Gonzalo, C. Spectral or spatial quality for fused satellite imagery? A trade-off solution using the wavelet à trous algorithm. Int. J. Remote Sens. 2006, 27, 1453–1464. [Google Scholar] [CrossRef]

- Baatz, M.; Schäpe, A. Multiresolution segmentation: An optimization approach for high quality multi-scale image segmentation. In Proceedings of the 12th Symposium for Applied Geographic Information Processing (Angewandte Geographische Informationsverarbeitung XII) AGIT 2000, Salzburg, Austria, 5–7 July 2000. [Google Scholar]

- Rouse, J.W., Jr.; Haas, R.; Schell, J.; Deering, D. Monitoring vegetation systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 351, 309–317. [Google Scholar]

- Roujean, J.L.; Breon, F.M. Estimating PAR absorbed by vegetation from bidirectional reflectance measurements. Remote Sens. Environ. 1995, 51, 375–384. [Google Scholar] [CrossRef]

- Hodgson, M.E. Reducing the computational requirements of the minimum-distance classifier. Remote Sens. Environ. 1988, 25, 117–128. [Google Scholar] [CrossRef]

- Hecker, C.; Van Der Meijde, M.; Van Der Werff, H.; Van Der Meer, F.D. Assessing the influence of reference spectra on synthetic SAM classification results. IEEE Trans. Geosci. Remote Sens. 2008, 46, 4162–4172. [Google Scholar] [CrossRef]

- Strahler, A.H. The use of prior probabilities in maximum likelihood classification of remotely sensed data. Remote Sens. Environ. 1980, 10, 135–163. [Google Scholar] [CrossRef]

- Quinlan, R. C4.5: Programs for Machine Learning; Morgan Kaufmann: San Mateo, CA, USA, 1993. [Google Scholar]

- Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 35–46. [Google Scholar] [CrossRef]

- Rogan, J.; Franklin, J.; Roberts, D.A. A comparison of methods for monitoring multitemporal vegetation change using thematic mapper imagery. Remote Sens. Environ. 2002, 80, 143–156. [Google Scholar] [CrossRef]

- Wald, L. Data Fusion. Definition and Architectures- Fusion of Images of Different Spatial Resolutions; Presses de l’Ecole, Ecole des Mines de Paris: Paris, France, 2002; p. 200. ISBN 2-911762-38-X. [Google Scholar]

- Gonzalo-Martín, C.; Lillo-Saavedra, M. Quickbird image fusion by a multirresolution-multidirectional joint image representation. IEEE Lat. Am. Trans. 2007, 5, 32–37. [Google Scholar] [CrossRef]

- Foody, G.M. Status of land cover classification accuracy assessment. Remote Sens. Environ. 2002, 80, 185–201. [Google Scholar] [CrossRef]

- Monserud, R.A.; Leemans, R. Comparing global vegetation maps with the kappa statistic. Ecol. Model. 1992, 62, 275–293. [Google Scholar] [CrossRef]

- Pérez-Ortiz, M.; Gutiérrez, P.A.; Peña, J.M.; Torres-Sánchez, J.; Hervás-Martínez, C.; López-Granados, F. An experimental comparison for the identification of weeds in sunflower crops via unmanned aerial vehicles and object-based analysis. Lect. Notes Comput. Sci. 2015, 9094, 252–262. [Google Scholar] [CrossRef]

- Castillejo-González, I.L.; Peña-Barragán, J.M.; Jurado-Expósito, M.; Mesas-Carrascosa, F.J.; López-Granados, F. Evaluation of pixel- and object-based approaches for mapping wild oat (Avena sterilis) weed patches in wheat fields using QuickBird imagery for site-specific management. Eur. J. Agron. 2014, 59, 57–66. [Google Scholar] [CrossRef]

- Brodley, C.E.; Friedl, M.A. Decision tree classification of land cover from remotely sensed data. Remote Sens. Environ. 1997, 61, 399–409. [Google Scholar] [CrossRef]

- Castillejo-González, I.L.; López-Granados, F.; García-Ferrer, A.; Peña-Barragán, J.M.; Jurado-Expósito, M.; de la Orden, M.S.; González-Audicana, M. Object- and pixel-based analysis for mapping crops and their agro-environmental associated measures using QuickBird imagery. Comput. Electron. Agric. 2009, 68, 207–215. [Google Scholar] [CrossRef]

- García Torres, L.; Peña-Barragán, J.M.; López-Granados, F.; Jurado-Expósito, M.; Fernández-Escobar, R. Automatic assessment of agro-environmental indicators from remotely sensed images of tree orchards and its evaluation using olive plantations. Comput. Electron. Agric. 2008, 61, 179–191. [Google Scholar] [CrossRef] [Green Version]

- Johnson, B.A.; Tateishi, R.; Hoan, N.T. Satellite image pansharpening using a hybrid approach for object-based image analysis. ISPRS Int. J. Geo-Inf. 2012, 1, 228–241. [Google Scholar] [CrossRef]

| Categories | Features | Brief Description |
|---|---|---|
| Spectral | Mean | Mean of the intensity values of all pixels forming an image object |
| Spectral | NDVI | Normalized Difference Vegetation Index [48] |
| Spectral | RDVI | Renormalized Difference Vegetation Index [49] |
| Shape | Area | Number of pixels forming an image object |
| Shape | Asymmetry | Relative length of an image object compared to a regular ellipse polygon |
| Shape | Border index | Ratio between the border lengths of the image object and the smallest enclosing rectangle |
| Shape | Length | Multiplication between the number of pixels and the length-to-width ratio of an image object |
| Shape | Width | Ratio between the number of pixels and the length-to-width ratio of an image object |
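
The two vegetation indices in the table above can be illustrated with a minimal sketch. It assumes the standard definitions NDVI = (NIR − R)/(NIR + R) and RDVI = (NIR − R)/√(NIR + R); the reflectance values and the helper names (`ndvi`, `rdvi`, `object_mean`) are hypothetical and are not taken from the article.

```python
import math


def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index for one pixel."""
    total = nir + red
    # Guard against division by zero on empty (zero-reflectance) pixels.
    return (nir - red) / total if total != 0 else 0.0


def rdvi(nir: float, red: float) -> float:
    """Renormalized Difference Vegetation Index for one pixel."""
    total = nir + red
    return (nir - red) / math.sqrt(total) if total > 0 else 0.0


def object_mean(values: list[float]) -> float:
    """Mean of a per-pixel value over all pixels forming one image object."""
    return sum(values) / len(values)


# Hypothetical (NIR, red) reflectances for the pixels of a single image object.
pixels = [(0.45, 0.08), (0.50, 0.10), (0.40, 0.12)]
object_ndvi = object_mean([ndvi(n, r) for n, r in pixels])
object_rdvi = object_mean([rdvi(n, r) for n, r in pixels])
print(f"object NDVI = {object_ndvi:.3f}, object RDVI = {object_rdvi:.3f}")
```

In an object-based workflow, such per-object means would be computed for every segment and then passed to the classifier alongside the shape features.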

| Pansharpen Weight (B-G-R-NIR) | Spectral ERGAS | Spatial ERGAS |
|---|---|---|
| 1-1-1-1 | 0.72 | 1.13 |
| 1-1-5-5 | 1.84 | 1.73 |
| 1-1-1-10 | 1.83 | 1.86 |
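
As a rough illustration of how an ERGAS value such as those in the table above is obtained, the sketch below assumes the commonly used definition ERGAS = 100 · (h/l) · √((1/N) Σ RMSE_k² / M_k²), where h/l is the ratio of panchromatic to multispectral pixel size, RMSE_k is the root mean square error of band k, and M_k is the mean of the reference band. The function name, the toy pixel values, and the 0.25 ratio are assumptions for illustration; the article's exact implementation is not shown in this section. Under this reading, a spatial variant would use the panchromatic image as the reference instead of the multispectral bands.

```python
import math


def ergas(reference_bands, fused_bands, ratio):
    """ERGAS between reference and fused bands (lower means closer agreement).

    reference_bands, fused_bands: lists of bands, each a flat list of pixel values.
    ratio: high-resolution pixel size divided by low-resolution pixel size (h/l).
    """
    terms = []
    for ref, fus in zip(reference_bands, fused_bands):
        n = len(ref)
        # Mean squared error of this band (RMSE squared).
        mse = sum((r - f) ** 2 for r, f in zip(ref, fus)) / n
        mean_ref = sum(ref) / n
        terms.append(mse / mean_ref ** 2)
    return 100.0 * ratio * math.sqrt(sum(terms) / len(terms))


# Hypothetical one-band example with four pixels and a 1:4 resolution ratio.
reference = [[10.0, 10.0, 10.0, 10.0]]
fused = [[11.0, 9.0, 11.0, 9.0]]
print(ergas(reference, fused, ratio=0.25))
```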

| Field | Pansharpen Weight (B-G-R-NIR) | Scale Parameter | Colour | Shape | Compactness | Smoothness |
|---|---|---|---|---|---|---|
| A | 1-1-1-1 | 12 | 0.6 | 0.4 | 0.8 | 0.2 |
| A | 1-1-5-5 | 20 | 0.7 | 0.3 | 0.8 | 0.2 |
| A | 1-1-1-10 | 20 | 0.7 | 0.3 | 0.8 | 0.2 |
| B | 1-1-1-1 | 15 | 0.7 | 0.3 | 0.8 | 0.2 |
| B | 1-1-5-5 | 25 | 0.7 | 0.3 | 0.8 | 0.2 |
| B | 1-1-1-10 | 25 | 0.5 | 0.5 | 0.8 | 0.2 |
| C | 1-1-1-1 | 15 | 0.6 | 0.4 | 0.8 | 0.2 |
| C | 1-1-5-5 | 25 | 0.7 | 0.3 | 0.8 | 0.2 |
| C | 1-1-1-10 | 17 | 0.6 | 0.4 | 0.8 | 0.2 |
| D | 1-1-1-1 | 14 | 0.6 | 0.4 | 0.8 | 0.2 |
| D | 1-1-5-5 | 14 | 0.5 | 0.5 | 0.8 | 0.2 |
| D | 1-1-1-10 | 14 | 0.5 | 0.5 | 0.8 | 0.2 |
| E | 1-1-1-1 | 12 | 0.6 | 0.4 | 0.8 | 0.2 |
| E | 1-1-5-5 | 19 | 0.7 | 0.3 | 0.8 | 0.2 |
| E | 1-1-1-10 | 22 | 0.7 | 0.3 | 0.8 | 0.2 |

| Field | Image (B-G-R-NIR weights) | Pixel-Based MD OA | Pixel-Based MD K | Pixel-Based SAM OA | Pixel-Based SAM K | Pixel-Based ML OA | Pixel-Based ML K | Pixel-Based DT OA | Pixel-Based DT K | Object-Based MD OA | Object-Based MD K | Object-Based SAM OA | Object-Based SAM K | Object-Based ML OA | Object-Based ML K | Object-Based DT OA | Object-Based DT K |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A | 1-1-1-1 | 94.4 | 0.91 | 92.6 | 0.88 | 95.0 | 0.92 | 91.7 | 0.86 | 96.2 | 0.93 | 96.6 | 0.94 | 97.8 | 0.96 | 94.9 | 0.91 |
| A | 1-1-5-5 | 91.7 | 0.86 | 90.8 | 0.84 | 94.4 | 0.91 | 98.7 | 0.97 | 96.4 | 0.94 | 94.5 | 0.91 | 97.9 | 0.96 | 98.9 | 0.98 |
| A | 1-1-1-10 | 89.4 | 0.82 | 88.0 | 0.79 | 92.8 | 0.87 | 98.6 | 0.98 | 91.7 | 0.86 | 95.7 | 0.93 | 98.8 | 0.98 | 98.7 | 0.98 |
| B | 1-1-1-1 | 91.3 | 0.83 | 94.2 | 0.89 | 98.7 | 0.97 | 95.7 | 0.91 | 88.8 | 0.78 | 91.3 | 0.82 | 99.1 | 0.98 | 96.9 | 0.95 |
| B | 1-1-5-5 | 95.2 | 0.91 | 95.4 | 0.91 | 98.7 | 0.98 | 92.1 | 0.89 | 94.6 | 0.89 | 97.3 | 0.94 | 99.3 | 0.99 | 99.3 | 0.98 |
| B | 1-1-1-10 | 95.7 | 0.86 | 86.5 | 0.73 | 97.5 | 0.95 | 94.3 | 0.90 | 94.1 | 0.88 | 77.9 | 0.59 | 98.7 | 0.97 | 98.5 | 0.97 |
| C | 1-1-1-1 | 90.4 | 0.81 | 93.4 | 0.87 | 97.7 | 0.95 | 96.5 | 0.94 | 88.8 | 0.78 | 88.4 | 0.77 | 97.5 | 0.95 | 97.0 | 0.94 |
| C | 1-1-5-5 | 95.8 | 0.92 | 93.1 | 0.86 | 97.7 | 0.95 | 92.4 | 0.89 | 95.6 | 0.91 | 96.5 | 0.93 | 97.9 | 0.96 | 98.7 | 0.98 |
| C | 1-1-1-10 | 95.5 | 0.91 | 85.3 | 0.71 | 96.6 | 0.93 | 94.1 | 0.90 | 94.9 | 0.90 | 64.9 | 0.31 | 98.5 | 0.97 | 98.6 | 0.97 |
| D | 1-1-1-1 | 69.7 | 0.39 | 75.4 | 0.51 | 88.1 | 0.76 | 84.8 | 0.80 | 78.9 | 0.58 | 86.1 | 0.72 | 79.7 | 0.59 | 87.2 | 0.82 |
| D | 1-1-5-5 | 87.5 | 0.75 | 87.9 | 0.76 | 94.2 | 0.88 | 88.5 | 0.84 | 87.1 | 0.74 | 85.6 | 0.71 | 88.4 | 0.77 | 98.3 | 0.96 |
| D | 1-1-1-10 | 89.5 | 0.79 | 85.3 | 0.71 | 92.3 | 0.85 | 87.3 | 0.83 | 85.7 | 0.72 | 88.0 | 0.76 | 91.6 | 0.83 | 98.7 | 0.97 |
| E | 1-1-1-1 | 77.3 | 0.55 | 76.7 | 0.54 | 86.7 | 0.73 | 86.8 | 0.83 | 82.0 | 0.64 | 79.2 | 0.59 | 89.4 | 0.79 | 89.0 | 0.84 |
| E | 1-1-5-5 | 93.4 | 0.87 | 91.5 | 0.83 | 93.0 | 0.86 | 90.7 | 0.89 | 97.1 | 0.94 | 96.8 | 0.94 | 97.5 | 0.95 | 97.9 | 0.96 |
| E | 1-1-1-10 | 95.2 | 0.91 | 88.1 | 0.76 | 89.9 | 0.80 | 88.8 | 0.85 | 96.1 | 0.92 | 82.1 | 0.64 | 97.3 | 0.95 | 97.1 | 0.95 |
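
The OA and K columns in the table above are read here as overall accuracy and the kappa coefficient, both derived from a confusion matrix. The sketch below shows one way to compute them; the two-class matrix (olive versus non-olive) holds hypothetical counts, not data from the article, and the function names are illustrative.

```python
def overall_accuracy(cm):
    """Fraction of samples on the diagonal of a square confusion matrix."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def kappa(cm):
    """Cohen's kappa: agreement corrected for chance agreement."""
    size = len(cm)
    total = sum(sum(row) for row in cm)
    observed = sum(cm[i][i] for i in range(size)) / total
    row_totals = [sum(cm[i]) for i in range(size)]
    col_totals = [sum(cm[i][j] for i in range(size)) for j in range(size)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    if expected == 1.0:
        # Degenerate case: every sample falls in a single class.
        return float("nan")
    return (observed - expected) / (1.0 - expected)


# Hypothetical counts: rows = reference class, columns = classified class.
confusion = [
    [45, 5],   # olive
    [3, 47],   # non-olive
]
print(f"OA = {100 * overall_accuracy(confusion):.1f}%, K = {kappa(confusion):.2f}")
```

Because kappa discounts agreement expected by chance, it can fall well below OA when class proportions are unbalanced, which is consistent with rows in the table where a moderate OA is paired with a much lower K.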

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Castillejo-González, I.L. Mapping of Olive Trees Using Pansharpened QuickBird Images: An Evaluation of Pixel- and Object-Based Analyses. Agronomy 2018, 8, 288. https://doi.org/10.3390/agronomy8120288