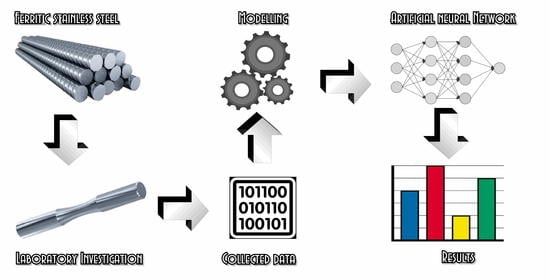

Modeling the Chemical Composition of Ferritic Stainless Steels with the Use of Artificial Neural Networks

Abstract

1. Introduction

2. Materials and Methods

- Carbon (C);

- Manganese (Mn);

- Silicon (Si);

- Phosphorus (P);

- Sulphur (S);

- Chromium (Cr);

- Nickel (Ni);

- Molybdenum (Mo);

- Copper (Cu);

- Aluminum (Al).

- Yield strength (Rp0.2);

- Tensile strength (Rm);

- Relative elongation (A);

- Relative area reduction (Z);

- Impact strength (KCU2);

- Brinell hardness (HB).

- Radial basis functions (RBF);

- General regression neural network (GRNN);

- Multi-layer perceptron (MLP).

- Error backpropagation;

- Conjugate gradient;

- Quasi-Newton;

- Levenberg–Marquardt;

- Fast propagation;

- Delta-bar-delta.

- Mean absolute error (MAE);

- Mean absolute percentage error (MAPE);

- $n$: size of the set;

- $y_i$: i-th measured value;

- $\hat{y}_i$: i-th computed value.
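The two error measures listed above can be sketched in a few lines of Python. This is a minimal illustration, not the author's implementation. It assumes the standard textbook definitions, MAE = (1/n) Σ |y_i − ŷ_i| and MAPE = (100%/n) Σ |y_i − ŷ_i| / |y_i|, where y_i is the i-th measured value and ŷ_i the i-th computed value. The symbol names, the function names, and the sample numbers are assumptions introduced here for illustration.

```python
from typing import Sequence


def _check(measured: Sequence[float], computed: Sequence[float]) -> int:
    """Validate the two series and return n, the size of the set."""
    if len(measured) == 0 or len(measured) != len(computed):
        raise ValueError("inputs must be non-empty and of equal length")
    return len(measured)


def mean_absolute_error(measured: Sequence[float], computed: Sequence[float]) -> float:
    """MAE: average absolute difference, in the units of the measured quantity."""
    n = _check(measured, computed)
    return sum(abs(y - y_hat) for y, y_hat in zip(measured, computed)) / n


def mean_absolute_percentage_error(measured: Sequence[float], computed: Sequence[float]) -> float:
    """MAPE: average absolute difference relative to the measured value, in percent."""
    n = _check(measured, computed)
    if any(y == 0 for y in measured):
        raise ValueError("MAPE is undefined when a measured value is zero")
    return 100.0 * sum(abs(y - y_hat) / abs(y) for y, y_hat in zip(measured, computed)) / n


# Hypothetical chromium contents in wt.% (illustrative only, not data from the paper).
measured = [16.0, 17.0, 18.0]
computed = [16.4, 16.5, 18.0]

mae = mean_absolute_error(measured, computed)
mape = mean_absolute_percentage_error(measured, computed)
print(f"MAE  = {mae:.3f} wt.%")
print(f"MAPE = {mape:.2f} %")
```

Because MAPE divides by the measured value, elements present only in very small concentrations can show a large MAPE even when the MAE is small, which is worth keeping in mind when comparing the two measures across elements.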

3. Results

4. Discussion

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Range of mechanical properties | Rp0.2 (MPa) | Rm (MPa) | A (%) | Z (%) | KCU2 (J/mm2) | HB |
|---|---|---|---|---|---|---|
| minimum | 208 | 369 | 3 | 15 | 14 | 111 |
| maximum | 920 | 970 | 65 | 78 | 348 | 331 |

| Chemical element | RBF: AR | RBF: MAE | RBF: MAPE | RBF: R | MLP: AR | MLP: MAE | MLP: MAPE | MLP: R | GRNN: AR | GRNN: MAE | GRNN: MAPE | GRNN: R |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| C | 5-33-1 | 0.50 | 23.3% | 0.92 | 6-7-1 | 0.57 | 21.1% | 0.92 | 6-1636-2-1 | 0.01 | 4.6% | 0.95 |
| Mn | 6-54-1 | 0.11 | 14.7% | 0.89 | 5-15-5-1 | 0.12 | 15.5% | 0.86 | 6-1636-2-1 | 0.05 | 5.8% | 0.95 |
| Si | 5-24-1 | 0.03 | 11.5% | 0.54 | 6-13-1 | 0.03 | 11.4% | 0.57 | 6-1636-2-1 | 0.01 | 5.6% | 0.83 |
| Cr | 5-12-1 | 0.78 | 2.7% | 0.61 | 6-15-1 | 0.65 | 2.4% | 0.72 | 6-1636-2-1 | 0.3 | 1.4% | 0.90 |
| Ni | 6-30-1 | 0.33 | 22.9% | 0.90 | 6-10-1 | 0.31 | 18.8% | 0.89 | 6-1636-2-1 | 0.18 | 9.9% | 0.92 |
| Mo | 6-28-1 | 0.33 | 39.0% | 0.66 | 6-9-1 | 0.34 | 36.9% | 0.59 | 6-1636-2-1 | 0.06 | 6.4% | 0.95 |
| Cu | 6-57-1 | 0.09 | 20.8% | 0.61 | 6-23-2-1 | 0.10 | 22.3% | 0.57 | 6-1636-2-1 | 0.04 | 8.4% | 0.86 |
| Al | 3-18-1 | 0.05 | 36.6% | 0.44 | 3-2-1 | 0.05 | 36.9% | 0.43 | 6-1636-2-1 | 0.04 | 29.2% | 0.50 |
| P | 5-9-1 | 0.04 | 35.4% | 0.52 | 6-15-1 | 0.02 | 31.1% | 0.72 | 5-1636-2-1 | 0.03 | 33.3% | 0.68 |
| S | 2-10-1 | 0.06 | 39.4% | 0.58 | 5-2-1 | 0.05 | 37.2% | 0.63 | 4-1636-2-1 | 0.04 | 34.8% | 0.66 |
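The GRNN entries in the table share the structure 6-1636-2-1. One plausible reading, stated here as an assumption rather than something the table confirms, is six inputs (the six mechanical properties), 1636 pattern neurons (one per training case), two summation neurons (a weighted sum and a sum of weights), and one output. The sketch below shows how a general regression neural network of that shape produces a prediction. It is a generic kernel-regression illustration in plain Python, not the software used in the study. The function name `grnn_predict`, the smoothing parameter `sigma`, and the toy data are all hypothetical.

```python
import math
from typing import Sequence


def grnn_predict(
    x: Sequence[float],
    train_x: Sequence[Sequence[float]],
    train_y: Sequence[float],
    sigma: float = 0.5,
) -> float:
    """Predict one output for input vector x with a GRNN-style kernel regression.

    Each training case acts as one pattern neuron with a Gaussian response.
    The two summation neurons accumulate the weighted targets and the weights,
    and the output neuron divides one by the other.
    """
    if len(train_x) == 0 or len(train_x) != len(train_y):
        raise ValueError("training inputs and targets must be non-empty and aligned")
    if sigma <= 0:
        raise ValueError("sigma must be positive")

    numerator = 0.0    # summation neuron 1: sum of weight * target
    denominator = 0.0  # summation neuron 2: sum of weights
    for pattern, target in zip(train_x, train_y):
        sq_dist = sum((a - b) ** 2 for a, b in zip(x, pattern))
        weight = math.exp(-sq_dist / (2.0 * sigma ** 2))
        numerator += weight * target
        denominator += weight

    if denominator == 0.0:
        raise ArithmeticError("all kernel weights underflowed; try a larger sigma")
    return numerator / denominator


# Toy data (hypothetical): two training cases with six normalized inputs each.
train_x = [[0.0] * 6, [1.0] * 6]
train_y = [10.0, 20.0]

midpoint = grnn_predict([0.5] * 6, train_x, train_y)      # equally far from both cases
near_first = grnn_predict([0.0] * 6, train_x, train_y)    # coincides with the first case
print(midpoint, near_first)
```

In this formulation there are no iteratively trained weights: the pattern layer simply stores the training cases, which would be consistent with a pattern-layer size equal to the number of cases. The only tunable quantity in the sketch is `sigma`, which controls how strongly nearby cases dominate the prediction.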

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Honysz, R. Modeling the Chemical Composition of Ferritic Stainless Steels with the Use of Artificial Neural Networks. Metals 2021, 11, 724. https://doi.org/10.3390/met11050724
