A Feature-Based Structural Measure: An Image Similarity Measure for Face Recognition

Abstract

Featured Application


1. Introduction

2. Background

3. Similarity Measures

3.1. Overview of SSIM: A Structural Similarity Measure

3.2. Overview of FSIM: A Feature Similarity Measure

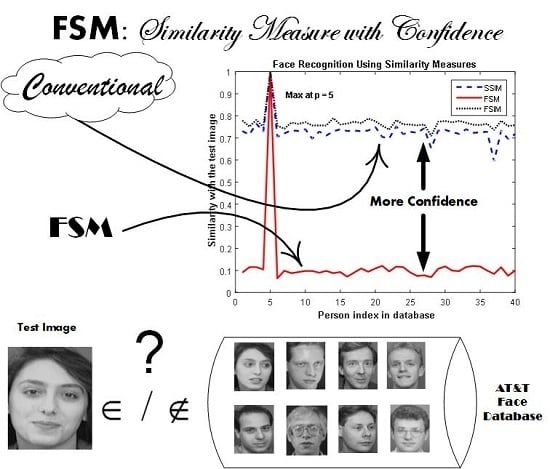

3.3. The Proposed Measure (FSM)

3.4. A Measure for Face Recognition Confidence

3.5. Discussion

4. Experimental Results and Performance

4.1. Image Database

- ORL face image database: the AT&T (American Telephone & Telegraph, Inc.; New York City, NY, USA) or ORL database is a well-known database that is widely used for testing face recognition systems. It consists of 40 individuals, each with 10 different images (poses). The images were taken with different levels of illumination, rotation, facial expression, and facial details such as glasses. Each image is 92 × 112 pixels, with 256 grey levels per pixel [21]. See Figure 1.
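The ORL images are distributed as binary greyscale PGM files (92 × 112 pixels, 8 bits per pixel). The following Python sketch, which is not part of the authors' method, shows one way to read such a file with the standard library alone and to enumerate the 40 × 10 = 400 images. The directory layout `s1/1.pgm` … `s40/10.pgm` and the helper names `parse_pgm` and `orl_image_paths` are assumptions made for illustration.

```python
import os


def parse_pgm(data: bytes):
    """Parse a binary (P5) PGM image with 8-bit samples.

    Returns (width, height, maxval, rows), where rows is a list of
    `height` lists, each holding `width` grey levels in 0..maxval.
    """
    tokens = []
    pos = 0
    # The header holds four whitespace-separated tokens (magic, width,
    # height, maxval); lines starting with '#' are comments.
    while len(tokens) < 4:
        while pos < len(data) and data[pos:pos + 1].isspace():
            pos += 1
        if pos >= len(data):
            raise ValueError("truncated PGM header")
        if data[pos:pos + 1] == b"#":
            while pos < len(data) and data[pos:pos + 1] != b"\n":
                pos += 1
            continue
        start = pos
        while pos < len(data) and not data[pos:pos + 1].isspace():
            pos += 1
        tokens.append(data[start:pos])
    if tokens[0] != b"P5":
        raise ValueError("not a binary PGM (P5) file")
    width, height, maxval = (int(t) for t in tokens[1:])
    if maxval > 255:
        raise ValueError("16-bit PGM samples are not handled in this sketch")
    pos += 1  # exactly one whitespace byte separates maxval from pixel data
    pixels = data[pos:pos + width * height]
    if len(pixels) < width * height:
        raise ValueError("truncated PGM pixel data")
    rows = [list(pixels[r * width:(r + 1) * width]) for r in range(height)]
    return width, height, maxval, rows


def orl_image_paths(root: str):
    """Map subject id (1..40) to its 10 image paths (assumed layout)."""
    return {
        subject: [os.path.join(root, f"s{subject}", f"{pose}.pgm")
                  for pose in range(1, 11)]
        for subject in range(1, 41)
    }
```

Reading the bytes of any one ORL file and passing them to `parse_pgm` would be expected to report a width of 92, a height of 112, and a maximum grey level of 255.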

- FEI face image database: the FEI (Brazilian) face database consists of 200 individuals, each with 14 different images (poses). The images were taken at the Artificial Intelligence Laboratory of FEI in Brazil; the subjects are students and staff at FEI, aged between 19 and 40 years, with distinct appearances, hairstyles, and adornments. The FEI images are in color, and each image is 640 × 480 pixels. In our experiments we used 700 images from the FEI database: 50 individuals with 14 poses each [22]. See Figure 2.
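The FEI subset arithmetic (50 individuals × 14 poses = 700 images) can be made explicit with a short Python sketch. The text does not say which 50 of the 200 individuals were chosen, so taking subjects 1 to 50 is an assumption, as is the file-naming pattern `{subject}-{pose:02d}.jpg`. Because the FEI images are in color while the ORL images are grey-level, the sketch also includes a per-pixel luma conversion with the ITU-R BT.601 weights; this is one common choice, and whether the authors converted the FEI images this way is not stated here. The helper names `fei_subset` and `rgb_to_grey` are hypothetical.

```python
def fei_subset(num_subjects: int = 50, num_poses: int = 14):
    """Return {subject: [file names]} for the first `num_subjects` FEI
    subjects, each with `num_poses` poses (naming pattern is assumed)."""
    return {
        subject: [f"{subject}-{pose:02d}.jpg" for pose in range(1, num_poses + 1)]
        for subject in range(1, num_subjects + 1)
    }


def rgb_to_grey(r: int, g: int, b: int) -> int:
    """Convert one 8-bit RGB pixel to an 8-bit grey level (BT.601 luma)."""
    return round(0.299 * r + 0.587 * g + 0.114 * b)


subset = fei_subset()
total = sum(len(files) for files in subset.values())
print(total)  # 700 = 50 individuals x 14 poses
```

Keeping the subject and pose counts as parameters makes it easy to check other subset sizes, for example the full 200 × 14 = 2800-image database.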

4.2. Testing

4.3. Results and Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Research Ethics

References

- Parmar, D.N.; Mehta, B.B. Face recognition methods & applications. arXiv, 2014; arXiv:1403.0485.

- Zhao, W.; Chellappa, R.; Phillips, P.J.; Rosenfeld, A. Face recognition: A literature survey. ACM Comput. Surv. (CSUR) 2003, 35, 399–458.

- Barrett, W.A. A survey of face recognition algorithms and testing results. In Proceedings of the Conference Record of the Thirty-First Asilomar Conference on Signals, Systems & Computers, Pacific Grove, CA, USA, 2–5 November 1997.

- Fromherz, T. Face Recognition: A Summary of 1995–1997; Technical Report ICSI TR-98-027; International Computer Science Institute: Berkeley, CA, USA, 1998.

- Wiskott, L.; Fellous, J.M.; Krüger, N.; Malsburg, C.V.D. Face recognition by elastic bunch graph matching. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 775–779.

- Turk, M.A.; Pentland, A.P. Face recognition using eigenfaces. In Proceedings of the CVPR’91 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 19–23 June 1991.

- Belkasim, S.O.; Shridhar, M.; Ahmadi, M. Pattern recognition with moment invariants: A comparative study and new results. Pattern Recognit. 1991, 24, 1117–1138.

- Taj-Eddin, I.A.; Afifi, M.; Korashy, M.; Hamdy, D.; Nasser, M.; Derbaz, S. A new compression technique for surveillance videos: Evaluation using new dataset. In Proceedings of the Sixth International Conference on Digital Information and Communication Technology and its Applications (DICTAP), Konya, Turkey, 21–23 July 2016.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Hashim, A.N.; Hussain, Z.M. Novel image-dependent quality assessment measures. J. Comput. Sci. 2014, 10, 1548–1560.

- Hu, Y.; Wang, Z. A similarity measure based on Hausdorff distance for human face recognition. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR 2006), Hong Kong, China, 20–24 August 2006.

- Xu, Y.; Yao, L.; Zhang, D.; Yang, J.Y. Improving the interest operator for face recognition. Exp. Syst. Appl. 2009, 36, 9719–9728.

- Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A feature similarity index for image quality assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

- Singh, C.; Sahan, A.M. Face recognition using complex wavelet moments. Opt. Laser Technol. 2013, 47, 256–267.

- Li, H.; Suen, C.Y. Robust face recognition based on dynamic rank representation. Pattern Recognit. 2016, 60, 13–24.

- Li, L.; Xu, C.; Tang, W.; Zhong, C. 3D face recognition by constructing deformation invariant image. Pattern Recognit. Lett. 2008, 29, 1596–1602.

- Marcolin, F.; Vezzetti, E. Novel descriptors for geometrical 3D face analysis. Multimedia Tools Appl. 2017, 76, 13805–13834.

- Moos, S.; Marcolin, F.; Tornincasa, S.; Vezzetti, E.; Violante, M.G.; Fracastoro, G.; Speranza, D.; Padula, F. Cleft lip pathology diagnosis and foetal landmark extraction via 3D geometrical analysis. Int. J. Interact. Des. Manuf. (IJIDeM) 2017, 11, 1–18.

- Hassan, A.F.; Hussain, Z.M.; Cai-lin, D. An Information-Theoretic Measure for Face Recognition: Comparison with Structural Similarity. Int. J. Adv. Res. Artif. Intell. 2014, 3, 7–13.

- Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698.

- AT&T Laboratories Cambridge. The Database of Faces. Available online: http://www.cl.cam.ac.uk/research/dtg/attarchive/facedatabase.html (accessed on 28 April 2017).

- Thomaz, C.E. (Caru). FEI Face Database. Available online: http://fei.edu.br/~cet/facedatabase.html (accessed on 15 May 2017).

- Hassan, A.F.; Cai-lin, D.; Hussain, Z.M. An information-theoretic image quality measure: Comparison with statistical similarity. J. Comput. Sci. 2014, 10, 2269–2283.

- Lajevardi, S.M.; Hussain, Z.M. Novel higher-order local autocorrelation-like feature extraction methodology for facial expression recognition. IET Image Process. 2010, 4, 114–119.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Shnain, N.A.; Hussain, Z.M.; Lu, S.F. A Feature-Based Structural Measure: An Image Similarity Measure for Face Recognition. Appl. Sci. 2017, 7, 786. https://doi.org/10.3390/app7080786
