3.2. Bias Impact Analysis for a Spinning Multi-Beam Laser Scanner

The objective of this section is to derive a mathematical formulation that shows the impact of biases in the mounting parameters on the coordinates of points along planar features with different orientations. This analysis can further aid the development of an optimal/minimal flight and target configuration for calibration. Note that planar features are used specifically for this analysis as they facilitate the observation of positional deformations in one direction at a time, i.e., the effect in the direction normal to the plane. For simplifying the bias impact analysis without any loss of generality, we make several assumptions. Firstly, the IMU is mounted on the UAV with its

X- and

Y-axes aligned along the starboard and flight line directions, respectively. The IMU is assumed to be perfectly vertical, i.e., the

Z-axis of the IMU body frame is assumed to be perfectly aligned with the vertical direction of the mapping frame. Also, we assume that the flight line directions are either from South-to-North (

) or from North-to-South (

). These assumptions facilitate the decision as to whether the impact is along/across the flight line and vertical directions. As a result, the rotation matrix

would be given by Equation (3), where the top and bottom signs are for S-N and N-S flight line directions, respectively. In order to generalize the analysis regardless of the orientation of the LiDAR unit relative to the IMU body frame, Equation (1) is slightly modified by introducing a virtual LiDAR unit frame,

, which is almost aligned with the IMU body frame. This facilitates the decision as to whether the impact is along/across the flight line and vertical directions. Moreover, the use of a virtual LiDAR unit frame also prevents gimbal lock in the mounting parameter estimation. This modification is implemented by expressing the term

in Equation (1) as:

, where

is defined according to the laser scanner unit alignment relative to the IMU. The modified LiDAR point positioning is given by Equation (4). The laser unit coordinate system for the LiDAR unit alignment on the UAV platform used in this system and the assumed IMU body frame coordinate system (with

X,

Y,

Z-axes along starboard, forward and up directions, respectively) are shown in

Figure 5.

Since the virtual LiDAR unit frame is almost aligned with the IMU body frame, it results in small values for the differential angular boresight parameters (

,

,

) relating the two frames. So, the matrix,

can be written as shown in Equation (5), using the small angle approximations. Here,

,

,

denote the rotation around the

X,

Y,

Z-axes of the IMU body frame (i.e., across flight, along flight and vertical directions), respectively. Hence, these parameters denote the boresight pitch, roll and heading angles, respectively. The point coordinates relative to the virtual LiDAR unit frame, as derived using the nominal value for

according to

Figure 5, are given by Equations (6a) and (6b) in terms of the flying height,

and scan angles

. These coordinates (

also denote the location of a point with respect to the virtual LiDAR unit frame, which is almost parallel to the IMU body frame. The schematic illustration of these symbolic notations are depicted in

Figure 6 for a UAV-based LiDAR system. Substituting Equations (5) and (6) in Equation (4), we get the revised form of the LiDAR point positioning equation, as given in Equation (7), where

,

,

are the lever-arm offset parameters of the LiDAR unit frame relative to the IMU body frame. Now, the impact of the presence of bias in the system mounting parameters can be analyzed by differentiating Equation (7) with respect to the system mounting parameters. This is given by Equation (8).

The bias impact can be analyzed thoroughly by isolating the terms in Equation (8) corresponding to the impact of bias in each of the mounting parameters for each of the mapping frame coordinates—

,

and

, representing the coordinates across flying direction, along flying direction and vertical direction, respectively—as given in

Table 1.

Table 1 can now be used to assess the impact of each bias for planar surfaces in different orientations—vertical planes parallel to flight direction, vertical planes perpendicular to flight direction and horizontal planes)—thus indicating the impact across flight direction, along flight direction and vertical direction, respectively.

Impact of Bias in Lever-arm component across the flying direction :

The introduced shift across the flying direction is flying direction dependent and does not depend on the location of the point in question relative to the virtual laser unit coordinate system. As a result, its impact will be visible in case of having vertical planes parallel to the flying direction scanned from two flight lines in opposite directions.

Impact of Bias in Lever-arm component along the flying direction :

The introduced shift along the flying direction is flying direction dependent and does not depend on the location of the point in question relative to the virtual laser unit coordinate system. Again, it would impact vertical planes perpendicular to the flying direction scanned from two flight lines in opposite directions.

Impact of Bias in Lever-arm component in the vertical direction :

The introduced shift in the vertical direction is flying direction independent and does not depend on the location of the point in question relative to the virtual laser unit coordinate system. As a result, the entire point cloud would be shifted in the vertical direction by the same amount. So, this bias would not affect planes at any orientation for any flight line configuration.

Impact of Bias in Boresight Pitch :

A bias in this component will cause shifts along the flying direction as well as in the vertical direction. The impact of boresight pitch bias along the flying direction is flying direction dependent and its magnitude depends on the height () of the point in question relative to the virtual laser unit coordinate system. This impact would be visible in case of a planar feature perpendicular to the flying direction being scanned by flight lines in opposite directions. The impact of boresight pitch bias in the vertical direction would be manifested in horizontal planes. The magnitude of this impact depends on the -coordinate of the point in question. So, this impact would be visible even in case of a single flight line capturing a horizontal planar feature as long as there is a significant variation in the -coordinate at a given location within such a plane, i.e., if the same portion of the plane is scanned by the laser unit while being at different locations.

Since a bias in lever arm also causes shifts along the flying direction, there is a need to decouple the impacts so as to estimate both these biases accurately. Due to the impact of boresight pitch bias in the vertical direction in addition to the impact along flying direction, it would aid in naturally decoupling and , provided there is a significant -coordinate variability. But, the -coordinate variation could be reduced depending on the nature of the targets used or the sensor configuration. In this case, the -coordinate variability is limited by the relatively narrow vertical FOV of the LiDAR unit (±10°), thus making it insufficient to eliminate the correlation. In such cases, there is a need to have a significant variation in the value of in order to decouple the impacts of and so as to estimate both these biases accurately. This can be achieved by one of the following ways:

Two different flying heights: The shift caused along the flight direction by the bias will vary depending on the flying height, whereas the shift due to the bias will be constant for any flying height. Thus, the two biases can be derived accurately using flight lines at different flying heights.

Variation in target height w.r.t. flying height: In case of flight lines at the same flying height, a variation in the height of points along a target primitive would result in varying shifts. The amount of variation required depends on the flying height, i.e., . So, the higher the value of for a given flying height, the better the estimation accuracy of and will be. A high variation in can be achieved either by having vertical planes at different heights or with a significant variation in the heights along given targets.

Impact of Bias in Boresight Roll :

A bias in this component (

) will cause shifts across the flying direction as well as in the vertical direction. The impact of this bias

across the flying direction is flying direction dependent and its magnitude depends on the height (

) of the point in question relative to the virtual laser unit coordinate system. This bias would impact vertical planes parallel to the flying direction scanned from two flight lines in opposite directions. The impact of this bias in

the vertical direction is flying direction dependent (since

-coordinates will change signs depending on the flying direction, as shown in

Figure 6) and its magnitude depends on the

-coordinate of the point in question. The resultant discrepancy in the

Z-coordinate on combining two tracks in the same and opposite directions are given by Equations (9) and (10), respectively, according to

Figure 7. Here,

denotes the lateral distance between the two tracks and

denotes the distance of the point in question from the line bisecting the lateral distance between the two flight lines. The analysis reveals that this bias would cause a discrepancy for horizontal planes scanned from two flight lines in the same direction depending on the lateral distance between the tracks. On the other hand, for two tracks in opposite directions, the discrepancy would depend on the lateral location of the planar patch of interest relative to the bisecting direction between the tracks.

Since a bias in lever arm also causes shifts across the flying direction, there is a need to decouple the impacts of and so as to estimate both these biases accurately. Due to the impact of boresight roll bias in the vertical direction in addition to the impact across flying direction, it would aid in naturally decoupling and , provided there are planar patches scanned from flight lines in the same direction with sufficient lateral separation and also, some planar patches located at a significantly high lateral distance from the flight lines. However, in the case of the unavailability of such planar patches located at a high lateral distance, the decoupling of the two parameters can be achieved by ensuring a significant variation in the value of . This can be achieved by one of the following ways:

Two different flying heights: The shift caused along the flying direction by the bias will vary depending on the flying height, whereas the shift due to the bias will be constant for any flying height. Thus, the two biases can be derived accurately using flight lines at different flying heights.

Variation in target height w.r.t. flying height: In case of flight lines at the same flying height, a variation in the height of points along a target primitive would result in varying shifts. The amount of variation required depends on the flying height, i.e., . So, the higher the value of for a given flying height, the better the estimation accuracy of and will be.

Impact of Bias in Boresight Heading :

A bias in this component will cause shifts across and along the flying direction. The impact of this bias across the flying direction is dependent on the -coordinate variability. So, this would cause a discrepancy for vertical planes parallel to the flying direction for a single track. Moreover, the discrepancy on combining two tracks in same or opposite directions would depend on the variability within the points comprising the planes.

The impact of this bias

along the flying direction is flying direction independent since the sign change of

-coordinate on flight direction change is nullified by the presence of dual sign in the term. Also, the magnitude of this impact is

-coordinate dependent. This bias would induce a discrepancy in case of vertical planes perpendicular to the flying direction scanned from two flight lines in the same/opposite directions depending on the lateral distance between the tracks, as given by Equations (11) and (12). For the UAV system used in this study, the impact along flying direction will be more pronounced than the impact across flying direction since the LiDAR unit is scanning with the laser beams rotating 360° around the

-axis, thus resulting in a high

-coordinate variability. However, the

-coordinate variability is limited by the total vertical FOV of ±10° of the Velodyne VLP-16 Puck Hi-Res unit.

Throughout the previous discussion, we dealt with a system where the LiDAR unit coordinate system is not aligned with IMU body frame but it was dealt with by using a virtual laser unit frame. However, the

X,

Y,

Z-axes of the IMU body frame were assumed to be aligned along the starboard, forward and up directions. For other generic situations where the IMU body frame is not aligned in such a manner, we can also account for that by introducing a virtual IMU body frame. For such cases, the LiDAR equation will be modified to result in Equation (13). Here,

is a fixed rotation depending on the alignment of the actual IMU body frame relative to the UAV vehicle frame. Hence, this modification renders the current bias impact analysis indifferent to the LiDAR unit and IMU body frame alignment within the platform.

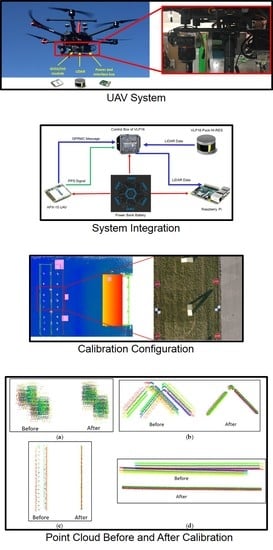

3.4. Calibration Strategy for UAV-Based LiDAR System

In this section, we propose a strategy to calibrate the mounting parameters of the LiDAR unit with respect to the onboard GNSS/INS unit using geometric tie features (e.g., planar and linear/cylindrical features). The conceptual basis for LiDAR system calibration is to minimize the discrepancies among conjugate linear and/or planar features obtained from different flight lines. Owing to the irregular distribution of LiDAR points, conjugate point pairs cannot be used since there is no accurate point-to-point correspondence. So, conjugate linear/cylindrical and planar features, such as building façades, ground patches, light poles and lane markers, are used and these can be directly extracted from overlapping areas within the flight lines. After collecting data from several flight lines, a 3D point cloud relative to a global reference frame will be derived using the GNSS/INS unit position and orientation and initial estimates for the mounting parameters. Then, conjugate features are identified and extracted from the reconstructed point cloud. Finally, an iterative LiDAR system calibration with weight modification [

18] is proposed to derive the mounting parameters based on the minimization of normal distance between conjugate features. However, conjugate feature extraction from several flight lines could be time-consuming and inefficient, especially when the initial estimates for mounting parameters used to reconstruct the 3D point cloud are considerably inaccurate. To facilitate automated identification of conjugate features in such cases, specifically designed calibration boards covered by highly reflective surfaces, that could be easily deployed and set up in outdoor environments, are used in this study. More specifically, various traffic signs (75 cm wide Stop signs, 90 cm × 60 cm Wrong Way signs and 60 cm × 60 cm checkerboard targets) are used as highly reflective boards. The highly reflective boards can be easily identified from intensity data, as shown in

Figure 8, where the points belonging to these boards exhibit higher intensity values compared to other LiDAR points. First, a pre-defined threshold is set to extract high-intensity points. To avoid the extraction of high-intensity points belonging to objects other than these boards, an approximate pre-set region is set as seed points for each board. Then, a distance-based region growing technique is adopted to group the high intensity board returns. Finally, a plane-fitting is done for these points and the points lying within a normal distance threshold from the best-fitting plane are extracted. Other planar features, such as ground patches or wall patches, can be extracted by defining two diagonally opposite corners and selecting the points lying within a buffer bounding box. The bounding box is constructed around the planar feature of interest by adding a buffer value (in

X,

Y and

Z directions) to the coordinates of diagonally opposite corners. Again, a plane-fitting is done for the points contained inside the box and the ones lying within a normal distance threshold from the best-fitting plane are extracted. Linear/cylindrical features can also be used for calibration and they are extracted by specifying the two end points for each feature. A buffer radius is set to define a cylinder around the linear feature of interest. Then, a line-fitting is done for the points lying within this cylindrical buffer and finally, the points that lie within a normal distance threshold from the best-fitting line are extracted. Note that the buffer values for linear/planar feature extraction are determined based on the accuracy of initial estimates of the mounting parameters.

The mounting parameters that are derived from calibration are the lever arm and boresight angles for the LiDAR unit. The lever arm for the LiDAR unit cannot be estimated in the calibration procedure since any change in will not introduce discrepancies among the different versions of the same feature captured from different flight lines. It would only result in a shift of the point cloud in the vertical direction as a whole. It is either manually measured or determined using vertical control (such as, horizontal planar patches with known elevation) and fixed during the calibration procedure.

Finally, the mounting parameters of each sensor are derived by minimizing the discrepancies between non-conjugate point pairings among conjugate features in overlapping flight lines. In case of a planar feature, the discrepancy arising from each non-conjugate point pair would consist of a non-random component lying along the planar surface. Hence, a modified weight is applied to the point pair so as to retain only the component of the discrepancy lying along the direction normal to the planar surface. Similarly, in case of a linear/cylindrical feature, a modified weight is applied so as to nullify the component of discrepancy along the direction of the feature. The modified weight is determined according to the direction of the planar/linear feature as obtained from the feature parameters derived by a plane/line fitting conducted on the points from the flight line that captures the most number of points belonging to the corresponding feature. A more detailed discussion regarding the theoretical basis for weight modification while using non-conjugate points along corresponding features can be found in Renaudin et al. (2011) [

18]. Finally, the mounting parameters for the laser unit can be derived by minimizing the discrepancies among the conjugate features arising from the above-mentioned pairs.

However, when the initial estimate of the mounting parameters is inaccurate, the estimated modified weight matrix would be imprecise which would affect the accuracy of the derived mounting parameters. Hence, this research proposes an iterative calibration procedure. Firstly, the discrepancy among extracted features is minimized to derive mounting parameters through the weight modification process. Then, the points along the extracted features are re-generated using the newly estimated mounting parameters and the discrepancy among conjugate features is minimized again using a newly defined modified weight matrix. The above steps are repeated until the change in the estimates of the mounting parameters is below a predefined threshold. In this study, these stopping criteria are enforced by monitoring the difference between the a-posteriori variance factors after two consecutive iterations of LSA and when the difference is less than 10−8, the adjustment is deemed complete. Since the a-posteriori variance factor indicates the average accuracy of calibration by quantifying the normal distance of points from best-fitting plane/line for all the extracted features, the threshold of 10−8 indicates a change of less than 0.01 µm in the overall normal distance, which can be definitely regarded as insignificant.