Haptic Hybrid Prototyping (HHP): An AR Application for Texture Evaluation with Semantic Content in Product Design

Abstract

1. Introduction

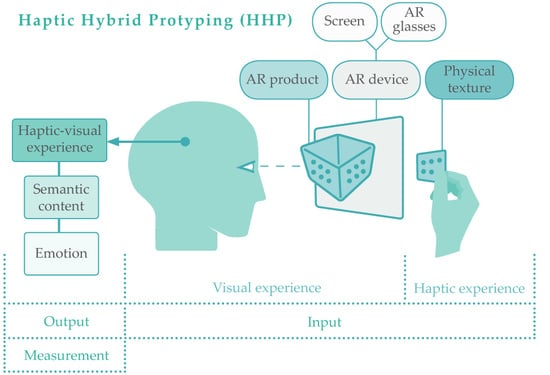

2. Haptic Hybrid Prototyping (HHP): Method Overview

3. Materials and Methods

3.1. Ethical Implications

3.2. Participants

3.3. Materials

3.4. Evaluation of Haptic-Visual Properties

3.5. Procedure

4. Results and Discussion

4.1. Analysis of Coincidence between Physical Prototypes and HHP

4.2. Analysis of the Application of Augmented Reality (AR) Technology as Part of the HHP Method

4.3. Sensory Experience and HHP

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Sample | AR Prototype |
|---|---|
| A-AR |  |
| B-AR |  |
| D-AR |  |


|  | Silky | Dirty | Abrasive | Rough | Soft | Sharp-Edge | Clean | Slippery | Adherent | Smooth | Σ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| C | 24 | 22 | 32 | 24 | 14 | 12 | 25 | 18 | 11 | 19 | 201 |
| C±1 | 19 | 19 | 18 | 26 | 24 | 24 | 18 | 24 | 30 | 14 | 216 |
| C±2 | 9 | 8 | 4 | 2 | 15 | 16 | 9 | 11 | 11 | 16 | 101 |
| C±3 | 4 | 6 | 1 | 4 | 3 | 4 | 3 | 3 | 4 | 4 | 36 |
| C±4 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 3 | 6 |

|  | Silky | Dirty | Abrasive | Rough | Soft | Sharp-Edge | Clean | Slippery | Adherent | Smooth | Σ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| C | 31 | 31 | 32 | 23 | 16 | 8 | 33 | 18 | 15 | 17 | 224 |
| C±1 | 18 | 18 | 15 | 23 | 33 | 25 | 12 | 29 | 27 | 28 | 228 |
| C±2 | 7 | 5 | 9 | 7 | 5 | 15 | 9 | 7 | 9 | 7 | 80 |
| C±3 | 0 | 1 | 0 | 3 | 2 | 7 | 2 | 2 | 4 | 4 | 25 |
| C±4 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 3 |

|  | Silky | Dirty | Abrasive | Rough | Clean | Slippery | Adherent | Σ | % |
|---|---|---|---|---|---|---|---|---|---|
| C | 55 | 53 | 64 | 47 | 58 | 36 | 26 | 339 | 43.2% |
| C±1 | 37 | 37 | 33 | 49 | 30 | 53 | 57 | 296 | 37.8% |
| C±2 | 16 | 13 | 13 | 9 | 18 | 18 | 20 | 107 | 13.6% |
| C±3 | 4 | 7 | 1 | 7 | 5 | 5 | 8 | 37 | 4.7% |
| C±4 | 0 | 2 | 1 | 0 | 1 | 0 | 1 | 5 | 0.6% |
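The % column in the table above is consistent with each row's Σ divided by the sum of all Σ values (784). The sketch below reproduces that arithmetic from the tabulated row totals. It is an illustration, not the authors' own procedure: the function name `coincidence_shares` is hypothetical, and reading "C" as exact coincidence and "C±k" as a difference of k scale points is an assumption based on the row labels.

```python
# Row sums (Σ) copied from the combined table, keyed by row label.
# Assumption: "C" means exact coincidence and "C±k" means the two
# ratings differ by k points; the table itself does not define the labels.
row_totals = {"C": 339, "C±1": 296, "C±2": 107, "C±3": 37, "C±4": 5}


def coincidence_shares(totals):
    """Return each row's share of all responses, as a percentage."""
    grand_total = sum(totals.values())
    return {label: 100 * count / grand_total for label, count in totals.items()}


shares = coincidence_shares(row_totals)
for label, pct in shares.items():
    # One decimal place, matching the precision used in the table.
    print(f"{label}: {pct:.1f}%")

# Share of responses that match exactly or differ by one point.
within_one = shares["C"] + shares["C±1"]
print(f"C or C±1: {within_one:.1f}%")
```

Summing the first two rows gives 635 of 784 responses, so about 81% of the evaluations fall within one point of each other under this reading.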

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Pardo-Vicente, M.-Á.; Rodríguez-Parada, L.; Mayuet-Ares, P.F.; Aguayo-González, F. Haptic Hybrid Prototyping (HHP): An AR Application for Texture Evaluation with Semantic Content in Product Design. Appl. Sci. 2019, 9, 5081. https://doi.org/10.3390/app9235081