1. Introduction

Understanding information and its place in nature has been the subject of various approaches in recent years (see below). Shannon had already provided an “Information Theory” for certain aspects of information [1]. However, from the beginning, and explicitly so intended by Shannon and his co-worker in Information Theory, Warren Weaver, semantics was left out of consideration. Weaver stated that information in Information Theory has a meaning different from that of information in common usage [2].

Information Theory provides a way of quantifying information by statistical means. Its introduction by Shannon was a bigger leap forward than was anticipated at the time. Information Theory not only helped to substantiate the hitherto somewhat fuzzy concept of information but also proved applicable far beyond its original field of communication technology.

Shannon’s Information Theory is, by intention, restricted to modeling quantitative aspects of information transmitted via a communication channel. The complementary field of investigation is meaningful, i.e., semantic, information (unless stated otherwise, “information” in the following is to be understood as “semantic information”).

Since then, numerous approaches have been made to characterize or define semantic information. Views have been established that cover all aspects of information, from communication to structures, culture, psychology, etc. In addition, some aspects of quantum mechanics gave reason to consider the role of information in processes and models at the smallest scale. The famous and intensively discussed measurement problem in quantum physics hinted at a special role of the (human) mind in establishing the results of experiments. This led the eminent physicist Wheeler [3] to a hypothesis he called “It from Bit”, according to which reality arises from and rests on information, which is thus the fundamental constituent of nature.

In parallel, progress was made in genetics and biochemistry, and insights were gained into the processing of information at different levels of an organism (i.e., eukaryotes). Investigations ranged from heredity and metabolism to the neuronal functions of the human brain, including the impact of quantum physics. With regard to information, several approaches, from the early work of chemist Eigen [4] and that of other scientists to, more recently, biologist Loewenstein’s [5] work on self-organization, provided specific views on the role of information in organisms.

In this contribution, we present a new comprehensive information model based on the current scientific discourse. Our model is consistent with, among others, Shannon’s Information Theory, Bateson’s [6] definition and Burgin’s [7] ontology. We take into account the common denominators of all information, which are Syntax, Semantics and Pragmatics (SSP), as defined by the semiotician and philosopher Morris [8]. Syntax (the rules according to which words are sequenced into sentences, the signs that appear, etc.), semantics (the relationship between a sign or word and what it points to in reality), and pragmatics (the rules for applying words and signs in written or spoken conversation and in broader social situations) frame meaning. We define semantic information in nature within a narrow and closely contoured framework. We propose that semantic information is the only information existing in biological nature. Information, in that sense, is a quality of energy; however, not all energy comes with information. More specifically, the potential structure of a given energy is information. Whether received energy is classified and processed as informationally structured or not depends on a biological receiver. Semantic information is biological, and it is created and used exclusively by biological evolution. We name the information model that covers this the Evolutionary Energetic Information Model (EEIM).

Our basic assumption is that semantics is tied to life. Without life, “meaning” is indeed meaningless. Semantic information, as the very basis of meaning, is part of life, which in turn is the result of biological evolution on earth (we are aware that developments beyond biological evolution are also described as “evolution”; therefore, unless stated otherwise, “evolution” in the following refers to biological evolution on earth). Different branches and paths of evolution possess and process different variations of information. Information is also subject to the evolutionary mechanisms of mutation, selection and adaption. We assume that syntax, like semantics and pragmatics, is produced in the process of evolution. In the beginning, syntax materialized as rules imprinted in biochemical molecules, much as rules are imprinted into the logic circuits of computer hardware. Later, the biochemical hardware was complemented by “software”, again in analogy to the formal languages of computer technology (this analogy should not, of course, be stretched too far). We further assume that semantics and pragmatics developed with the accumulation of information and its syntactic rules and with the improved processing capabilities of the species’ biological neural networks. The degree of similarity across all sectors of biological evolution is striking. Consider, e.g., the statement by psychologist and cognition researcher Heyes [9] that “recent research reveals profound commonalities, as well striking differences, between human and non-human minds, and suggests that the evolution of human cognition has been much more gradual and incremental than previously assumed”. This hints at a homogeneously structured development of cognitive capabilities across all areas of biological evolution: the results differ dramatically, but the developments themselves show similarities. Another example involving information processing in evolution is provided by the physicist and philosopher Küppers [10], who sees parallels between the “language of genes” and natural languages. Küppers states that “in view of the broad-ranging parallels between the structures of human language and the language of genes, recent years have seen even the linguistic theory of Chomsky move into the center of molecular-genetic research. This is associated with the hope that the methods and formalisms developed by Chomsky will also prove suited to the task of elucidating the structures of the molecular language of genetics.” In this contribution, we do not establish a link to these attempts, although we are aware that future research might do so. A further aspect of information’s pervasiveness becomes apparent when biological evolution is placed within the hierarchy of physics. Information processing in an evolutionary context is by no means restricted to the molecular world or to the arena of classical (Newtonian) physics. The physicist and writer Davies [11] states that “However, the key properties of life–replication with variation, and natural selection–does not logically require material structures themselves to be replicated. It is sufficient that information is replicated. This opens up the possibility that life may have started with some form of quantum replicator: Q-life, if you like.” Given the space available for this contribution, we will not investigate these topics any further.

The more information species have taken up during evolution, the more they are potentially able to pass on, in various ways, to subsequent generations, which can build upon it. As they build on it while being exposed to the evolutionary mechanisms of mutation, adaption and selection, information complexity increases, and with it more extensive information processing capabilities develop.

A comprehensive view of what semantic information is in nature is still missing. In the following, we outline an approach that explains it in accordance with Information Theory, with views taken in quantum mechanics and with insights from modern molecular biology.

3. Meaning

In Information Theory, a central aspect of information is the cumulative uncertainty about the appearance of signs from an alphabet of signs. What meanings individual signs or sequences of signs are intended to carry does not matter at all.
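For reference, Shannon quantifies this uncertainty as the entropy of a source that emits n distinct signs with probabilities p_1, …, p_n:

```latex
H(X) = -\sum_{i=1}^{n} p_i \log_2 p_i \quad \text{(bits per sign)}, \qquad 0 \le H(X) \le \log_2 n
```

The upper bound is reached when all signs are equally likely (p_i = 1/n). A source emitting two equally likely signs, for instance, has H = 1 bit. Only the probabilities enter the formula; the meanings of the signs do not.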

Of course, this covers only part of what we expect information to be. Our understanding of information reaches beyond syntax, semantics and pragmatics; it includes meaning, knowledge and cognition.

What are “meaning”, “knowledge” and “cognition” in our context? With regard to meaning, we refer to Küppers [10], who states that “meaningful information in an absolute sense does not exist. Information acquires its meaning only in reference to a recipient. Thus, in order to specify the semantics of information one has to take into account the particular state of the recipient at the moment he receives and evaluates the information.” In short, meaning depends on the knowledge and cognition that a recipient maintains. With biochemist Kováč [26], we understand knowledge as being embodied in a system, “as the capacity to do ontic work, necessary for maintaining onticity, permanence of the system. And also to do epistemic work: to sense, measure, record the properties of the surroundings in order to counter their destructive effects and, in the case of more knowledgeable systems, to anticipate them. Epistemic complexity of an agent thus enfolds two processes: one of the evolutionary past and another of the total set of potential actions to be performed in the future.” Cognition uses knowledge and creates knowledge. It relates mental abilities to knowledge capabilities such as memory, judgment, reasoning, decision making, etc. In the case of humans, cognition can be conscious, abstract, conceptual, etc. Although everybody has an intuitive understanding of what meaning is, it is worthwhile to take a closer look.

3.1. Current Modeling of Meaning

Einstein famously once asked, “Do you really believe the moon exists only when you look at it?” He could not accept the strong view in quantum mechanics that observations themselves change the world. Wheeler later even proposed a participatory universe in which observers do not merely collect information about the objects they observe but also strongly influence the results of their observations. He devised thought experiments such as the “delayed choice” experiment, which he even placed in interstellar space to obtain a convincing scenario supporting his view [27].

Let us assume, for the sake of a little thought experiment, that no observers are around to look at the objects of the world. In that case, no one would be aware of culture and artifacts. If humans ceased to exist as “observers”, all cultural elements of the world would immediately have virtually disappeared. They might still exist as “things” for animals, but not as parts of a culture created and maintained by humans. The same applies to the results, methods and artifacts of natural science. The horizon of human-specific knowledge would collapse. Of course, the brain, as a knowledge-processing biological device, would also be gone, and with it the extraordinary cognitive capabilities of mankind. Nobody would be left to utter “I am”. All human-specific information would be lost to such a degree that it would simply no longer exist. All meaning with regard to mankind would be erased.

What would be left is whatever evolution produced, besides humans, that could look at objects of the real world. Although we have only limited insight into the ways animals see the world, we know about numerous similarities. Since we are “products” of the same evolution, it could hardly be otherwise. In many respects, animals (and plants) act in their environments, that is to say their ecological niches, in ways comparable to humans. We can conclude that, since they survive in the same reality as we do, they maintain a realistic view of the world. Thus their “meaning”, or better “meanings”, of the world would still hold if all humans were gone. “Meaning” in this case ranges from elementary interactions with the environment, e.g., by bacteria, to complex dealings similar to our own, e.g., by primates.

However, as soon as we exclude animals and plants (and bacteria) from observing the world in our thought experiment, we have excluded meaning from the earth, where our known evolution has taken place. What is then left has no meaning, however limited. Stones, lifeless chemical structures and electromagnetic or gravitational fields, we assume, do not actively have a meaning. They might be subjects of meaning, but the carriers of meaning are, consequently, excluded from existence by our thought experiment. Nevertheless, we recognize that it is widely accepted that interacting particles such as photons perform “measurements” when colliding with physical structures. We assume, however, that in the absence of an observer this would create Shannon Information but not semantic information.

With meaning gone in our thought experiment, the existence of things has disappeared as well. It takes awareness to recognize “existence”. Since we cannot be aware of objects as they are in themselves, restricted as we are by our senses and interpretive capabilities, these objects as we define them no longer “exist” once all observers are gone. As objects, e.g., the “moon”, they depend on living beings who recognize an object as a moon. In other words, the objects’ very existence depends on being observed. Someone arriving from outer space might “see” the moon through their specific instruments and senses, and the result might be very different from the appearance of the moon that we create. We assume that observers create or construct objects by building mental models. Observers create models of objects of various complexity. Simple organisms create simple ones. More complex organisms, however, may use simple models, combine them, use them as building blocks and create new and more sophisticated models.

Reality on a basic level is indeed nothing we can describe without being aware of it. An object is only an object with respect to an observer interacting with it [28]. Even abstract nouns like relationship, potential, force, etc. are results of our capability to create models of the external world. This holds even if, taken to the extreme, reality is thought to be pure mathematics, as physicist Tegmark [29] proposes.

In summary, the key to meaning is biological life: no meaning without life, and no life without evolution. As we will outline in the following, evolution produces meaning by creating and processing information.

3.2. Meaning—A Product of Evolution

Meaning is linked to evolution. It is produced by evolution as a part of the organisms that are themselves created in the process of evolution. This happens partly internally, by processing information available in organisms, and partly by allowing external information to enter the organisms for further processing. The latter occurred first, at the very beginning of evolution.

Information is what allows meaning and knowledge to be created. It has existed since the start of evolution: the very first appearance of life came with information. From then on, life has changed in both variety and complexity. As life unfolded, information became richer and more complex, depending on the individual species. This, in turn, allowed species to create more complex models of the world they recognized as their environment.

Information processing in and by organisms is, to a large extent, carried out by DNA and RNA, inherited from ancestors at the molecular level, and of course by brains. In addition, the communication necessary for building and running an organism, as well as information-based interaction with the environment through senses and action, plays an important part in information processing.

Each organism depends on informational input from the external world. Without it, it is impossible to build models of the world, which are applied and combined with inherited models. The senses that allow external information to be gained vary considerably. Different species apply different senses according to their specific ways of recognizing and understanding their environment. All developments of these senses, however, started with the beginning of evolution. They started with the beginning of life, still biology’s biggest mystery, and thus they started small. They may have begun with the capture of single photons that provided a sense of an active light source, be it the sun or an object reflecting the photon. Encapsulation, self-adaption and reproduction, or in other words containment, metabolism and genetics, are often seen, e.g., by physicist Roederer [30], as a combined first giant step of evolution. We assume that this step came much later and was preceded by a continuous, gradual emergence. Catching information came first. Loewenstein [5] assumes that the most fundamental level of life is photosynthesis: “And photosynthesis is the capture of information from solar photons.” Molecular geneticist McFadden [31] speculates that self-replication, which might indeed be seen as a precondition for the real beginning of life, arose through quantum processes involving the entanglement of combinations of amino acids and multiverses, one of which eventually produced the right combination of amino acids capable of self-replication.

How exactly life started, however, is still a subject of research and debate. Let us assume with Loewenstein [5] that, in whatever way it commenced, it started with an information gain. This is not necessarily the only aspect, but it is certainly one of the most important ones, and it is so tightly connected with evolution that we consider it a precondition for evolutionary development. We assume that, from early on, the use of information went hand in hand with model building. Incoming information was, and is, used to create models of the world. At a primitive stage, for example, bacteria, which had already developed the cell wall as a major invention early in history, used incoming light to orient their movements. Mammals processed, and still process, incoming information to a much greater extent. Bigger species had to process more information to orient themselves and act in their environment. The models they used during evolution were abstractions of more primitive models. As a result, part of the abstraction was transformed into their physiognomy, which allowed a species to take physical advantage of its environment. Of course, this was not exclusively “their” environment; it was inhabited by other species with which they interacted strongly, e.g., by hunting or being hunted. Part of the model information went into “brainware”, i.e., processing capabilities and data.

Each species extracts specific meaning from its environment. The range of this extraction might be small, e.g., in the case of bacteria, or large, e.g., in the case of humans, who are able to explore the depths of the universe, the minuteness of the quantum world and their own minds, to name a few. The better and more intensive the interaction with the world outside the organism, the better the preconditions for extending its current reach and broadening its sphere of knowledge.

4. Information and Energy

The interaction between organisms and their external environment happens via senses for gaining information. Different organisms feature different senses. Mobility broadens the scope and intensity of interaction. The scope of senses can be extended by walking, swimming, flying, etc. Communication capabilities, such as language, habitus, gestures, signs, etc., further extend interaction capabilities with the external world.

No matter which means of interaction are used, organisms have to pay for them with energy. For a biological organism, no exchange with its environment is free of charge.

In our view, semantic information is a quality of energy; it is not carried by energy. Information nevertheless does not change the fundamentals of energy. Einstein’s formula equating energy with mass and Planck’s formula equating energy with the product of a fundamental constant and frequency remain untouched. Of course, we assume energy to be indestructible, transferable from one physical object to another and, importantly here, convertible from one form into another. Energy is dynamic; it is never homogeneous.
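In standard notation, with c the speed of light in vacuum, h Planck’s constant and ν the frequency, the two relations referred to here are:

```latex
E = m c^{2}, \qquad E = h \nu
```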

4.1. No Information without Energy

Bateson’s characterization of information as a “difference which makes a difference” already points to energy. It seems plausible that no difference can be created without applying energy: no cause, no effect.

Shannon’s type of information intentionally rules out meaning. As a result, information entropy is a measure of information in a certain sense, but not in the commonly shared sense. The common understanding of information includes Morris’ [8] definition of syntax, semantics and pragmatics (see above).

All three components were evidently familiar to the human brain from quite early on; they were needed for language processing. DNA and RNA molecules are also places where we can identify these components of information, if we assume that the components are organized and interpreted as signs and words. In the external world, too, artifacts for information storage, transmission and manipulation contain syntax, semantics and pragmatics. To be more precise, it must be emphasized that, in the context outlined above, externally stored information loses its status as information when it is stored. It regains its status as semantic information when it is read again. When stored, it is transformed into structured matter, electromagnetic fields or holograms, and when read, its “engraved” structure is transmitted to the reading instance by means of energy. It can then be transformed into biological information once again. Information storage is feasible with matter and energy: information can be engraved, written, digitized or coded in electric fields, and many more options are available. They range from the Rosetta Stone (to name an ancient example) to more modern means such as books, news, documents, data on computers, streamed data or traffic signs, to name only a few.

Information transmission can take place by means of organism specific capabilities such as languages, gestures,

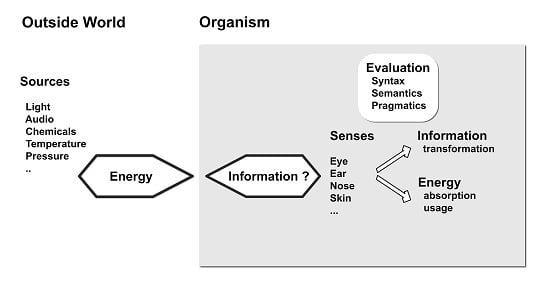

etc., and by external artifacts reaching from drums to modern media technologies. In the same way, information processing as a capability, in a narrow sense, is restricted to biological organisms. However, they can be supported by means of external artifacts like, for example, computers. In both cases, in our understanding, since information is restricted to residing in biological organisms, the externalized transmission and processing does not deal with information in a narrow sense, but merely with patterns of energy. External means of supporting biological storage, transmission and processing are not part of what evolution is concerned with. The external resource only becomes information—out of matter and energy—if it is activated and evaluated by biological organisms (see

Figure 1).

We assume that syntax, like semantics and pragmatics, has been produced by evolution (see Morris [8] for a definition). In the beginning, syntax materialized as simple rules imprinted in biochemical molecules, much as rules are imprinted into the logic circuits of computer hardware (an analogy not to be overstretched, of course). An example of such a rule: if received energy, perhaps a few photons, appears to come from a source further to the right than similar energy did before, then generate an impulse to move to the right as well. Later, the biochemical hardware was accompanied by “software”, again in analogy to the formal languages of computer technology. We further assume that semantics developed with the accumulation of information, along with its syntactic rules and the improved processing capabilities of the species’ biological neural networks.
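The simple rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not part of EEIM itself: the names `Stimulus`, `similar`, `impulse` and `respond`, the numeric `direction` axis, and the 20% tolerance used to decide that two energy packets are “similar” are all hypothetical choices made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Stimulus:
    """Hypothetical packet of received energy (e.g., a few photons)."""
    intensity: float  # amount of energy received
    direction: float  # apparent position of the source; larger values = further right


def similar(a: Stimulus, b: Stimulus, tolerance: float = 0.2) -> bool:
    """Assumed notion of 'similar energy': intensities within a relative tolerance."""
    return abs(a.intensity - b.intensity) <= tolerance * max(a.intensity, b.intensity)


def impulse(previous: Optional[Stimulus], current: Stimulus) -> str:
    """The rule from the text: if similar energy now appears to come from further
    to the right than before, generate an impulse to move right; otherwise none."""
    if previous is not None and similar(previous, current) and current.direction > previous.direction:
        return "move_right"
    return "no_impulse"


def respond(stimuli: List[Stimulus]) -> List[str]:
    """Apply the rule to a sequence of stimuli, producing one impulse per stimulus."""
    impulses = []
    previous: Optional[Stimulus] = None
    for s in stimuli:
        impulses.append(impulse(previous, s))
        previous = s
    return impulses
```

For example, `respond([Stimulus(1.0, 0.0), Stimulus(1.1, 0.3), Stimulus(5.0, 0.9)])` yields `["no_impulse", "move_right", "no_impulse"]`. The last packet lies further right, but its intensity is too different to count as “similar energy” under the assumed tolerance.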

Figure 1.

Transformation of energy from outside a biological organism into information inside it. Energy is transformed into information via the senses and the application of syntactic and semantic rules (accompanied by pragmatics) that evaluate the energy’s usability as information.

Thus, a book, the Rosetta Stone and an electronically represented Beethoven sonata remain dead matter or meaningless acoustic waves as long as they are not interpreted by a sufficiently knowledgeable being. Sufficient knowledge means that this being has enough context information at its disposal to perform the interpretation. Before this interpretation, however, the information has to be provided to the interpreting instance. For this, communication between the source and the interpreting instance, ultimately the brain, has to take place. Each sign to be communicated reaches this interpreter via energy. Whether it is photons reflected by book pages, audio signals or odorant chemicals reaching the odorant receptors, it is transformed from energy into information by the senses (eye, skin, ear, nose and taste buds). It thus reaches the brain by means of energy, classified as information, and is manipulated with the help of energy for storage, processing and transmission.

5. The Evolutionary Energetic Information Model

As mentioned above, we base our approach to a new comprehensive information model on results available from, among others, Shannon’s Information Theory, Bateson’s definition and Burgin’s ontology. The model defines semantic information in nature within a narrow and closely contoured framework. At its core, we propose that this is the only information in nature. Information in that sense is energy; however, not all energy is information. Information is biological, and it is produced exclusively by evolution. We call this information model the Evolutionary Energetic Information Model (EEIM).

The basic ideas of our modeling approach of information are as follows:

information without energy cannot exist;

evolution exclusively creates information on various levels of complexity; and

thinking produces emerging models of the external world to reduce complexity.

5.2. Evolution Creates Meaning

In EEIM, information is part of biological evolution. The progress of evolution creates new SSP contexts; new SSP contexts are thus the result of adaption and selection. New capabilities, created, e.g., at random, manifest better or worse ways of surviving. Better survival means that those who exhibit it have a higher probability of exploiting the environment, of gaining advantages even within their own species, and of passing the new capabilities on to the next generation.

The information created by evolution is dynamic by nature. It lives and dies with the organism possessing it. Humans have created various ways of externalizing information as physical structures, e.g., by transforming semantic information into potential information residing in stone engravings, written paper, silicon storage, etc. Other humans can (re-)activate it by applying the proper set of SSP, thereby transforming potential information back into semantic information. Take the RMS Titanic as an example. At a time when Morse telegraphy had only just come into wider use, an emergency call could be identified as such only if the proper signal sequence was received and both sender and receiver followed the proper rules prescribed by SSP. Similar processes, we assume, take place in biological systems as multi-stage transformations, beginning with the senses and ending with brain processing.
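The Titanic example can be illustrated with a small Python sketch. It rests on a simplifying assumption of ours, not of the model: a receiver’s SSP is caricatured as a codebook that maps dot/dash groups to letters (syntax and semantics), plus a rule stating which decoded messages call for action (pragmatics). The names `MORSE_FRAGMENT`, `DISTRESS_CALLS`, `decode` and `is_distress_call` are hypothetical. Only the two codes used (S = `...`, O = `---`) are taken from International Morse code.

```python
from typing import Dict, Optional

# Hypothetical receiver context: a fragment of International Morse code standing in
# for the syntactic/semantic part of SSP ("..." is S, "---" is O).
MORSE_FRAGMENT: Dict[str, str] = {"...": "S", "---": "O"}

# Hypothetical pragmatic rule: which decoded messages call for action.
DISTRESS_CALLS = {"SOS"}


def decode(signal: str, codebook: Optional[Dict[str, str]]) -> Optional[str]:
    """Turn a space-separated dot/dash pattern into text, or return None when the
    receiver lacks a fitting context (the pulses then stay mere patterns of energy)."""
    if codebook is None:
        return None
    letters = []
    for symbol in signal.split():
        if symbol not in codebook:
            return None  # unknown syntax: no message can be recovered
        letters.append(codebook[symbol])
    return "".join(letters)


def is_distress_call(signal: str, codebook: Optional[Dict[str, str]]) -> bool:
    """Semantics plus pragmatics: the decoded text must also be recognized as a call for help."""
    message = decode(signal, codebook)
    return message is not None and message in DISTRESS_CALLS
```

The same pulse train `"... --- ..."` decodes to `"SOS"` and counts as a distress call for a receiver holding the fitting codebook. For a receiver without it, the pulses remain only a pattern, mirroring the transformation of potential information into semantic information described above.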

In summary, our model comprises the characteristics listed in Table 1.

Table 1.

Characteristics of Evolutionary Energetic Information Model (EEIM). A mapping between aspects of reality and model attributes is given.

| Aspect of Reality | No. | Attribute of Information (EEIM) |
|---|---|---|
| Evolution | 1 | Information is exclusively being “produced” by evolution |
| Energy | 2 | Information is a quality of informational energy |
| | 3 | Informational energy provides semantic information to accordingly prepared receiver instances |
| | 4 | Not all energy is informational |
| Semiotics | 5 | Information is bound to syntax, semantics and pragmatics |
| | 6 | Information requires a sending instance |
| | 7 | Information requires a biological receiver able to interpret incoming information |
| Biological receptors | 8 | Interpreting receiving instances for information are living organisms exclusively |
| Information processing | 9 | Organisms possess processing capabilities for information according to their place in evolution |
| | 10 | SSP in context is needed to transform received information into new information |
| | 11 | Animals are able to process information |
| Knowledge | 12 | Information may potentially be transformed into knowledge |
| | 13 | Organisms possess knowledge according to their background in evolution |
| | 14 | Organisms processing knowledge are able to produce knowledge processing artifacts |
| Entropy | 15 | Shannon’s Information Theory accounts for the probability of the appearance of signals; it does not cover semantics |

(1) Information is exclusively being “produced” by evolution. Everything that was created by biological evolution receives, manipulates and sends information. Everything that is not a result of biological evolution contains no information but can, as an artifact, contain potential information. Potential information can, but need not, be Shannon Information created by biological objects of evolution. Transmission of potential information to a biological receiver by means of patterns of energy is a precondition for its interpretation there. Interpretation means accepting energy as information, classifying it accordingly and channeling it properly. In this way, semantic information is created out of energy. Semantic information is “produced” by evolution in the sense that it came into being with evolution and has developed over time as an intrinsic part of evolution itself.

(2) Information is a quality of informational energy. A transformation into information is bound to a preceding transmission of energy. In the case of potential information, where energy is the originating cause, the result of the transformation will be information. If no transformation takes place, energy remains pure energy and may be used as such by the receiving organism. Every living system is equipped with a fixed number of distinct sensors. These sensors interact with a specific part of the surroundings that we call the environment (Kováč [26]). Via these sensors, informational energy reaches the organism. When there is no inflow of energy, or when incoming energy is homogeneous, i.e., when entropy is maximized, no semantic information can be detected.

(3) Informational energy provides information to accordingly prepared receiver instances. An organism’s observation of energy does not indicate a priori whether the observed energy is information or not, unless the observer possesses and applies a fitting context, i.e., an SSP. Thus energy is information in a context. Energy, however, is present all the time; what makes the difference is whether the organism in question is able to detect semantic information. This in turn depends on the semantic context, the syntactic rules and the pragmatic features available. Kováč [26] summarizes that “Life is an eminently active enterprise aimed at acquiring both a fund of energy and a stock of knowledge, the possession of one being instrumental to the acquisition of the other.”

(4) Not all energy is information. Energy becomes information through a transformation performed by a receiving instance with a fitting context (SSP). Energy may appear in the form of light, heat, pressure, sound, electromagnetic fields, olfactory and gustatory substances, and even quantum effects [32]. In short, information can be anything the senses of biological beings are sensitive to. Depending on the applied context, the same energy may lead to different information.

(5) Information is bound to syntax, semantics and pragmatics. Whether energy is taken by an organism as semantic information or whether it remains pure energy depends on the context the organism provides. The context consists of SSP, which, in turn, is built from inherited parts and from the results of this organism’s learning. Conversely, semantic information exists only within a context, i.e., within SSP.

(6) Information requires a sending instance. Information can only be received if it has been sent before. This means that the source always has to spend energy. A book being read reflects photons from the sun or from an artificial light source and thereby acts as a source of energy from the receiver’s point of view. Thus the sending instance need not, but can, be a sender acting with explicit intention.

(7) Semantic information requires a biological receiver able to interpret incoming information. Information is not static. It is dynamic in three major respects. The first concerns its presence within biological systems: information is constantly being processed, communicated or actively stored. The second concerns evolution: information is subject to evolutionary mechanisms like selection, mutation and adaptation. Through these mechanisms, it develops with the organisms and species themselves and becomes part of what is inherited. During the process of evolution, each species has developed its specific set of SSP. The third aspect concerns individual learning. Learning in a social context necessarily also changes the SSP sets of an individual.

(8) Only living organisms are interpreting receivers of information. Non-interpreting inorganic instances that receive energy are not able to perform the transformation into semantic information. Their lack of SSP does not allow the necessary classification of incoming energy as information. All incoming energy then remains energy and can, e.g., change the thermal state of an object.

(9) Organisms possess processing capabilities for semantic information according to their place in evolution. Information can be received by any kind of organism, depending on the sets of SSP available to it. Simple information may arrive as incoming photons, where the SSP consists of very simple rules for sensing darkness or brightness. More complex information, requiring a different SSP, is found, e.g., in the massive data streams of the Large Hadron Collider experiments (at the European Organization for Nuclear Research, CERN, Geneva).

(10) SSP in context is needed to transform received semantic information into new information. Information creates new information [16]. Information needs information as an input and produces information as intermediate results and as an output. Each step, however, changes the context and the SSP of information. This might result in ruling out incoming information that had so far been accepted, or vice versa.

(11) Animals are able to process information. Biological organisms perform information processing and are (though not exclusively) the results of information processing, and they develop new capabilities in information processing in the course of evolution. This includes the gathering of knowledge by species and individuals when building up context by applying SSP.

(12) Information may potentially be transformed into knowledge. Available information of sufficient complexity is mandatory for developing (more) complex information structures and is a precondition for the development of (more) sophisticated thinking capabilities. As Kováč states, “Biological evolution is a progressing process of knowledge acquisition (cognition) and, correspondingly, of growth of complexity. The acquired knowledge represents epistemic complexity.” In addition to the prior knowledge available when new information comes in, pragmatics regarding a subject also plays a role, e.g., a context comprising interest, intention, novelty, complexity, and selectivity. All of this determines the overall quantity of information.

(13) Organisms possess knowledge according to their background in biological evolution. This includes knowledge about their immediate and wider environment, about rules applicable in interactions with others, about certain techniques to find and explore food, self-aware knowledge, etc.

(14) Organisms processing knowledge are able to produce knowledge processing artifacts. Artifacts for knowledge-processing, such as computers (not yet available for knowledge in a conscious sense), currently can only be developed by humans and thus by evolution.

(15) Shannon’s Information Theory accounts for the probability with which signals appear. It does not cover semantic information. Shannon Entropy can be applied in both inorganic and organic fields. As intended by Shannon and Weaver, it is restricted, as a statistical theory, to measuring the amount of (potential) information going through a transmission channel. Shannon explained the role of redundancy, noise, and error rates [13]. The underlying information model separated information from meaning.
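To make the purely statistical character of Shannon’s measure concrete, the following minimal Python sketch computes a zero-order Shannon entropy, H = Σ p_i log2(1/p_i), from symbol frequencies alone (assuming independent symbols), together with Shannon’s redundancy R = 1 − H/H_max. The function names `shannon_entropy` and `redundancy` are hypothetical illustrations, not taken from this contribution. The last example shows the separation of information from meaning: two messages with opposite meanings but identical symbol frequencies receive exactly the same value.

```python
import math
from collections import Counter


def shannon_entropy(symbols: str) -> float:
    """Zero-order Shannon entropy in bits per symbol.

    H = sum_i p_i * log2(1 / p_i), with p_i the relative frequency of
    symbol i. Symbols are treated as independent; meaning plays no role.
    """
    if not symbols:
        raise ValueError("need at least one symbol")
    n = len(symbols)
    counts = Counter(symbols)
    # log2(n / c) equals log2(1 / p); fsum makes the sum order-independent
    return math.fsum((c / n) * math.log2(n / c) for c in counts.values())


def redundancy(symbols: str, alphabet_size: int) -> float:
    """Shannon redundancy R = 1 - H / H_max, with H_max = log2(alphabet_size)."""
    if alphabet_size < 2:
        raise ValueError("alphabet must contain at least two symbols")
    h_max = math.log2(alphabet_size)
    return 1 - shannon_entropy(symbols) / h_max


# Uniform use of a 4-symbol alphabet: maximal entropy, no redundancy
print(shannon_entropy("ABCD"), redundancy("ABCD", 4))  # 2.0 0.0
# One repeated symbol: zero entropy, full redundancy
print(shannon_entropy("AAAA"), redundancy("AAAA", 4))  # 0.0 1.0
# Same symbols and frequencies, opposite meanings: identical entropy
print(shannon_entropy("dog bites man") == shannon_entropy("man bites dog"))  # True
```

A zero-order estimate is the simplest choice for illustration; measures that account for dependencies between successive symbols would give lower values for natural-language text, but they remain equally blind to what the symbols mean.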

Semantic information is being created exclusively in the process of biological evolution. At its core, an organism classifies incoming energy, depending on the availability of SSP, as information and from then on handles it as such. The complexity of the information and of the SSP depends on the level of evolution reached.

5.3. Emergence of External World Models from Information Intake

Why do we not see quantum objects? The reason is that the entry point for evolution lay at a higher level. No decision was taken to do so. Instead, configurations of what we call matter and energy allowed interaction with, e.g., photons, which were taken as both energy and information. At the very first stages, the latter resulted from very basic interactions of organisms (and their precursors) with their external world. They took in not just energy, but also more and more information regarding the environment.

By treating energy as information, models of the world were created from the start: a light or a dark spot, a moving object showing characteristics of prey, and so on. With two sensory systems, the speed, distance, etc. of objects could be calculated. Colour sensitivity was added. The capabilities for creating very basic models of the external world were used to create more sophisticated models of the environment. This has evidently been done in different ways by different species. Because of the long history shared between species, similarities between them (e.g., among mammals) are strong.

Here, emergence comes into play. New capabilities combined with older ones allowed for the creation of new models of the world. With certain combinations of capabilities, new features of the outside world emerged as completely novel. Unfortunately, emergence is not a well-defined process or property. Many types of emergence have merely been proposed but cannot be proven. Whether emergence is an objective feature of the world or merely exists in the eyes of the beholder is still being discussed, as the philosopher Bedau [33] reflects.

We do not consider evolution a direct cause of emergence. Emergence is part of the cognitive abilities acquired during and within evolution. Our access to reality is limited and we have to rely on models of reality. The environment cannot be understood directly; we have to help ourselves with our constructions of reality. As the philosopher Wallner [34] states regarding models in the context of constructive realism, “If they serve us well for gaining control over the environment, we keep them. If they do not, we discard them.” Model building seems to be an informational capability that all organisms, or rather all organisms above a certain complexity, should possess. Model building in this sense partners with cognition in reducing complexity. Emergent (that is, constructed) models are created and used by organisms and their brains. When new and more advanced models are created, available models may be reused and, when emergence happens, extended with new qualities not contained before (e.g., by bacteria orienting themselves according to stimuli not used before, by crows recognizing new types of enemies, by humans thinking about information models). Thus, emergence is a result of information processing, not the result of a miracle or of an intervening divine instance.

Like the philosopher Jeremy Butterfield [35], we consider emergence as knowledge that is novel and robust relative to some more basic comparison class. Emergent phenomena are Janus-faced: they depend on more basic phenomena, and yet they are autonomous from that base [33].

We take the view that the process of taking semantic information from the outside world into the unfolding biological environment of life and making it subject to evolution started the process of model building, which is still going on in all species. Models are being built on top of more basic (or less adapted) earlier models and provide the basis for even more sophisticated models. This is obviously a knowledge building process. At different stages, specific views of the world are created.

This process of building models is connected to steps or stages marking knowledge leaps. It is based on taking in information, combining it with information already there and creating new information. With increasing amounts of information, information models of increasing complexity had to be created that are able to reduce both the amount of data to be processed and the complexity to be dealt with. Since information is energy, both reductions save energy and thus optimize the operation of thermodynamically open organic systems.

5.4. EEIM’s Impact on Science

Will EEIM have a practical impact? We assume so. For an engineer, it makes a significant difference whether a motor is described by the internal forces at work, the interplay of its components and the chemical reactions taking place, or whether, complementarily, she/he is also able to understand, e.g., the thermodynamic laws and the role entropy plays in the machinery. Similarly, the capabilities of a computer cannot be sufficiently understood by just analyzing and understanding in detail its physical components and their interfaces to each other. It is the software that makes the difference and turns the physical device into what it is, an information-manipulating framework of great flexibility. To understand how a computer works, the principles of algorithms, programming languages, logical circuits, etc. must also be understood. The point is that understanding complex systems requires holistic views. In any case, motors and computers are meant here as metaphors, not as strong analogies to information handling in biological organisms. Life is certainly different from machinery.

Being aware of the important role information plays in biology will influence the whole attitude of how organisms are analyzed, understood and described. This will hopefully open the way to identifying new principles, and maybe laws, that information obeys in organisms. Currently, we are only beginning to understand biological components and processes to a certain degree. To understand more of the combined roles and impacts of information, evolution and energy, EEIM hopefully provides a starting point for new thinking.

Inevitably, this contribution leaves many questions open. Among them are the roles of external information resources and of information-processing artifacts that show capabilities resembling processes of evolution. Also left unanswered are the measurement of semantic information and, connected with the question of the very beginning of life, the origin of biological semantic information and its development throughout evolution. We also expect the impact of quantum information on biological information to be a field of fruitful study.

6. Conclusions

This contribution presents an approach to understanding semantic information in nature, taking into account the views of Shannon, Bateson, Morris and others. The novelty of this approach is threefold. First, information is considered a quality of energy; however, not all energy is information. Second, semantic information is assumed to be processed by biological organisms only. Third, information is “produced” by evolution and restricted to areas belonging to evolution. With EEIM, we can now better explain the growth of information over time and in species in general. We make a distinction between Shannon Information, classified as potential information, and semantic information used by biological organisms. Semantic information is part of biological organisms to various degrees, depending on the species themselves, their ecological niche, the time within evolution, etc. This distinction draws a line between information as a statistical pattern and semantic information. We have also created a basis for tracing where in history knowledge was developed and where worldviews or world models were developed by species. This will potentially help epistemology to better understand how organisms perceive the outer world. It will also help to better understand how organisms reduce the complexity of incoming information from the outer world in order to create simplified models for their internal use.