Towards Expert-Based Speed–Precision Control in Early Simulator Training for Novice Surgeons

Abstract

1. Introduction

2. Materials and Methods

2.1. Research Ethics and Participants

2.2. Camera Views

2.3. Five-Step Pick-and-Place Task

2.4. Generation of Individual Training Data for Time and Precision

3. Results

3.1. Expert Benchmark Statistics

3.2. Speed–Accuracy Trade-off Functions (SATFs) for Detecting Individual Strategies

3.3. Who Beats the Expert?

3.4. Criteria for Strategy

3.5. Criteria for Level of Performance

3.6. Criteria for Stability of Performance

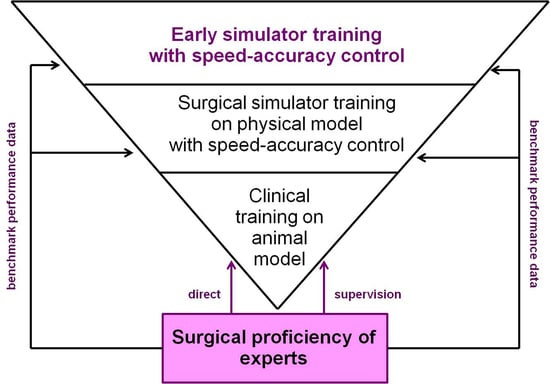

3.7. Towards Expert-Based Speed–Precision Control

- generate reliable and discriminating measures (parameters) of the time and precision of individual performance at any moment in time during training.

- compare individual parameter measures and statistics with the desired parameter value, based on the known (“learned”) performance profile of an expert user, at any moment in time during training.

- provide feedback to the user as early as possible, and as regularly as necessary, about exactly what he/she needs to focus on during training to attain an optimal level of performance.
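The three requirements listed above can be illustrated with a minimal Python sketch. It assumes, for illustration only, that each measure is summarized by a running mean and standard deviation updated after every trial (Welford's online algorithm), so that the parameters are available at any moment during training, and that feedback is triggered when the trainee's running mean exceeds the expert's mean by more than one expert standard deviation. The class `RunningStats`, the function `feedback`, the one-SD tolerance and the trial values are all hypothetical. The expert benchmarks (13.74 ± 3.10 and 871 ± 273) are taken from the first two tables and are read, as an assumption, as task time and precision error, with lower values being better for both.

```python
import math
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Running mean and SD of one measure, updated trial by trial (Welford's algorithm)."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the current mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def sd(self) -> float:
        # Sample SD; reported as 0.0 until at least two trials are available.
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


def feedback(trainee: RunningStats, expert_mean: float, expert_sd: float,
             label: str, tolerance: float = 1.0) -> str:
    """Hypothetical rule: flag a measure whose running mean lies more than
    `tolerance` expert SDs above the expert mean (lower assumed better)."""
    z = (trainee.mean - expert_mean) / expert_sd
    if z > tolerance:
        return f"focus on {label}: {z:.1f} expert SDs above the expert mean"
    return f"{label}: within {tolerance:g} expert SD of the expert mean"


# Expert benchmarks from the first rows of the first two tables;
# the trial values below are invented for illustration only.
time_stats, error_stats = RunningStats(), RunningStats()
for t, e in [(18.2, 950), (16.9, 1020), (17.5, 880)]:
    time_stats.update(t)
    error_stats.update(e)
    # Feedback is issued after every trial, i.e. as early as possible.
    print(feedback(time_stats, 13.74, 3.10, "time"),
          "|", feedback(error_stats, 871, 273, "precision error"))
```

With these made-up trials the sketch keeps flagging time while leaving precision unflagged, which is the kind of targeted message the third requirement calls for. The z-score rule is only one possible comparison; any other distance to the expert profile could be substituted.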

4. Discussion

Supplementary Materials

Funding

Acknowledgments

Conflicts of Interest


| | EXPERT | NT1 | NT2 | NT3 | NT4 | NT5 | NT6 | NT7 | NT8 | NT9 | NT10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Mean | 13.74 | 15.79 | 14.79 | 12.90 | 14.81 | 26.23 | 19.17 | 21.76 | 13.46 | 12.46 | 12.82 |
| Standard deviation | 3.10 | 3.54 | 3.92 | 2.79 | 2.64 | 4.01 | 5.72 | 5.55 | 2.69 | 2.16 | 3.45 |

| | EXPERT | NT1 | NT2 | NT3 | NT4 | NT5 | NT6 | NT7 | NT8 | NT9 | NT10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Mean | 871 | 2004 | 1598 | 1691 | 1189 | 1255 | 1229 | 1743 | 1425 | 1572 | 1919 |
| Standard deviation | 273 | 504 | 399 | 487 | 406 | 345 | 446 | 584 | 586 | 470 | 640 |
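The first two tables can be read against the expert column in the spirit of the level and stability criteria of sections 3.5 and 3.6. The sketch below is an illustration under stated assumptions, not the criteria used in the study: it expresses each trainee's mean as a distance from the expert mean in units of the expert's standard deviation (`level`) and uses the coefficient of variation, SD divided by mean, as a stability index (`cv`). It assumes, consistent with the strategy labels in the later tables, that the first table reports task time and the second a precision error, with lower values being better in both. The names `profile`, `level` and `cv` are hypothetical.

```python
# Means and SDs transcribed from the two tables above; index 0 is the expert.
LABELS = ["EXPERT"] + [f"NT{i}" for i in range(1, 11)]
TIME_MEAN = [13.74, 15.79, 14.79, 12.90, 14.81, 26.23, 19.17, 21.76, 13.46, 12.46, 12.82]
TIME_SD = [3.10, 3.54, 3.92, 2.79, 2.64, 4.01, 5.72, 5.55, 2.69, 2.16, 3.45]
ERROR_MEAN = [871, 2004, 1598, 1691, 1189, 1255, 1229, 1743, 1425, 1572, 1919]
ERROR_SD = [273, 504, 399, 487, 406, 345, 446, 584, 586, 470, 640]


def profile(means: list[float], sds: list[float]) -> dict[str, dict[str, float]]:
    """Per trainee: level = (mean - expert mean) / expert SD, where a negative
    value means lower (assumed better) than the expert; cv = SD / mean, where
    a smaller value means more consistent performance."""
    expert_mean, expert_sd = means[0], sds[0]
    return {
        name: {"level": (m - expert_mean) / expert_sd, "cv": s / m}
        for name, m, s in zip(LABELS[1:], means[1:], sds[1:])
    }


time_profile = profile(TIME_MEAN, TIME_SD)
error_profile = profile(ERROR_MEAN, ERROR_SD)
faster_than_expert = [n for n, r in time_profile.items() if r["level"] < 0]
more_precise_than_expert = [n for n, r in error_profile.items() if r["level"] < 0]
print("faster than expert:", faster_than_expert)  # ['NT3', 'NT8', 'NT9', 'NT10']
print("more precise than expert:", more_precise_than_expert)  # []
```

Under these assumptions four trainees have a lower mean time than the expert, while none has a lower mean precision error. A significance test would additionally require the number of trials per participant, which the tables do not give.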

| | EXPERT | NOVICE A “Extreme Speed-Focused Strategy” | NOVICE B “Speed-Focused Strategy” | NOVICE C “Undetermined Strategy” | NOVICE D “Optimal Precision-Focused Strategy” |
|---|---|---|---|---|---|
| Mean | 14.63 | 4.76 | 6.35 | 8.85 | 9.13 |
| Standard deviation | 2.59 | 0.42 | 0.71 | 1.77 | 1.25 |

| | EXPERT | NOVICE A “Extreme Speed-Focused Strategy” | NOVICE B “Speed-Focused Strategy” | NOVICE C “Undetermined Strategy” | NOVICE D “Optimal Precision-Focused Strategy” |
|---|---|---|---|---|---|
| Mean | 770 | 1146 | 905 | 1278 | 406 |
| Standard deviation | 166 | 378 | 250 | 434 | 151 |
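For the four novices in the last two tables, a simple "who beats the expert?" check compares each novice's mean with the expert's on both measures. This is a minimal sketch assuming that the first of these two tables reports task time and the second a precision error, with lower values being better in both, which is what the strategy labels suggest (the "Extreme Speed-Focused" novice has by far the lowest time). The ratio to the expert mean and the rule "lower than the expert on every measure" are illustrative choices, not the study's criteria; `ratios` and `beats_expert` are hypothetical names.

```python
# Mean values transcribed from the two tables above (time, precision error).
EXPERT = {"time": 14.63, "error": 770}
NOVICES = {
    "A": {"time": 4.76, "error": 1146},
    "B": {"time": 6.35, "error": 905},
    "C": {"time": 8.85, "error": 1278},
    "D": {"time": 9.13, "error": 406},
}


def ratios(novice: dict[str, float], expert: dict[str, float]) -> dict[str, float]:
    """Novice mean divided by expert mean; below 1 means lower than the expert."""
    return {k: novice[k] / expert[k] for k in expert}


def beats_expert(novice: dict[str, float], expert: dict[str, float]) -> bool:
    """Hypothetical criterion: lower mean than the expert on every measure."""
    return all(r < 1 for r in ratios(novice, expert).values())


for name, novice in NOVICES.items():
    r = ratios(novice, EXPERT)
    print(f"Novice {name}: time x{r['time']:.2f}, error x{r['error']:.2f}, "
          f"beats expert: {beats_expert(novice, EXPERT)}")
```

On these means only Novice D is below the expert on both measures. Novices A, B and C all come out faster but less precise than the expert, so this comparison alone does not recover the source's distinction between their strategies (for example the "Undetermined" label of Novice C); that distinction presumably rests on the speed–accuracy trade-off analysis of section 3.2 rather than on the means.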

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Dresp-Langley, B. Towards Expert-Based Speed–Precision Control in Early Simulator Training for Novice Surgeons. Information 2018, 9, 316. https://doi.org/10.3390/info9120316
