1. Introduction

Most natural language processing (NLP) tasks operationalize an input sentence as a word sequence with token-level embeddings and features, which creates long-distance dependencies (LDDs) when encountering long complex sentences such as dependency parsing [

1], constituency parsing [

2], semantic role labeling (SRL) [

3], machine translation [

4], discourse parsing [

5], and text summary [

6]. In previous works, the length of a sentence has been blamed for LDDs superficially, and several universal methods are proposed to cure this issue, e.g., hierarchical recurrent neural networks [

7], long short-term memory (LSTM) [

8], attention mechanism [

9], Transformer [

10], implicit graph neural networks [

11], etc.

Abstract meaning representation (AMR) parsing [

12], or translating a sentence to a directed acyclic semantic graph with relations among abstract concepts, has made strides in counteracting LDDs in different approaches. In terms of transition-based strategies, Peng et al. [

13] propose a cache system to predict arcs between distant words. In graph-based methods, Cai and Lam [

14] present a graph ↔ sequence iterative inference to overcome inherent defects of the one-pass prediction process in parsing long sentences. In seq2seq-based approaches, Bevilacqua et al. [

15] employ the Transformer-based pre-trained language model, BART [

16], to address LDDs in long sentences. Among these categories, seq2seq-based approaches have become mainstream, and recent parsers [

17,

18,

19,

20] employ the seq2seq architecture with the popular codebase

SPRING [

15], achieving better performance. Notably,

HGAN [

20] integrates token-level features, syntactic dependencies (SDP), and SRL with heterogeneous graph neural networks and has become the state-of-the-art (SOTA) in terms of removing extra silver training data, graph-categorization, and ensemble methods.

However, these AMR parsers still suffer performance degradation when encountering long sentences with deeper AMR graphs [

18,

20] that introduce most LDD cases. We argue that the complexity of the clausal structure inside a sentence is the essence of LDDs, where clauses are the core units of grammar and center on a verb that determines the occurrences of other constituents [

21]. Our intuition is that non-verb words in a clause typically cannot depend on words outside, while dependencies between verbs correspond to the inter-clause relations, resulting in LDDs across clauses [

22].

To prove our claim, we demonstrate the AMR graph of a sentence from the AMR 2.0 dataset (

https://catalog.ldc.upenn.edu/LDC2017T10, accessed on 11 June 2022) and distinguish the AMR relation distances in terms of different segment levels (clause/phrase/token) in

Figure 1. Every AMR relation is represented as a dependent edge between two abstract AMR nodes that align to one or more input tokens. The dependency distances of inter-token relations are subtractive results from the indices of tokens aligned to the source and target nodes, while those of inter-phrase and inter-clause relations are calculated by indices of the headwords in phrases and the verbs in clauses, respectively. (The AMR relation distances between a main clause and a relative/appositive clause are decided by the modified noun phrase in the former and the verb in the latter). As can be observed:

Dependency distances of inter-clause relations are typically much longer than those of inter-phrase and inter-token relations, leading to most of the LDD cases. For example, the AMR relation , occurring in the clause “I get very anxious”, and its relative clause “which does sort of go away …”, has a dependency distance of 6 (subtracting the 9th token “anxious” from the 15th token “go”).

Reentrant AMR nodes abstracted from pronouns also lead to far distant AMR relations. For example, the AMR relation has a dependency distance of 33 (subtracting the 1st token “I” from the 34th token “wait”).

Based on the findings above, we are inspired to utilize the clausal features of a sentence to cure LDDs. Rhetorical structure theory (RST) [

23] provides a general way to describe the coherence relations among clauses and some phrases, i.e., elementary discourse units, and postulates a hierarchical discourse structure called discourse tree. Except for RST, a novel clausal feature, hierarchical clause annotation (HCA) [

24], also captures a tree structure of a complex sentence, where the leaves are segmented clauses and the edges are the inter-clause relations.

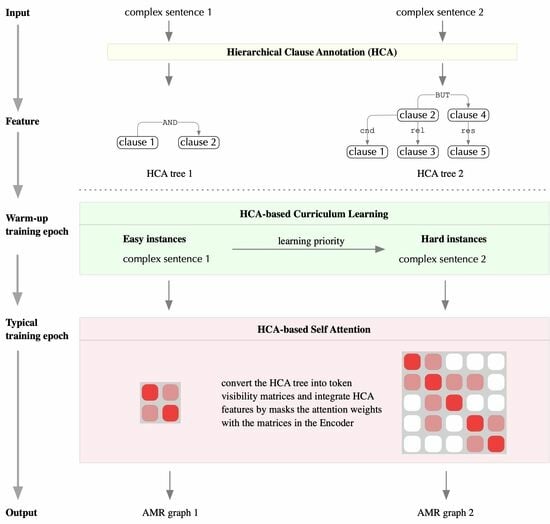

Due to the better parsing performances of the clausal structure [

24], we select and integrate the HCA trees of complex sentences to cure LDDs in AMR parsing. Specifically, we propose two HCA-based approaches, HCA-based self-attention (HCA-SA) and HCA-based curriculum learning (HCA-CL), to integrate the HCA trees as clausal features in the popular AMR parsing codebase,

SPRING [

15]. In HCA-SA, we convert an HCA tree into a clause adjacency matrix and a token visibility matrix to restrict the attention scores between tokens from unrelated clauses and increase those from related clauses in masked-self-attention encoder layers. In HCA-CL, we employ curriculum learning with two training curricula, Clause-Number and Tree-Depth, with the assumption that “the more clauses or the deeper clausal tree in a sentence, the more difficult it is to learn”.

We conduct extensive experiments on two in-distribution (ID) AMR datasets (i.e., AMR 2.0 and AMR 3.0 (

https://catalog.ldc.upenn.edu/LDC2020T02, accessed on 11 June 2022)) and three out-of-distribution (OOD) ones (i.e., TLP, New3, and Bio) to evaluate our two HCA-based approaches. In ID datasets, our parser achieves a 0.7 Smatch F1 score improvement against the baseline model,

SPRING, on both AMR 2.0 and AMR 3.0, and outperforms the SOTA parser,

HGAN, by 0.5 and 0.6 F1 scores for the fine-grained metric

SRL in the two datasets. Notably, as the clause number of the sentence increases, our parser outperforms

SPRING by a large margin and achieves better Smatch F1 scores than

HGAN, indicating the ability to cure LDDs. In OOD datasets, the performance boosts achieved by our HCA-based approaches are more evident in complicated corpora like New3 and Bio, where sentences consist of more clauses and longer clauses. Our code is publicly available at

https://github.com/MetroVancloud/HCA-AMRparsing (accessed on 3 August 2023).

The rest of this paper is organized as follows. The related works are summarized in

Section 2, and the proposed approaches are detailed in

Section 3. Then, the experiments of AMR parsing are presented in

Section 4. Next, the discussion of the experimental results is presented in

Section 5. Finally, our work is concluded in

Section 6.

4. Experiments

In this section, we describe the details of datasets, environments, model hyperparameters, evaluation metrics, compared models, and parsing results for the experiments.

4.1. Datasets

For the benchmark datasets, we choose two standard AMR datasets, AMR 2.0 and AMR 3.0, as the ID settings and three test sets, TLP, New3, and Bio, as the OOD settings.

For the HCA tree of each sentence, we use the manually annotated HCA trees for AMR 2.0 provided by [

24] and auto-annotated HCA trees for the remaining datasets, which were all generated by the HCA Segmenter and the HCA Parser proposed by [

24].

4.1.1. In-Distribution Datasets

We first train and evaluate our HCA-based parser on two standard AMR parsing evaluation benchmarks:

AMR 2.0 includes 39,260 sentence–AMR pairs in which source sentences are collected from: the DARPA BOLT and DEFT programs, transcripts and English translations of Mandarin Chinese broadcast news programming from China Central TV, text from the Wall Street Journal, translated Xinhua news texts, various newswire data from NIST OpenMT evaluations, and weblog data used in the DARPA GALE program.

AMR 3.0 is a superset of AMR 2.0 and enriches the data instances to 59,255. New source data added to AMR 3.0 include sentences from Aesop’s Fables, parallel text and the situation frame dataset developed by LDC for the DARPA LORELEI program, and lead sentences from Wikipedia articles about named entities.

The training, development, and test sets in both datasets are a random split, and therefore we take them as ID datasets as in previous works [

15,

17,

18,

20,

45].

4.1.2. Out-of-Distribution Datasets

To further estimate the effects of our HCA-based approaches on open-world data that come from a different distribution, we follow the OOD settings introduced by [

15] and predict based on three OOD test sets with the parser trained on the AMR 2.0 training set:

New3 (Accessed at

https://catalog.ldc.upenn.edu/LDC2020T02, accessed on 11 August 2022): A set of 527 instances from AMR 3.0, whose source was the LORELEI DARPA project (not included in the AMR 2.0 training set) consisting of excerpts from newswire and online forums.

TLP (Accessed at

https://amr.isi.edu/download/amr-bank-struct-v3.0.txt, accessed on 12 October 2022; note that the annotators did not mention the translators of this English version applied in the annotation): The full AMR-tagged children’s novel written by Antoine de Saint-Exupéry,

The Little Prince (version 3.0), consisting of 1562 pairs.

4.1.3. Hierarchical Clause Annotations

For the hierarchical clausal features utilized in our HCA-based approaches, we use the manually annotated HCA corpus for AMR 2.0 provided in [

24]. Moreover, we employ the HCA segmenter and the HCA parser proposed in [

24] to generate silver HCA trees for AMR 3.0 and three OOD test sets. Detailed statistics of the evaluation datasets in this paper are listed in

Table 1.

4.2. Baseline and Compared Models

We compare our HCA-based AMR parser with several recent parsers:

AMR-gs (2020) [

14], a graph-based parser that enhances incremental graph construction with an AMR graph ↔ sequence (

AMR-gs) iterative inference mechanism in one-stage procedures.

APT (2021) [

44], a transition-based parser that employs an action-pointer transformer (APT) to decouple source tokens from node representations and address alignments.

StructBART (2021) [

45], a transition-based parser that integrates the pre-trained language model, BART, for structured fine-tuning.

SPRING (2021) [

15], a fine-tuned BART model that predicts a linearized AMR graph.

HCL (2022) [

18], a hierarchical curriculum learning (

HCL) framework that helps the seq2seq model adapt to the AMR hierarchy.

ANCES (2022) [

17], a seq2seq-based parser that adds the important ancestor (

ANCES) information into the Transformer decoder.

HGAN (2022) [

20], a seq2seq-based parser that applies a heterogeneous graph attention network (

HGAN) to argument word representations with syntactic dependencies and semantic role labeling of input sentences. It is also the current SOTA parser in the settings of removing graph re-categorization, extra silver training data, and ensemble methods.

Since

SPRING provides a clear and efficient seq2seq-based architecture based on a vanilla BART, recent seq2seq-based models

HCA,

ANCES, and

HGAN all select it as the codebase. Therefore, we also choose

SRPING as the baseline model to apply our HCA-based approaches. Additionally, we do not take the competitive AMR parser,

ATP [

19], into consideration for our compared models since it employs syntactic dependency parsing and semantic role labeling as intermediate tasks to introduce extra silver training data.

4.3. Hyper-Parameters

For the hyper-parameters of our HCA-based approaches, we list their layer, name, and value in

Table 2. To pick the hyper-parameters employed in the HCA-SA Encoder, i.e.,

,

, and

, we use a random search with a total of 16 trials in their search spaces (

: [0.1, 0.6],

: [0.4, 0.9],

: [0.7, 1.0], and stride +0.1). According to the results of these experimental trials, we selected the final pick for each hyper-parameter. All models are trained until reaching their maximum epochs, and then we select the best model checkpoint on the development set.

4.4. Evaluation Metrics

Following previous AMR parsing works, we use Smatch scores [

57] and fine-grained metrics [

58] to evaluate the performances. Specifically, the fine-grained AMR metrics are:

- 1.

Unlabeled (Unlab.): Smatch computed on the predicted graphs after removing all edge labels.

- 2.

No WSD (NoWSD): Smatch while ignoring Propbank senses (e.g., “go-01” vs. “go-02”).

- 3.

Named entity (NER): F-score on the named entity recognition (:name roles).

- 4.

Wikification (Wiki.): F-score on the wikification (:wiki roles).

- 5.

Negation (Neg.): F-score on the negation detection (:polarity roles).

- 6.

Concepts (Conc.): F-score on the concept identification task.

- 7.

Reentrancy (

Reent.): Smatch computed on reentrant edges only, e.g., the edges of node “I” in

Figure 1.

- 8.

Semantic role labelings (SRL): Smatch computed on :ARGi roles only.

As suggested in [

18],

Unlab.,

Reent, and

SRL are considered to be structure-dependent metrics, since:

Unlab. does not consider any edge labels and only considers the graph structure;

Reent. is a typical structure feature for the AMR graph. Without reentrant edges, the AMR graph is reduced to a tree;

SRL denotes the core-semantic relation of the AMR, which determines the core structure of the AMR.

Conversely, all other metrics are classified as structure-independent metrics.

4.5. Experimental Environments

Table 3 lists the information on the main hardware and software used in our experimental environments. Note that the model in AMR 2.0 is trained for a total of 30 epochs for 16 h, while the model trained in AMR 3.0 is finished in a total of 30 epochs for 28 h given the experimental environments.

4.6. Experimental Results

We now report the AMR parsing performances of our HCA-based parser and other comparison parsers on ID datasets and OOD datasets, respectively.

4.6.1. Results in ID Datasets

As demonstrated in

Table 4, we report AMR parsing performances of the baseline model (

SPRING), other compared parsers, and the modified

SPRING that applies our HCA-based self-attention (

HCA-SA) and curriculum learning (

HCA-CL) approaches on ID datasets AMR 2.0 and AMR 3.0. All the results of our HCA-based model have averaged scores of five experimental trials, and we compute the significance of performance differences using the non-parametric approximate randomization test [

59]. From the results, we can make the following observations:

Equipped with our HCA-SA and HCA-CL approaches, the baseline model SPRING achieves a 0.7 Smatch F1 score improvement on both AMR 2.0 and AMR 3.0. The improvements are significant, with and , respectively.

In AMR 2.0, our HCA-based model outperforms all compared models except ANCES and the HGAN version that introduces both DP and SRL features.

In AMR 3.0, consisting of more sentences with HCA trees, the performance gap between our HCA-based parser and the SOTA (HGAN with DP and SRL) is only a 0.2 Smatch F1 score.

To better analyze how the performance improvements of the baseline model are achieved when applying our HCA-based approaches, we also report structure-dependent fine-grained results in

Table 4. As claimed in

Section 1, inter-clause relations in the HCA can bring LDD issues, which are typically related to AMR concept nodes aligned with verb phrases and reflected in structure-dependent metrics. As can be observed:

Our HCA-based model outperforms the baseline model in nearly all fine-grained metrics, especially in structure-dependent metrics, with 1.1, 1.8, and 3.9 F1 score improvements in Unlab., Reent., and SRL, respectively.

In the , , and metrics, our HCA-based model achieves the best performance against all compared models.

4.6.2. Results in OOD Datasets

As demonstrated in

Table 5, we report the parsing performances of our HCA-based model and compared models on three OOD datasets. As can be seen:

Our HCA-based model outperforms the baseline model SPRING with 2.5, 0.7, and 3.1 Smatch F1 score improvements in the New3, TLP, and Bio test sets, respectively.

In the New3 and Bio datasets that contain long sentences of newswire and biomedical texts and have more HCA trees, our HCA-based model outperforms all compared models.

In the TLP dataset that contains many simple sentences of a children’s story and fewer HCA trees, our HCA-based does not perform as well as HCL and HGAN.

5. Discussion

As shown in the previous section, our HCA-based model achieves prominent improvements against the baseline model, SPRING, and outperforms other compared models, including the SOTA model HGAN in some fine-grained metrics in ID and ODD datasets. In this section, we further discuss the paper’s main issue of whether our HCA-based approaches have any effect on curing LDDs. Additionally, the ablation studies and the case studies are also provided.

5.1. Effects on Long-Distance Dependencies in ID Datasets

As claimed in

Section 1, most LDD cases occur in sentences with complex hierarchical clause structures. In

Figure 5, we demonstrate the parsing performance trends of the baseline model

SPRING, the SOTA parser

HGAN (we only use the original data published in their paper to draw performance trends over the number of tokens, without performances in terms of the number of clauses), and our HCA-based model over the number of tokens and clauses in sentences from AMR 2.0. It can be observed that:

When the number of tokens (denoted as #Token for simplicity ) >20 in a sentence, the performance boost of our HCA-based model against the baseline SPRING gradually becomes significant.

For the case #Token > 50 that indicates sentences with many clauses and inter-clause relations, our HCA-based model outperforms both SPRING and HGAN.

When compared with performances trends for #Clause, the performance lead of our HCA-based model against SPRING becomes much more evident as #Clause increases.

To summarize, our HCA-based approaches show significant effectiveness on long sentences with complex clausal structures that introduce most LDD cases.

5.2. Effects on Long-Distance Dependencies in OOD Datasets

As the performance improvements achieved by our HCA-based approaches are much more prominent in OOD datasets than in ID datasets, we further explore the OOD datasets with different characteristics.

Figure 6 demonstrates the two main statistics of three OOD datasets, i.e., the average number of clauses per sentence (denoted as

) and the average number of tokens per clause (

). These two statistics of the datasets both characterize the complexity of the clausal structure inside a sentence, where

We also present the performance boosts of our HCA-based parser against

SPRING in

Figure 6. As can be observed, the higher the values of

and

in an OOD dataset, the higher the Smatch improvements that are achieved by our HCA-based approaches. Specifically, New3 and Bio seem to cover more complex texts from newswire and biomedical articles, while TLP contains simpler sentences that are easy for children to read. Therefore, our AMR parser performs much better on complex sentences from Bio and New3, indicating the effectiveness of our HCA-based approaches on LDDs.

5.3. Ablation Study

In the HCA-SA approach, two token visibility matrices derived from HCA trees are introduced to mask certain attention heads. Additionally, we propose a clause-relation-bound attention head setting to integrate inter-clause relations in the encoder. Therefore, we conduct ablation studies by introducing random token visibility matrices (denoted as “w/o VisMask”) and removing the clause-relation-bound attention setting (denoted as “w/o ClauRel”). Note that “w/o VisMask” contains the case of “w/o ClauRel” because the clause-relation-bound attention setting is based on the masked-self-attention mechanism.

In the HCA-CL approach, extra training epochs for Clause-Number and Tree-Depth curricula serve as a warm-up stage for the subsequent training process. To eliminate the effect of the extra epochs, we also add the same number of training epochs to the ablation study of our HCA-CL approach.

In HCA-SA, the clause-relation-bound attention setting (denoted as “ClauRel”) contributes most in the SRL metric due to the mappings between inter-clause relations (e.g., Subjective and Objective) and SRL-type AMR relations (e.g., :ARG0 and :ARG1).

In HCA-SA, the masked-self-attention mechanism (denoted as “VisMask”) achieves significant improvements in the Reent. metric by increasing the visibility of pronoun tokens to all tokens.

In HCA-CL, the Tree-Depth curriculum (denoted as “TD”) has no effects on the parsing performances. We conjecture that sentences with much deeper clausal structures are rare, and the number of split buckets for the depth of clausal trees is not big enough to distinguish the training sentences.

5.4. Case Study

To further demonstrate the effectiveness of our HCA-based approaches on LDDs in AMR parsing, we compare the output AMR graphs of the same example sentence in

Figure 1 parsed by the baseline model

SPRING and by the modified

SPRING that applies our HCA-SA and HCA-CL approaches (denoted as

Ours), respectively, in

Figure 7.

For SPRING, it mislabels node “go-02” in subgraph as the :ARG1 role of node “contrast-01”. Then, it fails to realize that it is “anxious” in that takes the :ARG1 role of “go-02” in . Additionally, the causality between and is not interpreted correctly due to the absence of node “cause-01” and its arguments.

In contrast, when integrating the HCA, Ours seems to understand the inter-clause relations better. Although “possible-01” in subgraph is mislabeled as the :ARG2 role of node “contrast-01”, it succeeds in avoiding the errors made by SPRING. Another mistake in Ours is that the relation :quant between “much” and “anxiety” is reversed and replaced by :domain, which barely impacts the Smatch F1 scores. The vast performance gap between SPRING and our HCA-based SPRING in Smatch F1 scores (66.8% vs. 88.7%) also proves the effectiveness of the HCA on LDDs in AMR parsing.