Spline Function Simulation Data Generation for Walking Motion Using Foot-Mounted Inertial Sensors

Abstract

1. Introduction

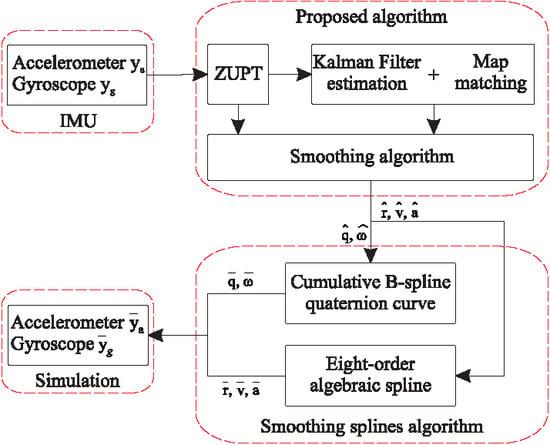

2. Smoothing Algorithm with Waypoint-Based Map Matching

2.1. Standard Inertial Navigation Using an Indirect Kalman Filter

2.2. Path Identification

2.3. Initial Yaw Angle Adjustment

3. Spline Function Computation

3.1. Cumulative B-Splines Quaternion Curve

3.2. Eighth-Order Algebraic Splines

4. Experiment and Results

4.1. Walking along a Rectangular Path

4.2. Walking along a 3D Indoor Environment

4.3. Evaluation of Simulation Data Usefulness

4.3.1. Effects of Sampling Rate on the Estimation Performance

4.3.2. Gyroscope Bias Effect

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Parameters | Values | Related Equations |
|---|---|---|
| | 0.0015 | (2) |
| | 0.00001 | |
| | 0.8 | (9) |
| | 1.5 | |
| | 30 | |
| | 0.01 | (10) |
| | 0.0004 | (12) |
| | 0.0004 | (13) |
| | | (15) |
| | 1 | (26) |
| | 0.00001 | |

| Sampling Rate | Mean of (m) | | Radius a (m) | Radius b (m) |
|---|---|---|---|---|
| 50 Hz | −1.5217 | −0.5428 | 0.7605 | 0.0215 |
| 100 Hz | 0.6639 | 0.4745 | 0.0554 | 0.0034 |
| 150 Hz | 0.6955 | 0.4558 | 0.0594 | 0.0032 |
| 200 Hz | 0.7156 | 0.4520 | 0.0582 | 0.0042 |

| | Mean of (m) | | Radius a (m) | Radius b (m) |
|---|---|---|---|---|
| 0 | 0.7023 | 0.6122 | 0.133 | 0.0226 |
| 0.00001 | 0.6568 | 0.4696 | 0.0595 | 0.0035 |
| 0.0001 | −0.2038 | −0.0280 | 0.5952 | 0.0309 |
| 0.001 | −6.3768 | 0.5568 | 3.5123 | 1.0306 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pham, T.T.; Suh, Y.S. Spline Function Simulation Data Generation for Walking Motion Using Foot-Mounted Inertial Sensors. Electronics 2019, 8, 18. https://doi.org/10.3390/electronics8010018
