Emergency Support Unmanned Aerial Vehicle for Forest Fire Surveillance

Abstract

1. Introduction

2. Related Work

3. Hardware Architecture

3.1. Payload

- Optical sensors: The UAV was equipped with two types of camera, which gathered detailed information about the fire and its surroundings.

- RGB monocular camera: records HD video at 60 fps.

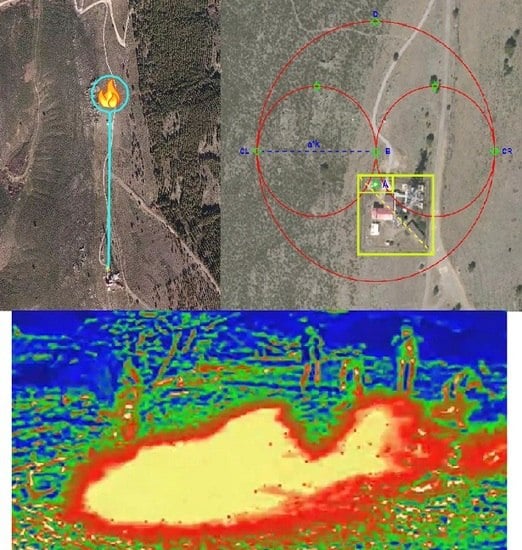

- Thermal camera FLIR AX5 series: this camera collected thermal images of the fire (Figure 4), which provided information about the temperature of the different fire hotspots. Its compact size, its wide range of resolutions and aspect ratios, and its compatibility with different software made it well suited to operation on board UAVs.

- Digital temperature sensors DS18B20: a set of five DS18B20 digital temperature sensors distributed along the UAV body, providing temperature data at different points of the UAV.

- Onboard embedded unit: All processing was performed on board by an Intel NUC embedded computer with an Intel i7-7567U CPU at 3.5 GHz and 8 GB of RAM. The software was developed and integrated with ROS Kinetic on the Ubuntu 16.04 LTS operating system.

- Communications module: A communications system was required to transmit data between the UAV and the ground station. To avoid range limitations and interference caused by occlusions, a 3G/4G modem was chosen, ensuring communication in a wide range of situations.
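The DS18B20 reports temperature in a 16-bit two's-complement register with 0.0625 °C per least significant bit at its default 12-bit resolution; at 9 to 11 bits the lowest bits are undefined. The paper does not state how the five body sensors were read, so the following is only a minimal Python sketch assuming the raw register values are already available; the function names and sensor labels are hypothetical.

```python
from typing import Dict, Tuple


def ds18b20_raw_to_celsius(raw: int, resolution_bits: int = 12) -> float:
    """Convert a DS18B20 16-bit temperature register value to degrees Celsius."""
    if not 9 <= resolution_bits <= 12:
        raise ValueError("DS18B20 resolution must be 9 to 12 bits")
    raw &= 0xFFFF
    # Interpret the register as a signed 16-bit (two's-complement) value.
    if raw & 0x8000:
        raw -= 0x10000
    # Below 12-bit resolution the lowest bits are undefined: clear them.
    undefined_bits = 12 - resolution_bits
    raw &= ~((1 << undefined_bits) - 1)
    # One LSB corresponds to 1/16 degC.
    return raw / 16.0


def hottest_sensor(raw_readings: Dict[str, int]) -> Tuple[str, float]:
    """Return (sensor name, temperature) of the hottest point on the airframe."""
    if not raw_readings:
        raise ValueError("no sensor readings")
    temps = {name: ds18b20_raw_to_celsius(raw) for name, raw in raw_readings.items()}
    name = max(temps, key=temps.get)
    return name, temps[name]
```

For example, the datasheet register value 0xFF5E converts to -10.125 °C and 0x07D0 to 125 °C.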

3.2. Charging Base

4. Software Architecture

4.1. Autonomous Navigation

- Fire alert: While on standby, the UAV receives an alert message containing the position of the center of the fire in UTM coordinates. This alert is generated by a system of several thermal cameras (Figure 7b) installed at the top of a telecommunication tower, as shown in Figure 7a, which provides a view of the surroundings within a short time interval. This system detects fires within a radius of 3.5 km around the tower. In this phase, the software establishes communication between the fire detection system and the UAV.

- Take-off: Once the UAV receives the alert message, the path generation algorithm estimates the corresponding waypoints from the UAV's initial UTM coordinates and the fire location. As shown in Figure 8, the generated path starts with a safe take-off from the starting point to point B, a point away from the base at altitude h that lies within the collision-free area of the base. Once point B is reached, the algorithm generates two safety points whose placement depends on the fire location. These points are calculated from a distance a, measured from the furthest point of possible collision with respect to the base, to which a safety coefficient k is applied. After that, the algorithm creates a circular path. Once either safety point is reached, the algorithm generates the trajectory from that point to point D (the location of the center of the fire).

- Generation of the path: After the take-off maneuver is completed, the algorithm generates a list of waypoints from several trajectories, as explained in Algorithm 1. These waypoints are expressed in meters relative to the initial (take-off) position.
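The waypoint generation of Algorithm 1, whose steps are described next, can be sketched in Python under several assumptions. The fire position is assumed to arrive in the same UTM zone as the take-off point, so local offsets in meters are plain coordinate differences; the UAV is assumed to fly straight to the nearest point of a circular orbit around the fire, complete one full orbit, and return along the approach path reversed. The function names, the parameters (orbit radius, linear step, step angle) and the single full orbit are illustrative choices, not the authors' exact equations, and the take-off safety points are omitted.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x/easting, y/northing) in meters


def utm_to_local(utm: Point, origin_utm: Point) -> Point:
    """Offset of a UTM position from a reference position, in meters.

    Assumes both positions lie in the same UTM zone.
    """
    return (utm[0] - origin_utm[0], utm[1] - origin_utm[1])


def within_detection_range(fire_utm: Point, tower_utm: Point,
                           radius_m: float = 3500.0) -> bool:
    """True if the fire lies inside the tower's 3.5 km detection radius."""
    dx, dy = utm_to_local(fire_utm, tower_utm)
    return math.hypot(dx, dy) <= radius_m


def generate_waypoints(start: Point, fire: Point, radius: float,
                       step: float, step_angle: float) -> List[Point]:
    """Approach path, one full orbit around the fire, then the return path.

    start, fire: local coordinates in meters relative to the take-off point.
    radius: orbit radius; step: maximum spacing of straight-line waypoints;
    step_angle: angular spacing (radians) between orbit waypoints.
    """
    dist = math.hypot(fire[0] - start[0], fire[1] - start[1])
    if dist <= radius:
        raise ValueError("start position must lie outside the orbit")
    # Angle of the line from the start position to the fire center.
    theta = math.atan2(fire[1] - start[1], fire[0] - start[0])
    # Entry point: the point of the orbit closest to the start position.
    entry = (fire[0] - radius * math.cos(theta),
             fire[1] - radius * math.sin(theta))

    # 1) Straight approach from start to entry, points at most `step` m apart.
    n_line = max(1, math.ceil((dist - radius) / step - 1e-9))
    approach = [(start[0] + (entry[0] - start[0]) * i / n_line,
                 start[1] + (entry[1] - start[1]) * i / n_line)
                for i in range(1, n_line + 1)]

    # 2) One full counter-clockwise orbit, starting and ending at entry.
    phi0 = theta + math.pi  # angle of the entry point seen from the fire
    n_orbit = max(1, math.ceil(2 * math.pi / step_angle - 1e-9))
    orbit = [(fire[0] + radius * math.cos(phi0 + 2 * math.pi * i / n_orbit),
              fire[1] + radius * math.sin(phi0 + 2 * math.pi * i / n_orbit))
             for i in range(1, n_orbit + 1)]

    # 3) Return path: the approach path in the opposite direction.
    back = approach[-2::-1] + [(start[0], start[1])]

    return approach + orbit + back
```

For example, `generate_waypoints((0.0, 0.0), (100.0, 0.0), radius=20.0, step=10.0, step_angle=math.radians(30))` yields 8 approach points, 12 orbit points and 8 return points, the last of which is the take-off position.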

Algorithm 1: Trajectory generation.

As shown in Figure 9, the first trajectory is the path from the initial UAV position to a point located on the border of the orbit. This point is computed from the coordinates of the center of the orbit and the orbit radius r. Then, the first path from the initial position to the orbit is calculated from the linear distance between consecutive trajectory points and the angle between the center of the fire and the initial position, as shown in Figure 10. The next step is to calculate the orbit path, which is defined by the conjugate of that angle, as shown in Figure 10, and by the step angle between consecutive orbit points. Finally, the last path is the same as the first path, traversed in the opposite direction.

- Tracking: Lastly, an algorithm is needed that receives this list of waypoints and verifies whether the UAV reaches each of them. Algorithm 2 describes the waypoint-following process.

Algorithm 2: Waypoint following.
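A generic waypoint-following loop, in the spirit of Algorithm 2, can be sketched in Python. It assumes that a waypoint counts as reached when the UAV is within a horizontal acceptance radius of it; the class name, the `acceptance_radius` parameter and the point-mass simulation (which only stands in for the real autopilot) are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]


class WaypointFollower:
    """Tracks progress through a list of waypoints (illustrative sketch)."""

    def __init__(self, waypoints: List[Point], acceptance_radius: float = 1.0):
        if acceptance_radius <= 0:
            raise ValueError("acceptance_radius must be positive")
        self.waypoints = list(waypoints)
        self.acceptance_radius = acceptance_radius
        self.index = 0  # index of the current target waypoint

    @property
    def finished(self) -> bool:
        return self.index >= len(self.waypoints)

    def update(self, position: Point) -> Optional[Point]:
        """Skip every waypoint already reached; return the next target or None."""
        while not self.finished:
            tx, ty = self.waypoints[self.index]
            if math.hypot(tx - position[0], ty - position[1]) > self.acceptance_radius:
                return self.waypoints[self.index]
            self.index += 1  # waypoint reached: advance to the next one
        return None


def simulate(waypoints: List[Point], start: Point = (0.0, 0.0), speed: float = 2.0,
             dt: float = 0.5, acceptance_radius: float = 1.0, max_steps: int = 10_000):
    """Fly a point-mass UAV straight toward each target at constant speed."""
    follower = WaypointFollower(waypoints, acceptance_radius)
    x, y = start
    steps = 0
    target = follower.update((x, y))
    while target is not None and steps < max_steps:
        dx, dy = target[0] - x, target[1] - y
        dist = math.hypot(dx, dy)  # > acceptance_radius > 0, so never zero
        move = min(speed * dt, dist)
        x += move * dx / dist
        y += move * dy / dist
        steps += 1
        target = follower.update((x, y))
    return follower.finished, (x, y), steps
```

Skipping several waypoints in one update keeps the follower from stalling when two consecutive waypoints are closer together than the acceptance radius.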

4.2. Graphical User Interface

- Optical sensors: This group consists of two displays showing the color and thermal images. Both images are compressed so that they can be transmitted with minimal delay.

- Autopilot information: The second group displays data from the autopilot, such as GPS position and altitude, providing information about the flight status.

- Positioning map: This group shows the UAV position on a satellite map. Moreover, navigation algorithms allow the operator at the ground station to add new waypoints to the generated path, if required.

- Temperature sensors: The last group shows the temperature of different parts of the UAV, so that its operating conditions can be monitored.

5. Experimental Results

5.1. Scenario

5.2. Mission and Results

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| | Average 2007–2016 | 2017 |
|---|---|---|
| Number of fires < 1 ha | 8228 | 8705 |
| Number of fires ≥ 1 ha | 4135 | 5088 |
| Total | 12,363 | 13,793 |

| | Average 2007–2016 | 2017 |
|---|---|---|
| Burnt area of other wooded land (ha) | 27,226.41 | 66,839.02 |
| Burnt area of forest (ha) | 91,846.74 | 178,233.93 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Al-Kaff, A.; Madridano, Á.; Campos, S.; García, F.; Martín, D.; de la Escalera, A. Emergency Support Unmanned Aerial Vehicle for Forest Fire Surveillance. Electronics 2020, 9, 260. https://doi.org/10.3390/electronics9020260
