2. Characterizations of Operators with Null Kernel

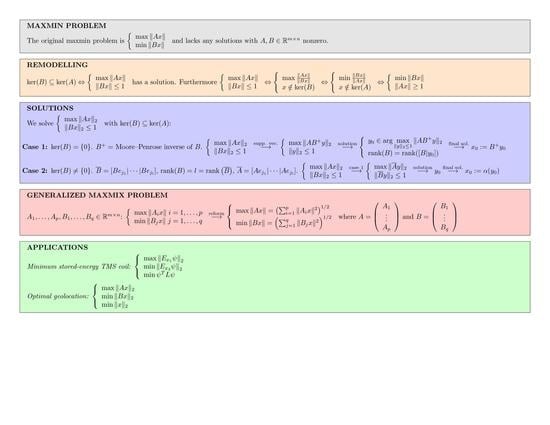

Kernels will play a fundamental role towards solving the general reformulated maxmin (2), as shown in the next section. This is why we first study the operators with null kernel.

Throughout this section, all monoid actions considered will be left, all rings will be associative, all rings will be unitary rngs, all absolute semi-values and all semi-norms will be non-zero, all modules over rings will be unital, all normed spaces will be real or complex and all algebras will be unitary and complex.

Given a rng R and an element $s \in R$, we will denote by to the set of left divisors of s, that is,

Similarly, stands for the set of right divisors of s. If R is a ring, then the set of its invertibles is usually denoted by . Notice that () is precisely the subset of elements of R which are right-(left) invertible. As a consequence, . Observe also that . In general we have that and . Later on in Example 1 we will provide an example of a ring where .

Recall that an element p of a monoid is called involutive if $p^2 = 1$. Given a rng R, an involution is an additive, antimultiplicative, composition-involutive map $*\colon R \to R$. A $*$-rng is a rng endowed with an involution.

The categorical concept of monomorphism will play an important role in this manuscript. A morphism $f \in \hom(A,B)$ between objects A and B in a category is said to be a monomorphism provided that $f \circ g = f \circ h$ implies $g = h$ for all objects $C$ and all $g, h \in \hom(C,A)$. One can check that if $f \in \hom(A,B)$ and there exist an object $C$ and $g \in \hom(B,C)$ such that $g \circ f$ is a monomorphism, then f is also a monomorphism. In particular, if $f \in \hom(A,B)$ is a section, that is, there exists $g \in \hom(B,A)$ such that $g \circ f = I_A$, then f is a monomorphism. As a consequence, the elements of $\hom(A,B)$ that have a left inverse are monomorphisms. In some categories, the last condition suffices to characterize monomorphisms. This is the case, for instance, of the category of vector spaces over a division ring.

Recall that denotes the space of continuous linear operators from a topological vector space X to another topological vector space Y.

Proposition 1. A continuous linear operator between locally convex Hausdorff topological vector spaces X and Y verifies that if and only if there exists with . In particular, if , then if and only if in .

Proof. Let such that . Fix any , then and so . Conversely, if , then fix and (the existence of is guaranteed by the Hahn-Banach Theorem on the Hausdorff locally convex topological vector space Y). Next, consider

Notice that and . □

Theorem 1. Let $T\colon X \to Y$ be a continuous linear operator between locally convex Hausdorff topological vector spaces X and Y. Then:

1. If T is a section, then $\ker(T) = \{0\}$.
2. In case X and Y are Banach spaces, $T(X)$ is topologically complemented in Y and $\ker(T) = \{0\}$, then T is a section.

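Proof. 1. Trivial since sections are monomorphisms.

2. Consider $T\colon X \to T(X)$. Since $T(X)$ is topologically complemented in Y, we have that $T(X)$ is closed in Y, thus it is a Banach space. Therefore, the Open Mapping Theorem assures that $T\colon X \to T(X)$ is an isomorphism. Let $T^{-1}\colon T(X) \to X$ be the inverse of $T\colon X \to T(X)$. Now consider $p\colon Y \to Y$ to be a continuous linear projection such that $p(Y) = T(X)$. Finally, it suffices to define $L := T^{-1} \circ p$ since $L \circ T = I_X$.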

□

We will finalize this section with a trivial example of a matrix such that .

Example 1. It is not hard to check that thus A is left-invertible by Theorem 1(2) and so . In fact,

4. Solving the Maxmin Problem Subject to

We will distinguish between two cases.

4.1. First Case: S Is an Isomorphism Over Its Image

By bearing in mind Theorem 5, we can focus on the first reformulation proposed at the beginning of the previous section:

The idea we propose to solve the previous reformulation is to make use of supporting vectors (see [7,8,9,10]). Recall that if $R\colon X \to Y$ is a continuous linear operator between Banach spaces, then the set of supporting vectors of R is defined by

$$\operatorname{suppv}(R) := \arg\max_{\|x\| \leq 1} \|R(x)\|.$$

The idea of using supporting vectors is that the optimization problem

$$\begin{cases} \max \|R(x)\| \\ \|x\| \leq 1 \end{cases}$$

whose solutions are by definition the supporting vectors of R, can be easily solved theoretically and computationally (see [8]).
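As a rough finite-dimensional illustration, the supporting vectors of a matrix can be sketched numerically. The block below assumes the Euclidean norm on both spaces, in which case the maximum of $\|Rx\|_2$ over the unit ball is the largest singular value and is attained at the first right singular vector. The helper name `supporting_vector` is hypothetical, and this is not necessarily the procedure used in [8].

```python
import numpy as np

def supporting_vector(R):
    """Return (x, value) with ||x||_2 = 1 and ||R x||_2 = value = ||R||_2.

    Assumption: Euclidean norms on the domain and the codomain.
    """
    # Rows of Vt are the right singular vectors; singular values are sorted
    # in decreasing order, so index 0 corresponds to the operator norm.
    _, singular_values, Vt = np.linalg.svd(R)
    return Vt[0], singular_values[0]

# Small example: the norm of this diagonal matrix is attained along the first axis.
R = np.array([[3.0, 0.0],
              [0.0, 1.0]])
x, value = supporting_vector(R)
```

Other norms generally require a different argument; the SVD shortcut is specific to the Euclidean case.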

Our first result towards this direction considers the case where S is an isomorphism over its image.

Theorem 6. Let where X and Y are Banach spaces. Suppose that S is an isomorphism over its image and $S^{-1}\colon S(X) \to X$ denotes its inverse. Suppose also that $S(X)$ is complemented in Y, being $p\colon Y \to Y$ a continuous linear projection onto $S(X)$. Then If, in addition, $\|p\| = 1$, then

Proof. Let . We will show that . Indeed, let with . Since , by assumption we obtain

Now assume that . We will show that

Let , we will show that . Indeed, let . Observe that

so by assumption

□

Notice that, in the settings of Theorem 6, is a left-inverse of S, in other words, S is a section, as in Theorem 1(2).

Taking into consideration that every closed subspace of a Hilbert space is 1-complemented (see [11,12] to realize that this fact characterizes Hilbert spaces of dimension $\geq 3$), we directly obtain the following corollary.

Corollary 1. Let where X is a Banach space and Y a Hilbert space. Suppose that S is an isomorphism over its image and let be its inverse. Then where is the orthogonal projection on .
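The change of variable behind this case can be sketched numerically in finite dimensions. The block below rests on several assumptions that are not taken from the statement above: the problem is read as maximizing $\|Ax\|_2$ subject to $\|Bx\|_2 \leq 1$, all norms are Euclidean, and $B$ has null kernel. The function name `solve_maxmin` is hypothetical. Writing the thin SVD $B = U \Sigma V^{T}$ and substituting $x = V \Sigma^{-1} w$ gives $\|Bx\|_2 = \|w\|_2$, so the constraint becomes the unit ball in $w$ and a supporting vector of $A V \Sigma^{-1}$ yields a solution.

```python
import numpy as np

def solve_maxmin(A, B):
    """Sketch: maximize ||A x||_2 subject to ||B x||_2 <= 1 (Euclidean norms assumed)."""
    # Thin SVD of B; a null kernel means every singular value is positive.
    _, s, Vt = np.linalg.svd(B, full_matrices=False)
    if s.min() <= 1e-12 * s.max():
        raise ValueError("B is assumed to have null kernel")
    # T = V diag(1/s): column j of V is divided by s[j], so ||B (T w)||_2 = ||w||_2.
    T = Vt.T / s
    # The top right singular vector of A T maximizes ||A T w||_2 over ||w||_2 <= 1.
    _, sigma, Wt = np.linalg.svd(A @ T)
    x = T @ Wt[0]
    return x, sigma[0]

A = np.array([[1.0, 0.0],
              [0.0, 2.0]])
B = np.array([[2.0, 0.0],
              [0.0, 1.0],
              [0.0, 0.0]])
x, value = solve_maxmin(A, B)
```

In this example the constraint reads $4x_1^2 + x_2^2 \leq 1$, and the maximum of $\|Ax\|_2$ is attained at $x = (0, \pm 1)$.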

4.2. The Moore–Penrose Inverse

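If $B \in \mathbb{R}^{m \times n}$, then the Moore–Penrose inverse of B, denoted by $B^{+}$, is the only matrix $B^{+} \in \mathbb{R}^{n \times m}$ which verifies the following:

$B B^{+} B = B$.

$B^{+} B B^{+} = B^{+}$.

$(B B^{+})^{T} = B B^{+}$.

$(B^{+} B)^{T} = B^{+} B$.

If $\ker(B) = \{0\}$, then $B^{+}$ is a left-inverse of B. Even more, $B B^{+}$ is the orthogonal projection onto the range of B, thus we have the following result from Corollary 1.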
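These two properties of the Moore–Penrose inverse are easy to check numerically. The following minimal sketch uses `numpy.linalg.pinv` on an illustrative matrix with null kernel (the matrix itself is an arbitrary choice, not taken from the text) and forms the product on each side.

```python
import numpy as np

# Illustrative 3x2 matrix with linearly independent columns (null kernel).
B = np.array([[1.0, 0.0],
              [1.0, 1.0],
              [0.0, 2.0]])

B_pinv = np.linalg.pinv(B)      # Moore-Penrose inverse, shape (2, 3)
left_identity = B_pinv @ B      # should be the 2x2 identity
P = B @ B_pinv                  # should be the orthogonal projection onto range(B)

# An orthogonal projection is symmetric and idempotent, and it fixes range(B).
is_symmetric = np.allclose(P, P.T)
is_idempotent = np.allclose(P @ P, P)
fixes_range = np.allclose(P @ B, B)
```

When the kernel of the matrix is not null, the first product is no longer the identity, so the left-inverse property is specific to the null-kernel case.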

Corollary 2. Let such that . Then

According to the previous corollary, in its settings, if and there exists such that , then and can be computed as

4.3. Second Case: S Is Not an Isomorphism Over Its Image

What happens if S is not an isomorphism over its image? The next theorem answers this question.

Theorem 7. Let where X and Y are Banach spaces. Suppose that . If denotes the quotient map, then

Proof. Let . Fix an arbitrary with . Then

therefore

This shows that . Conversely, let

Fix an arbitrary with . Then

therefore

This shows that . □

Please note that in the settings of Theorem 7, if is closed in Y, then is an isomorphism over its image , and thus in this case Theorem 7 reduces the reformulated maxmin to Theorem 6.

4.4. Characterizing When the Finite Dimensional Reformulated Maxmin Has a Solution

The final part of this section is aimed at characterizing when the finite dimensional reformulated maxmin has a solution.

Lemma 3. Let be a bounded operator between finite dimensional Banach spaces X and Y. If is a sequence in , then there is a sequence in so that is bounded.

Proof. Consider the linear operator

Please note that for all , therefore the sequence is bounded in because is finite dimensional and has null kernel so its inverse is continuous. Finally, choose such that for all . □

Lemma 4. Let . If , then A is bounded on and attains its maximum on that set.

Proof. Let be a sequence in . In accordance with Lemma 3, there exists a sequence in such that is bounded. Since by hypothesis (recall that ), we conclude that A is bounded on . Finally, let be a sequence in such that as . Please note that for all , so is bounded in and so is in . Fix such that for all . This means that is a bounded sequence in so we can extract a convergent subsequence to some . At this stage, notice that for all and converges to , so . Note also that, since , converges to , which implies that

□

Theorem 8. Let . The reformulated maxmin problem has a solution if and only if .

Proof. If , then we just need to call on Lemma 4. Conversely, if , then it suffices to consider the sequence for , since for all and as . □
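The dichotomy in this proof can be illustrated numerically. The sketch below assumes, since the formulas are not reproduced here, that the condition in question is the kernel inclusion $\ker(B) \subseteq \ker(A)$ and that the problem maximizes $\|Ax\|$ subject to $\|Bx\| \leq 1$. The helper `kernel_included` is hypothetical; it relies on the linear algebra fact that $\ker(B) \subseteq \ker(A)$ holds exactly when stacking $A$ under $B$ does not increase the rank.

```python
import numpy as np

def kernel_included(B, A):
    """True when ker(B) is contained in ker(A).

    Uses: ker(B) is a subset of ker(A) iff rank([B; A]) == rank(B).
    """
    stacked = np.vstack([B, A])
    return np.linalg.matrix_rank(stacked) == np.linalg.matrix_rank(B)

B = np.array([[1.0, 0.0]])          # ker(B) is spanned by (0, 1)
A_ok = np.array([[2.0, 0.0]])       # vanishes on ker(B)
A_bad = np.array([[0.0, 1.0]])      # does not vanish on ker(B)

included_ok = kernel_included(B, A_ok)
included_bad = kernel_included(B, A_bad)

# When the inclusion fails, multiples of a vector in ker(B) stay feasible
# while the objective grows without bound.
x0 = np.array([0.0, 1.0])
objective_along_ray = [float(np.linalg.norm(A_bad @ (n * x0))) for n in (1, 10, 100)]
constraint_along_ray = [float(np.linalg.norm(B @ (n * x0))) for n in (1, 10, 100)]
```

The rank test uses the default tolerance of `numpy.linalg.matrix_rank`, so nearly dependent rows may need a tolerance chosen for the data at hand.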

4.5. Matrices on Quotient Spaces

Consider the maxmin

where X and Y are Banach spaces and with . Notice that if is a Hamel basis of X, then is a generator system of . By making use of Zorn's Lemma, it can be shown that contains a Hamel basis of . Observe that a subset C of is linearly independent if and only if is a linearly independent subset of Y.

In the finite dimensional case, we have and

If denotes the canonical basis of , then is a generator system of . This generator system contains a basis of so let be a basis of . Please note that and for every . Therefore, the matrix associated with the linear map defined by can be obtained from the matrix B by removing the columns corresponding to the indices , in other words, the matrix associated with is . Similarly, the matrix associated with the linear map defined by is . As we mentioned above, recall that a subset C of is linearly independent if and only if is a linearly independent subset of . As a consequence, in order to obtain the basis , it suffices to look at the rank of B and consider the columns of B that allow such rank, which automatically gives us the matrix associated with , that is, .
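The column selection described above can be sketched as follows. This is a minimal illustration with a hypothetical helper `independent_columns`: it scans the columns of B from left to right and keeps a column whenever it raises the rank, which is one simple way, though not necessarily the authors', to pick columns that realize the rank of B.

```python
import numpy as np

def independent_columns(B):
    """Greedily select indices of columns of B that form a basis of its column space."""
    chosen = []
    rank = 0
    for j in range(B.shape[1]):
        candidate = chosen + [j]
        # Keep column j only if it is independent of the columns kept so far.
        if np.linalg.matrix_rank(B[:, candidate]) > rank:
            chosen = candidate
            rank += 1
    return chosen

# The second column is twice the first, so it is discarded.
B = np.array([[1.0, 2.0, 0.0],
              [0.0, 0.0, 1.0],
              [1.0, 2.0, 1.0]])
kept = independent_columns(B)
B_reduced = B[:, kept]   # reduced matrix with null kernel and the same range as B
```

A rank-revealing factorization such as a pivoted QR would be a more robust choice for ill-conditioned matrices; the greedy scan is kept here because it mirrors the description in the text.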

Finally, let denote the quotient map. Let . If , then . The vector defined by

verifies that

To simplify the notation, we can define the map

where z is the vector described right above.