The Academy Color Encoding System (ACES): A Professional Color-Management Framework for Production, Post-Production and Archival of Still and Motion Pictures

Abstract

1. Introduction

- sharing financial and content-security risks,

- reducing production times, and

- improving the realistic outcome of the overall Computer-Generated Imagery (CGI), thanks to the differentiation of assets among several artists and VFX companies.

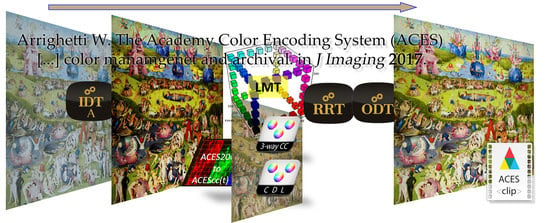

2. The Color Pipeline in the Post-Production and VFX Industry

2.1. Input Colorimetry

2.2. Creative-Process Colorimetry

2.3. Output Colorimetry

2.4. Digital Color Grading Process

3. ACES Components

3.1. Reference Implementation

- The ACES documentation is mostly public domain [1,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60], plus a group of Society of Motion Picture and Television Engineers (SMPTE) standards that were assigned the ST2065 family name [61,62,63,64,65], plus an application of a different SMPTE standard [66] and two external drafts hosted on GitHub [67,68]. The SMPTE documents are technical standards mainly intended for vendors and manufacturers building ACES-compliant hardware and software products; they are not needed by creatives and engineers who just want to use ACES in their workflows and pipelines across production, postproduction and VFX, as this framework was designed with low- and mid-level budget productions in mind. ACES requires neither major-studio budgets nor top-notch engineers [15].

- The development codebase [43] is an open-source implementation of ACES color encodings (in the CTL language, cfr. Section 3.7), metrics and file formats. It is OS-neutral (although meant to be compiled primarily on Linux and macOS), written in C++ plus a few Python scripts, and depends on a few additional open-source libraries [69]. The executables are not intended for production use (they are neither optimized for performance or batch/volume usage, nor do they have ergonomic interfaces, being mostly command-line utilities) but rather for validating the compliance of third-party products against a reference, as specified at the beginning of the paragraph.

- A collection of reference images: a deck of still photographs of several categories of subjects in diverse lighting conditions, encoded in different ACES color-spaces (cfr. Section 3.3) and stored in selected file formats such as OpenEXR, TIFF and DPX [69,70,71]. Together with the above codebase, ACES vendors and users are expected to test their products and workflows on these images and compare them with their own rendered pictures for accuracy in different conditions.

3.2. Product Partners and the Logo Program

3.3. ACES Color Spaces

- A significant area of the AP0 gamut falls outside the average observer’s gamut (i.e., it contains imaginary colors), so many CVs are “lost” when these primaries are used in a color-space; moreover, most of the in-gamut chromaticities cannot be captured or displayed by current technologies (as of 2017). Thus, a higher bit-depth may be needed to retain the same CV density within a “usable” gamut.

- Since no colorimetric cinema camera exists (yet), while ACES colorimetry is defined with reference to one, the correspondence between the real tristimuli captured from a scene and the recorded CVs (even before conversion into ACES colorimetry) depends on the sensitometry chosen by the manufacturers of today’s real cameras (or on the emulated physics of the CGI application’s lighting engine).

- ACES2065-1 is the main color-space of the whole framework and the one using AP0 primaries, since it is meant for short- and long-term storage as well as file archival of footage. It has a linear transfer characteristic and should be digitally encoded with floating-point CVs of at least 16 bits/channel precision, according to [73].

- ACEScg was specifically designed as a working color-space for CGI applications [74], and it should be the standard working color-space for internal operations that still need a linear-to-light transfer characteristic for physics-, optics- or lighting-based simulations. It differs from ACES2065-1 only in its use of AP1 primaries; conversion to it is done via the linear isomorphism represented by Equation (3). Encoding uses 16 or 32 bits/channel floating-point CVs (“floats”).

- ACEScc was designed to help with color-correction applications: a specifically crafted spline-logarithmic transfer function, Equation (4), whose inverse is Equation (5), supports color-grading operators; it applies identically to all RGB channels after a color-space conversion to AP1 via Equation (3). Digital encoding, for FPU or GPU processing [34], is in either 16 or 32 bits/channel floats.

- ACEScct is an alternative color-grading space to ACEScc, designed with a different linear/logarithmic spline curve, Equation (6) instead of (4), which results in a distinct “milky” look in the shadows due to the additional toe added in that range. This characteristic was introduced following many colorists’ requests for a “log” working space more like those used in traditional film color-grading, with a similar and vendor-neutral feeling/response when manipulating control surfaces, cfr. Section 2.4 and Figure 3. ACEScc and ACEScct are identical for ACES2065-1 CVs above 0.0078125, although their black pedestals differ (cfr. Table 3 and Figure 9a).

- ACESproxy was introduced to work either with devices transporting video signals (with integer CV encoding) or with intermediate hardware that supports integer-based arithmetic only (instead of floating-point) [34]. The former category includes video-broadcast equipment based on the Serial Digital Interface (SDI); the latter includes LUT boxes and reference monitors. Such professional encodings are implemented in either 10 or 12 bits/channel, therefore two isomorphic flavors exist: ACESproxy10 and ACESproxy12. This is the encoding of choice as long as it is used only for transporting video signals to endpoint devices (and for processing intended for such purposes only), with no signal or data ever stored in, or re-converted back from, ACESproxy. By design, it is an integer epimorphism of ACEScc (WARNING: not of ACEScct); it also scales CVs to video-legal levels [34], as shown in Figure 9b, for compatibility with broadcast equipment, which may apply legalization or clipping along its internal signal paths. The conversion from ACES2065-1 is done by applying (3) first, followed by either one of the two functions in Equation (7) (red for 10 bits/channel or blue for 12 bits/channel).

- ADX is different from all the other color-spaces, is reserved for film-based workflows, and will be discussed in Section 3.11.
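The ACEScc and ACEScct transfer functions described above can be sketched as follows. This is a minimal Python illustration, not the reference CTL code: it assumes the constants of the ACEScc [50] and ACEScct [52] specifications (log2 scaling by 9.72 and 17.52, a 2^−16 floor for ACEScc, and a straight-line toe for ACEScct below the linear value 0.0078125), takes AP1-linear input (i.e., after Equation (3)), and simply caps decoded values at the largest half float, 65504.

```python
import math

HALF_MAX = 65504.0  # largest finite 16-bit half float


def lin_to_acescc(x):
    """AP1-linear value -> ACEScc (pure log segment above 2**-15)."""
    if x <= 0.0:
        return (math.log2(2.0 ** -16) + 9.72) / 17.52
    if x < 2.0 ** -15:
        # near-zero region: smooth roll-off towards the 2**-16 floor
        return (math.log2(2.0 ** -16 + x * 0.5) + 9.72) / 17.52
    return (math.log2(x) + 9.72) / 17.52


def acescc_to_lin(cc):
    """ACEScc -> AP1-linear value (inverse of lin_to_acescc)."""
    if cc <= (9.72 - 15.0) / 17.52:
        return (2.0 ** (cc * 17.52 - 9.72) - 2.0 ** -16) * 2.0
    if cc < (math.log2(HALF_MAX) + 9.72) / 17.52:
        return 2.0 ** (cc * 17.52 - 9.72)
    return HALF_MAX


# ACEScct: same log segment as ACEScc, but a linear "toe" below X_BRK
X_BRK = 0.0078125            # 2**-7, linear break point
Y_BRK = 0.155251141552511    # ACEScct value at X_BRK
A = 10.5402377416545         # slope of the linear toe
B = 0.0729055341958355       # ACEScct value at linear 0 (black pedestal)


def lin_to_acescct(x):
    """AP1-linear value -> ACEScct."""
    if x <= X_BRK:
        return A * x + B
    return (math.log2(x) + 9.72) / 17.52


def acescct_to_lin(cct):
    """ACEScct -> AP1-linear value (inverse of lin_to_acescct)."""
    if cct <= Y_BRK:
        return (cct - B) / A
    if cct < (math.log2(HALF_MAX) + 9.72) / 17.52:
        return 2.0 ** (cct * 17.52 - 9.72)
    return HALF_MAX
```

With these functions, `lin_to_acescc(0.18)` gives about 0.4136 and `lin_to_acescct(0.18)` gives the same value, whereas at linear 0 ACEScc returns about −0.358 and ACEScct about 0.073: the two curves share the logarithmic segment and differ only in how they approach black, which is the different black pedestal mentioned above.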

3.4. Entering ACES

- sensitivity, measured in EI (ISO exposure index),

- correlated color temperature (CCT), measured in Kelvin or, equivalently,

- generic shooting illumination conditions (e.g., daylight, tungsten light,…),

- presence of special optics and/or filters along the optic path,

- emulation of the sensor’s gamut of some cameras (e.g., redColor, dragonColor, S-Log),

3.5. Viewing and Delivering ACES

3.6. Creative Intent in ACES: The Transport of “Color Look” Metadata

3.7. Color Transformation Language

- used in most ODTs (e.g., white roll, black/white points, dim/dark surround conversion);

- the RRT itself, including generic tone-scale and spline functions contained together with it;

- basic color-space linear conversion formulas (plus colorimetry constants);

- functions from linear algebra and calculus (plus a few mathematical constants).

3.8. CommonLUT Format

- combined, single-process computation of ASC CDL, RGB matrix, 1D + 3D LUT and range scaling;

- algorithms for linear/cubic (1D LUT) as well as trilinear/tetrahedral (3D LUT) interpolations;

- support for LUT shapers (cfr. Section 2.4) as well as integer and floating-point arithmetic.
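To illustrate the 3D-LUT interpolation mentioned above, the following minimal Python sketch performs tetrahedral interpolation on a cube LUT stored as a nested list `lut[r][g][b]` of output RGB triplets. The in-memory layout, the [0, 1] input domain and the helper names are assumptions for illustration only; CLF's own XML layout and index ordering are not reproduced here.

```python
import math


def tetrahedral(lut, rgb):
    """Tetrahedral interpolation of a cube 3D LUT with n >= 2 points per axis."""
    n = len(lut)
    base, frac = [], []
    for v in rgb:
        x = min(max(v, 0.0), 1.0) * (n - 1)   # clamp to the LUT domain, scale to lattice
        i = min(math.floor(x), n - 2)         # lower lattice index of the cell
        base.append(i)
        frac.append(x - i)
    r0, g0, b0 = base
    fr, fg, fb = frac

    def c(dr, dg, db):
        return lut[r0 + dr][g0 + dg][b0 + db]

    # Pick one of the six tetrahedra of the cell by ordering fr, fg, fb;
    # each tetrahedron runs from c(0,0,0) to c(1,1,1) along one monotone path.
    if fr > fg:
        if fg > fb:
            w = [(1 - fr, c(0, 0, 0)), (fr - fg, c(1, 0, 0)), (fg - fb, c(1, 1, 0)), (fb, c(1, 1, 1))]
        elif fr > fb:
            w = [(1 - fr, c(0, 0, 0)), (fr - fb, c(1, 0, 0)), (fb - fg, c(1, 0, 1)), (fg, c(1, 1, 1))]
        else:
            w = [(1 - fb, c(0, 0, 0)), (fb - fr, c(0, 0, 1)), (fr - fg, c(1, 0, 1)), (fg, c(1, 1, 1))]
    else:
        if fb > fg:
            w = [(1 - fb, c(0, 0, 0)), (fb - fg, c(0, 0, 1)), (fg - fr, c(0, 1, 1)), (fr, c(1, 1, 1))]
        elif fb > fr:
            w = [(1 - fg, c(0, 0, 0)), (fg - fb, c(0, 1, 0)), (fb - fr, c(0, 1, 1)), (fr, c(1, 1, 1))]
        else:
            w = [(1 - fg, c(0, 0, 0)), (fg - fr, c(0, 1, 0)), (fr - fb, c(1, 1, 0)), (fb, c(1, 1, 1))]
    return tuple(sum(weight * node[k] for weight, node in w) for k in range(3))


def identity_lut(n):
    """Hypothetical helper: an n x n x n identity LUT, useful for testing."""
    s = n - 1
    return [[[(r / s, g / s, b / s) for b in range(n)] for g in range(n)] for r in range(n)]
```

For example, `tetrahedral(identity_lut(17), (0.3, 0.7, 0.1))` returns approximately (0.3, 0.7, 0.1), since tetrahedral interpolation reproduces any affine mapping exactly up to float rounding. Compared with trilinear interpolation, each lookup blends 4 instead of 8 lattice points, and equal inputs (r = g = b) are interpolated only from lattice points on the grey diagonal, which keeps neutrals neutral when the LUT's diagonal is neutral.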

3.9. ACESclip: A Sidecar for Video Footage

- reference to the clip itself by means of its filename(s) and/or other UIDs/UUIDs;

- reference to the Input Transform either used to process the clip in the past or intended for entering the clip into an ACES pipeline in the future;

- reference to LMTs that were applied during the lifecycle of the clip, with explicit indication of whether each is burnt into the asset’s CVs or is a metadata association only;

- in case of “exotic” workflows, the Output Transform(s) used to process and/or view the clip.

- the clip’s color pedigree, i.e., full history of the clip’s past color-transformations (e.g., images rendered in several passages and undergoing different technical and creative color transforms);

- extending the ACESclip XML dialect with other production metadata potentially useful in different parts of a complete postproduction/VFX/versioning workflow (frame range/number, framerate, clip-/tape-name, TimeCode/KeyKode, frame/pixel format, authoring and © info, …).
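The sidecar contents listed above map naturally onto a small data structure. The Python sketch below models a subset of them and serializes it to XML with the standard library; all class, field and element names are hypothetical illustrations, not the ACESclip schema defined in [58].

```python
from dataclasses import dataclass, field
import xml.etree.ElementTree as ET


@dataclass
class LookTransform:
    name: str    # identifier of the LMT (hypothetical naming)
    baked: bool  # True if burnt into the CVs, False if a metadata association only


@dataclass
class ClipSidecar:
    clip_files: list        # filename(s) of the clip
    clip_uuid: str          # UID/UUID of the clip
    input_transform: str    # Input Transform used, or intended, for the clip
    looks: list = field(default_factory=list)              # LookTransform items
    output_transforms: list = field(default_factory=list)  # only for "exotic" workflows

    def to_xml(self) -> str:
        # NOTE: element names below are hypothetical, not the TB-2014-009 schema.
        root = ET.Element("ClipSidecar")
        clip = ET.SubElement(root, "Clip", uuid=self.clip_uuid)
        for name in self.clip_files:
            ET.SubElement(clip, "File").text = name
        ET.SubElement(root, "InputTransform").text = self.input_transform
        for lmt in self.looks:
            ET.SubElement(root, "LookTransform", baked=str(lmt.baked).lower()).text = lmt.name
        for ot in self.output_transforms:
            ET.SubElement(root, "OutputTransform").text = ot
        return ET.tostring(root, encoding="unicode")
```

Keeping the "baked" flag per LMT is what lets a later stage decide whether a look must still be applied or has already been burnt into the pixels.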

3.10. Storage and Archival

- video tracks (monoscopic or S3D stereoscopic);

- sound groups, as separate sets of audio tracks, each with possibly multiple channels (e.g., a sound group may have 3 audio tracks: one has “5.1” = 6 discrete channels, one 2 discrete “stereo” channels and the other a Dolby-E® dual-channel—all with different mixes of the same content);

- TT tracks (e.g., subtitles, closed captions, deaf-&-hard-of-hearing, forced narratives, …);

- one Packing List (PKL) as the inventory of files (assets) belonging to the same IMP, listing their filenames, UUIDs, hash digests and sizes;

- one Composition Play-List (CPL) describing how PKL assets are laid out onto a virtual timeline;

- Output Profile List(s) (OPLs) each describing one output format rendering the IMP into a master.
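The PKL inventory described above (filename, UUID, hash digest and size for each asset) can be sketched in Python with the standard library. The sketch assumes a base64-encoded SHA-1 digest, as customarily used in IMF and D-Cinema packing lists; the record layout and helper names are hypothetical, and the actual PKL is an XML document whose schema is not reproduced here.

```python
import base64
import hashlib
import os
import uuid


def pkl_entry(path, chunk_size=1 << 20):
    """Return one PKL-style inventory record for the file at `path`."""
    sha1 = hashlib.sha1()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            sha1.update(chunk)  # stream large MXF track files in 1 MiB chunks
    return {
        "id": "urn:uuid:" + str(uuid.uuid4()),  # fresh UUID for the asset
        "filename": os.path.basename(path),
        "size": os.path.getsize(path),          # size in bytes
        "hash": base64.b64encode(sha1.digest()).decode("ascii"),
    }


def packing_list(paths):
    """Inventory of all assets belonging to one IMP (hypothetical helper)."""
    return [pkl_entry(p) for p in paths]
```

Hashing in chunks means multi-gigabyte track files never need to fit in memory; the digest lets the receiving facility verify each asset before the CPL is played out.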

3.11. ACES Integration with Photochemical Film Process

4. The ACES Color Pipeline, from Theory to Practice

5. Use Case of an End-to-End ACES Workflow in a Full-Feature Film

5.1. Production Workflow and Color Metadata Path

- filesystem naming-convention enforced for input (VFX plate footage) and output files (renders);

- OpenColorIO set as color-management system, with ACES 1.0.3 configuration, cfr. Section 3.1;

- all color-spaces for input footage, optional CGI models, CDLs and render files, automatically set;

- customizations for the specific artist assigned to the job (name, OS, workplace UI, …);

- customizations/metadata for the specific job type (rotoscoping, “prep”, compositing, …);

- set up of a render node that writes both the full-resolution composited plates (as an ST2065-4-compliant EXR sequence) and a reference QuickTime-wrapped Apple® ProRes 4444 with an informative slate at the beginning, used to previsualize VFX progress in a calibrated UHD TV room;

- set up of a viewing path that includes the CDL for each plate node (with implicit ACEScg-to-ACEScc conversion), just before the Viewer node (implicit Output Transform to the monitor);

- set up of an alternative viewing path using a Baselight for Nuke plugin, to previsualize the specific secondary color-corrections from the finishing department (read below).
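The viewing path above applies each plate's CDL in ACEScc while the plates themselves live in ACEScg. A per-pixel Python sketch of that round trip follows; it assumes the ACEScc constants of [50] and the ASC CDL slope/offset/power/saturation formulas [33], with Rec.709 luma weights for saturation. Function names are hypothetical, and negative values are passed through the power stage unchanged, which is an assumption, since clamping conventions differ between CDL implementations.

```python
import math

HALF_MAX = 65504.0


def lin_to_acescc(x):
    """AP1-linear (e.g. ACEScg) value -> ACEScc."""
    if x <= 0.0:
        return (-16.0 + 9.72) / 17.52
    if x < 2.0 ** -15:
        return (math.log2(2.0 ** -16 + x * 0.5) + 9.72) / 17.52
    return (math.log2(x) + 9.72) / 17.52


def acescc_to_lin(cc):
    """ACEScc -> AP1-linear value, capped at the largest half float."""
    if cc <= (9.72 - 15.0) / 17.52:
        return (2.0 ** (cc * 17.52 - 9.72) - 2.0 ** -16) * 2.0
    return min(2.0 ** (cc * 17.52 - 9.72), HALF_MAX)


def asc_cdl(rgb, slope, offset, power, sat):
    """ASC CDL: per-channel slope, offset and power, then saturation."""
    sop = []
    for v, s, o, p in zip(rgb, slope, offset, power):
        t = v * s + o
        # Assumption: negative values bypass the power stage unchanged.
        sop.append(t ** p if t >= 0.0 else t)
    luma = 0.2126 * sop[0] + 0.7152 * sop[1] + 0.0722 * sop[2]  # Rec.709 weights
    return [luma + sat * (c - luma) for c in sop]


def grade_acescg_pixel(rgb_cg, slope, offset, power, sat):
    """ACEScg -> ACEScc, apply the CDL, then back to ACEScg (AP1 throughout)."""
    cc = [lin_to_acescc(v) for v in rgb_cg]
    graded = asc_cdl(cc, slope, offset, power, sat)
    return [acescc_to_lin(v) for v in graded]
```

Because ACEScc is log2-based, an offset of 0.1 applied in ACEScc corresponds to an exposure change of 0.1 × 17.52 ≈ 1.75 stops in linear ACEScg (for values on the log segment), which is why offsets behave like exposure controls in this space.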

5.2. Camera Department Test

- EXR sequence (ST2065-4-compliant, original resolution), without {1} and with {2} baked CDL;

- DPX sequence, resampled to 2K and Rec.709 color-space, without {3} and with {4} baked CDL.

5.3. VFX Department Test

- EXR sequence (ST2065-4-compliant), exported by Baselight {5}, with and without baked CDL {6};

- Nuke render of sequence {5} with composited 3D asset {7}, and with baked CDL {8}.

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

References

- AMPAS. Academy Color Encoding System (ACES) Documentation Guide; Technical Bulletin TB-2014-001; AMPAS: Beverly Hills, CA, USA, 2014; Available online: j.mp/TB-2014-001 (accessed on 20 September 2017).

- Arrighetti, W. Motion Picture Colour Science and film ‘Look’: The maths behind ACES 1.0 and colour grading. Color Cult. Sci. 2015, 4, 14–21. Available online: jcolore.gruppodelcolore.it/numeri/online/R0415 (accessed on 20 September 2017).

- Okun, J.A.; Zwerman, S.; Rafferty, K.; Squires, S. The VES Handbook of Visual Effects, 2nd ed.; Okun, J.A., Zwerman, S., Eds.; Focal Press: Waltham, MA, USA, 2015; ISBN 978-1-138-01289-9.

- Academy Web Page on ACES. Available online: www.oscars.org/science-technology/sci-tech-projects/aces (accessed on 20 September 2017).

- Pierotti, F. The color turn. L’impatto digitale sul colore cinematografico. Bianco Nero 2014, 580, 26–34.

- MPAA. Theatrical Marketing Statistics; Dodd, C.J., Ed.; MPAA Report; Motion Picture Association of America: Washington, DC, USA, 2016; Available online: www.mpaa.org/wp-content/uploads/2017/03/MPAA-Theatrical-Market-Statistics-2016_Final-1.pdf (accessed on 20 September 2017).

- Anonymous; U.S. Availability of Film and TV Titles in the Digital Age 2016 March; SNL Kagan, S & P Global Market Intelligence. Available online: www.mpaa.org/research-and-reports (accessed on 15 September 2017).

- Mukherjee, D.; Bhasin, R.; Gupta, G.; Kumar, P. And Action! Making Money in the Post-Production Services Industry, A.T. Kearney, 2013. Available online: www.mpaa.org/research-and-reports (accessed on 15 September 2017).

- Arrighetti, W. La Cybersecurity e le nuove tecnologie del Media & Entertainment nel Cloud. Quad. CSCI 2017, 13, in press.

- Storaro, V.; Codelli, L.; Fisher, B. The Art of Cinematography; Albert Skira: Geneva, Switzerland, 1993; ISBN 978-88-572-1753-6.

- Arrighetti, W. New trends in Digital Cinema: From on-set colour grading to ACES. In Proceedings of the CHROMA: Workshop on Colour Image between Motion Pictures and Media, Florence, Italy, 18 September 2013; Arrighetti, W., Pierotti, F., Rizzi, A., Eds.; Available online: chroma.di.unimi.it/inglese/ (accessed on 15 September 2017).

- Salt, B. Film Style and Technology: History and Analysis, 3rd ed.; Starword: London, UK, 2009; ISBN 978-09-509-0665-2.

- Haines, R.W. Technicolor Movies: The History of Dye Transfer Printing; McFarland & Company: Jefferson, NC, USA, 1993; ISBN 978-08-995-0856-6.

- Arrighetti, W.; (Frame by Frame, Rome, Italy); Maltz, A.; (AMPAS, Beverly Hills, CA, USA); Tobenkin, S.; (LeTo Entertainment, Las Vegas, NV, USA). Personal communication, 2016.

- Arrighetti, W.; Giardiello, F.L. ACES 1.0: Theory and Practice, SMPTE Monthly Educational Webcast. Available online: www.smpte.org/education/webcasts/aces-1-theory-and-practice (accessed on 15 September 2017).

- Arrighetti, W. The Academy Color Encoding System (ACES) in a video production and post-production colour pipeline. In Colour and Colorimetry, Proceedings of 11th Italian Color Conference, Milan, Italy, 10–21 September 2015; Rossi, M., Casciani, D., Eds.; Maggioli: Rimini, Italy, 2015; Volume XI/B, pp. 65–75. ISBN 978-88-995-1301-6.

- Stump, D. Digital Cinematography; Focal Press: Waltham, MA, USA, 2014; ISBN 978-02-408-1791-0.

- Poynton, C. Digital Video and HD: Algorithms and Interfaces, 2nd ed.; Elsevier: Amsterdam, The Netherlands, 2012; ISBN 978-01-239-1926-7.

- Reinhard, E.; Khan, E.A.; Akyüz, A.O.; Johnson, G.M. Color Imaging: Fundamentals and Applications; A.K. Peters Ltd.: Natick, MA, USA, 2008; ISBN 978-1-56881-344-8.

- Arrighetti, W. Colour Management in motion picture and television industries. In Color and Colorimetry, Proceedings of 7th Italian Color Conference, Rome, Italy, 15–16 September 2011; Rossi, M., Ed.; Maggioli: Rimini, Italy, 2011; Volume VII/B, pp. 63–70. ISBN 978-88-387-6043-3.

- Arrighetti, W. Kernel- and CPU-level architectures for computing and A/V post-production environments. In Communications to SIMAI Congress; DeBernardis, E., Fotia, G., Puccio, L., Eds.; World Scientific: Singapore, 2009; Volume 3, pp. 267–279. Available online: cab.unime.it/journals/index.php/congress/article/view/267 (accessed on 15 September 2017).

- Hullfish, S. The Art and Technique of Digital Color Correction, 2nd ed.; Focal Press: Waltham, MA, USA, 2012; ISBN 978-0-240-81715-6.

- Doyle, P.; Technicolor, London, UK. “Meet the Colourist” Video Interview, FilmLight, 2017.

- IEC. Colour Measurement and Management in Multimedia Systems and Equipment—Part 2-1: Default RGB Colour Space—sRGB; Standard 61966-2-1:1999; IEC: Geneva, Switzerland, 1998.

- ITU. Parameter Values for the HDTV Standards for Production and International Programme Exchange; Recommendation BT.709-6; ITU: Geneva, Switzerland, 2015; Available online: www.itu.int/rec/R-REC-BT.709-6-201506-I (accessed on 20 September 2017).

- SMPTE. D-Cinema Quality—Reference Projector and Environment for the Display of DCDM in Review Rooms and Theaters; Recommended Practice RP431-2; SMPTE: White Plains, NY, USA, 2011.

- ITU. Reference Electro-Optical Transfer Function for Flat Panel Displays Used in HDTV Studio Production; Recommendation BT.1886; ITU: Geneva, Switzerland, 2011; Available online: www.itu.int/rec/R-REC-BT.1886 (accessed on 20 September 2017).

- ITU. Image Parameter Values for High Dynamic Range Television for Use in Production and International Programme Exchange; Recommendation BT.2100; ITU: Geneva, Switzerland, 2016; Available online: www.itu.int/rec/R-REC-BT.2100 (accessed on 20 September 2017).

- SMPTE. High Dynamic Range Electro-Optical Transfer Function of Mastering Reference Displays; Standard ST 2084:2014; SMPTE: White Plains, NY, USA, 2014.

- ITU. Parameter Values for Ultra-High Definition Television Systems for Production and International Programme Exchange; Recommendation BT.2020-2; ITU: Geneva, Switzerland, 2014; Available online: www.itu.int/rec/R-REC-BT.2020 (accessed on 20 September 2017).

- Throup, D. Film in the Digital Age; Quantel: Newbury, UK, 1996.

- CIE. Colorimetry; Standard 11664-6:2014(E); ISO/IEC: Vienna, Austria, 2014.

- Pines, J.; Reisner, D. ASC Color Decision List (ASC CDL) Transfer Functions and Interchange Syntax; Version 1.2; American Society of Cinematographers’ Technology Committee, DI Subcommittee: Los Angeles, CA, USA, 2009.

- Clark, C.; Reisner, D.; Stump, D.; Levinson, L.; Pines, J.; Demos, G.; Benitez, A.; Ollstein, M.; Kennel, G.; Hart, A.; et al. Progress Report: ASC Technology Committee. SMPTE Motion Imaging J. 2007, 116, 345–354.

- Arrighetti, W. Moving picture colour science: The maths behind Colour LUTs, ACES and film “looks”. In Color and Colorimetry, Proceedings of 8th Italian Color Conference, Bologna, Italy, 13–14 September 2012; Rossi, M., Ed.; Maggioli: Rimini, Italy, 2012; Volume VIII/B, pp. 27–34. ISBN 978-88-387-6137-9.

- CIE; International Electrotechnical Commission (IEC). International Lighting Vocabulary, 4th ed.; Publication 17.4; CIE: Geneva, Switzerland, 1987.

- CIE. Chromaticity Difference Specification for Light Sources, 3rd ed.; Technical Note 1:2014; CIE: Geneva, Switzerland, 2004.

- Tooms, M.S. Colour Reproduction in Electronic Imaging Systems: Photography, Television, Cinematography; Wiley: Hoboken, NJ, USA, 2016; ISBN 978-11-190-2176-6.

- SMPTE. Derivation of Basic Television Color Equations; Recommended Practice RP 177:1993; SMPTE: White Plains, NY, USA, 1993.

- Westland, S.; Ripamonti, C.; Cheung, V. Computational Colour Science; Wiley: Hoboken, NJ, USA, 2012; ISBN 978-0-470-66569-5.

- Arrighetti, W. Colour correction calculus (CCC): Engineering the maths behind colour grading. In Colour and Colorimetry, Proceedings of 9th Italian Color Conference, Florence, Italy, 19–20 September 2013; Rossi, M., Ed.; Maggioli: Rimini, Italy, 2013; Volume IX/B, pp. 13–19. ISBN 978-88-387-6242-0.

- Sætervadet, T. FIAF Digital Projection Guide; Indiana University Press: Bloomington, IN, USA, 2012; ISBN 978-29-600-2962-8.

- AMPAS Software Repository on GitHub (Version 1.0.3). Available online: github.com/ampas/aces-dev (accessed on 20 September 2017).

- ACES Central Website. Available online: ACEScentral.com (accessed on 20 September 2017).

- AMPAS. ACES Version 1.0 Component Names; Technical Bulletin TB-2014-012; AMPAS: Beverly Hills, CA, USA, 2014; Available online: j.mp/TB-2014-012 (accessed on 20 September 2017).

- AMPAS. ACES Version 1.0 User Experience Guidelines; Technical Bulletin TB-2014-002; AMPAS: Beverly Hills, CA, USA, 2014; Available online: j.mp/TB-2014-002 (accessed on 20 September 2017).

- AMPAS. ACES—Versioning System; Standard S-2014-002; AMPAS: Beverly Hills, CA, USA, 2014; Available online: j.mp/S-2014-002 (accessed on 20 September 2017).

- AMPAS. Informative Notes on SMPTE ST2065-1—ACES; Technical Bulletin TB-2014-004; AMPAS: Beverly Hills, CA, USA, 2014; Available online: j.mp/S-2014-004 (accessed on 20 September 2017).

- AMPAS. ACEScg—A Working Space for CGI Render and Compositing; Standard S-2014-004; AMPAS: Beverly Hills, CA, USA, 2014; Available online: j.mp/S-2014-004 (accessed on 20 September 2017).

- AMPAS. ACEScc—A Working Logarithmic Encoding of ACES Data for Use within Color Grading Systems; Standard S-2014-003; AMPAS: Beverly Hills, CA, USA, 2014; Available online: j.mp/S-2014-003 (accessed on 20 September 2017).

- Houston, J.; Benitez, A.; Walker, D.; Feeney, R.; Antley, R.; Arrighetti, W.; Barbour, S.; Barton, A.; Borg, L.; Clark, C.; et al. A Common File Format for Look-up Tables; Standard S-2014-006; AMPAS: Beverly Hills, CA, USA, 2014; Available online: j.mp/S-2014-006 (accessed on 20 September 2017).

- AMPAS. ACEScct—A Quasi-Logarithmic Encoding of ACES Data for Use within Color Grading Systems; Standard S-2016-001; AMPAS: Beverly Hills, CA, USA, 2016; Available online: j.mp/S-2016-001 (accessed on 20 September 2017).

- AMPAS. ACESproxy—An Integer Log Encoding of ACES Image Data; Standard S-2013-001; AMPAS: Beverly Hills, CA, USA, 2013; Available online: j.mp/S-2013-001 (accessed on 20 September 2017).

- AMPAS. Informative Notes on SMPTE ST2065-4 [64]; Technical Bulletin TB-2014-005; AMPAS: Beverly Hills, CA, USA, 2014; Available online: j.mp/TB-2014-005 (accessed on 20 September 2017).

- AMPAS. Recommended Procedures for the Creation and Use of Digital Camera System Input Device Transforms (IDTs); Procedure P-2013-001; AMPAS: Beverly Hills, CA, USA, 2013; Available online: j.mp/P-2013-001 (accessed on 20 September 2017).

- AMPAS. Design, Integration and Use of ACES Look Modification Transforms; Technical Bulletin TB-2014-010; AMPAS: Beverly Hills, CA, USA, 2014; Available online: j.mp/TB-2014-010 (accessed on 20 September 2017).

- AMPAS. Alternative ACES Viewing Pipeline User Experience; Technical Bulletin TB-2014-013; AMPAS: Beverly Hills, CA, USA, 2014; Available online: j.mp/TB-2014-013 (accessed on 20 September 2017).

- AMPAS. ACES Clip-Level Metadata File Format Definition and Usage; Technical Bulletin TB-2014-009; Academy of Motion Picture Arts and Sciences: Beverly Hills, CA, USA, 2014; Available online: j.mp/TB-2014-009 (accessed on 20 September 2017).

- AMPAS. Informative Notes on SMPTE ST2065-2 [62] and SMPTE ST2065-3 [63]; Technical Bulletin TB-2014-005; AMPAS: Beverly Hills, CA, USA, 2014; Available online: j.mp/TB-2014-005 (accessed on 20 September 2017).

- AMPAS. Informative Notes on SMPTE ST268:2014—File Format for Digital Moving Picture Exchange (DPX); Technical Bulletin TB-2014-007; AMPAS: Beverly Hills, CA, USA, 2014; Available online: j.mp/TB-2014-007 (accessed on 20 September 2017).

- SMPTE. Academy Color Encoding Specification (ACES); Standard ST 2065-1:2012; SMPTE: White Plains, NY, USA, 2012.

- SMPTE. Academy Printing Density (APD)—Spectral Responsivities, Reference Measurement Device and Spectral Calculation; Standard ST 2065-2:2012; SMPTE: White Plains, NY, USA, 2012.

- SMPTE. Academy Density Exchange Encoding (ADX)—Encoding Academy Printing Density (APD) Values; Standard ST 2065-3:2012; SMPTE: White Plains, NY, USA, 2012.

- SMPTE. ACES Image Container File Layout; Standard ST 2065-4:2013; SMPTE: White Plains, NY, USA, 2013.

- SMPTE. Material Exchange Format—Mapping ACES Image Sequences into the MXF Generic Container; Standard ST 2065-5:2016; SMPTE: White Plains, NY, USA, 2016.

- SMPTE. IMF Application #5—ACES (Working Draft); Standard ST 2067-50; SMPTE: White Plains, NY, USA, 2017.

- Kainz, F.; Kunz, A. Color Transformation Language User Guide and Reference Manual, 2007. Available online: github.com/ampas/CTL (accessed on 20 September 2017).

- Houston, J. A Common File Format for Look-up Tables, Version 1.01; Starwatcher Digital, 2008. Available online: github.com/starwatcherdigital/commonlutformat; http://github.com/ampas/CLF (accessed on 15 September 2017).

- Kainz, F.; Bogart, R.; Stanczyk, P.; Hillman, P. OpenEXR Documentation; Industrial Light & Magic, 2013. Available online: www.openexr.com (accessed on 20 September 2017).

- ITU-T Specification, TIFF™, Revision 6.0; Adobe Systems Incorporated, 1992. Available online: www.itu.int/itudoc/itu-t/com16/tiff-fx/ (accessed on 15 September 2017).

- SMPTE. File Format for Digital Moving Picture Exchange (DPX); Standard ST 268:2014; SMPTE: White Plains, NY, USA, 2014. [Google Scholar]

- OpenColorIO Project Website and GitHub Page; Sony ImageWorks, 2003. Available online: http://www.opencolorio.org; http://github.com/imageworks/OpenColorIO (accessed on 15 September 2017).

- IEEE Computer Society Standards Committee; American National Standards Institute. Standard for Floating-Point Arithmetic; IEEE Standards Board. Standard P754-2008; IEEE: New York, NY, USA, 2008.

- Duiker, H.P.; Forsythe, A.; Dyer, S.; Feeney, R.; McCown, W.; Houston, J.; Maltz, A.; Walker, D. ACEScg: A common color encoding for visual effects applications. In Proceedings of the Digital Symposium on Digital Production (DigiPro’15), Los Angeles, CA, USA, 8 August 2015; Spencer, S., Ed.; ACM: New York, NY, USA, 2015; p. 53, ISBN 978-1-4503-2136-5.

- SMPTE. Mastering Display Color Volume Metadata Supporting High Luminance and Wide Color Gamut Images; Standard ST 2086:2014; SMPTE: White Plains, NY, USA, 2014.

- Arrighetti, W. Topological Calculus: From algebraic topology to electromagnetic fields. In Applied and Industrial Mathematics in Italy, 2nd ed.; Cutello, V., Fotia, G., Puccio, L., Eds.; World Scientific: Singapore, 2007; Volume 2, pp. 78–88.

- Arrighetti, W. Mathematical Models and Methods for Electromagnetics on Fractal Geometries. Ph.D. Thesis, Università degli Studi di Roma “La Sapienza”, Rome, Italy, September 2007.

- SMPTE. ARRIRAW Image File Structure and Interpretation Supporting Deferred Demosaicing to a Logarithmic Encoding; Registered Disclosure Document RDD 30:2014; SMPTE: White Plains, NY, USA, 2014.

- Adobe Systems Incorporated. CinemaDNG Image Data Format Specification, Version 1.1.0.0; Available online: www.adobe.com/devnet/cinemadng.html (accessed on 20 September 2017).

- Arrighetti, W.; (Technicolor, Hollywood, CA, USA); Frith, J.; (MPC, London, UK). Personal communication, 2014.

- SMPTE. Material Exchange Format (MXF)—File Format Specifications; Standard ST 377-1:2011; SMPTE: White Plains, NY, USA, 2011.

- SMPTE. Interoperable Master Format—Core Constraints; Standard ST 2067-2:2016; SMPTE: White Plains, NY, USA, 2016.

- National Association of Photographic Manufacturers, Inc.; American National Standards Institute. Density Measurements—Part 3: Spectral Conditions, 2nd ed.; Standard 5-3:1995(E); ISO: London, UK, 1995.

- Internet Movie Database (IMDb) Page on “Il Ragazzo Invisibile: Seconda Generazione Movie”. Available online: www.imdb.com/title/tt5981944 (accessed on 15 September 2017).

- Arrighetti, W.; (Frame by Frame, Rome, Italy); Giardiello, F.L.; (Kiwii Digital Solutions, London, UK); Tucci, F.; (Kiwii Digital Solutions, Rome, Italy). Personal communication, 2016.

- ShotOnWhat Page on “Il Ragazzo Invisibile: Seconda Generazione Movie”. Available online: shotonwhat.com/il-ragazzo-invisibile-fratelli-2017 (accessed on 15 September 2017).

- The IMF (Interoperable Master Format) User Group Website. Available online: https://imfug.com (accessed on 15 September 2017).

- Netflix Technology Blog on Medium. IMF: A Prescription for “Versionitis”, 2016. Available online: medium.com/netflix-techblog/imf-a-prescription-for-versionitis-e0b4c1865c20 (accessed on 15 September 2017).

- DCI Specification, Version 1.2; Digital Cinema Initiatives (DCI), 2017. Available online: www.dcimovies.com (accessed on 15 September 2017).

- Diehl, E. Securing Digital Video: Techniques for DRM and Content Protection; Springer: Berlin, Germany, 2012; ISBN 978-3-642-17345-5.

- SMPTE. IMF Application #4—Cinema Mezzanine Format; Standard ST 2067-40:2016; SMPTE: White Plains, NY, USA, 2016.

- Mansecal, T.; Mauderer, M.; Parsons, M. Colour-Science.org’s Colour API. Available online: http://colour-science.org; http://github.com/colour-science/colour (accessed on 15 September 2017).

- Arrighetti, W.; Gazzi, C.; (Frame by Frame, Rome, Italy); Zarlenga, E.; (Rome, Italy). Personal communication, 2015.

| Dynamic Range | PC Monitor | HD TV | Cinema | UltraHD TV |
|---|---|---|---|---|
| SDR | sRGB [24] | BT.709³ [25] | DCI P3⁴ [26] | BT.1886 [27] |
| HDR¹ | ―² | BT.2100³ [28] | ST2084⁵ [29] | BT.2020³ [30] and BT.2100³ |

| Gamut Name | Red x | Red y | Green x | Green y | Blue x | Blue y | White-Point | White x | White y |
|---|---|---|---|---|---|---|---|---|---|
| AP0 | 0.7347 | 0.2653 | 0.0 | 1.0 | 0.0001 | −0.0770 | D60 | 0.32168 | 0.33767 |
| AP1 | 0.7130 | 0.2930 | 0.1650 | 0.8300 | 0.1280 | 0.0440 | D60 | 0.32168 | 0.33767 |
| sRGB/BT.709 | 0.6400 | 0.3200 | 0.3000 | 0.6000 | 0.1500 | 0.0600 | D65 | 0.31270 | 0.32900 |
| ROMM RGB | 0.7347 | 0.2653 | 0.1596 | 0.8404 | 0.0366 | 0.0001 | D50 | 0.34567 | 0.35850 |
| CIE RGB | 0.7347 | 0.2653 | 0.2738 | 0.7174 | 0.1666 | 0.0089 | E | 0.33333 | 0.33333 |
| ARRI W.G. | 0.6840 | 0.3130 | 0.2210 | 0.8480 | 0.0861 | −0.1020 | D65 | 0.31270 | 0.32900 |
| DCI P3 | 0.6800 | 0.3200 | 0.2650 | 0.6900 | 0.1500 | 0.0600 | DCI | 0.31400 | 0.35105 |
| BT.2100/2020 | 0.7080 | 0.2920 | 0.1700 | 0.7970 | 0.1310 | 0.0460 | D65 | 0.31270 | 0.32900 |
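The chromaticities in the table above are enough to derive the 3×3 conversion matrices used throughout ACES, such as the AP0-to-AP1 isomorphism of Equation (3). The following Python sketch uses the usual normalized-primary-matrix construction (as in SMPTE RP 177 [39]); helper names are hypothetical, and plain lists are used to keep it dependency-free.

```python
# Chromaticities (x, y) from the table above; AP0 and AP1 share the D60 white.
AP0 = ((0.7347, 0.2653), (0.0, 1.0), (0.0001, -0.0770))
AP1 = ((0.7130, 0.2930), (0.1650, 0.8300), (0.1280, 0.0440))
D60 = (0.32168, 0.33767)


def mat_mul(a, b):
    """3x3 matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def mat_vec(m, v):
    """3x3 matrix times 3-vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def mat_inv(m):
    """3x3 inverse via the adjugate matrix and the determinant."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[x / det for x in row] for row in adj]


def npm(primaries, white):
    """Normalized primary matrix: linear RGB -> CIE XYZ, white mapped to Y = 1."""
    rows = [[x, y, 1.0 - x - y] for x, y in primaries]   # (x, y, z) per primary
    p = [[rows[j][i] for j in range(3)] for i in range(3)]  # primaries as columns
    xw, yw = white
    w = [xw / yw, 1.0, (1.0 - xw - yw) / yw]              # white-point XYZ, Y = 1
    s = mat_vec(mat_inv(p), w)                            # per-primary scale factors
    return [[p[i][j] * s[j] for j in range(3)] for i in range(3)]


def ap0_to_ap1():
    """AP0 -> AP1 matrix: to XYZ with AP0, then back to RGB with AP1."""
    return mat_mul(mat_inv(npm(AP1, D60)), npm(AP0, D60))
```

For instance, `mat_vec(ap0_to_ap1(), [0.18, 0.18, 0.18])` returns approximately [0.18, 0.18, 0.18]: since both spaces share the D60 white, every row of the matrix sums to 1 and neutrals are unchanged. The first row should come out close to the (1.4514, −0.2365, −0.0883) published in the ACEScg specification [49].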

| | ACES2065-1 | ACEScg | ACEScc/ACEScct | ACESproxy | ADX |
|---|---|---|---|---|---|
| primaries | AP0 | AP1 | AP1 | AP1 | APD |
| white-point | D60 | D60 | D60 | D60 | ~Status-M |
| gamma | 1.0 | 1.0 | | | |
| arithmetic | floats 16b | floats 16/32b | floats 16/32b | int. 10/12b | int. 10/16b |
| CV ranges: | Full | Full | Full | SDI-legal | Full |
| legal (IRE) | [−65504.0, 65504.0] | same¹ | [−0.358447, 65504.0]² | [64, 940]³ | [0, 65535]⁴ |
| ±6.5 EV | [0.0019887, 16.2917] | same¹ | [0.042584, 0.78459]² | [101, 751] | ―⁵ |
| 18%, 100% grey | 0.180053711, 1. | same¹ | 0.4135884, 0.5548 | 426, 550³ | ―⁵ |
| Purpose | file interchange; mastering; archival | CGI; compositing | color grading | real-time video transport only | film scans |
| Specification | [1,48,61] | [49] | [50]/[52] | [53] | [54,62,63] |
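Two entries of the ACES color-space table above can be reproduced numerically: the 18% grey value stored as a 16-bit half float in ACES2065-1, and the ACESproxy code values. The sketch below assumes the ACESproxy constants of [53] (50 or 200 code values per stop, mid-grey at 425 or 1700, legal range 64–940 or 256–3760) and round-half-up quantization; Python's `struct` format character `'e'` provides the IEEE 754 binary16 conversion. Function names are hypothetical.

```python
import math
import struct


def to_half(x):
    """Round-trip a Python float through IEEE 754 binary16 (the OpenEXR 'half')."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


# ACESproxy parameters per bit depth: (CV_min, CV_max, steps per stop, mid-grey CV)
PROXY = {10: (64, 940, 50, 425), 12: (256, 3760, 200, 1700)}


def lin_to_acesproxy(x, bits=10):
    """AP1-linear value -> ACESproxy integer code value (video-legal range)."""
    cv_min, cv_max, steps, mid = PROXY[bits]
    if x <= 2.0 ** -9.72:
        return cv_min
    cv = (math.log2(x) + 2.5) * steps + mid
    # round half up, then clip to the legal range
    return max(cv_min, min(cv_max, math.floor(cv + 0.5)))
```

With these assumptions, `to_half(0.18)` gives 0.1800537109375 (the 0.180053711 of the table), while `lin_to_acesproxy(0.18)` and `lin_to_acesproxy(1.0)` give 426 and 550, and ±6.5 stops around 18% grey map to 101 and 751. Note that the threshold 2^−9.72 lands exactly on CV_min for both bit depths, so the clip and the log segment meet without a jump.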

| OpenEXR (ST2065-4) | MXF (ST2065-5) |
|---|---|
| version/endianness/header: 2.0/little/≤1 MiB | essence container: MXF Generic Container |
| attribute structure: name, type name, size, value | mapping kind: ACES Picture Element |
| tiling: scanlines (↑↓ order), pixel-packed | content kind: Frame- or Clip-wrapped as ST2065-4 |
| bit-depth/compression: 16 bpc floats [73]/none | MXF Operational Pattern(s): any |
| channels: (b,g,r) or (α,b,g,r) or S3D: ([α],b,g,r,[left.α],left.b,left.g,left.r) | content package (frame-wrapped): in-sync and unfragmented items of each system/picture/audio/data/compound type |
| color-space: ACES2065-1 [61] | Image Track File’s top-level file package: RGBA Picture Essence |
| raster/ch./file size: ≤4096 × 3112/ ≤8 / >200 MB | channels: (b,g,r) or (α,b,g,r) |
| Mandatory metadata: acesImageContainerFlag=1, adoptedNeutral, channels, chromaticities, compression=0, dataWindow, displayWindow, lineOrder, pixelAspectRatio, screenWindowCenter, screenWindowWidth (stereoscopic images: multiView) | Mandatory essence descriptors: Frame Layout = 0 = full_frame, Video Line Map = [0,0], Aspect Ratio = abs(displayWindow[0]· pixelAspectRatio)/displayWindow[1], Transfer Characteristic = RP224, Color Primaries = AP0 [61], Scanning Direction = 0 |
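The OpenEXR constraints listed above lend themselves to a simple conformance check. The Python sketch below treats an already-parsed header as a plain dictionary (a hypothetical representation; it does not use the OpenEXR library API) and verifies only a subset of the ST2065-4 rules from the table: presence of the mandatory attributes, acesImageContainerFlag = 1, no compression, AP0/D60 chromaticities, and a (B,G,R) or (A,B,G,R) channel set for monoscopic images.

```python
# AP0 primaries and D60 white (x, y), as in the primaries table
AP0_CHROMATICITIES = ((0.7347, 0.2653), (0.0, 1.0), (0.0001, -0.0770), (0.32168, 0.33767))

MANDATORY = {
    "acesImageContainerFlag", "adoptedNeutral", "channels", "chromaticities",
    "compression", "dataWindow", "displayWindow", "lineOrder",
    "pixelAspectRatio", "screenWindowCenter", "screenWindowWidth",
}
ALLOWED_CHANNELS = ({"B", "G", "R"}, {"A", "B", "G", "R"})


def check_aces_header(header):
    """Return a list of problems found in an EXR header given as a plain dict."""
    problems = [f"missing attribute: {name}" for name in sorted(MANDATORY - set(header))]
    if header.get("acesImageContainerFlag") != 1:
        problems.append("acesImageContainerFlag must be 1")
    if header.get("compression") != 0:
        problems.append("compression must be 0 (none)")
    chroma = header.get("chromaticities")
    if chroma is not None and any(
        abs(a - b) > 1e-4
        for got, ref in zip(chroma, AP0_CHROMATICITIES)
        for a, b in zip(got, ref)
    ):
        problems.append("chromaticities are not AP0/D60")
    channels = set(header.get("channels", ()))
    # Stereoscopic files (multiView) use prefixed channel names; not checked here.
    if channels and channels not in ALLOWED_CHANNELS and "multiView" not in header:
        problems.append(f"unexpected channel set: {sorted(channels)}")
    return problems
```

A production validator would also check raster size, line order and attribute types, and would read the header through an EXR library rather than a dictionary.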

© 2017 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Arrighetti, W. The Academy Color Encoding System (ACES): A Professional Color-Management Framework for Production, Post-Production and Archival of Still and Motion Pictures. J. Imaging 2017, 3, 40. https://doi.org/10.3390/jimaging3040040
