Hybrid Classical–Quantum Transfer Learning for Cardiomegaly Detection in Chest X-rays

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset and Data Curation

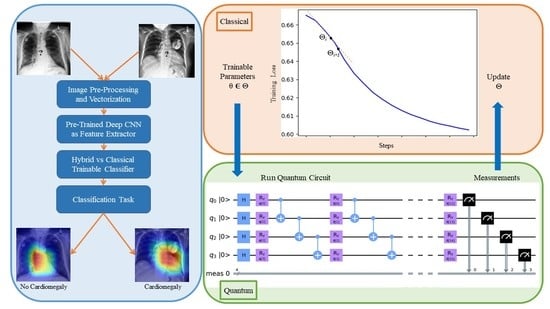

2.2. General Design of the Models

2.3. Image Preprocessing and Vectorization

2.4. Feature Extractor

2.5. Trainable Classifier

2.6. Parameterized Quantum Circuit

2.7. Training

2.8. Performance Metrics

2.9. Image Interpretation

2.10. Normalized Global Effective Dimension

3. Results

3.1. Selection of Models and Training Protocols

3.2. Performances of the CC Models

3.3. Performances of the CQ Models

3.4. Grad-CAM++ Analysis

3.5. Normalized Global Effective Dimension

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Model Name | AUC | Acc | B Acc | Prec 0 | Prec 1 | Rec 0 | Rec 1 |
|---|---|---|---|---|---|---|---|
| F-Dnet-C | 0.933 | 0.864 | 0.865 | 0.861 | 0.868 | 0.873 | 0.856 |
| F-Axnet-C | 0.860 | 0.785 | 0.785 | 0.785 | 0.785 | 0.791 | 0.778 |
| F-Dnet-P-4q | 0.931 | 0.862 | 0.862 | 0.885 | 0.840 | 0.835 | 0.889 |
| F-Dnet-Q-4q-2D | 0.917 | 0.862 | 0.862 | 0.838 | 0.889 | 0.900 | 0.823 |
| F-Dnet-Q-4q-4D | 0.925 | 0.842 | 0.842 | 0.790 | 0.921 | 0.938 | 0.745 |
| N-Dnet-C | 0.922 | 0.853 | 0.853 | 0.823 | 0.892 | 0.905 | 0.801 |
| N-Axnet-C | 0.908 | 0.822 | 0.822 | 0.839 | 0.806 | 0.802 | 0.842 |
| N-Dnet-P-4q | 0.908 | 0.832 | 0.831 | 0.787 | 0.894 | 0.913 | 0.748 |
| N-Dnet-Q-4q-2D | 0.833 | 0.779 | 0.780 | 0.810 | 0.754 | 0.737 | 0.823 |
| N-Dnet-Q-4q-4D | 0.873 | 0.804 | 0.804 | 0.788 | 0.822 | 0.837 | 0.770 |
| F-Dnet-P-6q | 0.904 | 0.866 | 0.865 | 0.841 | 0.895 | 0.905 | 0.825 |
| F-Dnet-P-8q | 0.936 | 0.858 | 0.857 | 0.818 | 0.911 | 0.924 | 0.789 |
| F-Dnet-P-10q | 0.932 | 0.860 | 0.860 | 0.850 | 0.871 | 0.878 | 0.842 |

| Model Name | Processing Unit | Calculation Time per Epoch |
|---|---|---|
| F-Dnet-C/N-Dnet-C | GPU | 1 min |
| F-Axnet-C/N-Axnet-C | GPU | 5 s |
| F-Dnet-P-4q/N-Dnet-P-4q | GPU | 3 min |
| F-Dnet-Q-4q-2D/N-Dnet-Q-4q-2D | GPU and CPU | 11 min |
| F-Dnet-Q-4q-4D/N-Dnet-Q-4q-4D | GPU and CPU | 11 min |
| F-Dnet-P-6q | GPU | 4 min |
| F-Dnet-P-8q | GPU | 5 min |
| F-Dnet-P-10q | GPU | 7 min |

| Model * | AUC | Acc | B Acc | Prec 0 | Prec 1 | Rec 0 | Rec 1 |
|---|---|---|---|---|---|---|---|
| F-Dnet-C 10 Epochs | 0.929 [0.919, 0.938] | 0.862 [0.847, 0.877] | 0.861 [0.847, 0.876] | 0.843 [0.827, 0.860] | 0.884 [0.861, 0.908] | 0.892 [0.870, 0.915] | 0.831 [0.809, 0.852] |
| F-Dnet-C 15 Epochs | 0.931 [0.921, 0.940] | 0.862 [0.850, 0.875] | 0.863 [0.850, 0.875] | 0.844 [0.820, 0.868] | 0.884 [0.861, 0.906] | 0.891 [0.870, 0.913] | 0.834 [0.810, 0.859] |
| F-Dnet-C 20 Epochs | 0.931 [0.923, 0.939] | 0.863 [0.853, 0.873] | 0.862 [0.852, 0.873] | 0.845 [0.831, 0.858] | 0.884 [0.870, 0.897] | 0.892 [0.883, 0.902] | 0.832 [0.812, 0.853] |
| F-Axnet-C 10 Epochs | 0.928 [0.918, 0.938] | 0.851 [0.839, 0.862] | 0.851 [0.839, 0.862] | 0.848 [0.829, 0.868] | 0.854 [0.833, 0.876] | 0.856 [0.830, 0.883] | 0.845 [0.825, 0.866] |
| F-Axnet-C 15 Epochs | 0.927 [0.911, 0.942] | 0.857 [0.840, 0.874] | 0.857 [0.839, 0.874] | 0.855 [0.834, 0.877] | 0.857 [0.839, 0.876] | 0.860 [0.841, 0.879] | 0.853 [0.834, 0.873] |
| F-Axnet-C 20 Epochs | 0.933 [0.925, 0.940] | 0.858 [0.852, 0.863] | 0.858 [0.853, 0.864] | 0.858 [0.843, 0.873] | 0.858 [0.835, 0.880] | 0.860 [0.838, 0.881] | 0.857 [0.844, 0.870] |


| | All | Control | Cardiomegaly |
|---|---|---|---|
| Number of patients | 2436 | 1225 | 1211 |
| Age (years) | 58.6 ± 17.7 | 54.4 ± 17.2 | 62.9 ± 17.1 *** |
| Male | 1520 (62%) | 794 (65%) | 726 (59%) * |
| One or more other findings | 1474 (61%) | 559 (46%) | 915 (75%) *** |
| Enlarged Cardiomediastinum | 293 (12%) | 151 (12%) | 142 (12%) |
| Lung Opacity | 727 (30%) | 266 (22%) | 461 (38%) *** |
| Lung Lesion | 191 (8%) | 91 (7%) | 100 (8%) |
| Edema | 633 (26%) | 133 (11%) | 500 (41%) *** |
| Consolidation | 647 (27%) | 315 (26%) | 332 (27%) |
| Pneumonia | 244 (10%) | 83 (7%) | 161 (13%) *** |
| Atelectasis | 405 (17%) | 139 (11%) | 266 (22%) *** |
| Pneumothorax | 377 (15%) | 215 (18%) | 162 (13%) ** |
| Pleural Effusion | 1054 (43%) | 435 (36%) | 619 (51%) *** |
| Pleural Other | 135 (6%) | 46 (4%) | 89 (7%) *** |
| Fracture | 119 (5%) | 59 (5%) | 60 (5%) |
| Support Devices | 558 (23%) | 226 (18%) | 332 (27%) *** |

| Metric | Abbreviation in Tables | Synonym |
|---|---|---|
| AUC score (area under the ROC curve) | AUC | --- |
| Accuracy | Acc | --- |
| Balanced Accuracy | B Acc | --- |
| Precision 0 | Prec 0 | Negative predictive value |
| Precision 1 | Prec 1 | Positive predictive value |
| Recall 0 | Rec 0 | Specificity |
| Recall 1 | Rec 1 | Sensitivity |
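
To make the relationships between these metrics concrete, the following is a minimal Python sketch (not the authors' evaluation code) that computes them from hard predictions. It assumes class 0 is control and class 1 is cardiomegaly, which is consistent with the table pairing Rec 1 with sensitivity. The function names `binary_metrics` and `auc` are hypothetical, AUC uses the pairwise-ranking definition rather than any particular library, and there are no guards for a class being absent.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class BinaryMetrics:
    acc: float    # Acc
    b_acc: float  # B Acc: mean of Rec 0 and Rec 1
    prec0: float  # Prec 0 = negative predictive value
    prec1: float  # Prec 1 = positive predictive value
    rec0: float   # Rec 0 = specificity
    rec1: float   # Rec 1 = sensitivity


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> BinaryMetrics:
    """Threshold-dependent metrics from hard labels (assumed 0 = control, 1 = cardiomegaly)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    rec0 = tn / (tn + fp)
    rec1 = tp / (tp + fn)
    return BinaryMetrics(
        acc=(tp + tn) / len(pairs),
        b_acc=(rec0 + rec1) / 2,
        prec0=tn / (tn + fn),
        prec1=tp / (tp + fp),
        rec0=rec0,
        rec1=rec1,
    )


def auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as the probability that a random positive outscores a random negative.

    Ties count as 1/2. This O(n_pos * n_neg) version is for illustration only.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

Because B Acc is the mean of Rec 0 and Rec 1, the reported values can be cross-checked: for F-Dnet-C in Appendix A, (0.873 + 0.856) / 2 ≈ 0.865, matching the tabulated B Acc.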

| Model Name | Training with Freezer | Pre-Trained CNN | SDK | n (qubits) | D |
|---|---|---|---|---|---|
| F-Dnet-C | YES | DenseNet-121 | None | --- | --- |
| F-Axnet-C | YES | AlexNet | None | --- | --- |
| F-Dnet-P-4q | YES | DenseNet-121 | PennyLane | 4 | --- |
| F-Dnet-Q-4q-2D | YES | DenseNet-121 | Qiskit | 4 | 2 |
| F-Dnet-Q-4q-4D | YES | DenseNet-121 | Qiskit | 4 | 4 |
| N-Dnet-C | NO | DenseNet-121 | None | --- | --- |
| N-Axnet-C | NO | AlexNet | None | --- | --- |
| N-Dnet-P-4q | NO | DenseNet-121 | PennyLane | 4 | --- |
| N-Dnet-Q-4q-2D | NO | DenseNet-121 | Qiskit | 4 | 2 |
| N-Dnet-Q-4q-4D | NO | DenseNet-121 | Qiskit | 4 | 4 |
| F-Dnet-P-6q | YES | DenseNet-121 | PennyLane | 6 | --- |
| F-Dnet-P-8q | YES | DenseNet-121 | PennyLane | 8 | --- |
| F-Dnet-P-10q | YES | DenseNet-121 | PennyLane | 10 | --- |
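
The model names in the table above follow a regular scheme, so they can be decoded mechanically. The sketch below is a hypothetical helper (not part of the authors' code) that maps a name onto the table's columns: the F/N prefix to "Training with Freezer" (YES/NO), Dnet/Axnet to the pre-trained CNN, the C/P/Q token to the SDK (None, PennyLane, Qiskit), and the optional `<n>q` and `<D>D` tokens to the n and D columns. The table does not define D; the field is simply called `d` here.

```python
import re
from dataclasses import dataclass
from typing import Optional

BACKBONES = {"Dnet": "DenseNet-121", "Axnet": "AlexNet"}
SDKS = {"C": None, "P": "PennyLane", "Q": "Qiskit"}  # C = purely classical head

# <F|N>-<Dnet|Axnet>-<C|P|Q>[-<n>q][-<D>D]
NAME_RE = re.compile(
    r"^(?P<mode>[FN])-(?P<backbone>Dnet|Axnet)-(?P<sdk>[CPQ])"
    r"(?:-(?P<qubits>\d+)q)?(?:-(?P<d>\d+)D)?$"
)


@dataclass(frozen=True)
class ModelSpec:
    frozen_backbone: bool   # "Training with Freezer" column
    backbone: str           # "Pre-Trained CNN" column
    sdk: Optional[str]      # "SDK" column (None for classical models)
    n_qubits: Optional[int] # "n" column
    d: Optional[int]        # "D" column (meaning not defined in the table)


def parse_model_name(name: str) -> ModelSpec:
    """Decode a model name such as 'F-Dnet-Q-4q-2D' into its table columns."""
    m = NAME_RE.match(name)
    if m is None:
        raise ValueError(f"unrecognized model name: {name!r}")
    qubits, d = m["qubits"], m["d"]
    return ModelSpec(
        frozen_backbone=m["mode"] == "F",
        backbone=BACKBONES[m["backbone"]],
        sdk=SDKS[m["sdk"]],
        n_qubits=int(qubits) if qubits else None,
        d=int(d) if d else None,
    )
```

A malformed name such as `C-Axnet-C` raises `ValueError`, which is a cheap way to catch typos when tabulating results across many model variants.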

| Model | AUC | Acc | B Acc | Prec 0 | Prec 1 | Rec 0 | Rec 1 |
|---|---|---|---|---|---|---|---|
| F-Dnet-C | 0.931 [0.923, 0.939] | 0.863 [0.853, 0.873] | 0.862 [0.852, 0.873] | 0.845 [0.831, 0.858] | 0.884 [0.870, 0.897] | 0.892 [0.883, 0.902] | 0.832 [0.812, 0.853] |
| F-Axnet-C | 0.933 [0.925, 0.940] | 0.858 [0.852, 0.863] | 0.858 [0.853, 0.864] | 0.858 [0.843, 0.873] | 0.858 * [0.835, 0.880] | 0.860 * [0.838, 0.881] | 0.857 [0.844, 0.870] |
| N-Dnet-C | 0.934 [0.926, 0.942] | 0.863 [0.855, 0.870] | 0.862 [0.855, 0.870] | 0.841 [0.828, 0.853] | 0.889 [0.871, 0.908] | 0.897 [0.876, 0.917] | 0.828 [0.813, 0.843] |
| N-Axnet-C | 0.921 [0.909, 0.933] | 0.849 [0.836, 0.863] | 0.850 [0.835, 0.864] | 0.850 [0.834, 0.866] | 0.848 * [0.825, 0.872] | 0.851 * [0.830, 0.872] | 0.848 [0.830, 0.865] |

| Model | AUC | Acc | B Acc | Prec 0 | Prec 1 | Rec 0 | Rec 1 |
|---|---|---|---|---|---|---|---|
| F-Dnet-P-4q | 0.923 [0.912, 0.935] | 0.862 [0.852, 0.872] | 0.860 [0.849, 0.871] | 0.832 [0.815, 0.848] | 0.902 [0.883, 0.920] | 0.910 [0.890, 0.931] | 0.810 [0.779, 0.841] |
| F-Dnet-P-6q | 0.922 [0.912, 0.933] | 0.860 [0.843, 0.878] | 0.860 [0.841, 0.878] | 0.844 [0.822, 0.865] | 0.882 [0.855, 0.909] | 0.890 [0.863, 0.918] | 0.829 [0.797, 0.862] |
| F-Dnet-P-8q | 0.926 [0.919, 0.934] | 0.862 [0.852, 0.873] | 0.862 [0.851, 0.873] | 0.844 [0.827, 0.861] | 0.887 [0.860, 0.913] | 0.893 [0.866, 0.920] | 0.830 [0.803, 0.858] |
| F-Dnet-P-10q | 0.912 ** [0.898, 0.925] | 0.860 [0.849, 0.871] | 0.861 [0.850, 0.872] | 0.839 [0.816, 0.863] | 0.888 [0.860, 0.917] | 0.897 [0.869, 0.924] | 0.826 [0.798, 0.853] |
| F-Dnet-Q-4q-2D | 0.901 ** [0.886, 0.915] | 0.867 [0.859, 0.875] | 0.866 [0.858, 0.874] | 0.845 [0.830, 0.860] | 0.896 [0.877, 0.914] | 0.901 [0.874, 0.928] | 0.831 [0.807, 0.855] |
| F-Dnet-Q-4q-4D | 0.911 ** [0.902, 0.920] | 0.867 [0.859, 0.875] | 0.867 [0.859, 0.876] | 0.845 [0.829, 0.861] | 0.894 [0.879, 0.909] | 0.902 [0.887, 0.917] | 0.832 [0.814, 0.850] |

| Heatmap Pattern | Label | CC Model | CQ Model (Qiskit) | CQ Model (PennyLane) |
|---|---|---|---|---|
| Trustworthy | Control | 209 | 354 | 342 |
| | Cardiomegaly | 237 | 330 | 326 |
| | Total | 446 (61%) | 684 (94%) | 668 (92%) |
| Non-trustworthy | Control | 160 | 15 | 27 |
| | Cardiomegaly | 124 | 31 | 35 |
| | Total | 284 (39%) | 46 (6%) | 62 (8%) |
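
The totals and percentages in the heatmap table can be checked with a few lines of arithmetic. In this sketch the counts are copied from the table; each model column sums to 730 images (369 control and 361 cardiomegaly), consistent with every model being assessed on the same image set, and each percentage is the count divided by that total, rounded to the nearest integer. The names `HEATMAP_COUNTS` and `trustworthy_share` are illustrative only.

```python
# Counts copied from the heatmap table: (control, cardiomegaly) per pattern.
HEATMAP_COUNTS = {
    "CC Model": {"trustworthy": (209, 237), "non_trustworthy": (160, 124)},
    "CQ Model (Qiskit)": {"trustworthy": (354, 330), "non_trustworthy": (15, 31)},
    "CQ Model (PennyLane)": {"trustworthy": (342, 326), "non_trustworthy": (27, 35)},
}


def trustworthy_share(counts):
    """Return (total images, trustworthy count, trustworthy %, non-trustworthy %)."""
    trusted = sum(counts["trustworthy"])
    untrusted = sum(counts["non_trustworthy"])
    total = trusted + untrusted
    return total, trusted, round(100 * trusted / total), round(100 * untrusted / total)


for name, counts in HEATMAP_COUNTS.items():
    total, trusted, pct_trusted, pct_untrusted = trustworthy_share(counts)
    print(f"{name}: {trusted}/{total} trustworthy ({pct_trusted}%), "
          f"non-trustworthy {pct_untrusted}%")
```

Running it reports 61%, 94% and 92% trustworthy heatmaps for the CC, Qiskit and PennyLane models respectively, matching the Total rows of the table.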

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Decoodt, P.; Liang, T.J.; Bopardikar, S.; Santhanam, H.; Eyembe, A.; Garcia-Zapirain, B.; Sierra-Sosa, D. Hybrid Classical–Quantum Transfer Learning for Cardiomegaly Detection in Chest X-rays. J. Imaging 2023, 9, 128. https://doi.org/10.3390/jimaging9070128

Decoodt P, Liang TJ, Bopardikar S, Santhanam H, Eyembe A, Garcia-Zapirain B, Sierra-Sosa D. Hybrid Classical–Quantum Transfer Learning for Cardiomegaly Detection in Chest X-rays. Journal of Imaging. 2023; 9(7):128. https://doi.org/10.3390/jimaging9070128

Chicago/Turabian Style: Decoodt, Pierre, Tan Jun Liang, Soham Bopardikar, Hemavathi Santhanam, Alfaxad Eyembe, Begonya Garcia-Zapirain, and Daniel Sierra-Sosa. 2023. "Hybrid Classical–Quantum Transfer Learning for Cardiomegaly Detection in Chest X-rays" Journal of Imaging 9, no. 7: 128. https://doi.org/10.3390/jimaging9070128

APA Style: Decoodt, P., Liang, T. J., Bopardikar, S., Santhanam, H., Eyembe, A., Garcia-Zapirain, B., & Sierra-Sosa, D. (2023). Hybrid Classical–Quantum Transfer Learning for Cardiomegaly Detection in Chest X-rays. Journal of Imaging, 9(7), 128. https://doi.org/10.3390/jimaging9070128