Traffic Congestion Detection System through Connected Vehicles and Big Data

Abstract

1. Introduction

2. Related Work

3. Proposal Architecture

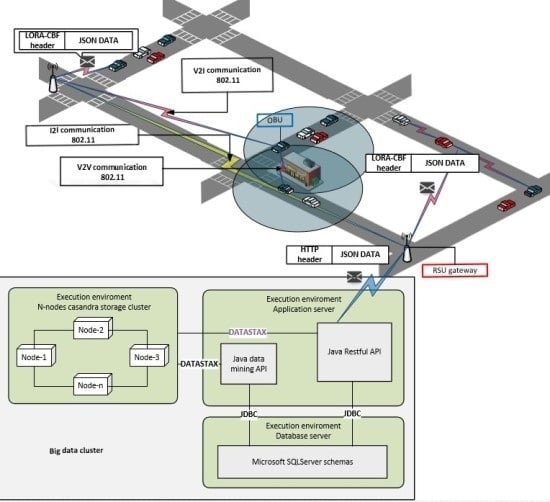

3.1. Architecture

3.2. Big Data Cluster

- Acknowledgement: a flag, 0 (false) or 1 (true), that indicates whether the vehicle has been previously recognized.

- Latitude: the latitude in decimal format.

- Longitude: the longitude in decimal format.

- Temperature: a floating-point number that indicates the temperature.

- Vehicleid: a text string that identifies the vehicle.

- VehicleIp: an IP address that identifies the vehicle in the network.

- packageCounter: an integer that indicates the sequence number of the received packet.

- Speed: a floating-point number that indicates the speed of the vehicle.

- Timestamp: the date and time at which the record was sent.

- Latitude: the latitude in decimal format.

- Longitude: the longitude in decimal format.

- Vehicleid: a text string that identifies the vehicle.

- VehicleIp: an IP address that identifies the vehicle in the network.

- Timestamp: the date and time at which the query was sent.

- congestionSearchRadius: the radius, in meters, within which registered traffic congestion is searched.

- congestionDetecionTimeframe: the time window, in seconds, within which previous alerts are considered.

- vehicleId: a text string that identifies the vehicle that sent the request.

- vehicleIp: an IP address that identifies the vehicle that sent the request.

- congestionAlertId: a unique identifier of the alert.

- timestamp: the date and time at which the traffic event was detected.

- Latitude: the approximate latitude of the central point of the congestion in decimal format.

- Longitude: the approximate longitude of the central point of the congestion in decimal format.

- congestionLength: the length of the congestion in meters.

- congestionAvgSpeed: the average speed of circulation in km/h.
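The query fields above (position, `congestionSearchRadius` and the detection time frame) suggest a radius and time-window filter over stored vehicle reports. The following is a minimal sketch of such a filter, not the system's actual implementation: the names `VehicleReport`, `haversine_m` and `reports_in_range` are hypothetical, the reports are held in a plain in-memory list, and the haversine formula with a mean Earth radius is an assumed way of measuring distance.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timedelta

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters (approximation)


@dataclass
class VehicleReport:
    """Subset of the vehicle data fields listed above (hypothetical class)."""
    vehicle_id: str
    latitude: float
    longitude: float
    speed: float
    timestamp: datetime


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two decimal-degree points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def reports_in_range(reports, lat, lon, now, search_radius_m, timeframe_s):
    """Keep reports inside the search radius and the detection time frame."""
    oldest = now - timedelta(seconds=timeframe_s)
    return [
        r for r in reports
        if oldest <= r.timestamp <= now
        and haversine_m(lat, lon, r.latitude, r.longitude) <= search_radius_m
    ]
```

A caller would pass the query's latitude, longitude and timestamp together with the two congestion parameters; how the real cluster indexes and stores the reports is outside the scope of this sketch.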

3.3. On-Board Unit

3.3.1. Route Monitoring Algorithm

3.3.2. Algorithm LORA-CBF

3.4. Road-Side Unit

3.5. Design of the Packet Structure

- Begin: a special character that indicates the beginning of the packet.

- Length: the number of bytes between the length field and the checksum.

- Transmission method: the manner in which the packet is sent (Multicast, Broadcast, Unicast).

- Source Address: the address of the sending node (changes with each hop).

- Destination Address: the address of the receiving node (changes with each hop).

- RSSI: the received signal strength (dBm).

- Payload: the content of the packet, with a maximum size of 1488 bytes.

- Checksum: a number used to check the packet’s integrity.

- Forcer: a byte that forces the 802.11 transceiver to send the datagram immediately instead of waiting until its buffer is full.
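The fields above can be illustrated with a small framing routine. This is a sketch under stated assumptions rather than the authors' code: the byte order of the fields follows the packet structure table (Begin, Length, transmission method, RSSI, Reserved, addresses, payload, Checksum, Forcer), the length is taken to be a two-byte big-endian value, and the checksum rule (low byte of the sum of the body bytes) is purely hypothetical because the document does not define it. The function names `build_frame` and `parse_frame` are also hypothetical.

```python
import struct

BEGIN = 0x7E        # start delimiter, value taken from the packet table
FORCER = 0x7D       # trailing byte, value taken from the packet table
MAX_PAYLOAD = 1488  # maximum payload size stated in the field list
FIXED_BODY = 11     # method + RSSI + reserved + two 4-byte addresses


def checksum(body: bytes) -> int:
    """ASSUMED rule: low byte of the sum of the body bytes."""
    return sum(body) & 0xFF


def build_frame(method, rssi, reserved, src, dst, payload):
    """Wrap a payload in the outer packet structure."""
    if len(src) != 4 or len(dst) != 4:
        raise ValueError("addresses must be 4 bytes")
    if len(payload) > MAX_PAYLOAD:
        raise ValueError("payload exceeds 1488 bytes")
    body = bytes([method, rssi, reserved]) + src + dst + payload
    # Length counts the bytes between the length field and the checksum.
    head = struct.pack(">BH", BEGIN, len(body))
    return head + body + bytes([checksum(body), FORCER])


def parse_frame(frame: bytes) -> dict:
    """Validate delimiters, length and checksum, then split the fields."""
    if len(frame) < 3 + FIXED_BODY + 2:
        raise ValueError("frame too short")
    begin, length = struct.unpack_from(">BH", frame, 0)
    if begin != BEGIN or frame[-1] != FORCER:
        raise ValueError("bad delimiters")
    if length < FIXED_BODY or len(frame) != length + 5:
        raise ValueError("length mismatch")
    body = frame[3:3 + length]
    if frame[3 + length] != checksum(body):
        raise ValueError("checksum mismatch")
    return {
        "method": body[0], "rssi": body[1], "reserved": body[2],
        "src": body[3:7], "dst": body[7:11], "payload": body[11:],
    }
```

Because the source and destination addresses change at every hop, a relaying node would parse the frame, keep the payload untouched, and call `build_frame` again with its own address as the new source.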

3.5.1. Types of Packet Payload Content

- Packet type: a code corresponding to the packet type.

- Node type: a code corresponding to the node type (cluster head, member, gateway).

- Latitude: the 12-byte latitude given by the GPS.

- Longitude: the 12-byte longitude given by the GPS.

- Speed: the vehicle speed.

- Packet type: a code corresponding to the packet type.

- Identification field: the field that records if a packet has been previously seen.

- Node type: a code corresponding to the node type (Cluster Head, Member, Gateway).

- Applicant address: the address of the node that initiates the search.

- Address to search: the node address to be discovered.

- Latitude: the 12-byte latitude given by the GPS.

- Longitude: the 12-byte longitude given by the GPS.

- Speed: the vehicle speed.

- Packet type: a code corresponding to the packet type.

- Node type: a code corresponding to the node type (Cluster Head, Member, Gateway).

- Applicant address: the address of the node initiating the search.

- Address to search: the node address to be searched.

- Latitude: the 12-byte latitude given by the GPS.

- Longitude: the 12-byte longitude given by the GPS.

- Speed: the vehicle speed.

- Packet type: a code corresponding to the type of DATA.

- Initial source address: the address of the node where the data originated.

- Final destination address: the address of the node for which the data is ultimately intended.

- Hops: the number of hops from the data’s origin.

- Packet counter: an incremental packet counter of the data source.

- Data: the information that will be sent through the network.

- Latitude: the 12-byte latitude given by the GPS.

- Longitude: the 12-byte longitude given by the GPS.
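The DATA payload layout listed last can be sketched as a pair of encode and decode helpers. The field sizes (4-byte addresses, 1-byte hop count, 3-byte packet counter, 12-byte coordinates, data of up to 1455 bytes) come from the payload tables; everything else is an assumption made for illustration: the names `encode_data` and `decode_data` are hypothetical, multi-byte integers are taken to be big-endian, and the 12-byte coordinates are assumed to be ASCII decimal text truncated or space-padded to 12 characters.

```python
import struct

DATA_TYPE = 0x44  # packet type code shown for a DATA payload
COORD_LEN = 12    # latitude and longitude are 12-byte fields
MAX_DATA = 1455   # upper bound of the data field in the payload table
HEADER_LEN = 13   # type + two addresses + hops + 3-byte counter


def _coord(value: float) -> bytes:
    """ASSUMED encoding: ASCII decimal text fitted into 12 bytes."""
    text = f"{value:.12f}"[:COORD_LEN]
    return text.encode("ascii").ljust(COORD_LEN)


def encode_data(src, dst, hops, counter, data, lat, lon, ptype=DATA_TYPE):
    """Serialize the DATA payload fields in the order of the table."""
    if len(src) != 4 or len(dst) != 4:
        raise ValueError("addresses must be 4 bytes")
    if len(data) > MAX_DATA:
        raise ValueError("data exceeds 1455 bytes")
    header = struct.pack(">B4s4sB", ptype, src, dst, hops)
    header += counter.to_bytes(3, "big")
    return header + data + _coord(lat) + _coord(lon)


def decode_data(payload: bytes) -> dict:
    """Split a DATA payload back into its fields."""
    if len(payload) < HEADER_LEN + 2 * COORD_LEN:
        raise ValueError("payload too short")
    ptype, src, dst, hops = struct.unpack_from(">B4s4sB", payload, 0)
    return {
        "type": ptype,
        "src": src,
        "dst": dst,
        "hops": hops,
        "counter": int.from_bytes(payload[10:13], "big"),
        "data": payload[HEADER_LEN:-2 * COORD_LEN],
        "lat": float(payload[-2 * COORD_LEN:-COORD_LEN].decode("ascii")),
        "lon": float(payload[-COORD_LEN:].decode("ascii")),
    }
```

Placing the fixed-size coordinates at the end, as the table does, lets the decoder recover the variable-length data field by slicing from both sides without a separate length field.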

3.5.2. DATA Packet De-Encapsulation and Encapsulation

4. Evaluation

4.1. Evaluation Scenario

4.2. Simulation Model

4.3. Cluster Configuration

5. Results

5.1. Performance of the Traffic Congestion Detection System

5.2. Time and CO2 Reductions Achieved by the System

6. Conclusions

- With 10.1% of the vehicles equipped with an OBU, the algorithm detects traffic congestion with an accuracy of 93.7% over 64 scheduled traffic congestions.

- With 50% of the vehicles equipped with an OBU, the accuracy increases to 98.4% over 64 scheduled traffic congestions.

- CO2 greenhouse gas emissions are reduced by 50% on average when congestion is detected and the route is modified accordingly.

- The average time to reach the destination is 70% shorter when traffic congestion is detected and the route is changed.

Acknowledgments

Author Contributions

Conflicts of Interest

| Begin | Length | Type Send | RSSI | Reserved | Source Address | Destination Address | Payload | Checksum | Forcer |
|---|---|---|---|---|---|---|---|---|---|
| 7E | 00 08 | 01 | 1 Byte | 1 Byte | 4 Bytes | 4 Bytes | 0–1488 Bytes | 9D | 7D |

| Packet Type | Node Type | Latitude | Longitude | Speed |
|---|---|---|---|---|
| 48 | 01 | 12 Bytes | 12 Bytes | 1F |

| Packet Type | Identification Field | Node Type | Applicant Address | Address to Search | Latitude | Longitude | Speed |
|---|---|---|---|---|---|---|---|
| 68 | 01 | 01 | 4 Bytes | 4 Bytes | 12 Bytes | 12 Bytes | 1F |

| Packet Type | Node Type | Applicant Address | Address to Search | Latitude | Longitude | Speed |
|---|---|---|---|---|---|---|
| 78 | 01 | 4 Bytes | 4 Bytes | 12 Bytes | 12 Bytes | 1F |

| Packet Type | Initial Source Address | Final Destination Address | Hops | Packet Counter | Data | Latitude | Longitude |
|---|---|---|---|---|---|---|---|
| 44 | 4 Bytes | 4 Bytes | 02 | 3 Bytes | 0–1455 Bytes | 12 Bytes | 12 Bytes |

| Packet Type | Initial Source Address | Final Destination Address | Hops | Packet Counter | Data | Latitude | Longitude |
|---|---|---|---|---|---|---|---|
| 45 | 4 Bytes | 4 Bytes | 02 | 3 Bytes | 0–1455 Bytes | 12 Bytes | 12 Bytes |

| Packet Type | Initial Source Address | Final Destination Address | Hops | Packet Counter | Data | Latitude | Longitude |
|---|---|---|---|---|---|---|---|
| 46 | 4 Bytes | 4 Bytes | 02 | 3 Bytes | 0–1455 Bytes | 12 Bytes | 12 Bytes |

| Field | Content |
|---|---|
| Begin | 7E |
| Length | 01 03 |
| Transmission method | 01 |
| RSSI | 40 |
| Reserved | 01 |
| Source address | C0 A8 05 02 |
| Destination address | C0 A8 04 01 |
| Payload: packet type | 44 |
| Payload: initial source address | 00 56 45 52 |
| Payload: final destination address | 00 62 67 62 |
| Payload: hops | 02 |
| Payload: packet counter | 00 00 0A |
| Payload: data | `"On-Road_Vehicle_Data_Message": [{"acknowledgement": "1", "latitude": "19.2651047871", "temperature": "26.5", "vehicleId": "642c5dd1163518942a44440a145fb1ba5f96787c", "packageCounter": "322", "speed": "0.037", "timestamp": "2016-03-28 20:13:42", "longitude": "-103.713618619"}, {"acknowledgement": "1", "latitude": "19.2651047549", "temperature": "26.6", "vehicleId": "645864b8e49bb0ac130d26de690231f9a9a9069a", "packageCounter": "452", "speed": "0.018", "timestamp": "2016-03-28 20:13:42", "longitude": "-103.713618561"}, {"acknowledgement": "1", "latitude": "19.2651047388", "temperature": "26.9", "vehicleId": "649164b8e49cc0ac130d26de690231f9a9a9879b", "packageCounter": "452", "speed": "0.020", "timestamp": "2016-03-28 20:13:42", "longitude": "-103.713618532"}]` |
| Payload: latitude | 19.2651047871 |
| Payload: longitude | −103.713618619 |
| Checksum | 9D |
| Forcer | 7D |
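The hexadecimal values in the example packet above can be read back with a few lines of Python. This is only an illustrative sketch: reading the 4-byte hop addresses as IPv4 addresses and the multi-byte integers as big-endian are assumptions, and the helper names `hex_bytes` and `decode_example` are hypothetical.

```python
import ipaddress


def hex_bytes(text: str) -> bytes:
    """Convert a spaced hex string such as 'C0 A8 05 02' to raw bytes."""
    return bytes.fromhex(text.replace(" ", ""))


def decode_example() -> dict:
    """Decode selected fields of the example packet (values from the table)."""
    return {
        # Two-byte length field, ASSUMED big-endian.
        "length": int.from_bytes(hex_bytes("01 03"), "big"),
        # Hop addresses, ASSUMED to be packed IPv4 addresses.
        "source": str(ipaddress.IPv4Address(hex_bytes("C0 A8 05 02"))),
        "destination": str(ipaddress.IPv4Address(hex_bytes("C0 A8 04 01"))),
        "packet_type": hex_bytes("44")[0],
        "hops": hex_bytes("02")[0],
        # Three-byte packet counter, ASSUMED big-endian.
        "packet_counter": int.from_bytes(hex_bytes("00 00 0A"), "big"),
    }


if __name__ == "__main__":
    for name, value in decode_example().items():
        print(f"{name}: {value}")
```

Under these assumptions the source address reads as 192.168.5.2, the destination address as 192.168.4.1, and the packet counter as 10.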

| Vehicles with OBU | Scheduled Traffic Congestions | Traffic Alerts Generated | False Traffic Alerts Generated | Traffic Alerts Not Generated | Precision |
|---|---|---|---|---|---|
| 24 (10.1%) | 64 | 63 | 1 | 2 | 93.7% |
| 118 (50%) | 64 | 63 | 0 | 1 | 98.4% |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Cárdenas-Benítez, N.; Aquino-Santos, R.; Magaña-Espinoza, P.; Aguilar-Velazco, J.; Edwards-Block, A.; Medina Cass, A. Traffic Congestion Detection System through Connected Vehicles and Big Data. Sensors 2016, 16, 599. https://doi.org/10.3390/s16050599
