Measuring System for Synchronous Recording of Kinematic and Force Data during Handover Action of Human Dyads

Abstract

1. Introduction

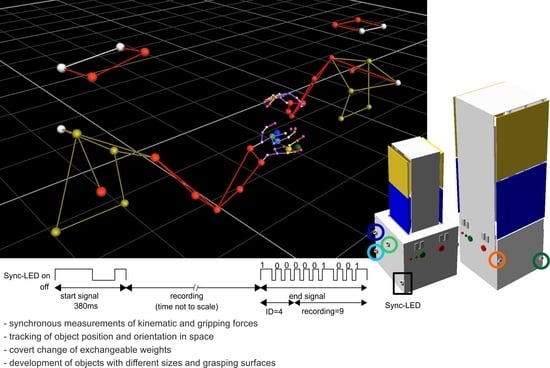

- The object should be freely movable; accordingly, the grip force measurement must be synchronized wirelessly.

- It should be possible to change the weight of the object quickly and easily over a range of at least 400–1000 g.

- The construction plan should allow measurement objects to be built in different sizes and with different grasping surfaces.
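The first requirement implies that the force signal and the motion-capture data live on two independent clocks. One common, generic way to bring them onto one time base is sketched below. It is an assumption for illustration, not necessarily the synchronization method used in this system. Both devices timestamp the same set of shared sync events. A straight-line least-squares fit then maps the logger clock onto the motion-capture clock, absorbing a constant offset and a linear drift, and the force samples are interpolated at the motion-capture frame times. All function names are hypothetical.

```python
from bisect import bisect_left
from typing import List, Sequence, Tuple


def fit_clock_map(logger_ts: Sequence[float], mocap_ts: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of mocap_t = slope * logger_t + offset.

    Index i is one shared sync event, timestamped once by the (hypothetical)
    wireless force logger and once by the motion-capture system.
    slope != 1 models clock drift; offset models the constant clock offset.
    """
    n = len(logger_ts)
    if n != len(mocap_ts) or n < 2:
        raise ValueError("need at least two paired sync events")
    mean_x = sum(logger_ts) / n
    mean_y = sum(mocap_ts) / n
    sxx = sum((x - mean_x) ** 2 for x in logger_ts)
    if sxx == 0:
        raise ValueError("sync events must occur at different times")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(logger_ts, mocap_ts))
    slope = sxy / sxx
    offset = mean_y - slope * mean_x
    return slope, offset


def to_mocap_time(logger_t: float, slope: float, offset: float) -> float:
    """Map one logger timestamp onto the motion-capture time base."""
    return slope * logger_t + offset


def resample_linear(src_t: Sequence[float], src_v: Sequence[float],
                    query_t: Sequence[float]) -> List[float]:
    """Linearly interpolate src_v (sampled at strictly increasing src_t) at each
    query time, holding the first/last sample outside the recorded range."""
    out: List[float] = []
    for q in query_t:
        if q <= src_t[0]:
            out.append(src_v[0])
        elif q >= src_t[-1]:
            out.append(src_v[-1])
        else:
            i = bisect_left(src_t, q)  # first index with src_t[i] >= q, so i >= 1
            t0, t1 = src_t[i - 1], src_t[i]
            v0, v1 = src_v[i - 1], src_v[i]
            out.append(v0 + (v1 - v0) * (q - t0) / (t1 - t0))
    return out
```

To use it, fit the map once on the sync events. Then convert every force timestamp with `to_mocap_time` and call `resample_linear` with the motion-capture frame times as `query_t`. A linear clock model is chosen because it needs only a handful of sync events and covers the two dominant errors (offset and drift) over a recording session.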

2. Materials and Methods

2.1. Reasons for the Design of the Measurement Object

2.2. Test Objects and Motion Tracking

2.3. Participants and Procedure

2.4. Preprocessing and Data Analysis

3. Results

4. Discussion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Parameter | Small, light | Small, medium | Small, heavy | Large, light | Large, medium | Large, heavy |
|---|---|---|---|---|---|---|
| finger force | 226.5/65.6 | 275.5/74.0 | 359.0/119.5 | 244.0/60.0 | 303.0/98.0 | 373.0/180.5 |
| finger distance | 117.0/52.5 | 161.5/62.5 | 207.5/81.9 | 116.5/53.0 | 157.5/84.0 | 202.5/107.6 |
| lift-delta | 103.0/34.5 | 111.0/36.5 | 142.5/72.5 | 133.0/48.5 | 152.5/58.0 | 163.5/92.9 |
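The table above lists each parameter for small and large objects at three weights. The sketch below shows one way to hold it as data and check the weight trend. This extract does not state which statistic each of the two numbers in an "a/b" cell represents, or their units, so the sketch uses only the first number of each cell and makes no claim about its meaning. The function names are hypothetical.

```python
from typing import List, Tuple

# Cells copied from the table above, in column order:
# small object (light, medium, heavy), then large object (light, medium, heavy).
RAW = {
    "finger force":    ["226.5/65.6", "275.5/74.0", "359.0/119.5", "244.0/60.0", "303.0/98.0", "373.0/180.5"],
    "finger distance": ["117.0/52.5", "161.5/62.5", "207.5/81.9", "116.5/53.0", "157.5/84.0", "202.5/107.6"],
    "lift-delta":      ["103.0/34.5", "111.0/36.5", "142.5/72.5", "133.0/48.5", "152.5/58.0", "163.5/92.9"],
}
SIZES = ("small", "large")


def parse_cell(cell: str) -> Tuple[float, float]:
    """Split an 'a/b' cell into its two numbers."""
    first, second = cell.split("/")
    return float(first), float(second)


def first_values(parameter: str, size: str) -> List[float]:
    """First number of each cell for one object size, ordered light -> heavy."""
    offset = SIZES.index(size) * 3
    return [parse_cell(c)[0] for c in RAW[parameter][offset:offset + 3]]


def increases_with_weight(values: List[float]) -> bool:
    """True if the values rise strictly from light to heavy."""
    return all(a < b for a, b in zip(values, values[1:]))


def heavy_minus_light(parameter: str, size: str) -> float:
    """Difference between the heavy and the light condition (first values only)."""
    values = first_values(parameter, size)
    return values[-1] - values[0]


if __name__ == "__main__":
    for parameter in RAW:
        for size in SIZES:
            vals = first_values(parameter, size)
            print(f"{parameter:16s} {size:5s} increasing={increases_with_weight(vals)} "
                  f"heavy-light={heavy_minus_light(parameter, size):.1f}")
```

Running it reports that the first value rises from light to heavy in every row and for both object sizes. For finger force, for example, the heavy-minus-light difference is 132.5 for the small and 129.0 for the large object.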

| Coefficient | Estimate | Std. Error | p |
|---|---|---|---|
| intercept | 145.1 | 3.4 | < 2 × 10⁻¹⁶ |
| finger index | −63.8 | 26.0 | 0.01 |
| finger index: size | 23.1 | 12.9 | 0.07 |
| finger index: weight | 18.5 | 7.9 | 0.02 |

F(3, 226) = 3.755, p = 0.01, adjusted R² = 0.03.
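The coefficient labels in the regression table follow R's convention, where `a: b` denotes an interaction term. Read that way, the model is y = b0 + b1·(finger index) + b2·(finger index × size) + b3·(finger index × weight); the response y is not named in this extract. The sketch below checks that the reported summary statistics are mutually consistent. It assumes exactly one intercept plus the three listed terms, which matches df1 = 3 and implies n = 226 + 3 + 1 = 230 observations. The p-values use a normal approximation to the t distribution, a swapped-in simplification that is reasonable at 226 residual degrees of freedom.

```python
import math

# Values copied from the regression table above.
F_STAT = 3.755
DF_MODEL = 3                      # numerator df = number of non-intercept terms
DF_RESID = 226                    # denominator df = n - DF_MODEL - 1
N_OBS = DF_RESID + DF_MODEL + 1   # implied number of observations (230)

COEFFICIENTS = {                  # name: (estimate, standard error)
    "intercept": (145.1, 3.4),
    "finger index": (-63.8, 26.0),
    "finger index: size": (23.1, 12.9),
    "finger index: weight": (18.5, 7.9),
}


def r2_from_f(f: float, df1: int, df2: int) -> float:
    """Invert F = (R^2 / df1) / ((1 - R^2) / df2) to recover R^2."""
    return f * df1 / (f * df1 + df2)


def adjusted_r2(r2: float, n: int, p: int) -> float:
    """Adjusted R^2 for n observations and p predictors (plus an intercept)."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)


def t_stat(estimate: float, se: float) -> float:
    """Wald t statistic of one coefficient."""
    return estimate / se


def approx_two_sided_p(t: float) -> float:
    """Two-sided p-value from the standard normal (approximates t with large df)."""
    return math.erfc(abs(t) / math.sqrt(2.0))


if __name__ == "__main__":
    r2 = r2_from_f(F_STAT, DF_MODEL, DF_RESID)
    print(f"R^2 = {r2:.4f}, adjusted R^2 = {adjusted_r2(r2, N_OBS, DF_MODEL):.4f}")
    for name, (est, se) in COEFFICIENTS.items():
        t = t_stat(est, se)
        print(f"{name:22s} t = {t:7.2f}  p ~ {approx_two_sided_p(t):.3g}")
```

With these inputs R² is about 0.047 and adjusted R² about 0.035, which rounds to the reported 0.03. The approximate p-values for the three slope terms (about 0.014, 0.073 and 0.019) agree with the reported 0.01, 0.07 and 0.02 after rounding.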

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kutz, D.F.; Kopnarski, L.; Püschel, J.; Rudisch, J.; Voelcker-Rehage, C. Measuring System for Synchronous Recording of Kinematic and Force Data during Handover Action of Human Dyads. Sensors 2023, 23, 9694. https://doi.org/10.3390/s23249694
