Classification Metrics for Improved Atmospheric Correction of Multispectral VNIR Imagery

Abstract

1. Introduction

2. Method

2.1. New Method

3. Selected Results

3.1. Scene with haze

3.2. Scene with saturated pixels

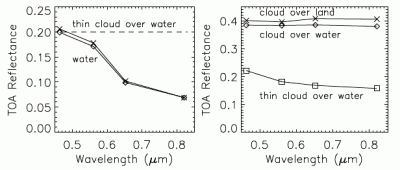

3.3. Coastal scene: bright sand and cloud, cloud over water

4. Summary and Conclusions

Acknowledgments


Vegetation: ρ = (2.7, 4.8, 2.8, 41.4)%; Soil: ρ = (5.2, 9.5, 10.4, 23.3)%

| AVNIR-2 band | Vegetation: Δρ (W=0.8 cm) | Vegetation: Δρ (W=4.1 cm) | Soil: Δρ (W=0.8 cm) | Soil: Δρ (W=4.1 cm) |
|---|---|---|---|---|
| 1 | -0.3 | +0.1 | -0.3 | -0.5 |
| 2 | -0.3 | +0.1 | -0.2 | -0.1 |
| 3 | -0.3 | 0 | 0 | -0.1 |
| 4 | +1.7 | -1.8 | +0.8 | -1.3 |

© by the authors; licensee Molecular Diversity Preservation International, Basel, Switzerland. This article is an open-access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Richter, R. Classification Metrics for Improved Atmospheric Correction of Multispectral VNIR Imagery. Sensors 2008, 8, 6999-7011. https://doi.org/10.3390/s8116999
