1. Introduction

1.1. Differentiated Instruction

The evolution of the educational system and of a society embracing greater inclusivity, egalitarianism, and equity has brought the imperative for substantial changes in the classroom. Differentiated instruction has emerged to respond to these learning needs that teachers face. This teaching method has had a notable impact worldwide, provoking significant changes in how teachers perceive and practice education [1]. Differentiated learning is defined as a flexible, equitable, and intelligent teaching method that starts from the premise that all students are different and learn differently [2]. Thus, this type of teaching considers the differences among students: their skills, tastes, learning styles, strengths, the conditions in which they perform best, and the things that represent a challenge.

Under differentiated instruction, students find themselves more intrinsically motivated. They perform tasks that match their abilities and prepare to achieve their goals, thus developing self-competency [3]. This method also promotes active and collaborative learning. The teacher utilizes flexible grouping. All students work together at their own pace, with below-average students using auxiliary materials. The teacher provides extra support and challenges the students based on their progress during the lesson [4].

Therefore, differentiated instruction can be defined as a variable teaching method that is adaptable to students’ skills, using systematic procedures for progress-monitoring and data-driven decision-making. The method focuses on differentiating the student achievement levels. Teachers must continuously monitor students’ academic progress to identify their educational needs and then adapt the teaching to them. How the progress is monitored and how the teaching is adapted can vary substantially, and both can be carried out in different formats [5].

Tomlinson [6] states that differentiated instruction does not refer to letting students “learn” without control, nor designing individualized classes for each student. It does not mean constantly forming small, homogeneous workgroups, giving more work to advanced students, or evaluating one student differently from another. Differentiated instruction refers to having all the students on the radar, keeping them always present, and designing lessons with different methodologies to achieve learning through a greater focus on the quality of work rather than the quantity. The evaluation should be carried out with different tools and products that must always have a diagnostic focus, not accreditation. In other words, evaluation is done in order to intervene and help. Differentiated instruction focuses totally on the students.

Kingore [7] described what differentiated instruction does and does not involve. Tomlinson [6] considered the organization that a class should follow under this method and proposed a lesson design structure, including the recursion of all its components, as shown in Figure 1.

As described above, differentiated instruction is a methodology that provides genuine equal opportunity in education; students truly receive instruction that accords with their preparation, interests, and learning preferences, which maximizes their growth opportunities [8]. All the experts on this methodology stress the importance of knowing the students. On the one hand, this is about knowing their achievement levels: where they are and what learning problems they are encountering. On the other hand, this requires knowing the students’ pedagogical needs, interests, peer relations, motivations, and the problem-solving strategies they will understand [9]. Along these lines, the authors propose a methodology to implement differentiated instruction with one variable: the standardized measurement of learning.

1.2. Standardized Assessment

Since 1990, there has been a growing interest in the standardized assessment of learning, not only in education but also in the political, economic, and social sectors. This assessment system has become the principal indicator of students’ academic performance and educational institutions’ operations [10]. For example, in the early 1990s, the College Board administered approximately 25,000 Aptitude Tests in Latin America; by the beginning of the next decade, there were already twice that number in Mexico alone. The National Center of Evaluation for Higher Education (CENEVAL) administered about 350,000 tests in the 1994–95 school year, and just five years later, it had tripled that number [11].

In Mexico, most of the curricula are shared among educational institutions from the primary schools to the upper-middle levels; therefore, it is understandable that evaluating the different knowledge and skills in various study programs through standardized tests is considered optimal [12]. Since 1993, this type of evaluation has formed part of the state’s policies to exclude students, control teacher workloads, and manage educational institutions in the country [13].

According to Jornet [14], standardized evaluation implies that all elements of the measuring instrument are systematized and applied in the same way to all persons. That is, the same stimuli are presented, and the exact same application instructions are given to all. These tests are administered in the same type of situation, corrected in the same way, and scored by the same criteria. Initially, this kind of evaluation was used to select students to enter a school; however, with the passing of the years, the uses for this type of evaluation have increased [15]. There are now standardized tests for admissions, graduation, diagnostic purposes, and midterm assessments, among others.

1.3. The Debate over Differentiation and Standardization

Alongside the increase in the popularity of standardized evaluations, the number of their detractors has also grown. Many experts consider that standardized tests do not reflect students’ learning thoroughly and do not seem suitable for what students currently need to learn [16]. Although it is beyond this article’s scope to enter into the debate about this type of assessment’s relevance, the authors consider it necessary to contextualize both positions.

Fernández, Alcaraz, and Sola [17] point out that this mechanical vision based on the pursuit of educational efficiency is far from being a complete and rigorous vision of the educational world. Standardized tests operate from a technological paradigm based on efficiency and behaviorism, which is insufficient. They do not clearly explain what is happening contextually within the social sciences or the how or why of the results to make appropriate intervention decisions. This leads to a flawed interpretation of educational reality, which can end up causing significant consequences where attaining the result ends up being the goal.

It must be considered that the goal of the evaluation is to obtain objective data that support students and help obtain the corresponding accreditation [18]. Miles, Fulbrook, and Mainwaring-Mugi [19] believe that these tests are insufficient to ensure quality assessments due to the lack of validation criteria. Rodrigo [20] points out that some of these tests even measure competencies that depend on the students’ experience and not on the knowledge they acquired in schools. They do not consider, in some cases, the pedagogical or political changes of the programs. Thus, the principal goal of improvement in the schools is called into question.

Barrenechea [21], in his article, “Standardized Evaluations: Six Critical Reflections,” identifies some specific limitations to this testing:

They undermine the motivation of students. The only ones motivated are the students who pass the test.

They do not consider the different types of intelligence. The standardization evaluates only a part of intellectual development.

They leave out a corpus of knowledge. They evaluate only a part of the contents of the curriculum.

They force the teachers to work for them. The teaching becomes directed towards obtaining results.

They promote corruption, generating a structure that incentivizes obtaining the desired results.

They are insufficient for the context. The standardized measurements are not adjusted for changing environments.

On the other side of the debate, some researchers support using these assessments because of their strengths compared to other forms of evaluation. Standardized tests can be used to assess large groups of students because they can cover large amounts of material very efficiently and are affordable for testing many students. Scoring is easy, reliable, and requires less time, which makes these tests an effective way to measure student knowledge on a large scale. They are applied equally to students everywhere as an objective and fair assessment to identify students’ achievement gaps [16]. Without standardized assessment, measuring student performance would fall under the subjectivity of each teacher. He or she would have the liberty to adapt the assessment to their particular teaching, making it almost impossible to measure a student’s performance in different contexts [22].

Another recurring argument in defense of standardized tests is that they provide valid and comparable results across different student populations [23]. In addition to measuring and comparing results, the test scores can be used for tracking students based on their perceived abilities [24]. Then, some actions can be taken to improve student performance, educational quality, teaching performance, and educational institutions’ operations. According to this view, despite being labeled as unfair and discriminatory, these tests are the opposite. Their results rightly avoid exclusion based on gender, race, sexual orientation, and age; instead, they report student performance [22].

This debate makes it evident that there is a contradiction between differentiated instruction and personalized measurement on the one hand and standardized assessment on the other. The issue of students’ rights comes into play. Should the learning measurement be aligned with the individual’s (student’s) goals or society’s (the educational system’s)? Perhaps a universal, correct answer does not exist. The students have the right to be evaluated considering their differences, but educational institutions and governments must examine the students’ progress objectively and homogeneously [25].

1.4. Some Studies about Differentiation and Standardization

Various educators and researchers have been interested in conducting studies on differentiated instruction, while others have been attracted to standardized assessment. The studies presented here exemplify the academic community’s interest in both techniques and their effectiveness in various contexts.

For example, in the Republic of Cyprus, a study was done to assess differentiated instruction’s impact on students’ learning in mixed-ability classrooms [26]. The results indicated that the students showed more learning progress in the classes where differentiated instruction was conducted systematically. Therefore, according to this research, this methodology promotes equity and optimizes education quality and effectiveness.

On the other hand, Förster, Kawohl, and Souvignier [27] conducted research in Germany to evaluate the effects of differentiated instruction on students’ reading comprehension. The results showed that considering each student’s particular needs to develop their reading competency improved only their reading speed.

In the Netherlands, research was carried out to learn which student characteristics teachers considered when they applied differentiated instruction [28]. The results showed that the teachers prioritized students’ backgrounds over their learning styles or their interests when designing and implementing lessons under the differentiated instruction method.

These studies are evidence of the importance being given to the differentiated instruction method. Other studies show the importance of standardized assessment as well. The standardized test PISA (Program for International Student Assessment) has been used as a framework for various studies. In Spain, Cordero and Gil-Izquierdo [29] conducted research to correlate students’ performance on the PISA with the teachers’ characteristics and practices. They concluded that traditional teaching methods positively influence students’ performance in mathematics, while dynamic and innovative learning strategies negatively impact the results.

One research study conducted in England [30] used the PISA results to examine whether students’ performance in science improves when using the Research-based Learning model, i.e., when students are allowed to conduct their own experiments, and the teacher offers little guidance. The results showed that this method does not favor the PISA test results; on the contrary, when more guided teaching is used, student performance improves on this standardized test.

Like the study conducted in Colombia, in Malaysia, research was done where PISA was used to compare and study the assessment score gaps between South Korea, Singapore, and Malaysia [31], the latter having the lowest test scores. Using Blinder–Oaxaca Decomposition (an outcomes decomposition method), the authors concluded that, beyond socio-economic and school factors, there were unknown and unexplained causes why the assessment scores in Malaysia were lower than in the other two countries.

1.5. The Methodology to Improve Spanish Grammar Competency in Mexico

PrepaTec CCM is a high school located in Mexico City. Its students must take eight topics per semester. One of them is a class in Spanish as a mother tongue. Students must take this class during semesters four or five, depending on their academic program. Although the communicative approach is the teaching methodology, there is also some traditional teaching of the Spanish language: the grammar method. The reason is that students do not consider the mother tongue class a priority; they believe that because they are native Spanish speakers, their language proficiency is good. This misconception causes them to continually make grammar mistakes in their performance, especially in writing and speaking. For this reason, Spanish teachers continuously search for educational methods that engage students and help them to correct specific Spanish grammar mistakes.

Regardless of the diversity of teaching techniques used, all students must take a standardized test to measure their competencies at the end of high school, including the Spanish language skills. This test is essential, as it presents a final measurement of students’ competencies developed in mathematical thinking, communication in Spanish and English, and scientific comprehension of the world [32]. The test is called DOMINA. It is designed, administered, and evaluated by the National Center of Evaluation for Higher Education [32]. This instrument has 220 multiple choice questions, each with four answer options. The student results are compared with those of other schools to determine each educational institution’s national ranking.

Because students have to take such a critical standardized test, professors teach students specific test-taking strategies and procedures [33] related to their knowledge area.

To help students to improve their academic performance in the Spanish class, we designed a methodology that considers the most convenient teaching method for them and the practice of standardized tests. To get to know each student’s particularities and design an authentic differentiated instruction methodology, we applied a learning styles test utilizing the Fuzzy Logic Type 2 system for its analysis and a standardized test that measures their actual knowledge of Spanish. Therefore, this research aimed to improve students’ performance in Spanish language competency through the proposed methodology that merges the analysis of learning styles through the Fuzzy Logic Type 2 system, differentiated instruction, and standardized metrics.

2. Materials and Methods

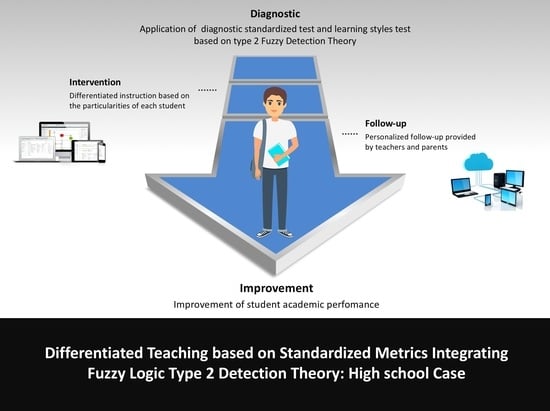

The methodology implemented in this study fused the differentiated instruction method with standardized metrics for the Spanish language class. The process begins with a standardized test that diagnoses the development of the students’ competencies in this area and a learning styles test that identifies students’ learning particularities. The learning styles test results are analyzed with the Fuzzy Logic Type 2 system to detect these characteristics, not just binary values. Then, the teaching process is carried out under the differentiated instruction method, and, finally, another standardized test is applied that measures the ending status of the competencies. The specifications for each step of the differentiated instruction methodology conducted with standardized metrics integrating the Fuzzy Logic Type 2 system can be observed in Figure 2. The diagram of the methodology can be observed in Figure 3.
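The sequence of steps described above can be illustrated with a minimal sketch. It is not the authors’ implementation: the `Student` class, the field names, and the sample values are hypothetical, and the only figure taken from the study is the accreditation threshold of 70 points reported in the Results.

```python
from dataclasses import dataclass

PASS_MARK = 70  # accreditation threshold reported in the Results (70 out of 100)


@dataclass
class Student:
    name: str                # hypothetical identifier
    diagnostic_score: int    # score on the first standardized test (0-100)
    style_membership: dict   # hypothetical fuzzy degree per learning style


def needs_intervention(student):
    """A student joins the intervention group when the diagnostic score is below the pass mark."""
    return student.diagnostic_score < PASS_MARK


def ranked_styles(student):
    """Order learning styles by degree of membership so activities can mix more than one style."""
    ranked = sorted(student.style_membership.items(), key=lambda kv: kv[1], reverse=True)
    return [style for style, degree in ranked if degree > 0]


# Hypothetical sample data, for illustration only.
students = [
    Student("A", 82, {"visual": 0.7, "auditory": 0.2, "kinesthetic": 0.1}),
    Student("B", 64, {"visual": 0.3, "auditory": 0.6, "kinesthetic": 0.4}),
]

group = [s for s in students if needs_intervention(s)]
plans = {s.name: ranked_styles(s) for s in group}
print(plans)
```

In this sketch, only student B enters the intervention group, and the plan lists the learning styles from the strongest to the weakest degree of membership.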

The instructional design carried out for the workshops focused on the linguistic reflection methodology. Grammar is reinforced with reflective analysis. Students reflect on what presented them with difficulty or doubt during their linguistic production or comprehension. The reflection on the grammatical rules is an activity that improves knowledge of the language and structuring of thought [34]. The workshops featured a wide variety of learning activities designed to include each group’s different learning styles and the difficulties each student had with the topics.

This research’s participant population consisted of 527 high school senior students from PrepaTec Campus Mexico City, a private school located in Mexico City: 283 girls and 244 boys. Their ages were between 17 and 18. Their socio-economic status was middle to upper-middle, as classified by the National Institute of Statistics and Geography in Mexico [35].

Figure 2. Differentiated instruction methodology with standardized metrics integrating the Fuzzy Logic Type 2 system [36,37]. Students and parents received presentations on various technology platforms and video links to the YouTube platform, designed by the director and teachers of the department, to reinforce various topics of the Spanish language and reading comprehension (see Appendix A).

Figure 3. Differentiated instruction methodology with standardized metrics integrating the Fuzzy Logic Type 2 system.

2.1. Strategic Action

For this study, we used the action research method because it helps the educator [38] carry out an intervention to improve the teaching-learning process. This study’s action hypothesis focuses on improving the population’s learning outcomes in the Spanish language as measured by two standardized tests.

2.2. Data Collection

Like any study carried out under the action research methodology, the implemented strategy is an action-observation-reflection-planning spiral. These four steps are interlinked, and their actions generate the information and data that cause the action strategy to become a source of knowledge [39]. Thus, in this research, information collection techniques were based on observation, conversation, and analyses of documents [38].

Researcher’s Diary: Accompanied by participant observation, this instrument became a continuous and systematic record of events, interpretations, and reflections on the research process [38]. This anecdotal collection was kept in digital format, and it recorded the events that emerged during the implementation of the strategic action plan.

Document analysis: This technique was applied to its two classes of documents, namely, official and personal documents [38]. In the first case, the analysis was performed on the students’ official results in the applied standardized tests. A comparative analysis was made between the diagnostic test results and the final test results of the population who experienced the intervention plan. In the case of personal documents, the analysis was performed on the anecdotal records requested from the professors involved in the research. These teachers were asked to keep track of relevant incidents during the tests, their evaluations, and the implementation of the entire intervention plan.

Discussion group: This was the technique applied with the participating teachers to learn their perceptions and concerns about the strategy being implemented [38]. Two discussion groups were held. The teachers were assigned according to their schedule availability; however, the same script was followed in both groups.

2.3. Detecting Learning Styles Using Fuzzy Type 2 Detection Theory

Defining learning styles (visual, auditory, kinesthetic) can be extremely difficult if only a conventional survey is applied because students may be classified into more than one learning style. Thus, a method that improves the classification of those learning styles was integrated: fuzzy logic type 2 detection theory (FDT2). FDT2 was presented in [40] to improve the detection of stimuli according to the stimuli’s intensity and the person’s physical and psychological state. In general, fuzzy signal detection theory can describe human perception using fuzzy values (from 0 to 1). Hence, this approach is usually better than conventional signal detection theory, which only has two crisp values, 0 or 1 (see Figure 4). However, conventional fuzzy signal detection theory cannot represent the uncertainty of human perception; for this, FDT2 must be implemented based on membership values derived from the footprint of uncertainty (see Figure 5). The flow diagram of FDT2 is shown in Figure 6.

To implement FDT2, we used a set of questions presented by De la Parra Paz [41] (see Table 1). Each question is linked with a specific learning style, and the student selects a value from 1 to 7 (Figure 7). When FDT2 is implemented, it is possible to detect fuzzy values that provide degrees of membership regarding each learning style and thus obtain information based on more than one learning style. Thus, the students can get a program that is better adjusted to them than a program based on only one learning style.
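To make the idea of membership degrees with a footprint of uncertainty concrete, the sketch below maps answers on the 1 to 7 scale to an interval of membership values (a lower and an upper bound) and averages them per learning style. This is only an illustration under stated assumptions: the linear mapping, the `spread` parameter, the function names, and the sample answers are hypothetical and are not taken from the study or from [40].

```python
def interval_membership(answer, spread=0.1):
    """Map a 1-7 answer to an interval (lower, upper) of membership degrees.

    Assumption: the answer is rescaled linearly to [0, 1] and widened by
    `spread` on each side to stand in for the footprint of uncertainty.
    """
    if not 1 <= answer <= 7:
        raise ValueError("answer must be between 1 and 7")
    center = (answer - 1) / 6
    return max(0.0, center - spread), min(1.0, center + spread)


def style_membership(answers, spread=0.1):
    """Average the interval bounds over all answers linked to one learning style."""
    bounds = [interval_membership(a, spread) for a in answers]
    lower = sum(b[0] for b in bounds) / len(bounds)
    upper = sum(b[1] for b in bounds) / len(bounds)
    return lower, upper


# Hypothetical answers of one student, grouped by the style each question targets.
answers = {"visual": [6, 7, 5], "auditory": [3, 4, 2], "kinesthetic": [5, 4, 6]}
profile = {style: style_membership(vals) for style, vals in answers.items()}

for style, (lower, upper) in profile.items():
    print(f"{style}: [{lower:.2f}, {upper:.2f}]")
```

Each student ends up with one interval per learning style rather than a single label, which is what allows a program to draw on more than one style.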

Table 2 provides an example of how the questions were defined according to FDT2 and conventional signal detection theory to detect and classify learning styles and perceptions. The fuzzy signal and response values are selected according to each question and option. For example, suppose question 1 is evaluated, and the auditory learning style is being assessed (Listening to music). In that case, the fuzzy stimulus value (S) is high, and the expected response (R) is also high. However, if question 39 is evaluated (When you are in the city, what do you miss most about the country?), the fuzzy stimulus and response values are low because it is not a direct question about auditory stimuli like question 1.

Each question is assigned a fuzzy value for the signal (stimuli) and the response value. Those values are from 0 to 1. On the other hand, conventional signal detection theory provides only two possible values, 1 or 0.

Next, a confusion table for each learning style can be calculated for each student to achieve a tailored program based on more than one learning style (Table 3) using the membership values (Figure 4). According to the received stimulus, the response generated can fall into the following categories: visual, auditory, or kinesthetic. This would be valid for crisp values 0 and 1 in signal detection theory. If fuzzy logic detection theory is used, it is possible to get membership values between 0 and 1.
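The contrast between crisp and fuzzy values can be sketched with the outcome mapping commonly used in fuzzy signal detection theory, where a stimulus value s and a response value r in [0, 1] yield degrees of hit, miss, false alarm, and correct rejection. This sketch covers only the type-1 (single-valued) case and assumes that mapping applies here; the (s, r) pairs are hypothetical, and the type-2 extension with a footprint of uncertainty is not reproduced.

```python
def fuzzy_outcomes(s, r):
    """Return the degree of each detection outcome for one question.

    s: fuzzy signal (stimulus) value in [0, 1]
    r: fuzzy response value in [0, 1]
    The four degrees always add up to 1.
    """
    if not (0.0 <= s <= 1.0 and 0.0 <= r <= 1.0):
        raise ValueError("s and r must lie in [0, 1]")
    return {
        "hit": min(s, r),
        "miss": max(s - r, 0.0),
        "false_alarm": max(r - s, 0.0),
        "correct_rejection": min(1.0 - s, 1.0 - r),
    }


def rates(pairs):
    """Aggregate hit and false-alarm rates over (s, r) pairs for one learning style."""
    outcomes = [fuzzy_outcomes(s, r) for s, r in pairs]
    signal = sum(s for s, _ in pairs)
    noise = sum(1.0 - s for s, _ in pairs)
    hit_rate = sum(o["hit"] for o in outcomes) / signal if signal else 0.0
    fa_rate = sum(o["false_alarm"] for o in outcomes) / noise if noise else 0.0
    return hit_rate, fa_rate


# Hypothetical (s, r) pairs for three questions tied to the auditory style.
auditory = [(0.9, 0.8), (0.2, 0.3), (0.6, 0.6)]
hit_rate, fa_rate = rates(auditory)

# With crisp values the mapping reduces to the classical confusion table.
crisp = fuzzy_outcomes(1.0, 1.0)
print(round(hit_rate, 3), round(fa_rate, 3), crisp)
```

With crisp inputs, exactly one outcome receives the value 1, which recovers conventional signal detection theory; with fuzzy inputs, a single question can contribute partially to several outcomes.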

3. Results

The detected learning styles make it possible to tailor a specific instructional program to each student. Hence, the designed learning program is unique for every student. It is always recommended to run the proposed framework completely and not to implement a new instructional design based on previous students’ results. This paper does not intend to generalize the results of the sample in terms of learning styles. Rather, it presents a complete methodology that can be used to reach a tailored instructional learning design.

This paper shows how to achieve an instructional design that is tailored with specific features. Some of those features have been used in previous literature. However, the proposed methodology includes some features that are not integrated in previous proposals. A comparison of the proposed methodology with previous methodologies is outside the scope of this research; however, such a comparison could be the next step in future work.

This study showed an improvement in students’ Spanish grammar skills when the differentiated instruction methodology was applied. The second test application resulted in higher scores than the first. Once the students’ learning styles were known, we designed an individual strategy for each student and applied the differentiated instruction; as a result, more students passed the test and obtained higher results.

Of the 527 students who took the first standardized test, 49% reached the accreditation level, i.e., they scored an assessment of at least 70 points out of 100. Therefore, 51% of the population, 269 students, was the group considered for implementing the strategic action. It is worth noting that the highest percentage of the population was positioned near the minimum accreditation score: 219 students, 41% of the population. A high percentage obtained just the minimum score to pass the test: 200 students, 38%. The distribution of scores obtained by the population can be observed in Figure 8.

Once the methodology for this study was applied, the results improved considerably. Of the 269 students who had not reached the first test’s minimum accreditation level and had the intervention plan applied, 267 took the second test; two were absent without justification and lost the right to take it. Of the 267 students who took the final test, 212 achieved the minimum accreditation level. These results show that the percentage of students reaching the accreditation level improved after the intervention plan was applied. Only 49% of students passed the first standardized test, while 79% of those who took the second test passed it after the intervention plan was applied. The distribution of the scores obtained in the final test can be observed in Figure 9.

The final results show that, of the total population, after the intervention plan based on differentiated instruction occurred, 89% demonstrated competency in the Spanish language, as measured by a standardized test.
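The percentages reported above can be recomputed from the counts given in this section. The short sketch below does so; the variable names are illustrative, and it assumes that the students who passed the first test are counted as competent in the overall figure without retaking the test.

```python
total = 527               # students who took the first standardized test
intervention_group = 269  # students below the accreditation level on the first test
took_second = 267         # intervention students who took the second test
passed_second = 212       # of those, students who reached the accreditation level

passed_first = total - intervention_group  # students who passed the first test

first_rate = passed_first / total
second_rate = passed_second / took_second
# Assumption: first-test passers count as competent in the overall figure.
overall_rate = (passed_first + passed_second) / total

print(f"first test: {first_rate:.0%}")
print(f"second test: {second_rate:.0%}")
print(f"overall: {overall_rate:.0%}")
```

Rounded to whole percentages, these counts reproduce the 49%, 79%, and 89% figures stated in the text.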

On the other hand, when comparing the results of the 267 students on both tests after they experienced the intervention plan with differentiated instruction, the following was found: five students scored lower than on their first test, five remained the same, 105 students went up 1 to 9 points, 121 rose between 10 and 19 points, and 31 rose more than 31 points. Thus, 257 students out of 267 showed improvement in their Spanish skills (96% of the population). See distributions in Figure 10, Figure 11 and Figure 12.

The distribution of improvement points in the students’ academic performance in the Spanish language can be observed in these figures. As can be seen, when comparing the results of the first standardized test with those of the second, after carrying out the intervention, the densest concentration is between 9 and 15 improvement points.
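As a consistency check, the counts of score changes reported above can be tallied to confirm the total of 267 students and the share who improved. The dictionary keys below are illustrative labels, not terms used in the study.

```python
# Counts of students by change in score between the first and second test,
# as reported in the text; keys are illustrative labels.
changes = {
    "lower": 5,
    "same": 5,
    "up_1_to_9": 105,
    "up_10_to_19": 121,
    "top_band": 31,  # highest improvement band as reported
}

total = sum(changes.values())
improved = total - changes["lower"] - changes["same"]
share_improved = improved / total

print(total, improved, f"{share_improved:.0%}")
```

The tally gives 267 students in total, of whom 257 improved, which matches the 96% stated in the text.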

As for the data collected from the teachers’ anecdotal records, participating teachers were asked to report only extraordinary incidents during the application of the tests. Of the 14 participating teachers, only 8 submitted reports. The researcher’s diary was used in a similar manner: only extraordinary incidents were recorded in it. The incidents found are summarized below:

Six students were late for the application of the diagnostic test but were allowed in.

Twenty-three students did not finish the diagnostic test in time.

No students were late for the application of the second test.

Four students did not finish the second test in time.

As for the information collected through the discussion groups, the following questions were presented to be discussed:

Two discussion groups were held at different times so that the 14 teachers could attend. In the first one, 7 teachers participated and in the second one, 6; 1 could not attend because she got sick. Their answers are summarized below:

More participation from parents is necessary because only 76% became involved. Perhaps a phone call would help.

Students need the training to be more independent when working with videos and other materials on their own.

The tests should be applied in an electronic version to be graded faster.

Saturday workshops are more suitable than those on weekday afternoons. In the second workshop, students feel very tired.

Mock tests should be applied as practice before proctoring the second test.

4. Discussion

Teachers know that they work with heterogeneous groups of students. They know that all students have strengths, areas that need reinforcement, and brains as unique as their fingerprints. They realize that emotions, feelings, and attitudes all affect learning and that there are different learning styles. They know that due to these particularities, not all students learn at the same pace and with the same depth [42]. Some specific content has to be covered to achieve the standard that the educational institution solicits.

Several studies have already demonstrated the effectiveness of differentiated instruction on students’ learning. This topic no longer requires discussion. However, the need to achieve the standardization demanded by educational institutions and governments makes it difficult for teachers to find a balance between complying with each student’s individual learning needs and the required standardized learning. It is essential to consider that teachers must offer various alternatives to students in the differentiated instruction methodology for them to demonstrate what they have learned from the lesson [1].

The methodology presented in this study managed to merge standardization and differentiation. Additionally, the main objective was achieved: students improved their academic performance in the Spanish language. The standardized diagnostic test and the learning styles test helped to know the particular characteristics of each student. With this information, it was possible to design an individualized intervention plan with the differentiated instruction methodology, allowing each student to receive the instruction they needed to improve.

Due to this favorable result, we believe that this methodology can be implemented in other contexts. It would be noteworthy if, in other areas of knowledge (mathematics, science, history, etc.), educational levels (elementary, secondary, university) or countries, the improvement is achieved. For this, it would be necessary to assess the context well and consider the methodology’s different steps. Additionally, the following limitations should be considered:

- Students need to be trained to work independently with the videos and materials.

- The involvement of all parents is vital because they can provide follow-up at home.

- Reinforcement workshops should be held at times when students can best learn.

- A questionnaire should be applied to students to evaluate aspects of the methodology, such as materials, instruction, and workshop schedules, to improve the learning experience and validate results.

To date, we have found no studies that apply a similar methodology, so this study cannot yet be contrasted with others; however, it can be compared with future studies in which this methodology or a similar one is implemented.

5. Conclusions

This study demonstrated that the designed methodology helped improve students’ performance in the Spanish language class. When comparing the results of the standardized diagnostic test and the final test, it was found that the students improved their scores.

These results lead us to conclude that a differentiated instruction methodology is an effective way to support students’ learning in this population and knowledge area. For this, it was necessary to know the students’ particularities: the standardized diagnostic test identified their level of competency in Spanish, and the learning styles test was a useful instrument as well. After the analysis using the type-2 fuzzy logic system, we could identify the way each student learned best: visually, auditorily, or kinesthetically. These two elements supported the design of a personalized intervention plan that helped reinforce the specific areas of language proficiency that needed improvement.

Instruction focused on the competency areas that each student needed to improve and used materials and learning activities designed especially for their learning style, helping students improve markedly on the final test. Of the students who had failed the first diagnostic test, 79% passed the second standardized test. Even more significant is the distribution of results: 257 of the 267 students increased their score, even though several still did not achieve a passing score. That is, 96% of the participating population benefited from this methodology.
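The proportion reported above can be checked with a short calculation. The following is a minimal sketch that uses only the two counts stated in the text (257 students with an increased score out of 267 participants); the function and variable names are illustrative and are not part of the study's analysis.

```python
# Arithmetic check of the reported share of students whose score increased.
# The counts come from the text; the names below are hypothetical.

def percent_improved(improved: int, total: int) -> float:
    """Return the share of students whose score increased, as a percentage."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * improved / total


share = percent_improved(257, 267)
print(f"{share:.1f}% of participants increased their score")
```

The result is roughly 96.3%, which is consistent with the rounded figure of 96% given in the conclusions.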

Author Contributions

Conceptualization, M.A.S.J.; methodology, M.A.S.J.; validation, M.A.S.J.; formal analysis, M.A.S.J.; investigation, M.A.S.J.; resources, M.A.S.J.; data curation, M.A.S.J.; writing—original draft preparation, M.A.S.J.; writing—review and editing, P.P.; visualization, P.P.; supervision, P.P.; project administration, M.A.S.J.; funding acquisition, M.A.S.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Writing Lab, TecLabs, Tecnologico de Monterrey, Mexico.

Data Availability Statement

Not applicable; the study does not report any data.

Acknowledgments

The authors would like to acknowledge the financial and technical support of Writing Lab, TecLabs, Tecnologico de Monterrey, Mexico, in the production of this work.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the study’s design, in the collection, analyses, or interpretation of data, in the writing of the manuscript, or in the decision to publish the results.

Appendix A

Example of videos designed by the Department of Spanish:

References

- Valiandes, S.; Neophytou, L.; Hajisoteriou, C. Establishing a framework for blending intercultural education with differentiated instruction. Intercult. Educ. 2018, 29, 379–398.

- Suprayogi, M.N.; Valcke, M.; Godwin, R. Teachers and their implementation of differentiated instruction in the classroom. Teach. Teach. Educ. 2017, 67, 291–301.

- Guay, F.; Roy, A.; Valois, P. Teacher structure as a predictor of students’ perceived competence and autonomous motivation: The moderating role of differentiated instruction. Br. J. Educ. Psychol. 2017, 87, 224–240.

- Awofala, A.O.A.; Lawani, A.O. Increasing Mathematics Achievement of Senior Secondary School Students through Differentiated Instruction. J. Educ. Sci. 2020, 4, 1–19.

- Prast, E.J.; Van de Weijer-Bergsma, E.; Kroesbergen, E.H.; Van Luit, J.E. Differentiated instruction in primary mathematics: Effects of teacher professional development on student achievement. Learn. Instr. 2018, 54, 22–34.

- Tomlinson, C. How to Differentiate Instruction in Academically Diverse Classrooms, 2nd ed.; ASCD: Alexandria, VA, USA, 2017.

- Kingore, B. Differentiated Instruction: Rethinking Traditional Practices. Duneland School Corporation. 2005. Available online: http://www.msdwt.k12.in.us/wp-content/uploads/2013/03/Differentiating-Instruction-Kingore.pdf (accessed on 9 April 2021).

- Tomlinson, C.A.; Brighton, C.; Hertberg, H.; Callahan, C.M.; Moon, T.R.; Brimijoin, K.; Conover, L.A.; Reynolds, T. Differentiating Instruction in Response to Student Readiness, Interest, and Learning Profile in Academically Diverse Classrooms: A Review of Literature. J. Educ. Gift. 2003, 27, 119–145.

- Van Geel, M.; Keuning, T.; Frèrejean, J.; Dolmans, D.; Van Merriënboer, J.; Visscher, A.J. Capturing the complexity of differentiated instruction. Sch. Eff. Sch. Improv. 2018, 30, 51–67.

- Díaz, K.; Osuna, C. Las Evaluaciones Estandarizadas del Aprendizaje y la Mejora de la Calidad Educativa. Temas Educ. 2016, 22. Available online: https://revistas.userena.cl/index.php/teduacion/article/view/741 (accessed on 9 April 2021).

- Martínez, F. Evaluación Educativa y Pruebas Estandarizadas. Elementos Para Enriquecer el Debate. Rev. Educ. Super. 2001, 30. Available online: http://publicaciones.anuies.mx/revista/120/3/3/es/evaluacion-educativa-y-pruebas-estandarizadas-elementos-para (accessed on 9 April 2021).

- Jiménez, A.M. Las pruebas estandarizadas en entredicho. Perfiles Educ. 2014, 36, 3–9.

- Arriaga, M. Reporte Sobre Exámenes Estandarizados: México Laboratorio de Políticas Privatizadoras de la Educación. Sección Mexicana de la Coalición Tradicional. 2008. Available online: http://es.idea-network.ca/wp-content/uploads/2012/04/evaluacion-informe-mexico.pdf (accessed on 9 April 2021).

- Jornet, J. Evaluación Estandarizada. Rev. Iberoam. Eval. Educ. 2017, 10, 5–8. Available online: https://revistas.uam.es/index.php/riee/article/view/7590 (accessed on 9 April 2021).

- Wightman, L. Standardized Testing and Equal Access: A Tutorial. The University of North Carolina. 2019. Available online: https://web.stanford.edu/~hakuta/www/policy/racial_dynamics/Chapter4.pdf (accessed on 9 April 2021).

- Setiawan, H.; Garnier, K.; Isnaeni, W. Rethinking standardized test of science education in Indonesian high school. J. Phys. Conf. Ser. 2019, 1321, 032078.

- Fernández, M.; Alcaraz, N.; Sola, M. Evaluación y Pruebas Estandarizadas: Una Reflexión Sobre el Sentido, Utilidad y Efectos de Estas Pruebas en el Campo Educativo. Rev. Iberoam. Eval. Educ. 2017, 10, 51–67. Available online: https://revistas.uam.es/index.php/riee/article/view/7594 (accessed on 9 April 2021).

- Shavelson, R.J. Methodological perspectives: Standardized (summative) or contextualized (formative) evaluation? Educ. Policy Anal. Arch. 2018, 26, 48.

- Miles, S.; Fulbrook, P.; Mainwaring-Mägi, D. Evaluation of Standardized Instruments for Use in Universal Screening of Very Early School-Age Children: Suitability, Technical Adequacy, and Usability. J. Psychoeduc. Assess. 2018, 36, 99–119.

- Rodrigo, L. Los programas internacionales de evaluación estandarizada y el tratamiento de sus datos a nivel nacional. El caso de Argentina en el estudio PISA de la OCDE. Foro Educ. 2019, 17, 73–94.

- Barrenechea, I. Evaluaciones Estandarizadas: Seis Reflexiones Críticas. Archivos Analíticos de Políticas Educativas. 2010, p. 18. Available online: https://www.redalyc.org/html/2750/275019712008/ (accessed on 9 April 2021).

- Phelps, R.; Walberg, H.; Stone, J.E. Kill the Messenger: The War on Standardized Testing; Routledge: London, UK, 2017.

- Backhoff, E. Evaluación Estandarizada de Logro Educativo: Contribuciones y Retos. Rev. Digit. Univ. 2018, 19. Available online: http://www.revista.unam.mx/2018v19n6/evaluacion-estandarizada-del-logro-educativo-contribuciones-y-retos/ (accessed on 9 April 2021).

- Cunningham, J. Missing the mark: Standardized testing as epistemological erasure in U.S. schooling. Power Educ. 2018, 11, 111–120.

- Neuman, A.; Guterman, O. Academic achievements and homeschooling—It all depends on the goals. Stud. Educ. Eval. 2016, 51, 1–6.

- Valiandes, S. Evaluating the impact of differentiated instruction on literacy and reading in mixed ability classrooms: Quality and equity dimensions of education effectiveness. Stud. Educ. Eval. 2015, 45, 17–26.

- Förster, N.; Kawohl, E.; Souvignier, E. Short- and long-term effects of assessment-based differentiated reading instruction in general education on reading fluency and reading comprehension. Learn. Instr. 2018, 56, 98–109.

- Stollman, S.; Meirink, J.; Westenberg, M.; Van Driel, J. Teachers’ interactive cognitions of differentiated instruction in a context of student talent development. Teach. Teach. Educ. 2019, 77, 138–149.

- Cordero, J.M.; Gil-Izquierdo, M. The effect of teaching strategies on student achievement: An analysis using TALIS-PISA-link. J. Policy Model. 2018, 40, 1313–1331.

- Jerrim, J.; Oliver, M.; Sims, S. The relationship between inquiry-based teaching and students’ achievement. New evidence from a longitudinal PISA study in England. Learn. Instr. 2019, 61, 35–44.

- Perera, L.D.H.; Asadullah, M.N. Mind the gap: What explains Malaysia’s underperformance in Pisa? Int. J. Educ. Dev. 2019, 65, 254–263.

- Centro Nacional de Evaluación para la Educación Superior. Guía del Examen DOMINA Competencias Disciplinares, 2nd ed.; Ceneval: Ciudad de México, México, 2017.

- Zohar, A.; Alboher, V. Raising test scores vs. teaching higher order thinking (HOT): Senior science teachers’ views on how several concurrent policies affect classroom practices. Res. Sci. Technol. Educ. 2018, 36, 243–260.

- Cassany, D.; Luna, M.; Sanz, G. Enseñar Lengua, 9th ed.; Editorial Graó: Barcelona, Spain, 2007; p. 362.

- INEGI. Clase Media. Instituto Nacional de Estadística y Geografía. 2021. Available online: https://www.inegi.org.mx/investigacion/cmedia/ (accessed on 9 April 2021).

- Alcantara, R.; Cabanilla, J.; Espina, F.; Villamin, A. Teaching Strategies 1, 3rd ed.; Katha Publishing Co.: Makati City, Philippines, 2003.

- Secretaría de Educación del Estado de Veracruz. Test Estilo de Aprendizaje (Modelo PNL). 2014. Available online: https://www.orientacionandujar.es/wp-content/uploads/2014/09/TEST-ESTILO-DEAPRENDIZAJES.pdf (accessed on 9 April 2021).

- Latorre, A. La Investigación-Acción, 3rd ed.; Editorial Graó: Barcelona, Spain, 2005; pp. 23–103.

- Mendoza, R.; Alatorre, G.; Dietz, G. Etnografía e investigación acción en la investigación educativa: Convergencias, límites y retos. Rev. Interam. Educ. Adultos 2018, 40. Available online: https://www.redalyc.org/jatsRepo/4575/457556162008/html/index.html (accessed on 9 April 2021).

- Ponce, P.; Polasko, K.; Molina, A. Technology transfer motivation analysis based on fuzzy type 2 signal detection theory. AI Soc. 2016, 31, 245–257.

- De la Parra Paz, E. Herencia de Vida para tus Hijos. Crecimiento Integral con Técnicas PNL; Grijalbo: Barcelona, Spain, 2004; pp. 88–95.

- Gregory, G.; Chapman, C. Differentiated Instructional Strategies. One Size Doesn’t Fit All, 1st ed.; Corwin Press: Thousand Oaks, CA, USA, 2007.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).