Early-Season Stand Count Determination in Corn via Integration of Imagery from Unmanned Aerial Systems (UAS) and Supervised Learning Techniques

Abstract

1. Introduction

2. Materials and Methods

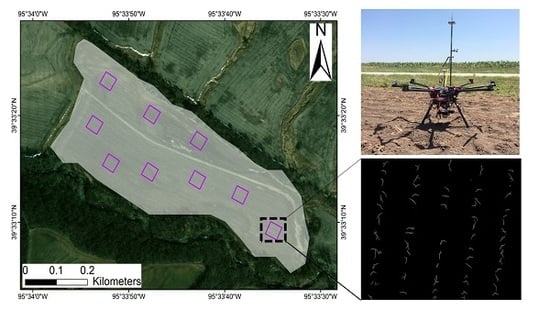

2.1. Experimental Sites

2.2. Platform, Sensor, and Field Data Collection

2.3. Data Processing

2.4. Vegetation Detection

2.5. Row Detection

2.6. Feature Descriptors

2.7. Classifier Training

2.8. Classifier Performance Evaluation

3. Results and Discussion

3.1. Evaluation Metrics: Training Modes

3.2. Evaluation Metrics: Spatial Resolution

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Fields | Previous Crop | Planting Date (DOY) | Growth Stage | Flight Day (DOY) | Flight Altitude (m) |
|---|---|---|---|---|---|
| Site 1 | Soybean | 116 | v2 | 135 | 10 |
| Site 2 | Soybean | 130 | v2–v3 | 153 | 10 |

| Data Set | Site 1 Training | Site 1 Testing | Site 2 Training | Site 2 Testing |
|---|---|---|---|---|
| Images | 94 | 75 | 87 | 75 |
| Contours | 17,608 | 15,378 | 16,855 | 15,246 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Varela, S.; Dhodda, P.R.; Hsu, W.H.; Prasad, P.V.V.; Assefa, Y.; Peralta, N.R.; Griffin, T.; Sharda, A.; Ferguson, A.; Ciampitti, I.A. Early-Season Stand Count Determination in Corn via Integration of Imagery from Unmanned Aerial Systems (UAS) and Supervised Learning Techniques. Remote Sens. 2018, 10, 343. https://doi.org/10.3390/rs10020343