Spatiotemporal Fusion of Multisource Remote Sensing Data: Literature Survey, Taxonomy, Principles, Applications, and Future Directions

Abstract

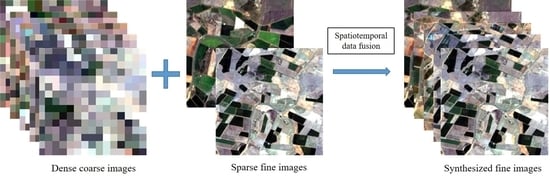

1. Introduction

2. Literature Survey

3. Taxonomy

4. Principle Laws

4.1. Linear Mixing Model

4.2. Spatial Dependence

4.3. Temporal Dependence

5. Applications

5.1. Agriculture

5.2. Ecology

5.3. Land Cover Classification

6. Issues and Future Directions

6.1. Precise Image Alignment

6.2. Difficulty in Retrieving Land Cover Change

6.3. Standard Method and Dataset for Accuracy Assessment

6.4. Efficiency Improvement

7. Summary

- Existing spatiotemporal data fusion methods were grouped into five categories according to the methodology they use to link coarse and fine images: unmixing-based, weight function-based, Bayesian-based, learning-based, and hybrid methods. There is currently no consensus on which category performs best. More inter-comparisons among these categories are needed to reveal the strengths and weaknesses of the different methods.

- The main principles underlying existing spatiotemporal data fusion methods were explained: the spectral mixing model, spatial dependence, and temporal dependence. Existing methods build on these principles and use different mathematical tools to simplify them so that the resulting algorithms can be implemented.

- The major applications of spatiotemporal data fusion were reviewed. Spatiotemporal data fusion can be applied to any study that needs satellite images with both high temporal frequency and high spatial resolution. Most current applications are in the fields of agriculture, ecology, and land surface processes.

- The issues and directions for further development of spatiotemporal data fusion methods were discussed. Spatiotemporal data fusion is one of the youngest research topics in remote sensing, and there is still considerable room for improvement. Four issues worth addressing in future studies were listed: the high demand for data preprocessing, the difficulty of retrieving abrupt land cover change, the lack of a standard accuracy assessment method and data sets, and low computing efficiency.
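To illustrate the spectral mixing principle named in the summary, the sketch below treats a coarse pixel value as the area-weighted sum of the values of the land cover classes inside it, and then recovers the class values by least squares from several coarse pixels with known class fractions. It is a minimal sketch under stated assumptions, not a reproduction of any specific published method: the function names (`mix`, `unmix`, `solve_linear_system`), the two-class setup, and all numbers are hypothetical and chosen only for illustration.

```python
from typing import List


def mix(fractions: List[float], class_values: List[float]) -> float:
    """Linear mixing: coarse value = sum of (class fraction * class value)."""
    return sum(f * v for f, v in zip(fractions, class_values))


def solve_linear_system(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("system is singular; fractions are not informative")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def unmix(fraction_rows: List[List[float]], coarse_values: List[float]) -> List[float]:
    """Least-squares estimate of class values via the normal equations.

    fraction_rows[p][k] is the fraction of class k inside coarse pixel p.
    """
    k = len(fraction_rows[0])
    ata = [[sum(row[i] * row[j] for row in fraction_rows) for j in range(k)]
           for i in range(k)]
    atb = [sum(row[i] * c for row, c in zip(fraction_rows, coarse_values))
           for i in range(k)]
    return solve_linear_system(ata, atb)


# Hypothetical example: three coarse pixels covering two classes.
fractions = [[0.8, 0.2], [0.5, 0.5], [0.1, 0.9]]
true_values = [0.05, 0.40]  # assumed class reflectances, for illustration only
coarse = [mix(row, true_values) for row in fractions]
estimated = unmix(fractions, coarse)
print(estimated)
```

Because the synthetic coarse values here are noise-free, the estimate matches the assumed class values up to floating-point error. With real data the system is usually solved within a moving window and is sensitive to errors in the class fractions.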

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Schneider, A. Monitoring land cover change in urban and peri-urban areas using dense time stacks of Landsat satellite data and a data mining approach. Remote Sens. Environ. 2012, 124, 689–704.

- Shen, M.; Tang, Y.; Chen, J.; Zhu, X.; Zheng, Y. Influences of temperature and precipitation before the growing season on spring phenology in grasslands of the central and eastern Qinghai-Tibetan Plateau. Agric. For. Meteorol. 2011, 151, 1711–1722.

- Lees, K.J.; Quaife, T.; Artz, R.R.E.; Khomik, M.; Clark, J.M. Potential for using remote sensing to estimate carbon fluxes across northern peatlands—A review. Sci. Total Environ. 2018, 615, 857–874.

- Johnson, M.D.; Hsieh, W.W.; Cannon, A.J.; Davidson, A.; Bédard, F. Crop yield forecasting on the Canadian Prairies by remotely sensed vegetation indices and machine learning methods. Agric. For. Meteorol. 2016, 218–219, 74–84.

- Li, X.; Zhou, Y.; Asrar, G.R.; Mao, J.; Li, X.; Li, W. Response of vegetation phenology to urbanization in the conterminous United States. Glob. Chang. Biol. 2016, 23, 2818–2830.

- Liu, Q.; Fu, Y.H.; Zhu, Z.; Liu, Y.; Liu, Z.; Huang, M.; Janssens, I.A.; Piao, S. Delayed autumn phenology in the Northern Hemisphere is related to change in both climate and spring phenology. Glob. Chang. Biol. 2016, 22, 3702–3711.

- Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27.

- Gao, F.; Masek, J.; Schwaller, M.; Hall, F. On the Blending of the Landsat and MODIS Surface Reflectance: Predicting Daily Landsat Surface Reflectance. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2207–2218.

- Ju, J.; Roy, D.P. The availability of cloud-free Landsat ETM+ data over the conterminous United States and globally. Remote Sens. Environ. 2008, 112, 1196–1211.

- Storey, J.; Roy, D.P.; Masek, J.; Gascon, F.; Dwyer, J.; Choate, M. A note on the temporary misregistration of Landsat-8 Operational Land Imager (OLI) and Sentinel-2 Multi Spectral Instrument (MSI) imagery. Remote Sens. Environ. 2016, 186, 121–122.

- Zhu, X.; Chen, J.; Gao, F.; Chen, X.; Masek, J.G. An enhanced spatial and temporal adaptive reflectance fusion model for complex heterogeneous regions. Remote Sens. Environ. 2010, 114, 2610–2623.

- Zhang, H.K.; Huang, B.; Zhang, M.; Cao, K.; Yu, L. A generalization of spatial and temporal fusion methods for remotely sensed surface parameters. Int. J. Remote Sens. 2015, 36, 4411–4445.

- Chen, B.; Huang, B.; Xu, B. Comparison of spatiotemporal fusion models: A review. Remote Sens. 2015, 7, 1798–1835.

- Gao, F.; Hilker, T.; Zhu, X.; Anderson, M.; Masek, J.; Wang, P.; Yang, Y. Fusing Landsat and MODIS Data for Vegetation Monitoring. IEEE Geosci. Remote Sens. Mag. 2015, 3, 47–60.

- Moosavi, V.; Talebi, A.; Mokhtari, M.H.; Shamsi, S.R.F.; Niazi, Y. A wavelet-artificial intelligence fusion approach (WAIFA) for blending Landsat and MODIS surface temperature. Remote Sens. Environ. 2015, 169.

- Wang, Q.; Atkinson, P.M. Spatio-temporal fusion for daily Sentinel-2 images. Remote Sens. Environ. 2018, 204, 31–42.

- Lu, M.; Chen, J.; Tang, H.; Rao, Y.; Yang, P.; Wu, W. Land cover change detection by integrating object-based data blending model of Landsat and MODIS. Remote Sens. Environ. 2016, 184, 374–386.

- Hwang, T.; Song, C.; Bolstad, P.V.; Band, L.E. Downscaling real-time vegetation dynamics by fusing multi-temporal MODIS and Landsat NDVI in topographically complex terrain. Remote Sens. Environ. 2011, 115, 2499–2512.

- Roy, D.P.; Ju, J.; Lewis, P.; Schaaf, C.; Gao, F.; Hansen, M.; Lindquist, E. Multi-temporal MODIS-Landsat data fusion for relative radiometric normalization, gap filling, and prediction of Landsat data. Remote Sens. Environ. 2008, 112, 3112–3130.

- Li, A.; Bo, Y.; Zhu, Y.; Guo, P.; Bi, J.; He, Y. Blending multi-resolution satellite sea surface temperature (SST) products using Bayesian maximum entropy method. Remote Sens. Environ. 2013, 135, 52–63.

- Weng, Q.; Fu, P.; Gao, F. Generating daily land surface temperature at Landsat resolution by fusing Landsat and MODIS data. Remote Sens. Environ. 2014, 145, 55–67.

- Li, X.; Ling, F.; Foody, G.M.; Ge, Y.; Zhang, Y.; Du, Y. Generating a series of fine spatial and temporal resolution land cover maps by fusing coarse spatial resolution remotely sensed images and fine spatial resolution land cover maps. Remote Sens. Environ. 2017, 196, 293–311.

- Wu, P.; Shen, H.; Zhang, L.; Göttsche, F.M. Integrated fusion of multi-scale polar-orbiting and geostationary satellite observations for the mapping of high spatial and temporal resolution land surface temperature. Remote Sens. Environ. 2015, 156, 169–181.

- Gevaert, C.M.; García-Haro, F.J. A comparison of STARFM and an unmixing-based algorithm for Landsat and MODIS data fusion. Remote Sens. Environ. 2015, 156, 34–44.

- Mizuochi, H.; Hiyama, T.; Ohta, T.; Fujioka, Y.; Kambatuku, J.R.; Iijima, M.; Nasahara, K.N. Development and evaluation of a lookup-table-based approach to data fusion for seasonal wetlands monitoring: An integrated use of AMSR series, MODIS, and Landsat. Remote Sens. Environ. 2017, 199, 370–388.

- Zhu, X.; Helmer, E.H.; Gao, F.; Liu, D.; Chen, J.; Lefsky, M.A. A flexible spatiotemporal method for fusing satellite images with different resolutions. Remote Sens. Environ. 2016, 172, 165–177.

- Hilker, T.; Wulder, M.A.; Coops, N.C.; Linke, J.; McDermid, G.; Masek, J.G.; Gao, F.; White, J.C. A new data fusion model for high spatial- and temporal-resolution mapping of forest disturbance based on Landsat and MODIS. Remote Sens. Environ. 2009, 113, 1613–1627.

- Quan, J.; Zhan, W.; Ma, T.; Du, Y.; Guo, Z.; Qin, B. An integrated model for generating hourly Landsat-like land surface temperatures over heterogeneous landscapes. Remote Sens. Environ. 2018, 206, 403–423.

- Huang, B.; Song, H. Spatiotemporal Reflectance Fusion via Sparse Representation. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3707–3716.

- Cheng, Q.; Liu, H.; Shen, H.; Wu, P.; Zhang, L. A Spatial and Temporal Non-Local Filter Based Data Fusion Method. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4476–4488.

- Zhukov, B.; Oertel, D.; Lanzl, F.; Reinhäckel, G. Unmixing-based multisensor multiresolution image fusion. IEEE Trans. Geosci. Remote Sens. 1999, 37, 1212–1226.

- Wu, B.; Huang, B.; Zhang, L. An Error-Bound-Regularized Sparse Coding for Spatiotemporal Reflectance Fusion. IEEE Trans. Geosci. Remote Sens. 2015, 53, 6791–6803.

- Wang, P.; Gao, F.; Masek, J.G. Operational data fusion framework for building frequent Landsat-like imagery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 7353–7365.

- Wang, Q.; Blackburn, G.A.; Onojeghuo, A.O.; Dash, J.; Zhou, L.; Zhang, Y.; Atkinson, P.M. Fusion of Landsat 8 OLI and Sentinel-2 MSI Data. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3885–3899.

- Song, H.; Huang, B. Spatiotemporal Satellite Image Fusion Through One-Pair Image Learning. IEEE Trans. Geosci. Remote Sens. 2013, 51, 1883–1896.

- Malleswara Rao, J.; Rao, C.V.; Senthil Kumar, A.; Lakshmi, B.; Dadhwal, V.K. Spatiotemporal Data Fusion Using Temporal High-Pass Modulation and Edge Primitives. IEEE Trans. Geosci. Remote Sens. 2015, 53, 5853–5860.

- Wei, J.; Wang, L.; Liu, P.; Chen, X.; Li, W.; Zomaya, A.Y. Spatiotemporal Fusion of MODIS and Landsat-7 Reflectance Images via Compressed Sensing. IEEE Trans. Geosci. Remote Sens. 2017, 55, 7126–7139.

- Shen, H.; Meng, X.; Zhang, L. An Integrated Framework for the Spatio-Temporal-Spectral Fusion of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7135–7148.

- Guan, X.; Liu, G.; Huang, C.; Liu, Q.; Wu, C.; Jin, Y.; Li, Y. An Object-Based Linear Weight Assignment Fusion Scheme to Improve Classification Accuracy Using Landsat and MODIS Data at the Decision Level. IEEE Trans. Geosci. Remote Sens. 2017, 55, 6989–7002.

- Tao, X.; Liang, S.; Wang, D.; He, T.; Huang, C. Improving Satellite Estimates of the Fraction of Absorbed Photosynthetically Active Radiation Through Data Integration: Methodology and Validation. IEEE Trans. Geosci. Remote Sens. 2017, 56, 2107–2118.

- Zhao, C.; Gao, X.; Emery, W.J.; Wang, Y.; Li, J. An Integrated Spatio-Spectral-Temporal Sparse Representation Method for Fusing Remote-Sensing Images With Different Resolutions. IEEE Trans. Geosci. Remote Sens. 2018.

- Xue, J.; Leung, Y.; Fung, T. A Bayesian Data Fusion Approach to Spatio-Temporal Fusion of Remotely Sensed Images. Remote Sens. 2017, 9, 1310.

- Rao, Y.; Zhu, X.; Chen, J.; Wang, J. An Improved Method for Producing High Spatial-Resolution NDVI Time Series Datasets with Multi-Temporal MODIS NDVI Data and Landsat TM/ETM+ Images. Remote Sens. 2015, 7, 7865–7891.

- Zhang, W.; Li, A.; Jin, H.; Bian, J.; Zhang, Z.; Lei, G.; Qin, Z.; Huang, C. An Enhanced Spatial and Temporal Data Fusion Model for Fusing Landsat and MODIS Surface Reflectance to Generate High Temporal Landsat-Like Data. Remote Sens. 2013, 5, 5346–5368.

- Fu, D.; Chen, B.; Wang, J.; Zhu, X.; Hilker, T. An improved image fusion approach based on enhanced spatial and temporal the adaptive reflectance fusion model. Remote Sens. 2013, 5, 6346–6360.

- Liao, L.; Song, J.; Wang, J.; Xiao, Z.; Wang, J. Bayesian method for building frequent Landsat-like NDVI datasets by integrating MODIS and Landsat NDVI. Remote Sens. 2016, 8, 452.

- Wang, J.; Huang, B. A Rigorously-Weighted Spatiotemporal Fusion Model with Uncertainty Analysis. Remote Sens. 2017, 9, 990.

- Wei, J.; Wang, L.; Liu, P.; Song, W. Spatiotemporal fusion of remote sensing images with structural sparsity and semi-coupled dictionary learning. Remote Sens. 2017, 9, 21.

- Ke, Y.; Im, J.; Park, S.; Gong, H. Downscaling of MODIS One kilometer evapotranspiration using Landsat-8 data and machine learning approaches. Remote Sens. 2016, 8, 215.

- Liao, C.; Wang, J.; Pritchard, I.; Liu, J.; Shang, J. A spatio-temporal data fusion model for generating NDVI time series in heterogeneous regions. Remote Sens. 2017, 9, 1125.

- Xu, C.; Qu, J.; Hao, X.; Cosh, M.; Prueger, J.; Zhu, Z.; Gutenberg, L. Downscaling of Surface Soil Moisture Retrieval by Combining MODIS/Landsat and In Situ Measurements. Remote Sens. 2018, 10, 210.

- Maselli, F.; Rembold, F. Integration of LAC and GAC NDVI data to improve vegetation monitoring in semi-arid environments. Int. J. Remote Sens. 2002, 23, 2475–2488.

- Bhattarai, N.; Quackenbush, L.J.; Dougherty, M.; Marzen, L.J. A simple Landsat–MODIS fusion approach for monitoring seasonal evapotranspiration at 30 m spatial resolution. Int. J. Remote Sens. 2015, 36, 115–143.

- Shen, H.; Wu, P.; Liu, Y.; Ai, T.; Wang, Y.; Liu, X. A spatial and temporal reflectance fusion model considering sensor observation differences. Int. J. Remote Sens. 2013, 34, 4367–4383.

- Rao, C.V.; Malleswara Rao, J.; Senthil Kumar, A.; Dadhwal, V.K. Fast spatiotemporal data fusion: Merging LISS III with AWiFS sensor data. Int. J. Remote Sens. 2014, 35, 8323–8344.

- Wu, B.; Huang, B.; Cao, K.; Zhuo, G. Improving spatiotemporal reflectance fusion using image inpainting and steering kernel regression techniques. Int. J. Remote Sens. 2017, 38, 706–727.

- Zurita-Milla, R.; Clevers, J.G.P.W.; Schaepman, M.E. Unmixing-based Landsat TM and MERIS FR data fusion. IEEE Geosci. Remote Sens. Lett. 2008, 5, 453–457.

- Liu, X.; Deng, C.; Wang, S.; Huang, G.-B.; Zhao, B.; Lauren, P. Fast and Accurate Spatiotemporal Fusion Based Upon Extreme Learning Machine. IEEE Geosci. Remote Sens. Lett. 2016, 13, 2039–2043.

- Huang, B.; Wang, J.; Song, H.; Fu, D.; Wong, K. Generating High Spatiotemporal Resolution Land Surface Temperature for Urban Heat Island Monitoring. IEEE Geosci. Remote Sens. Lett. 2013, 10, 1011–1015.

- Xu, Y.; Huang, B.; Xu, Y.; Cao, K.; Guo, C.; Meng, D. Spatial and Temporal Image Fusion via Regularized Spatial Unmixing. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1362–1366.

- Houborg, R.; McCabe, M.F.; Gao, F. A Spatio-Temporal Enhancement Method for medium resolution LAI (STEM-LAI). Int. J. Appl. Earth Obs. Geoinf. 2016, 47, 15–29.

- Amorós-López, J.; Gómez-Chova, L.; Alonso, L.; Guanter, L.; Zurita-Milla, R.; Moreno, J.; Camps-Valls, G. Multitemporal fusion of Landsat/TM and ENVISAT/MERIS for crop monitoring. Int. J. Appl. Earth Obs. Geoinf. 2013, 23, 132–141.

- Niu, Z. Use of MODIS and Landsat time series data to generate high-resolution temporal synthetic Landsat data using a spatial and temporal reflectance fusion model. J. Appl. Remote Sens. 2012, 6, 63507.

- Hazaymeh, K.; Hassan, Q.K. Spatiotemporal image-fusion model for enhancing the temporal resolution of Landsat-8 surface reflectance images using MODIS images. J. Appl. Remote Sens. 2015, 9, 96095.

- Xie, D.; Zhang, J.; Zhu, X.; Pan, Y.; Liu, H.; Yuan, Z.; Yun, Y. An Improved STARFM with Help of an Unmixing-Based Method to Generate High Spatial and Temporal Resolution Remote Sensing Data in Complex Heterogeneous Regions. Sensors 2016, 16, 207.

- Wu, M.; Huang, W.; Niu, Z.; Wang, C. Generating Daily Synthetic Landsat Imagery by Combining Landsat and MODIS Data. Sensors 2015, 15, 24002–24025.

- Wang, Q.; Zhang, Y.; Onojeghuo, A.O.; Zhu, X.; Atkinson, P.M. Enhancing Spatio-Temporal Fusion of MODIS and Landsat Data by Incorporating 250 m MODIS Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 4116–4123.

- Song, H.; Liu, Q.; Wang, G.; Hang, R.; Huang, B. Spatiotemporal Satellite Image Fusion Using Deep Convolutional Neural Networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 821–829.

- Boyte, S.P.; Wylie, B.K.; Rigge, M.B.; Dahal, D. Fusing MODIS with Landsat 8 data to downscale weekly normalized difference vegetation index estimates for central Great Basin rangelands, USA. GIScience Remote Sens. 2018, 55, 376–399.

- Huang, B.; Zhang, H.; Song, H.; Wang, J.; Song, C. Unified fusion of remote-sensing imagery: Generating simultaneously high-resolution synthetic spatial–temporal–spectral earth observations. Remote Sens. Lett. 2013, 4, 561–569.

- Gärtner, P.; Förster, M.; Kleinschmit, B. The benefit of synthetically generated RapidEye and Landsat 8 data fusion time series for riparian forest disturbance monitoring. Remote Sens. Environ. 2016, 177, 237–247.

- Aman, A.; Randriamanantena, H.P.; Podaire, A.; Frouin, R. Upscale Integration of Normalized Difference Vegetation Index: The Problem of Spatial Heterogeneity. IEEE Trans. Geosci. Remote Sens. 1992, 30, 326–338.

- Kerdiles, H.; Grondona, M.O. NOAA-AVHRR NDVI decomposition and subpixel classification using linear mixing in the Argentinean Pampa. Int. J. Remote Sens. 1995, 16, 1303–1325.

- Tobler, W.R. A Computer Movie Simulating Urban Growth in the Detroit Region. Econ. Geogr. 1970, 46, 234–240.

- Zhu, X.; Liu, D.; Chen, J. A new geostatistical approach for filling gaps in Landsat ETM+ SLC-off images. Remote Sens. Environ. 2012, 124, 49–60.

- Mueller, N.D.; Gerber, J.S.; Johnston, M.; Ray, D.K.; Ramankutty, N.; Foley, J.A. Closing yield gaps through nutrient and water management. Nature 2012, 490, 254–257.

- Foley, J.A.; Ramankutty, N.; Brauman, K.A.; Cassidy, E.S.; Gerber, J.S.; Johnston, M.; Mueller, N.D.; O’Connell, C.; Ray, D.K.; West, P.C.; et al. Solutions for a cultivated planet. Nature 2011, 478, 337–342.

- Lobell, D.B. The use of satellite data for crop yield gap analysis. Field Crops Res. 2013, 143, 56–64.

- Allen, R.; Irmak, A.; Trezza, R.; Hendrickx, J.M.H.; Bastiaanssen, W.; Kjaersgaard, J. Satellite-based ET estimation in agriculture using SEBAL and METRIC. Hydrol. Process. 2011, 25, 4011–4027.

- Li, L.; Zhao, Y.; Fu, Y.; Pan, Y.; Yu, L.; Xin, Q. High resolution mapping of cropping cycles by fusion of Landsat and MODIS data. Remote Sens. 2017, 9, 1232.

- Peng, Y.; Gitelson, A.A.; Sakamoto, T. Remote estimation of gross primary productivity in crops using MODIS 250m data. Remote Sens. Environ. 2013, 128, 186–196.

- Son, N.T.; Chen, C.F.; Chang, L.Y.; Chen, C.R.; Sobue, S.I.; Minh, V.Q.; Chiang, S.H.; Nguyen, L.D.; Lin, Y.W. A logistic-based method for rice monitoring from multi-temporal MODIS-Landsat fusion data. Eur. J. Remote Sens. 2016, 49, 39–56.

- Zheng, Y.; Wu, B.; Zhang, M.; Zeng, H. Crop Phenology Detection Using High Spatio-Temporal Resolution Data Fused from SPOT5 and MODIS Products. Sensors 2016, 16, 2099.

- Meng, J.; Du, X.; Wu, B. Generation of high spatial and temporal resolution NDVI and its application in crop biomass estimation. Int. J. Digit. Earth 2013, 6, 203–218.

- Gao, F.; Anderson, M.C.; Zhang, X.; Yang, Z.; Alfieri, J.G.; Kustas, W.P.; Mueller, R.; Johnson, D.M.; Prueger, J.H. Toward mapping crop progress at field scales through fusion of Landsat and MODIS imagery. Remote Sens. Environ. 2017, 188, 9–25.

- Singh, D. Generation and evaluation of gross primary productivity using Landsat data through blending with MODIS data. Int. J. Appl. Earth Obs. Geoinf. 2011, 13, 59–69.

- Wang, X.; Jia, K.; Liang, S.; Li, Q.; Wei, X.; Yao, Y.; Zhang, X.; Tu, Y. Estimating Fractional Vegetation Cover from Landsat-7 ETM+ Reflectance Data Based on a Coupled Radiative Transfer and Crop Growth Model. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5539–5546.

- Li, Y.; Huang, C.; Hou, J.; Gu, J.; Zhu, G.; Li, X. Mapping daily evapotranspiration based on spatiotemporal fusion of ASTER and MODIS images over irrigated agricultural areas in the Heihe River Basin, Northwest China. Agric. For. Meteorol. 2017, 244–245, 82–97.

- Meyer, S.T.; Koch, C.; Weisser, W.W. Towards a standardized Rapid Ecosystem Function Assessment (REFA). Trends Ecol. Evol. 2015, 30, 390–397.

- Pettorelli, N.; Schulte to Bühne, H.; Tulloch, A.; Dubois, G.; Macinnis-Ng, C.; Queirós, A.M.; Keith, D.A.; Wegmann, M.; Schrodt, F.; Stellmes, M.; et al. Satellite remote sensing of ecosystem functions: Opportunities, challenges and way forward. Remote Sens. Ecol. Conserv. 2017, 1–23.

- Nagendra, H.; Lucas, R.; Honrado, J.P.; Jongman, R.H.G.; Tarantino, C.; Adamo, M.; Mairota, P. Remote sensing for conservation monitoring: Assessing protected areas, habitat extent, habitat condition, species diversity, and threats. Ecol. Indic. 2013, 33, 45–59.

- Pettorelli, N.; Laurance, W.F.; O’Brien, T.G.; Wegmann, M.; Nagendra, H.; Turner, W. Satellite remote sensing for applied ecologists: Opportunities and challenges. J. Appl. Ecol. 2014, 51, 839–848.

- Wu, M.; Wu, C.; Huang, W.; Niu, Z.; Wang, C. High-resolution Leaf Area Index estimation from synthetic Landsat data generated by a spatial and temporal data fusion model. Comput. Electron. Agric. 2015, 115, 1–11.

- Zhang, B.; Zhang, L.; Xie, D.; Yin, X.; Liu, C.; Liu, G. Application of synthetic NDVI time series blended from Landsat and MODIS data for grassland biomass estimation. Remote Sens. 2016, 8, 10.

- Walker, J.J.; de Beurs, K.M.; Wynne, R.H. Dryland vegetation phenology across an elevation gradient in Arizona, USA, investigated with fused MODIS and Landsat data. Remote Sens. Environ. 2014, 144, 85–97.

- Tran, T.V.; de Beurs, K.M.; Julian, J.P. Monitoring forest disturbances in Southeast Oklahoma using Landsat and MODIS images. Int. J. Appl. Earth Obs. Geoinf. 2016, 44, 42–52.

- Senf, C.; Leitão, P.J.; Pflugmacher, D.; van der Linden, S.; Hostert, P. Mapping land cover in complex Mediterranean landscapes using Landsat: Improved classification accuracies from integrating multi-seasonal and synthetic imagery. Remote Sens. Environ. 2015, 156, 527–536.

- Zhang, F.; Zhu, X.; Liu, D. Blending MODIS and Landsat images for urban flood mapping. Int. J. Remote Sens. 2014, 35, 3237–3253.

- Kong, F.; Li, X.; Wang, H.; Xie, D.; Li, X.; Bai, Y. Land cover classification based on fused data from GF-1 and MODIS NDVI time series. Remote Sens. 2016, 8, 741.

- Chen, B.; Huang, B.; Xu, B. Multi-source remotely sensed data fusion for improving land cover classification. ISPRS J. Photogramm. Remote Sens. 2017, 124, 27–39.

- Kakooei, M.; Baleghi, Y. Fusion of satellite, aircraft, and UAV data for automatic disaster damage assessment. Int. J. Remote Sens. 2017, 38, 2511–2534.

- Chen, Y.; Lin, Z.; Zhao, X.; Wang, G.; Gu, Y. Deep learning-based classification of hyperspectral data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2094–2107.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Gao, L.; Zhan, W.; Huang, F.; Zhu, X.; Zhou, J.; Quan, J.; Du, P.; Li, M. Disaggregation of remotely sensed land surface temperature: A simple yet flexible index (SIFI) to assess method performances. Remote Sens. Environ. 2017, 200, 206–219.

- Emelyanova, I.V.; McVicar, T.R.; Van Niel, T.G.; Li, L.T.; van Dijk, A.I.J.M. Assessing the accuracy of blending Landsat–MODIS surface reflectances in two landscapes with contrasting spatial and temporal dynamics: A framework for algorithm selection. Remote Sens. Environ. 2013, 133, 193–209.

| Satellite | Sensor | Spatial Resolution | Revisit Cycle | Operational Period |
|---|---|---|---|---|
| NOAA | AVHRR | 1.1 km | 12 h | From 1978 |
| Terra/Aqua | MODIS | Bands 1–2: 250 m; Bands 3–7: 500 m; Bands 8–36: 1000 m | 1 day | From 2000 |
| Terra | ASTER | VNIR: 15 m; SWIR: 30 m; TIR: 90 m | 16 days | From Dec-1999 |
| Landsat | MSS (Landsat 1–3) | 79 m | 18 days | From 1972 to 1983 |
| Landsat | MSS+TM (Landsat 5) | VNIR: 30 m; TIR: 120 m | 16 days | From 1984 to 2013 |
| Landsat | ETM+ (Landsat-7) | VNIR: 30 m; TIR: 60 m | 16 days | From 1999 |
| Landsat | OLI (Landsat-8) | VNIR: 30 m; TIR: 100 m | 16 days | From 2013 |
| OrbView-2 | SeaWiFS | 1 km | 1 day | From 1997 |
| SPOT | HRV (SPOT 1–3) | 20 m | 26 days (VGT 1 day) | From 1986 |
| SPOT | VGT (SPOT-4) | 1.15 km | 26 days (VGT 1 day) | From 1986 |
| SPOT | HRG/HRS/VGT (SPOT-5) | HRG-VNIR: 10 m; HRG-SWIR: 20 m | 26 days (VGT 1 day) | From 1986 |
| ENVISAT | MERIS | 300 m | 35 days | From 2002 to 2012 |
| Sentinel-2 | MSI | 10 m: (VNIR) B2,3,4,8; 20 m: B5,6,7,8A,11,12; 60 m: B1,9,10 | 10 days with one satellite and 5 days with 2 satellites | From Jun-2015 |
| HJ-1A/1B | Multi-spectral sensor | 30 m | 31 days | From 2009 |
| TH-1 | Multi-spectral sensor | 10 m | 58 days | From Aug-2010 |
| BJ-1 | Multi-spectral sensor | 32 m | | From Oct-2005 |
| CBERS-01/02 | Multi-spectral sensor | Multi-spectral-CCD: 19.5 m | 26 days | CBERS-01: From 1999 to 2002 |
| CBERS-01/02 | Multi-spectral sensor | Multi-spectral-IRMSS: 78 m/156 m | 26 days | CBERS-02: From Oct-2003 |
| ZY-1 02B | Multi-spectral sensor | 20 m | 26 days | From Sep-2007 |
| ZY-1 02C | Multi-spectral sensor | 10 m | 55 days | From Apr-2012 |
| SJ-9A | Multi-spectral sensor | 10 m | 69 days | From Oct-2012 |
| ALOS | AVNIR-2 | 10 m | 46 days | From 2006 to 2011 |
| ADEOS | Multi-spectral sensor | 700 m | 41 days | From 1996 to 1997 |
| JERS-1 | OPS | 18 m | 44 days | From 1992 to 1998 |
| IRS-1A/1B | LISS-I/LISS-II/LISS-A/LISS-B | 72.5 m (LISS-I); 36 m (LISS-II) | 22 days | From 1988 |
| IRS-1C/1D | LISS-III | 23.5 m; 70 m | 24 days | From 1995 to 2010 |
| IRS-P3 | WiFS/MOS | 188 m (WiFS); 1500 m/520 m/550 m (MOS) | 5 days | From 1996 to 2004 |
| IRS-P6 | LISS-IV/LISS-III/AWiFS | 5.8 m (LISS-IV)/23.5 m (LISS-III)/70 m (AWiFS) | 24 days | From 2003 |
| THEOS | Multi-spectral sensor | 15 m | 26 days | From Oct-2008 |

| Source Titles | Records | Percentage | Literature |
|---|---|---|---|
| Remote Sensing of Environment | 15 | 25.86% | [11,15,16,17,18,19,20,21,22,23,24,25,26,27,28] |
| IEEE Transactions on Geoscience and Remote Sensing | 14 | 24.14% | [8,29,30,31,32,33,34,35,36,37,38,39,40,41] |
| Remote Sensing | 10 | 17.24% | [42,43,44,45,46,47,48,49,50,51] |
| International Journal of Remote Sensing | 5 | 8.62% | [52,53,54,55,56] |
| IEEE Geoscience and Remote Sensing Letters | 4 | 6.90% | [57,58,59,60] |
| International Journal of Applied Earth Observation and Geoinformation | 2 | 3.45% | [61,62] |
| Journal of Applied Remote Sensing | 2 | 3.45% | [63,64] |
| Sensors | 2 | 3.45% | [65,66] |
| IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing | 2 | 3.45% | [67,68] |
| GIScience and Remote Sensing | 1 | 1.72% | [69] |
| Remote Sensing Letters | 1 | 1.72% | [70] |
| TOTAL | 58 | 100% | |

| Category | Methods and References |
|---|---|
| Unmixing-based | MMT [31]; LAC-GAC NDVI integration [52]; Landsat-MERIS fusion method [62]; ESTDFM [44]; STDFA [63]; MSTDFA [66]; OB-STVIUM [17]; MERIS-Landsat fusion [57] |
| Weight function-based | STARFM [8]; semi-physical fusion approach [19]; ESTARFM [11]; STAARCH [27]; SADFAT [21]; Topographically corrected downscaling [18]; STITFM [23]; STARFM-Sensor difference [54]; mESTARFM [45]; Bilateral Filter method [59]; ET fusion model [53]; STI-FM [64]; operational STARFM [33]; Three-step method [16]; STEM-LAI [61]; ATPPK [34]; ATPPK-STARFM [67]; STNLFFM [30]; Fast spatiotemporal fusion [55]; RWSTFM [47]; DBUX [25]; ISKRFM [56]; Temporal high-pass modulation and edge primitives method [36]; Decision-level fusion [39]; STVIFM [50]; Soil moisture downscaling [51] |
| Bayesian-based | BME [20]; Unified fusion [70]; NDVI-BSFM [46]; Bayesian data fusion approach [42]; Spatio-Temporal-Spectral fusion framework [38] |
| Learning-based | SPSTFM [29]; One-pair image learning method [35]; EBSPTM [32]; ELM-based method [58]; WAIFA [15]; bSBL-SCDL [48]; CSSF [37]; Regression tree-based method [69]; Evapotranspiration downscaling [49]; STFDCNN [68]; MRT [40]; Integrated sparse representation-based fusion [41] |
| Hybrid | FSDAF [26]; NDVI-LMGM [43]; Regularized spatial unmixing method [60]; STIMFM [22]; STRUM [24]; USTARFM [65]; BLEST [28] |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, X.; Cai, F.; Tian, J.; Williams, T.K.-A. Spatiotemporal Fusion of Multisource Remote Sensing Data: Literature Survey, Taxonomy, Principles, Applications, and Future Directions. Remote Sens. 2018, 10, 527. https://doi.org/10.3390/rs10040527
