1. Introduction

Landslide inventory maps may provide baseline information about landslide types, distribution, location, and boundaries in landslide-prone areas. Information pertaining to slope and displacement measurements affecting failure may be deduced from landslide inventories [

1]. Furthermore, landslide inventories could be deployed for various purposes, such as implementation of landslide susceptibility, hazard assessment, risk assessment, and landslide magnitude recording. It is a quite challenging task to map landslide inventory in tropical areas due to densely vegetative cover which obscures the underlying landforms [

2]. However, most of the available traditional landslide detection methods, such as optical and aerial photographs, high spatial resolution multispectral images, synthetic aperture radar (SAR) images, very high resolution (VHR) satellite images, and moderate-resolution digital terrain models (DTM) [

3,

4,

5,

6] are not sufficiently quick and accurate enough to map landslide inventory due to rapid vegetation growth in tropical areas. Thus, a fast and accurate approach is required in landslide inventory mapping. Therefore, high-resolution light detection and ranging (LiDAR)-derived digital elevation models (DEMs) may provide highly valuable information on landslide-prone terrain shielded by densely vegetative cover [

7]. High-resolution DEM may be employed to detect landforms and provide useful information about densely vegetated and rocky areas; consequently, minimal changes in topographic features may be easily detected [

8,

9,

10]. Generally, LiDAR data could propose significant benefits, owing to their ability to penetrate dense vegetation and provide valuable information on topographic conditions [

2]. These benefits make LiDAR data unique and different from other data sources, such as aerial photographs for slope failure detection under dense vegetation [

4,

6].

Pixel-based approaches often exhibit a “salt and pepper” appearance when very high spatial resolution images are employed [

11]. However, an object-based method is widely utilized in landslide mapping to resolve the aforementioned pixel-based limitations [

12]. According to Moosavi et al. [

13], it is usually assumed that a pixel is very likely to belong to the same class as its neighboring pixels. In contrast to the information obtained from individual pixels, object-based image analysis offers additional geometric and contextual information that can be derived from image objects [

5,

12]. In an object-based approach, one of the most challenging issues is selecting the optimum combination of segmentation parameters [

13]. According to Zeng et al. [

14], the Dempster–Shafer theory (DST) is a precise fusion algorithm utilizing belief uncertainty intervals to present the belief of assumptions based on evidence of multiple observations. The algorithm utilizes reasoning, weight, and probability-driven evidences contained within the dataset [

14]. The ability to handle incomplete data and an associated degree of uncertainty gives DST the strength to exploit data from many sensors in a processing train [

15]. The reasoning-based system [

16] and the empirical determination of parameters in DST make the concept generally applicable without depending on satellite imagery [

15]. Furthermore, it is an economical and time-effective alternative, as it decreases the time required to select training sites [

17]. From the literature, it is apparent that the DST method has previously been employed for multisensor images [

18]. In the current study, the DST method was applied to fuse three object-based classified maps at the feature level. This approach (DST) has been tested in many other applications, such as fingerprint verification, forensics, ontologies, and the military [

19,

20,

21]. Also, the model has been used in remote sensing applications such as mapping landslide susceptibility [

22,

23,

24,

25], groundwater assessment and potentials [

26], and risk assessment of groundwater pollution [

27]. In 2017, Mezaal et al. [

28] applied the DST method for automatic detection of landslide locations using very high LiDAR-derived data and orthophoto imagery.

Landslides have the capability to display heterogeneous sizes that require information with higher spatial resolutions in order to produce complete event inventories [

29]. Effective feature selection, such as texture, image band information, and geometric features, are needed to improve the quality of landslide inventory mapping. However, handling huge amount of irrelevant features can lead to overfitting [

3]. Landslide identification in a particular area may be improved by selecting the most significant features [

3,

6,

7,

8,

9]. According to Van Westen et al. [

8], selection of the most significant features may aid in distinguishing between landslides and non-landslides. Researchers such as Stumpf and Kerle [

30] have attempted to improve the efficiency of feature selection in detecting the location of landslides. Some object-based investigations have taken care of feature selection to detect landslides using LiDAR data [

3,

31]. Recently, Pradhan and Mezaal [

2] effectively utilized a correlation-based selection method (CFS) to optimize the feature selection for detecting landslides in tropical areas.

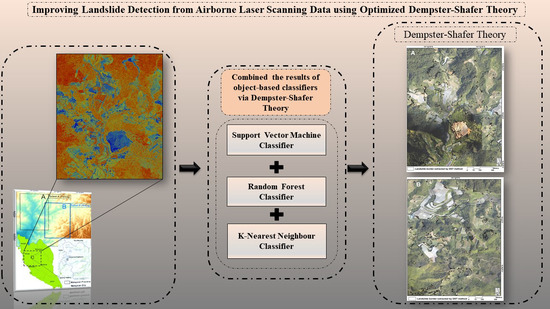

Therefore, the present study proposes an improved approach to detect landslide locations by employing a Dempster–Shafer theory (DST) fusion technique. In this technique, three object-based classified outputs are fused together (in contrast to multisensor data) to achieve more efficient and accurate results.

2. Materials and Methods

The investigation started with the preprocessing of LiDAR data and landslide inventories, an essential step typically taken before the commencement of subsequent steps to eliminate the noise and outliers from data. Subsequently, a high-resolution DEM (0.5 m) was derived from LiDAR point clouds and utilized to produce other LiDAR-derived products and landslide conditioning factors (i.e., aspect, slope, height, normalized digital surface model (nDSM), intensity, and hillshade). The LiDAR-derived products and orthophotos were combined by correcting their geometric distortions and were projected in one coordinate system. Lastly, they were prepared in GIS for feature extraction. Thereafter, a fuzzy-based segmentation parameter optimizer (FbSP optimizer), developed by Zhang et al. [

32], was employed to acquire suitable parameters (i.e., shape, scale, and compactness) in differing levels of segmentation. Next, three object-based approaches—support vector machine (SVM), random forest (RF), and K-nearest neighbor (KNN) classifiers—were applied for both analysis and test areas. Subsequently, DST was employed to combine the outputs of the aforementioned classifiers in MATLAB R2015b. Model transferability was applied to another part of the study area (i.e., test site). The results were then validated and compared based on quantitative and qualitative methods (i.e., confusion matrix and precision/recall).

Figure 1 illustrates a detailed flowchart of the methodology and the overall framework.

2.1. Study Area

The present investigation was carried out in the tropical, densely vegetated rainforest of Cameron Highlands, Malaysia. The rationality for choosing this study area was the frequent occurrence of landslides there. Geographically, the area under consideration is located in the north of peninsular Malaysia (latitude 4°26′09″N–4°27′30″N and longitude 101°23′02″E–101°23′47″E), covering an area of 26.7 km2. The study area records an annual average rainfall of about 2660 mm and average temperatures of 24 °C and 14 °C during day and night times, respectively. A significant portion of the study area (80%) is forested landform, ranging from flat terrain (0°) to hilly area (80°).

In the present investigation, two subsets were selected to implement the proposed method, shown in

Figure 2. A training area was employed to enhance the methodology for detecting the location of landslides. Similarly, a testing site was employed for testing purposes. Meticulous care was taken in selecting the test site to avoid deficiencies in the number of classes. Moreover, the training sample size was assessed using stratified random sampling method in order to enhance the accuracy of the subsets under consideration (i.e., training and test sites).

Generally speaking, landslide history is an important aspect of landslide detection. It gives an idea of its occurrences in a particular region. In this regard, landslide history was collected from many sources such as newspapers, national reports, technical papers, etc. The preliminary classification was based on age for landslide inventory mapping in accordance with visible morphologic criteria captured on aerial photographs. Landslide deposits and scars are disequilibrium landforms that evolve through morphologic stages with age [

33]. Specifically, relevant information from previous investigations and landslide inventory information spanning over a decade was prepared for the whole Cameron Highlands. In an earlier paper by Pradhan and Lee [

34], a database of 324 landslide incidents was prepared for 293 km

2 of the Cameron Highlands to assess the number of landslides and their corresponding surface area. It was observed that the landslides are shallow rotational and a few translational types. The data was put together based on historical landslide records. In March 2011, AIRSAR data was deployed to prepare the landslide inventory map. In 2014, Samy and Marghany [

35] presented the landslide history of 273 landslides of different sizes collected from the archive data of the Department of Mineral and Geosciences, Malaysia. In the same year, Murakmi et al. [

36] prepared a database of historical landslide occurrences in the Malaysian Peninsula. In 2015, Shahab and Hashim [

37] reported a landslide history prepared from different sources, such as field surveys, published reports, and digital aerial photographs (DAP) covering a 25-year period. Therefore, landslides can be characterized as old and new events using visual inspection and overlaying the last landslides events on the Cameron Highlands. Due to vegetation cover, landslides which have occurred between 2008 and 2010 are considered old landslides. On the other hand, new landslides are those which have occurred after 2010. New landslides less than 5-years old are apparently barren in nature and can be observed by red, green, and blue (RGB) sensors. However, the ones older than 5 years are usually covered by vegetation and thus cannot be recognized in visible bands.

Figure 3 and

Figure 4 show the landslide inventory map for both subsets (training and test sites), respectively.

2.2. Data Used

The LiDAR point cloud data was captured for the Cameron Highlands region on January 15, 2015. The land area spans 26.7 km

2 of the Ringlet at an altitude of 1510 m. The point density is approximately 8 points per square meter, with a 25,000-Hz pulse rate frequency. The accuracy of the LiDAR data was restricted to conform to the root-mean-square errors of 0.15 m and 0.3 m in the vertical and horizontal axes, respectively, as standardized by the Department of Survey and Mapping Malaysia (JUPEM). A high-resolution camera (visible bands) used in the acquisition of LiDAR point cloud data in the study area was deployed to collect the orthophotos. A DSM was generated by interpolating LiDAR point clouds using inverse distance weighting method (IDW) using the ArcGIS 10.3 software (

Figure 5A). A DEM of spatial resolution of 0.5 m was interpolated from the LiDAR point clouds after removing the nonground points using inverse distance weighting, with GDM2000 as the spatial reference (

Figure 5B). Next, the height feature, i.e., normalized DSM (nDSM), was derived by subtracting DSM from DEM (

Figure 5E). Subsequently, the LiDAR-based DEM was used to generate the derived layers in identifying the location of landslides and their features [

38]. Slope is a major determinant of land stability due to its role in landslide phenomenology [

39]. Hillshade refers to a map showing sufficient images of terrain movement which aid in landslide mapping [

40]. The intensity image obtained from LiDAR data also play a significant role in differentiating between landslides and non-landslides [

2]. Moreover, the quality of the landslide inventory map may be significantly improved by using texture features [

2,

6]. These data provide extensive information on landslide detection [

28]. The accuracy and ability of DEM to represent the surface are affected by terrain morphology, sampling density, and the interpolation algorithm [

41]. In the present study, various LiDAR-derived data were employed as follows: DEM, DSM, intensity, height (nDSM), slope, and aspect (shown in

Figure 5). Additionally, orthophoto images (i.e., visible bands) and texture features were used to detect the landslides.

2.3. Multiresolution Segmentation Algorithm

A multiresolution segmentation (MRS) is a bottom-up approach used in a segmentation algorithm and is based on the pairwise region-merging technique [

12]. In algorithms, image pixels that possess homogeneous spectral and textural characteristics are usually grouped [

42]. The smaller objects are substituted in the larger ones based on certain criteria obtainable from three parameters: color, scale, and shape (i.e., smoothness and compactness). The aforementioned three parameters may be determined in this algorithm using the traditional trial-and-error method; however, this is a time-consuming and laborious task [

4]. Hence, many semiautomatic and automatic approaches have attempted to optimize parameter segmentation [

43,

44,

45]. Optimization techniques consider only the scale, without taking into consideration the combination of the parameters [

4]. A few powerful optimization techniques currently exist, such as the Taguchi optimization method [

4] and the fuzzy-based segmentation parameter optimizer (FbSP optimizer) [

32]. These techniques represent advanced methods employed for automatic combination of segmentation parameters (i.e., scale, shape, and compactness). However, differentiating among image objects at various scales is still a challenge and not all feature selection methods are fully utilized in a particular segmentation scale. Thus, it is suggested that an automatic method should be directly implemented.

2.4. Calculation of the Relevant Feature Selection

Classification schemes implemented on segments are more significant than single pixels in an object-based approach. This occurs by incorporating a multitude of additional information such as the texture, shape, and context associated with image objects [

46]. However, both objective and subjective methods may be used to select important object features in object-based classification. The subjective methods depend on user knowledge and past experience, while the employment of feature selection algorithms is relatively objective [

47].

According to Stumpf and Kerle [

30], several remote sensing applications are able to measure rotation invariance. However, they cannot capture directional patterns in the grey-value distribution. Landslide-affected areas regularly appear in a downslope direction with texture patterns. Stumpf and Kerle [

30] reported that patterns are potential features for detecting and differentiating landslide surfaces and texture patterns oriented at the strike of the slope [

30]. Gray-level co-occurrence matrix (GLCM) texture features can be calculated with airborne laser scanning data by using eCognition software and the measured texture co-occurrence can be calculated for individual landslide objects in the software. This result comprises Dissimilarity, Contrast, Homogeneity, and Standard Deviation co-occurrence texture measured based on all bands.

In the present study, a correlation-based feature selection algorithm (CFS) was used to select the most important features in detecting the landslide locations. The best search was utilized for determining the feature space, and the five consecutive, fully expanded, nonimproving subsets were set to a stopping criterion in order to avoid searching the entire feature subset space. In this study, the Weka 3.8 package was used to implement this feature selection algorithm. Furthermore, three employed object features resulted in 82 total features—Mean and StdDev LiDAR data, Mean and StdDev visible band, and texture (see

Table 1). Mean and StdDev data were extracted from airborne laser scanning data with the use of eCognition software.

The correlation coefficient between Mean Intensity and GLCM Dissimilarity, GLCM Angular second moment, had a positive and moderate relationship at (

p < 0.01), and it had a negative moderate relationship at (

p < 0.01) with StdDev Blue as indicated in

Table 2. The relationship between GLCM Homogeneity and GLCM Contrast and GLCM Dissimilarity were negatively correlated at (

p < 0.01). However, there was positive significant relationship at (

p < 0.01) with GLCM Angular second moment and StdDev Blue. The Mean Slope had a positive significant relationship at (

p < 0.01) with Mean DTM and StdDev Blue, however it had a negative significant relationship at (

p < 0.05) with StdDe DTM. The Mean Red showed a strong relationship at (

p < 0.01) with GLCM Contrast and GLCM Dissimilarity, and it showed a negative relationship at (

p < 0.05) with StdDe DTM. The correlation coefficient between Mean DTM and StdDe DTM was negatively significant at (

p < 0.05). GLCM Contrast had a strong significant relationship at (

p < 0.01) with GLCM Dissimilarity. The relationship between GLCM Dissimilarity and StdDev Blue was negatively significant at (

p < 0.05).

2.5. Support Vector Machine (SVM)

The dataset was categorized into groups using a supervised nonparametric statistical learning technique consistent with training examples. SVMs are gaining huge popularity in various applications of remote sensing, especially in landslide mapping [

13,

29,

48,

49,

50], due to their capability in handling small training datasets and unknown statistical distribution data obtained in the field [

51]. Huang et al. [

52] observed that SVMs containing fewer training dataset yielded more stable results compared to those of decision tree, maximum belief, and artificial neural network classifiers with large training datasets. SVMs are regarded as binary classifiers designed to determine the boundary of the decision region that separates the dataset features or characteristics into two regions in the feature space. In SVM, boundary optimal hyperplanes exhibiting maximum safety margins are chosen closest to the training features. These are called support vectors, which take full advantage of the margin between the classes [

29]. Kernel functions have been used in the linearization of the decision boundary and were achieved by using the maps of the training data in the higher-dimensional space that have the capacity to linearly separate two classes of hyperplanes [

52]. A nonlinear transformation of covariates was also conducted, transferring into high-dimensional feature space [

53].

In the present study, SVM e1071 package [

54] for the R statistical computing software was implemented [

55]. It was observed that hyperparameters determined the performance of the SVM classifier. Therefore, the selection of these parameters was optimized and their sensitivity analyzed. Three parameters were assessed in the case of SVM, namely, the penalty parameter (

C), kernel function, and gamma parameter (

ɤ). The most accurate prediction was attained in the radial basis function (RBF), using gamma parameter (

ɤ) 0.9 and penalty parameter of 300. This was carried out rapidly by visual inspection of the match between results and reference data. Seventy percent (70%) of the inventory map, along with all the features, were selected as training sets to train the RF model.

2.6. Random Forest (RF)

The RF classifier method has been implemented for detecting landslides using many types of remote sensing data [

3,

30,

56,

57,

58,

59]. The algorithm builds upon multiple decision trees that depend on randomly selected subsets of the training dataset. The RF makes use of the high variance among individual trees in a classification problem by assigning the respective classes based on majority votes. The merit of this approach lies in its performance on complex datasets, with fewer attempts for fine-tuning [

30]. RF is defined as a random subset of the original set of features, whereas a classification and regression tree considers all variables in each node. Users can estimate the number of variables per node by using the square root of the total variable number. Various mechanisms, such as sampling and usage of random variables, in each node may produce entirely different uncorrelated trees. Moreover, large numbers of trees are absolutely required to obtain variability in the training data and attain accurate classification. A total vote of all the trees in the forest is used to determine a feature and the class will then be assigned on the basis of the majority vote.

RF package [

54], an open-source statistical language R, was used in this research. The parameters used in the analysis were the number of variables in the random subset at each node and the number of trees in the forest. The number (500) of trees was chosen, which is a typical value for the RF classifier [

30]. A single randomly split variable was employed to grow the trees. The inventory map (70%) together with other features and feature subsets were chosen as training sets to train the RF model. The remaining 30% of the inventory was used to evaluate the accuracy of the classification. The mean and standard deviation (stdev) values of the classification accuracies were then obtained from 50 random runs.

2.7. K-Nearest Neighbour (KNN)

KNN is a powerful tool utilized in many object-based workflows due to its flexibility and simplicity [

60,

61]. It is used most often for classification in object-based software frameworks, i.e., eCognition (Trimble Geospatial, Munich, Germany). In comparison to model-based learning, KNN allocates the object to the class based on proximity or neighborhood in the feature space, rather than learning from a model [

62]. The closest

K neighbors are obtained from the training set and then used to vote for the final predicted new object.

K is usually a tunable parameter, typically assuming a small and positive integer value [

5]. In this study, an optimal

K parameter was used based on cross-validation and bootstrap samples which were used to search for the best

K value, where

K ranges from 1 to 10 in steps of 1 and the best value is applied to the KNN algorithm implemented in the R package “class”. The inventory map (70%) together with all the features were selected as training sets to train the KNN model.

2.8. Dempster–Shafer Theory

The DST is built on a frame of discernment, formally defined as the set of mutually exclusive and collectively exhaustive hypotheses, represented by

Θ [

62,

63]. By defining two functions (Plausibility

and Belief

), this theory seeks to model imprecision and uncertainty. The two functions are essentially derived from a mass function

, with the latter function being applicable to every element of

in lieu of exclusively to elements of

θ. Thus, a rich and flexible modeling behavior is achieved which may potentially address numerous remote sensing applications. Thus, a mass function

allocates belief for each proposition, shown in the following equation:

where

is the empty set. Two common evidential measures within the mass function are belief

and plausibility

, both defined in equations:

where for every

,

is a measure of the total amount of beliefs committed exactly to every subset of

by

.

signifies the degree to which the evidence remains plausible. These two functions, which are regarded as the lower and upper probabilities, respectively, contain following properties:

The rule of combination in the proposed DST builds on the mathematical theory substantiating the combination of the mass functions

obtained from

sources of information given in Equations (6) and (7):

where

denotes the degree of conflict given in Equation (8):

Various methods exist for taking a final decision using the DST decision technique as found in the literature: the maximum mass, plausibility, or belief [

28]. From the probabilistic SVM, RF, and KNN classifiers, the posterior probabilities are converted in the form of mass function

. These, in turn, are then combined using the DST method. The combination output may be considered a belief function, which defines a posterior probability measure for each thematic.

2.9. Fusion of Three Object-Based Classifiers via Dempster–Shafer Theory (DST)

The fusion level analysis (FLA) method groups different classified features together and fuses them into a new class based on the belief confusion matrix [

14]. In the present study, the DST algorithm was employed to perform the feature-based data fusion. This method comprises well-defined combination rules that are capable of combining several belief functions in the same frame. The theory is built on the formulated basis of harmonizing the information from many sources [

64]. The evidence is then integrated in a reliable approach to complete the evaluation of the entire body of evidence. DST may serve both uncertainty and imprecision from belief and plausibility functions, while also possessing the ability to compute compound hypotheses [

65].

Consequently, the results of three object-based approaches (i.e., SVM, KNN, and RF) were combined by fusing the DST with LiDAR data. The method employed the fused class label contained within each pixel with the maximal belief function. The produced fused pixels were set as an unclassified value in the case of multiple class labels [

64]. For all classified landslide and non-landslide (frame of discernment), the belief functions were estimated using a precision function, which processes the confidence of a classifier probability. DST considers the majority of classified labels and then assigns that label to the segment. The belief masses for labelled features resulting from the classifiers were calculated using a confusion matrix, which is a text file that fuses the most probable feature label. Fundamentally, belief measurements are divided into four types, namely, precision, accuracy, Kappa, and recall. Due to its high performance in previous works, the precision belief function was selected in the present study to label the belief classes from standalone classifiers.