Integrating Drone Imagery into High Resolution Satellite Remote Sensing Assessments of Estuarine Environments

Abstract

1. Introduction

1.1. Estuarine Habitats and Geomorphology

1.2. Satellite Mapping in Estuarine Environments

1.3. Unoccupied Aircraft Systems Estuarine/Marine Applications

1.4. National Estuarine Research Reserve Program and Rachel Carson Reserve Study Area

1.5. Study Objectives

- Assess the ability of UAS to replace field work for classification algorithm training and validation

- Compare WorldView-3 and RapidEye for estuarine habitat classification

- Test the utility of image texture, spectral indices, and data fusion with LiDAR

- Analyze changes in detailed coastal cover types from 2004 to 2017

2. Materials and Methods

2.1. NERRS Classification Scheme

2.2. Remotely Sensed Data

2.2.1. UAS Imagery Collection and Processing

2.2.2. WorldView-3 and RapidEye Satellite Imagery

2.2.3. LiDAR-Derived Digital Elevation Models

2.2.4. Rachel Carson Reserve Habitat Maps and Change Analysis

2.3. Image Pre-Processing

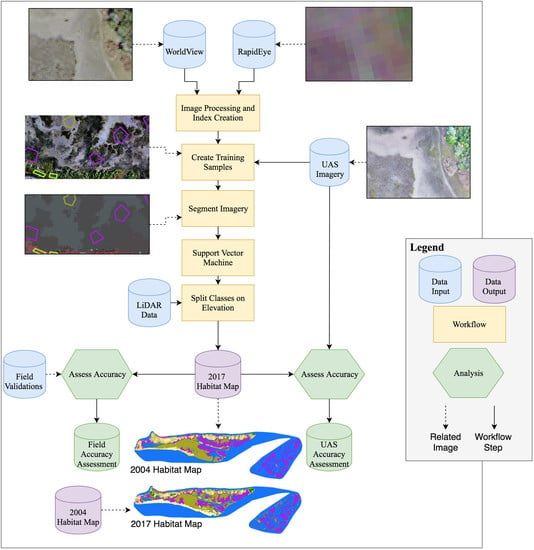

2.4. Supervised Classification Workflow

2.5. Accuracy Assessment

2.5.1. UAS Assessment

2.5.2. Field Assessment

3. Results

3.1. Estuarine Habitat Mapping

3.1.1. UAS vs. Field Validation

3.1.2. WorldView-3 vs. RapidEye

3.2. Change Analysis with 2017 RCR Map

4. Discussion

4.1. UAS and Field Work for Validation

4.2. WV3 and RE Classifications

4.3. Image Layers and Thresholding

4.4. Conservation Implications

4.5. Continuing Difficulties and Future Work

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Class | ID | Subsystem | Definition |
|---|---|---|---|
| Subtidal Haline | 2100 | Subtidal Haline | the substrate is continuously submerged [by tidal water and] … ocean-derived salts measure [at least] 0.5‰ during the period of average annual low flow. |
| Intertidal Sand | 2253 | Intertidal Haline | unconsolidated particles smaller than stones [constitute at least 25% areal cover and] are predominantly sand. Particle size ranges from 0.00625 mm to 2.0 mm in diameter. 1 |
| Emergent Wetland | 2260 | Intertidal Haline | characterized by erect, rooted, herbaceous hydrophytes, excluding mosses and lichens. This vegetation is present for most of the growing season in most years. These wetlands are usually dominated by perennial plants. |
| Supratidal Sand | 2323 | Supratidal Haline | 1 |
| Scrub-Shrub Wetland | 2350 | Supratidal Haline | includes areas dominated by woody vegetation less than 6 m (20 feet) tall. The species include true shrubs, young trees, and trees or shrubs that are small or stunted because of environment. 2 |
| Upland Sand | 6123 | Supratidal Upland | 1 |
| Herbaceous Upland | 6131 | Supratidal Upland | herbaceous upland habitat that is dominated by graminoids. |
| Scrub-Shrub Upland | 6140 | Supratidal Upland | 2 |
| Forested Upland | 6150 | Supratidal Upland | characterized by woody vegetation that is 6 m tall or taller. All water regimes are included except subtidal. |

| Subsystem | Definition |
|---|---|
| Intertidal Haline | the substrate is exposed and flooded by tides; includes the associated splash zone; … ocean-derived salts measure [at least] 0.5‰ during the period of average annual low flow. |
| Supratidal Haline | nontidal wetlands containing at least 0.5‰ ocean-derived salts at some point during a year of average rainfall. |
| Supratidal Upland | any coastal upland area above the highest spring tide mark that is periodically over-washed, covered, or soaked with seawater during storm events to an extent that affects habitat structure or function. |

| | WorldView-3 | RapidEye |
|---|---|---|
| Imagery Details | | |
| Spatial Resolution (m) | 1.24 | 5.0 |
| Radiometric Resolution | 11 bit | 12 bit |
| Revisit Rate | 4.5 days | 5.5 days |
| Revisit Rate (off-nadir) | Daily | Daily |
| Date of Acquisition | 31 October 2017 | 20 July 2017 |
| Time of Acquisition | 16:14:35 UTC | 16:04:21 UTC |
| Tidal State (m > MLLW) | 0.22 | -0.07 |
| Bands (nm) | | |
| Coastal Blue | 400–450 | - |
| Blue | 450–510 | 440–510 |
| Green | 510–580 | 520–590 |
| Yellow | 585–625 | - |
| Red | 630–690 | 630–685 |
| Red Edge | 705–745 | 690–730 |
| NIR 1 | 770–895 | 760–850 |
| NIR 2 | 860–1040 | - |
| Panchromatic | 450–800 | - |

| Product | Field | UAS |
|---|---|---|
| WV 8-band | 93% | 93% |
| WV 8-band + NDVI + texture | 79% | 83% |
| RE 5-band | 86% | 90% |
| RE 5-band + NDVI + texture | 87% | 92% |
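Two of the products above add an NDVI layer to the spectral bands. As a rough illustration of how such a layer is derived, the sketch below applies the standard NDVI formula, (NIR − Red) / (NIR + Red), pixel by pixel. The reflectance values are hypothetical and are not taken from the study, and the choice of which NIR band feeds the index (for example NIR 1 on WorldView-3) is an assumption here.

```python
def ndvi(nir, red):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red).

    Returns 0.0 for pixels where both bands are zero to avoid division by zero.
    """
    out = []
    for n, r in zip(nir, red):
        total = n + r
        out.append((n - r) / total if total != 0 else 0.0)
    return out


# Hypothetical surface reflectance for three pixels (illustrative only):
# dense vegetation, sparse vegetation, and open water.
nir_band = [0.45, 0.30, 0.02]
red_band = [0.05, 0.20, 0.04]

values = ndvi(nir_band, red_band)
for label, v in zip(["dense vegetation", "sparse vegetation", "water"], values):
    print(f"{label}: NDVI = {v:.2f}")
```

Vegetated pixels yield positive values that grow with canopy density, while water tends toward negative values, which is why the index can help separate vegetated classes from sand and subtidal classes.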

| UAS Validation (rows) / Field Validation (columns) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | Total Area |
|---|---|---|---|---|---|---|---|---|---|---|
| Subtidal Haline-1 | 22 | | | | | | | | | 22 |
| Supratidal Sand-2 | | 23 | | | | | | | | 23 |
| Emergent Wetland-3 | | 1 | 28 | | 1 | | | | | 30 |
| Scrub-Shrub Wetland-4 | | | | 29 | | | | | | 29 |
| Intertidal Sand-5 | 2 | 1 | | | 24 | | | | | 27 |
| Herbaceous Upland-6 | | | | | | 21 | | 2 | | 23 |
| Upland Sand-7 | | | | | | | 19 | | | 19 |
| Scrub-Shrub Upland-8 | | | | | | | | 16 | 2 | 18 |
| Forested Upland-9 | | | | | | | | | 23 | 23 |
| Total Area | 24 | 25 | 28 | 29 | 25 | 21 | 19 | 18 | 25 | 96% |
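The 96% in the corner of this matrix can be checked from the counts themselves. The sketch below is a minimal example, assuming that the largest count in each row is the cell where the UAS and field labels agree and that the row totals are as listed; it divides the number of agreeing points by the total number of points.

```python
# Agreeing (diagonal) counts and row totals, in the row order of the matrix above.
# Assumption: the largest count in each row is the agreeing cell.
diagonal = [22, 23, 28, 29, 24, 21, 19, 16, 23]
row_totals = [22, 23, 30, 29, 27, 23, 19, 18, 23]

agreeing_points = sum(diagonal)
all_points = sum(row_totals)

# Overall agreement = agreeing points / all validation points
agreement = agreeing_points / all_points
percent = round(agreement * 100)

print(f"{agreeing_points} of {all_points} points agree ({percent}%)")
```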

| Map Class (rows) / Reference Class (columns) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | Total Area | User’s Acc |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Subtidal Haline-1 | 0.5060 | | 0.0108 | | 0.0215 | | | | | 0.5383 | 94% |
| Supratidal Sand-2 | | 0.0393 | 0.0009 | | 0.0026 | | | | | 0.0428 | 92% |
| Emergent Wetland-3 | | | 0.1660 | 0.0035 | 0.0071 | | | | | 0.1765 | 94% |
| Scrub-Shrub Wetland-4 | | | 0.0037 | 0.0229 | | | | | | 0.0266 | 86% |
| Intertidal Sand-5 | | | 0.0141 | | 0.1269 | | | | | 0.1410 | 90% |
| Herbaceous Upland-6 | | | | | | 0.0183 | 0.0012 | 0.0008 | | 0.0203 | 90% |
| Upland Sand-7 | | | | | | 0.0011 | 0.0176 | 0.0000 | | 0.0187 | 94% |
| Scrub-Shrub Upland-8 | | | | | | 0.0026 | 0.0000 | 0.0186 | 0.0004 | 0.0216 | 86% |
| Forested Upland-9 | | | | | | 0.0003 | 0.0000 | 0.0023 | 0.0117 | 0.0142 | 82% |
| Total Area | 0.5060 | 0.0393 | 0.1954 | 0.0264 | 0.1581 | 0.0223 | 0.0188 | 0.0217 | 0.0121 | 0.9271 | |
| Producer’s Accuracy | 100% | 100% | 85% | 87% | 80% | 82% | 94% | 86% | 96% | | |

| Map Class (rows) / Reference Class (columns) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | Total Area | User’s Acc |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Subtidal Haline-1 | 0.4969 | | | | 0.0414 | | | | | 0.5383 | 92% |
| Supratidal Sand-2 | | 0.0393 | | | | | | | | 0.0393 | 100% |
| Emergent Wetland-3 | | 0.0068 | 0.1698 | | | | | | | 0.1765 | 96% |
| Scrub-Shrub Wetland-4 | | | 0.0024 | 0.0234 | | | | | | 0.0258 | 91% |
| Intertidal Sand-5 | | | 0.0059 | | 0.1352 | | | | | 0.1410 | 96% |
| Herbaceous Upland-6 | | | | | | 0.0225 | | 0.0012 | | 0.0237 | 95% |
| Upland Sand-7 | | | | | | 0.0026 | 0.0169 | | | 0.0195 | 87% |
| Scrub-Shrub Upland-8 | | | | | | | | 0.0202 | 0.0014 | 0.0216 | 93% |
| Forested Upland-9 | | | | | | | | 0.0016 | 0.0126 | 0.0142 | 89% |
| Total Area | 0.4969 | 0.0461 | 0.1780 | 0.0234 | 0.1766 | 0.0251 | 0.0169 | 0.0229 | 0.0141 | 0.9367 | |
| Producer’s Accuracy | 100% | 85% | 95% | 100% | 77% | 90% | 100% | 88% | 90% | | |
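User's and producer's accuracy in an area-proportion error matrix follow directly from the diagonal cell and the row and column totals. The sketch below is a minimal illustration for three classes from the matrix above, assuming the largest entry in each map-class row is the correctly classified (diagonal) proportion. Because the inputs are already rounded to four decimals, results recomputed this way for other classes can differ from the tabulated percentages by a point.

```python
def accuracy_pct(correct, total):
    """Return correct/total as a percentage rounded to the nearest whole number."""
    return round(100 * correct / total)


# (diagonal proportion, map-class row total, reference-class column total)
# copied from the matrix above for three example classes.
classes = {
    "Subtidal Haline": (0.4969, 0.5383, 0.4969),
    "Emergent Wetland": (0.1698, 0.1765, 0.1780),
    "Intertidal Sand": (0.1352, 0.1410, 0.1766),
}

results = {}
for name, (diag, row_total, col_total) in classes.items():
    users = accuracy_pct(diag, row_total)      # share of mapped area that is correct
    producers = accuracy_pct(diag, col_total)  # share of reference area that was mapped
    results[name] = (users, producers)
    print(f"{name}: user's {users}%, producer's {producers}%")
```

A low producer's accuracy paired with a high user's accuracy, as for Intertidal Sand here, indicates that the class is under-mapped: what is labeled as the class is usually right, but part of its reference area was assigned to other classes.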

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Gray, P.C.; Ridge, J.T.; Poulin, S.K.; Seymour, A.C.; Schwantes, A.M.; Swenson, J.J.; Johnston, D.W. Integrating Drone Imagery into High Resolution Satellite Remote Sensing Assessments of Estuarine Environments. Remote Sens. 2018, 10, 1257. https://doi.org/10.3390/rs10081257
