Automated Cloud and Cloud-Shadow Masking for Landsat 8 Using Multitemporal Images in a Variety of Environments

Abstract

1. Introduction

2. Material

3. Methods

3.1. MCM Algorithm Improvements

3.2. Accuracy Assessment of the New MCM

3.3. Comparison Between the New MCM and L8 CCA Algorithm

4. Results and Discussion

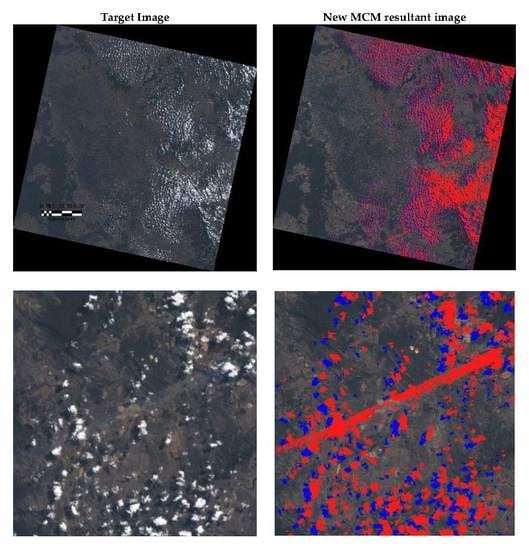

4.1. Visual Assessments of the New MCM Results

4.2. Statistical Assessments of the New MCM

4.3. Comparison Between the New MCM and L8 CCA Algorithm

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition | Abbreviation | Definition |
|---|---|---|---|
| MCM | Multitemporal Cloud Masking | DEM | Digital Elevation Model |
| L8 CCA | Landsat 8 Cloud Cover Assessment | OLI | Operational Land Imager |
| USGS | The U.S. Geological Survey | TIRS | Thermal Infrared Sensor |
| HOT | Haze Optimized Transformation | MTCD | Multitemporal Cloud Detection |
| Fmask | Function of Mask | L1TP | Level-1 Precision Terrain |
| Tmask | multiTemporal mask | | |


| Height Level | Cloud-Base Height, Polar Regions | Cloud-Base Height, Temperate Regions | Cloud-Base Height, Tropical Regions |
|---|---|---|---|
| Low | Below 2 km | Below 2 km | Below 2 km |
| Middle | 2–4 km | 2–7 km | 2–8 km |
| High | 3–8 km | 5–13 km | 6–18 km |

| Path/Row | Area | Environment | Cloud Type | Land Cover Class |
|---|---|---|---|---|
| 090/079 | Queensland, Australia | Sub-tropical South | Thick | Settlement, cropland, forest, wetland, and open land |
| 091/085 | New South Wales, Australia | Sub-tropical South | Thick | Settlement, cropland, forest, wetland, open land, and water |
| 091/085 | New South Wales, Australia | Sub-tropical South | Thin | Settlement, cropland, forest, wetland, open land, and water |
| 170/078 | Johannesburg, South Africa | Sub-tropical South | Thick | Settlement, cropland, open land, and water |
| 171/074 | Bulawayo, Zimbabwe | Tropical | Thick and thin | Settlement, cropland, forest, open land, swamp, and water |
| 175/062 | Kindu, Democratic Republic of the Congo | Tropical | Thick | Settlement, open land, cropland, forest, and water |
| 170/063 | Tabora, Tanzania | Tropical | Thick | Open land, wetland, forest, and water |
| 041/033 | Nevada, USA | Sub-tropical North | Thick | Settlement, open land, and water |
| 192/024 | Berlin, Germany | Sub-tropical North | Thick | Settlement, cropland, forest, open land, and water |
| 202/038 | Marrakesh, Morocco | Sub-tropical North | Thick | Settlement, desert, cropland, open land, and water |

| Error Metric | Settlement | Cropland | Forest | Desert | Average |
|---|---|---|---|---|---|
| Commission Error of New MCM | 0.024 | 0.018 | 0.035 | 0.001 | 0.019 |
| Commission Error of L8 CCA | 0.013 | 0.004 | 0.006 | 0.003 | 0.007 |
| Omission Error of New MCM | 0.009 | 0.039 | 0.120 | 0.009 | 0.010 |
| Omission Error of L8 CCA | 0.220 | 0.212 | 0.126 | 0.500 | 0.264 |

| Error Metric | Settlement | Cropland | Forest | Desert | Average |
|---|---|---|---|---|---|
| Commission Error of New MCM | 0.013 | 0.010 | 0.058 | 0.000 | 0.020 |
| Commission Error of L8 CCA | 0.583 | 0.339 | 0.354 | 0.165 | 0.360 |
| Omission Error of New MCM | 0.025 | 0.024 | 0.001 | 0.084 | 0.033 |
| Omission Error of L8 CCA | 0.136 | 0.104 | 0.043 | 0.249 | 0.133 |
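
The commission and omission errors tabulated above are commonly derived from a pixel-level comparison between a predicted mask and a reference mask. The sketch below assumes the conventional definitions (commission error as the share of predicted mask pixels that are wrong, omission error as the share of reference mask pixels that were missed) and assumes that the Average column is an unweighted mean over the four land-cover classes. The exact formulas used in the paper are not shown in this excerpt, and the names `MaskCounts`, `commission_error`, `omission_error`, and `class_average` are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class MaskCounts:
    """Pixel counts from comparing a predicted mask with a reference mask."""
    true_positive: int   # masked in both the prediction and the reference
    false_positive: int  # masked in the prediction only
    false_negative: int  # masked in the reference only


def commission_error(counts: MaskCounts) -> float:
    """Fraction of predicted mask pixels that are not masked in the reference."""
    predicted = counts.true_positive + counts.false_positive
    return counts.false_positive / predicted if predicted else 0.0


def omission_error(counts: MaskCounts) -> float:
    """Fraction of reference mask pixels that the prediction missed."""
    reference = counts.true_positive + counts.false_negative
    return counts.false_negative / reference if reference else 0.0


def class_average(errors: Sequence[float]) -> float:
    """Unweighted mean of per-class errors (assumed meaning of 'Average')."""
    return sum(errors) / len(errors)


# Hypothetical pixel counts for illustration; not taken from the paper.
example = MaskCounts(true_positive=950, false_positive=50, false_negative=25)
print(commission_error(example))  # 50 / (950 + 50)
print(omission_error(example))    # 25 / (950 + 25)

# Per-class commission errors of the new MCM from the second table above.
print(class_average([0.013, 0.010, 0.058, 0.000]))
```

Under the unweighted-mean assumption, the last call gives 0.02025, which is consistent with the 0.020 reported in the Average column of that row.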

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Candra, D.S.; Phinn, S.; Scarth, P. Automated Cloud and Cloud-Shadow Masking for Landsat 8 Using Multitemporal Images in a Variety of Environments. Remote Sens. 2019, 11, 2060. https://doi.org/10.3390/rs11172060