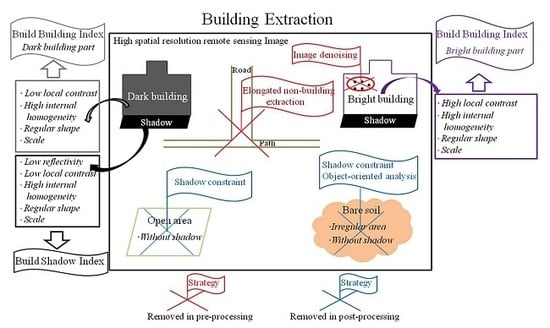

Figure 1.

Flowchart of the proposed framework.

Figure 2.

Attribute profiles (APs) and differential attribute profiles (DAPs) obtained with the attribute ld at thresholds of 10, 30, 50, 70, and 90. The example grayscale image is shown in Figure 4j. (a–e) Opening profiles obtained at thresholds of 10, 30, 50, 70, and 90, respectively; (f–i) DAPs obtained between adjacent scales.
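
The relationship between panels (a–e) and panels (f–i) can be sketched in a few lines. This is a minimal illustration, assuming that each DAP is the per-pixel absolute difference between the opening profiles at two adjacent thresholds. The function name `differential_profiles` and the toy one-row "profiles" are hypothetical, and the attribute opening itself is not implemented here.

```python
def differential_profiles(profiles):
    """Return per-pixel absolute differences between adjacent profiles.

    `profiles` is a list of 2-D images (lists of rows), ordered by
    increasing attribute threshold.
    """
    daps = []
    for prev, curr in zip(profiles, profiles[1:]):
        daps.append([
            [abs(a - b) for a, b in zip(row_prev, row_curr)]
            for row_prev, row_curr in zip(prev, curr)
        ])
    return daps


thresholds = [10, 30, 50, 70, 90]

# Toy stand-ins for the five opening profiles (one row of three pixels each).
# Real profiles would come from an attribute opening on the attribute ld.
profiles = [
    [[200, 120, 90]],
    [[200, 100, 90]],
    [[150, 100, 90]],
    [[150, 100, 40]],
    [[150, 100, 40]],
]

daps = differential_profiles(profiles)
```

Five profiles yield four differential profiles, which matches the four DAP panels (f–i) in the figure.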

Figure 3.

Pre-processing flowchart.

Figure 4.

Example showing the steps of the proposed strategy: (a) example image; (b) the image obtained after image denoising; (c) the input image I; (d,e) the building maps obtained from MABIbright and MABIdark, respectively; (f) MASI feature image; (g) overlay image of the obtained buildings and shadows, with high-MABI in yellow, low-MABI and MABIdark in blue, and shadows in red; (h) the final results of the proposed method.

Figure 5.

Three test datasets and the corresponding ground truth maps: (a) Dataset 1 and Subgraphs I1 (in the red box) and I2 (in the blue box); (b) Dataset 2 and Subgraphs I3 (in the red box) and I4 (in the blue box); (c) Dataset 3 and Subgraphs I5 (in the red box) and I6 (in the blue box).

Figure 6.

Building feature extraction results for Dataset 1: (a,b) the RGB image and the ground truth map; (c) the building detection result of the MBI; (d–f) the building maps with the results of the pixel-based SVM, DMP-SVM, and object-oriented SVM, respectively; (g,h) the building detection results of DMP-RF and object-oriented RF; (i) the results of the proposed framework.

Figure 7.

Building feature extraction results for Dataset 2: (a,b) the RGB image and the ground truth map; (c) the building detection result of the MBI; (d–f) the building maps with the results of the pixel-based SVM, DMP-SVM, and object-oriented SVM, respectively; (g,h) the building detection results of DMP-RF and object-oriented RF; (i) the results of the proposed framework.

Figure 8.

Building feature extraction results for Dataset 3: (a,b) the RGB image and the ground truth map; (c) the building detection result of the MBI; (d–f) the building maps with the results of the pixel-based SVM, DMP-SVM, and object-oriented SVM, respectively; (g,h) the building detection results of DMP-RF and object-oriented RF; (i) the results of the proposed framework.

Figure 9.

Building detection results of Test Patches I1 and I2. (a) RGB image; (b) MBI results; (c–e) the building maps with the results of the pixel-based SVM, DMP-SVM, and object-oriented SVM, respectively; (f,g) DMP-RF and object-oriented RF results; (h) the proposed method results.

Figure 10.

Building detection results of Test Patches I3 and I4. (a) RGB image; (b) MBI results; (c–e) the building maps with the results of the pixel-based SVM, DMP-SVM, and object-oriented SVM, respectively; (f,g) DMP-RF and object-oriented RF results; (h) the proposed method results.

Figure 11.

Building detection results of Test Patches I5 and I6. (a) RGB image; (b) MBI results; (c–e) the building maps with the results of the pixel-based SVM, DMP-SVM, and object-oriented SVM, respectively; (f,g) DMP-RF and object-oriented RF results; (h) the proposed method results.

Figure 12.

The OA of the building feature detection results of the MBI and MABI based on different input images: the bright image and I’. (a–c) are the statistical results of Dataset 1, Dataset 2, and Dataset 3, respectively.

Figure 13.

The MBI and MABI feature results based on the bright image and I’ for Patches I1 and I5: (a) bright image; (b) results of the MBI based on the bright image; (c) results of the MABI based on the bright image; (d) image I’; (e,f) results of the MBI and MABI, respectively, based on I’.

Figure 14.

Building feature extraction results of Patches I3, I4, and I5 for the step analysis of the proposed method: (a) ground truth image; (b) result of MABIbright without non-building object detection; (c) result of MABIbright(I); (d) result of the MABI without the shadow constraint. The red and green regions highlight the performance for elongated objects and dark buildings, respectively.

Figure 15.

Relationship between building detection accuracies and the thresholds of attributes sd and Hu for Dataset 2.

Figure 16.

Relationship between overall accuracies of building detection and the thresholds of attribute ld in Dataset 2.

Table 1.

Notations used in this paper.

| Notation | Description |
|---|---|
| | The n bands of image f |
| | Opening/thinning/closing operator |
| | The differential attribute profile (DAP) obtained by the opening/closing profile in the attribute profile (APs) |
| | The stack of thinning profiles in EAP (the extension of the APs) |
| | Ordered set of m criteria/attributes |
| | The opening profile/closing profile/DAP obtained by Attr with t |
| | The filter parameter of attribute Attr |

Table 2.

Details of the test datasets.

| Dataset | Sensor | Resolution (m) | Size (pixels) | Major Land Cover Types |
|---|---|---|---|---|
| Dataset 1 | WorldView-2 | 2.0 | 2000 × 2000 | Building: 428,674 pixels. Background (vegetation, road, bare soil, path): 3,571,326 pixels. |
| Dataset 2 | QuickBird | 2.4 | 1100 × 1100 | Building: 290,403 pixels. Background (vegetation, road, bare soil, path, water): 919,597 pixels. |
| Dataset 3 | QuickBird | 2.4 | 1060 × 1600 | Building: 184,034 pixels. Background (vegetation, asphalt road, bare soil, open area): 1,511,966 pixels. |

Table 3.

Training and test samples for the three datasets.

| Class | Dataset 1: No. of Training Samples | Dataset 1: No. of Test Samples | Dataset 2: No. of Training Samples | Dataset 2: No. of Test Samples | Dataset 3: No. of Training Samples | Dataset 3: No. of Test Samples |
|---|---|---|---|---|---|---|
| Building | 858 | 427,816 | 1,275 | 289,128 | 1,147 | 182,887 |
| Background | 1,184 | 3,570,142 | 1,835 | 917,762 | 1,562 | 1,510,404 |
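
The counts in Tables 2 and 3 can be cross-checked: for every class, the training and test samples should add up to the per-class pixel total listed in Table 2. The sketch below performs that check with numbers copied from the two tables. The dictionary layout and the names `totals`, `samples`, and `training_share` are illustrative assumptions.

```python
# Per-class pixel totals (Table 2).
totals = {
    ("Dataset 1", "Building"): 428_674,
    ("Dataset 1", "Background"): 3_571_326,
    ("Dataset 2", "Building"): 290_403,
    ("Dataset 2", "Background"): 919_597,
    ("Dataset 3", "Building"): 184_034,
    ("Dataset 3", "Background"): 1_511_966,
}

# (training samples, test samples) per class (Table 3).
samples = {
    ("Dataset 1", "Building"): (858, 427_816),
    ("Dataset 1", "Background"): (1_184, 3_570_142),
    ("Dataset 2", "Building"): (1_275, 289_128),
    ("Dataset 2", "Background"): (1_835, 917_762),
    ("Dataset 3", "Building"): (1_147, 182_887),
    ("Dataset 3", "Background"): (1_562, 1_510_404),
}


def training_share(train, test):
    """Fraction of a class's pixels that were used for training."""
    return train / (train + test)


# Classes whose training + test counts do not match the Table 2 total.
mismatches = [
    key for key, (train, test) in samples.items()
    if train + test != totals[key]
]

shares = {key: training_share(*counts) for key, counts in samples.items()}
```

With the values above, `mismatches` is empty, and every entry in `shares` is below 1%, so the supervised baselines are trained on a very small fraction of each class.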

Table 4.

Parameters of the proposed method and their suggested ranges.

| Feature Extraction Variable | Fixed Value in This Study | Suggested Range | Post-Processing Variable | Fixed Value in This Study | Suggested Range |
|---|---|---|---|---|---|
| λbright | 0.35 | [0.1, 0.5] | tsd | 7 | [5, 8] |
| NDVI | 0.58 | [0.1, 0.6] | tHu | 0.7 | [0.7, 0.9] |
| RcFit | 0.7 | [0.5, 0.7] | TMABI | 0.25 | [0.1, 0.4] |
| SI | 1.1 | [1, 1.5] | TMASI | 0.4 | [0.1, 0.4] |
| Dist | 0 in high-MABI, 10 in low-MABI | 0 in high-MABI, 10 in low-MABI | ld in MABI | From 10 to 100, interval is 5 | [10, 200] |
| | | | ld in MASI | From 4 to 28, interval is 4 | [2, 50] |
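
The two ld entries describe arithmetic sequences of thresholds, which can be expanded explicitly. The sketch below does so and also checks that each scalar value fixed in this study lies inside its suggested range. All numbers are copied from Table 4, while the ASCII parameter names, the dictionary layout, and the helper functions are illustrative assumptions.

```python
def scale_sequence(start, stop, step):
    """Inclusive arithmetic sequence of ld thresholds."""
    return list(range(start, stop + 1, step))


mabi_ld = scale_sequence(10, 100, 5)  # ld in MABI: 10, 15, ..., 100
masi_ld = scale_sequence(4, 28, 4)    # ld in MASI: 4, 8, ..., 28

# name: (fixed value in this study, (range minimum, range maximum))
fixed_and_range = {
    "lambda_bright": (0.35, (0.1, 0.5)),
    "NDVI": (0.58, (0.1, 0.6)),
    "RcFit": (0.7, (0.5, 0.7)),
    "SI": (1.1, (1.0, 1.5)),
    "t_sd": (7, (5, 8)),
    "t_Hu": (0.7, (0.7, 0.9)),
    "T_MABI": (0.25, (0.1, 0.4)),
    "T_MASI": (0.4, (0.1, 0.4)),
}


def out_of_range(params):
    """Names of parameters whose fixed value falls outside its range."""
    return [
        name for name, (value, (low, high)) in params.items()
        if not low <= value <= high
    ]


violations = out_of_range(fixed_and_range)
```

This gives 19 scales for the MABI and 7 scales for the MASI, and no fixed value violates its suggested range. Several values (RcFit, tHu, TMASI) sit exactly on a range boundary.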

Table 5.

Building detection accuracies of the test datasets.

| Method | Dataset 1 OA (%) | Dataset 1 OE (%) | Dataset 1 CE (%) | Dataset 1 Kc | Dataset 2 OA (%) | Dataset 2 OE (%) | Dataset 2 CE (%) | Dataset 2 Kc | Dataset 3 OA (%) | Dataset 3 OE (%) | Dataset 3 CE (%) | Dataset 3 Kc |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MBI | 88.81 | 49.56 | 52.09 | 0.62 | 81.56 | 57.54 | 31.24 | 0.61 | 89.60 | 35.83 | 48.34 | 0.66 |
| Pixel-Based SVM | 71.07 | 19.3 | 75.64 | 0.51 | 62.38 | 11.11 | 62.1 | 0.51 | 76.43 | 16.1 | 70.56 | 0.56 |
| DMP-SVM | 85.81 | 45.9 | 61.53 | 0.59 | 77.14 | 47.17 | 47.17 | 0.56 | 87.08 | 53.55 | 58.53 | 0.59 |
| Object-Oriented SVM | 88.32 | 21.89 | 52.73 | 0.66 | 72.58 | 19.13 | 54.04 | 0.58 | 89.45 | 51.85 | 48.54 | 0.63 |
| DMP-RF | 85.03 | 15.25 | 59.48 | 0.64 | 78.34 | 30.16 | 46.25 | 0.62 | 84.11 | 20.81 | 61.34 | 0.62 |
| Object-Oriented RF | 89.91 | 49.92 | 48.22 | 0.63 | 80.51 | 13.99 | 43.87 | 0.66 | 85.17 | 7.57 | 58.27 | 0.65 |
| Proposed | 90.27 | 26.52 | 46.65 | 0.69 | 84.53 | 36.32 | 30.67 | 0.68 | 91.13 | 27.09 | 42.90 | 0.70 |
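
For readers unfamiliar with the four column headings, the sketch below computes them from a binary building/background confusion matrix. It assumes the commonly used definitions, with OA as overall accuracy, OE as omission error, CE as commission error, and Kc as Cohen's kappa coefficient; the paper may define them differently. The function name and the pixel counts in the example are hypothetical and are not taken from the test datasets.

```python
def building_metrics(tp, fp, fn, tn):
    """Accuracy measures for a binary building map.

    tp: building pixels detected as building
    fp: background pixels detected as building
    fn: building pixels detected as background
    tn: background pixels detected as background
    """
    n = tp + fp + fn + tn
    oa = (tp + tn) / n            # overall accuracy
    oe = fn / (tp + fn)           # omission error: missed buildings
    ce = fp / (tp + fp)           # commission error: false detections
    # Agreement expected by chance, from the row and column totals.
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    kappa = (oa - pe) / (1 - pe)
    return {"OA": 100 * oa, "OE": 100 * oe, "CE": 100 * ce, "Kc": kappa}


# Hypothetical counts for a 1000-pixel toy image.
metrics = building_metrics(tp=80, fp=20, fn=40, tn=860)
```

OA is dominated by the large background class, which is why a method can reach a high OA while still showing large OE or CE values for buildings.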

Table 6.

Running time (in seconds) of all building detection methods used in this study.

| Method | Dataset 1 | Dataset 2 | Dataset 3 |
|---|---|---|---|
| MBI | 146.35 | 55.34 | 72.91 |
| Pixel-Based SVM | 130.57 | 45.46 | 66.97 |
| DMP-SVM | 624.85 | 145.53 | 193.65 |
| Object-Oriented SVM | 1434.39 | 184.59 | 241.93 |
| DMP-RF | 1648.25 | 413.67 | 579.21 |
| Object-Oriented RF | 1581.41 | 185.42 | 252.43 |
| Proposed | 217.72 | 101.58 | 132.09 |

Table 7.

Accuracies of the building feature extraction results for each step of the proposed framework.

| Step | Dataset 1 OA (%) | Dataset 1 OE (%) | Dataset 1 CE (%) | Dataset 1 Kc | Dataset 2 OA (%) | Dataset 2 OE (%) | Dataset 2 CE (%) | Dataset 2 Kc | Dataset 3 OA (%) | Dataset 3 OE (%) | Dataset 3 CE (%) | Dataset 3 Kc |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MABIbright(I’) | 81.07 | 32.95 | 68.15 | 0.57 | 71.82 | 39.51 | 55.96 | 0.54 | 86.33 | 34.22 | 58.26 | 0.62 |
| MABIbright(I) | 89.71 | 31.64 | 48.37 | 0.66 | 82.11 | 37.48 | 38.24 | 0.65 | 90.94 | 33.79 | 42.47 | 0.68 |
| MABI | 90.6 | 26.18 | 45.92 | 0.68 | 83.72 | 35.55 | 33.22 | 0.67 | 90.22 | 26.51 | 42.78 | 0.68 |

Table 8.

Accuracy of the building detection results with different shadow constraints.

| Method | Dataset 1 OA (%) | Dataset 1 OE (%) | Dataset 1 CE (%) | Dataset 1 Kc | Dataset 2 OA (%) | Dataset 2 OE (%) | Dataset 2 CE (%) | Dataset 2 Kc | Dataset 3 OA (%) | Dataset 3 OE (%) | Dataset 3 CE (%) | Dataset 3 Kc |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MBI | 88.81 | 48.56 | 52.09 | 0.62 | 81.56 | 57.54 | 31.24 | 0.61 | 89.6 | 35.83 | 48.34 | 0.66 |
| MBI+MASI | 89.1 | 48.18 | 50.88 | 0.63 | 81.6 | 57.36 | 31.17 | 0.61 | 89.65 | 35.12 | 48.07 | 0.66 |
| MABI+MSI | 90.17 | 27.31 | 45.06 | 0.68 | 84.23 | 36.31 | 31.66 | 0.68 | 91.11 | 27.89 | 41.7 | 0.7 |
| Proposed | 91.02 | 26.44 | 44.71 | 0.7 | 84.54 | 36.2 | 30.67 | 0.68 | 91.13 | 27.09 | 41.7 | 0.7 |