A Novel Intelligent Classification Method for Urban Green Space Based on High-Resolution Remote Sensing Images

Abstract

1. Introduction

- (1)

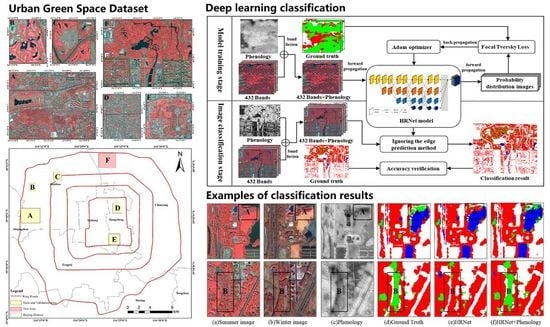

- This paper proposed introducing phenological features into deep learning urban green space classification research. The experimental results show that the addition of phenological features can improve the richness of HRNet model learning, optimize the classification results, and eliminate the small misclassification phenomenon, proving that vegetation phenology is very useful in enhancing urban vegetation classification.

- (2)

- This paper proved that Focal Tversky Loss is better than Cross Entropy Loss, Dice Loss, and Tversky Loss by using the GF-2 Beijing urban green space dataset. The Focal Tversky Loss can reduce data imbalance in the classification task and improve urban green space classification accuracy.

- (3)

- We compared HRNet with other convolutional neural network models, including SegNet, DeepLabv3+, U-Net, and ResUNet. The results show that our method performed well in urban green space classification.
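The exact encoding of the phenological feature is not given in this section. As a minimal sketch, assuming phenology is supplied to the network as one extra input channel derived from a leaf-on (summer) and a leaf-off (winter) image of the same area, the per-pixel NDVI difference below separates deciduous trees (large seasonal drop) from evergreen trees (small drop). The band layout `(red, nir)`, the function names, and the choice of an NDVI difference are all hypothetical illustrations, not necessarily the feature used in this paper.

```python
# Hypothetical sketch: append a phenology channel (NDVI_summer - NDVI_winter)
# to the spectral bands of a leaf-on image. Band layout and feature choice are
# assumptions for illustration only.

def ndvi(red: float, nir: float, eps: float = 1e-6) -> float:
    """Normalized difference vegetation index for a single pixel."""
    return (nir - red) / (nir + red + eps)


def add_phenology_channel(summer, winter):
    """Stack the seasonal NDVI difference onto each summer pixel.

    summer, winter: co-registered H x W grids of (red, nir) reflectance tuples.
    Returns an H x W grid of (red, nir, delta_ndvi) tuples.
    """
    stacked = []
    for row_s, row_w in zip(summer, winter):
        out_row = []
        for (r_s, n_s), (r_w, n_w) in zip(row_s, row_w):
            delta = ndvi(r_s, n_s) - ndvi(r_w, n_w)  # seasonal greenness change
            out_row.append((r_s, n_s, delta))
        stacked.append(out_row)
    return stacked


# Illustrative reflectances: a deciduous pixel and an evergreen pixel.
summer = [[(0.05, 0.45), (0.05, 0.40)]]
winter = [[(0.10, 0.15), (0.06, 0.34)]]
for px in add_phenology_channel(summer, winter)[0]:
    print(round(px[2], 3))  # 0.6 (deciduous), then 0.078 (evergreen)
```

A real pipeline would apply the same idea to whole image arrays and feed the extra channel to HRNet alongside the GF-2 bands.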

2. Research Area and Data Source

2.1. Study Area

2.1.1. Classification of Urban Green Space

2.1.2. Training and Validation Area

2.1.3. Test Area

2.2. Data Source and Data Preprocessing

2.2.1. Satellite Image

2.2.2. Ground Truth

3. Methods

3.1. Phenological Feature

3.2. HRNet Convolutional Neural Network

3.2.1. Parallel Multi-Resolution Subnetworks

3.2.2. Repeated Multi-Scale Fusion

3.2.3. Multi-Scale Feature Concatenation

3.3. Focal Tversky Loss

3.4. Evaluation Metrics

4. Results and Analysis

4.1. Comparative Experiments of Different Networks

4.1.1. Comparison of Classification Results of Different Networks

4.1.2. Comparison of Total Parameters of Different Networks

4.2. Comparative Experiments of Different Loss Functions

4.3. Comparative Experiments on Vegetation Phenology

5. Discussion

5.1. Alleviation of Imbalanced Sample Classification by Introducing Focal Tversky Loss

5.2. Improvement of Urban Green Space Classification by Adding Vegetation Phenology into HRNet

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

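| Evaluation Metrics | Formula |
|---|---|
| F1-Score | $F1 = \dfrac{2 \times P \times R}{P + R}$, with $P = \dfrac{TP}{TP + FP}$ and $R = \dfrac{TP}{TP + FN}$ |
| OA | $OA = \dfrac{\sum_{i=1}^{k} p_{ii}}{\sum_{i=1}^{k} \sum_{j=1}^{k} p_{ij}}$ |
| FWIoU | $FWIoU = \dfrac{1}{\sum_{i=1}^{k} \sum_{j=1}^{k} p_{ij}} \sum_{i=1}^{k} \dfrac{\left(\sum_{j=1}^{k} p_{ij}\right) p_{ii}}{\sum_{j=1}^{k} p_{ij} + \sum_{j=1}^{k} p_{ji} - p_{ii}}$ |

Here $TP$, $FP$, and $FN$ are the true positive, false positive, and false negative pixel counts for a class, $P$ and $R$ are precision and recall, $k$ is the number of classes, and $p_{ij}$ is the number of pixels of class $i$ predicted as class $j$.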
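The evaluation metrics above can all be derived from one pixel-level confusion matrix. The sketch below assumes the standard definitions (per-class F1 from precision and recall, OA as the diagonal share, FWIoU as the class-frequency-weighted IoU); the function names and the toy two-class matrix are hypothetical and only illustrate the arithmetic.

```python
from typing import List

# conf[i][j] = number of pixels whose true class is i and predicted class is j
Matrix = List[List[int]]


def per_class_f1(conf: Matrix) -> List[float]:
    """F1 = 2PR / (P + R) for each class."""
    k = len(conf)
    scores = []
    for c in range(k):
        tp = conf[c][c]
        fp = sum(conf[i][c] for i in range(k)) - tp  # predicted c, truly another class
        fn = sum(conf[c]) - tp                       # truly c, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        scores.append(2 * precision * recall / denom if denom else 0.0)
    return scores


def overall_accuracy(conf: Matrix) -> float:
    """Share of all pixels lying on the diagonal."""
    total = sum(map(sum, conf))
    return sum(conf[i][i] for i in range(len(conf))) / total


def fw_iou(conf: Matrix) -> float:
    """Sum over classes of (class frequency) * IoU."""
    k = len(conf)
    total = sum(map(sum, conf))
    result = 0.0
    for c in range(k):
        tp = conf[c][c]
        gt = sum(conf[c])                         # ground-truth pixels of class c
        pred = sum(conf[i][c] for i in range(k))  # predicted pixels of class c
        union = gt + pred - tp
        if union:
            result += (gt / total) * (tp / union)
    return result


conf = [[50, 10], [5, 35]]  # toy two-class example
print([round(f, 4) for f in per_class_f1(conf)])  # [0.8696, 0.8235]
print(round(overall_accuracy(conf), 4))            # 0.85
print(round(fw_iou(conf), 4))                      # 0.7415
```

In the result tables of this paper, the "Average" column matches the arithmetic mean of the four per-class F1-scores; for example, $(0.9181 + 0.7248 + 0.7588 + 0.9590)/4 \approx 0.8402$ for HRNet with phenology.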

| Method | Deciduous Trees (F1) | Evergreen Trees (F1) | Grassland (F1) | Other (F1) | Average (F1) | OA | FWIoU |
|---|---|---|---|---|---|---|---|
| SVM | 0.8635 | 0.5202 | 0.1769 | 0.9486 | 0.6273 | 87.73% | 82.13% |
| DeepLabv3+ | 0.8875 | 0.5378 | 0.6361 | 0.9485 | 0.7525 | 91.14% | 84.11% |
| SegNet | 0.8937 | 0.6140 | 0.7307 | 0.9489 | 0.7968 | 91.62% | 84.94% |
| U-Net | 0.9102 | 0.6816 | 0.7015 | 0.9574 | 0.8127 | 92.81% | 86.99% |
| ResUNet | 0.9109 | 0.6541 | 0.7363 | 0.9601 | 0.81535 | 92.80% | 87.31% |
| HRNet | 0.9137 | 0.6918 | 0.7301 | 0.9590 | 0.8237 | 93.12% | 87.48% |
| HRNet + Phenology | 0.9181 | 0.7248 | 0.7588 | 0.9590 | 0.8402 | 93.24% | 87.93% |

| Method | Deciduous Trees (F1) | Evergreen Trees (F1) | Grassland (F1) | Other (F1) | Average (F1) | OA | FWIoU |
|---|---|---|---|---|---|---|---|
| HRNet (CE) | 0.9094 | 0.6575 | 0.7494 | 0.9562 | 0.818125 | 0.9249 | 0.8683 |
| HRNet (DL, , ) | 0.9015 | 0.6614 | 0.7167 | 0.9569 | 0.809125 | 0.9237 | 0.8640 |
| HRNet (TL, , ) | 0.9088 | 0.6570 | 0.7212 | 0.9590 | 0.8115 | 0.9264 | 0.8705 |
| HRNet (TL, , ) | 0.9091 | 0.6694 | 0.7020 | 0.9585 | 0.80975 | 0.9282 | 0.8702 |
| HRNet (TL, , ) | 0.9099 | 0.6828 | 0.7378 | 0.9589 | 0.82235 | 0.9289 | 0.8723 |
| HRNet (TL, , ) | 0.9060 | 0.6543 | 0.7608 | 0.9579 | 0.81975 | 0.9250 | 0.8684 |
| HRNet (FT, , , ) | 0.9137 | 0.6918 | 0.7301 | 0.9590 | 0.82365 | 0.9312 | 0.8748 |
| HRNet (FT, , , ) | 0.9056 | 0.6391 | 0.7552 | 0.9571 | 0.81425 | 0.9233 | 0.8666 |

CE: Cross Entropy Loss; DL: Dice Loss; TL: Tversky Loss; FT: Focal Tversky Loss. OA and FWIoU are given as fractions rather than percentages in this table.
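The Tversky-based losses compared in the table above can be sketched for soft, per-class predictions as follows. This is a minimal illustration, not the paper's implementation: the defaults `alpha=0.7`, `beta=0.3`, `gamma=4/3` are hypothetical values (the parameter settings used in the experiments are not reproduced here), `alpha` is assumed to weight false negatives and `beta` false positives (conventions differ between papers), and the focal variant assumes the common form $\sum_c (1 - TI_c)^{1/\gamma}$.

```python
from typing import Sequence


def tversky_index(probs: Sequence[float], labels: Sequence[int],
                  alpha: float = 0.7, beta: float = 0.3,
                  smooth: float = 1.0) -> float:
    """Soft Tversky index for one class.

    probs:  predicted probability of the class for each pixel
    labels: 1 if the pixel truly belongs to the class, else 0
    With alpha = beta = 0.5 and smooth = 0 this equals the soft Dice coefficient.
    """
    tp = sum(p * g for p, g in zip(probs, labels))
    fn = sum((1 - p) * g for p, g in zip(probs, labels))  # missed class pixels
    fp = sum(p * (1 - g) for p, g in zip(probs, labels))  # false alarms
    return (tp + smooth) / (tp + alpha * fn + beta * fp + smooth)


def tversky_loss(probs_per_class, labels_per_class,
                 alpha: float = 0.7, beta: float = 0.3) -> float:
    """Sum over classes of (1 - TI_c)."""
    return sum(1.0 - tversky_index(p, g, alpha, beta)
               for p, g in zip(probs_per_class, labels_per_class))


def focal_tversky_loss(probs_per_class, labels_per_class,
                       alpha: float = 0.7, beta: float = 0.3,
                       gamma: float = 4.0 / 3.0) -> float:
    """Sum over classes of (1 - TI_c) ** (1 / gamma)."""
    return sum((1.0 - tversky_index(p, g, alpha, beta)) ** (1.0 / gamma)
               for p, g in zip(probs_per_class, labels_per_class))


# Toy example: two classes, three pixels.
probs = [[0.9, 0.2, 0.1], [0.1, 0.8, 0.9]]
labels = [[1, 0, 0], [0, 1, 1]]
print(tversky_loss(probs, labels))
print(focal_tversky_loss(probs, labels))
```

Setting `gamma=1.0` reduces the focal variant to the plain Tversky loss, which makes the effect of the exponent easy to isolate when tuning.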

| Method | Deciduous Trees (F1) | Evergreen Trees (F1) | Grassland (F1) | Other (F1) | Average (F1) | OA | FWIoU |
|---|---|---|---|---|---|---|---|
| HRNet | 0.9137 | 0.6918 | 0.7301 | 0.9590 | 0.8237 | 0.9312 | 0.8748 |
| HRNet + Phenology | 0.9181 | 0.7248 | 0.7588 | 0.9590 | 0.8402 | 0.9324 | 0.8793 |
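The gains from adding phenology can be read directly from the HRNet and HRNet + Phenology rows of the table above. Recomputing the differences from the reported values shows that the largest improvements fall on evergreen trees and grassland, the two vegetation classes with the lowest baseline F1-scores, while the "Other" class is unchanged:

```latex
\begin{aligned}
\Delta F1_{\text{evergreen}} &= 0.7248 - 0.6918 = 0.0330\\
\Delta F1_{\text{grassland}} &= 0.7588 - 0.7301 = 0.0287\\
\Delta F1_{\text{deciduous}} &= 0.9181 - 0.9137 = 0.0044\\
\Delta F1_{\text{average}}   &= 0.8402 - 0.8237 = 0.0165\\
\Delta \mathrm{OA}           &= 0.9324 - 0.9312 = 0.0012\\
\Delta \mathrm{FWIoU}        &= 0.8793 - 0.8748 = 0.0045
\end{aligned}
```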

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xu, Z.; Zhou, Y.; Wang, S.; Wang, L.; Li, F.; Wang, S.; Wang, Z. A Novel Intelligent Classification Method for Urban Green Space Based on High-Resolution Remote Sensing Images. Remote Sens. 2020, 12, 3845. https://doi.org/10.3390/rs12223845