Adapting Satellite Soundings for Operational Forecasting within the Hazardous Weather Testbed

Abstract

1. Introduction

2. Materials and Methods

2.1. Datasets

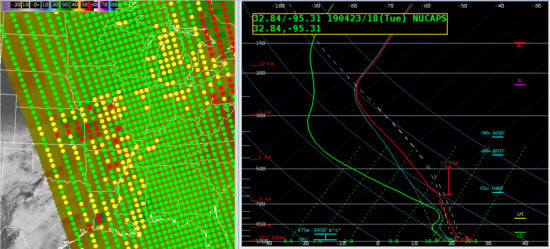

2.1.1. Baseline NUCAPS Soundings

2.1.2. Modified NUCAPS Soundings

2.1.3. Gridded NUCAPS

2.1.4. NUCAPS-Forecast

2.2. Methods

2.2.1. Hazardous Weather Testbed Design

2.2.2. Methods for Product Delivery

2.2.3. Questionnaire Design

3. Results

3.1. Utility of NUCAPS for Predicting Severe Storms

3.2. Assessing Limitations for Storm Prediction

3.3. Forecasters Feedback to NUCAPS Developers

4. Discussion

- Develop a clear understanding of user needs, communicate what the research community can realistically deliver, and be prepared to provide alternative products, tools, or data delivery methods where possible. As product developers, we often focus our efforts on meeting statistical requirements (e.g., producing data within a target error threshold) to increase product utilization in the research community. Through the HWT, we found that product latency was also of primary importance to forecasters. Improving product delivery into AWIPS and reducing the NUCAPS latency to 30 min greatly increased its utility for severe weather forecasting. This example also illustrates how developers, who know which changes are feasible, can help forecasters make achievable requests for improvements. Additionally, providing alternative visualization tools (for NUCAPS, BUFKIT and SHARPpy) gives forecasters the flexibility to view low-latency data in whatever way best supports their decision making.

- Developers should document and communicate the limitations of their product(s) for the scenario being tested. Each testbed focuses on a specific scenario; in the case of the HWT EWP, the focus is on issuing warnings on time scales of approximately 0–2 h. If forecasters repeatedly find the product of little use in certain situations, this should be documented in HWT training materials such as quick guides and communicated in pre-testbed training. As part of the operations-to-research process, developers should also incorporate these limitations into the general user guides for the product.

- For mature products, use training to foster more sophisticated analysis. As a product matures, the assessment should focus on how the product is used and alongside which other datasets. Based on HWT case studies, we suggest recording a screen capture of a live demonstration of the product. In addition to scenarios where the product works well, the training should clearly describe the product's limitations.

- Surveys should contain a mixture of quantitative and qualitative questions. Quantitative results permit easier comparison between HWT demonstrations and are faster for forecasters to fill out. We recommend repeating a core set of 2–3 quantitative questions every year. The remaining questions can be qualitative, answered in written narrative form. Written responses give forecasters the opportunity to describe their experiences in detail. Qualitative questions should be broad and ask what, when, where, and how to encourage a more detailed response.

- Products should meet quality requirements and be usable in AWIPS. No product development should occur in isolation; if possible, have a developer physically present in the room. Screen captures show developers what the product looks like, but watching forecasters interact with AWIPS provides additional insight. For instance, well-designed menus enable quick data access and comparison. Accessing gridded NUCAPS was a challenge in its first year, but the menus were improved in response to feedback. NUCAPS has 100 pressure levels, and forecasters do not use every combination; we found that a well-curated list of options is better than an exhaustive one. Not all changes can be made easily, however. Changes to AWIPS visualizations often take time to develop; for NUCAPS, forecasters disliked that they could not open and compare multiple soundings in the display or tell which profile they were viewing. These changes require updates to the baseline code and will take longer to push into operations.
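
The last recommendation argues that a curated menu works better than an exhaustive one for a 100-level profile. A minimal sketch of that idea, assuming the menu is built by snapping a short, hand-picked list of target pressures to the nearest available retrieval level. The function name `curate_levels`, the target list, and the evenly spaced stand-in levels are hypothetical; they are not the actual NUCAPS level set or the AWIPS menu configuration.

```python
def curate_levels(available_hpa, targets_hpa):
    """Return the available pressure level nearest to each target, without duplicates.

    available_hpa: sequence of retrieval pressure levels (hPa).
    targets_hpa: short, hand-picked list of levels forecasters actually use.
    (Hypothetical helper for illustration only.)
    """
    chosen = []
    for target in targets_hpa:
        # Retrieval level closest to this target pressure.
        level = min(available_hpa, key=lambda p: abs(p - target))
        if level not in chosen:  # skip if two targets snap to the same level
            chosen.append(level)
    # Order the menu from the surface upward (highest pressure first).
    return sorted(chosen, reverse=True)


# Hypothetical stand-in for a 100-level profile: evenly spaced from 1100 to 10 hPa.
levels = [1100.0 - i * (1090.0 / 99) for i in range(100)]
# Hypothetical curated menu of commonly analyzed levels.
menu_targets = [925, 850, 700, 500, 300, 250]
print(curate_levels(levels, menu_targets))
```

Keeping the curated targets in a short, separate list means the menu can be revised after each testbed without touching the underlying 100-level data.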

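The first recommendation reports that reducing NUCAPS latency to 30 min made the soundings far more useful for warning operations. A minimal sketch of a latency check against that target follows. It assumes latency is measured from the satellite observation time to the time the product becomes available in AWIPS. That definition, the function name `latency_report`, the tuple input format, and the example granules and timestamps are all hypothetical and are not part of the NUCAPS processing chain.

```python
from datetime import datetime, timedelta

# 30 min target from the HWT discussion; the observation-to-availability
# definition of latency is an assumption made for this sketch.
TARGET_LATENCY = timedelta(minutes=30)


def latency_report(granules, target=TARGET_LATENCY):
    """Return (granule_id, latency, meets_target) for each granule.

    granules: iterable of (granule_id, observed_at, available_at) tuples,
    with datetime timestamps (hypothetical input format).
    """
    report = []
    for granule_id, observed_at, available_at in granules:
        latency = available_at - observed_at
        report.append((granule_id, latency, latency <= target))
    return report


# Hypothetical granules: one delivered on time, one late.
obs = datetime(2019, 5, 14, 18, 0)
granules = [
    ("g1", obs, obs + timedelta(minutes=24)),
    ("g2", obs, obs + timedelta(minutes=75)),
]
for granule_id, latency, ok in latency_report(granules):
    print(granule_id, latency, "meets target" if ok else "too late")
# g1 0:24:00 meets target
# g2 1:15:00 too late
```

Reporting latency per granule, rather than as a single average, makes it easier to spot the occasional late delivery that matters most during a fast-evolving severe weather event.
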
5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Esmaili, R.B.; Smith, N.; Berndt, E.B.; Dostalek, J.F.; Kahn, B.H.; White, K.; Barnet, C.D.; Sjoberg, W.; Goldberg, M. Adapting Satellite Soundings for Operational Forecasting within the Hazardous Weather Testbed. Remote Sens. 2020, 12, 886. https://doi.org/10.3390/rs12050886
