Counting Mixed Breeding Aggregations of Animal Species Using Drones: Lessons from Waterbirds on Semi-Automation

Abstract

1. Introduction

2. Materials and Methods

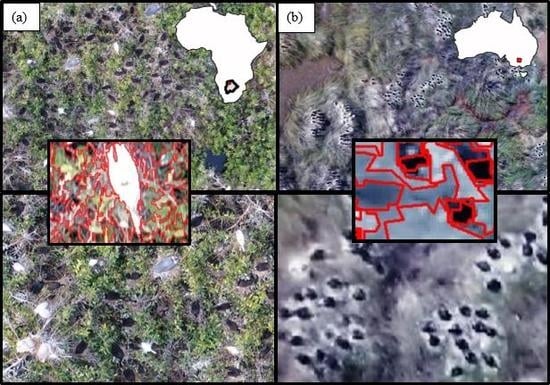

2.1. Study Areas

2.2. Image Collection and Processing

2.3. Semi-Automated Image Analysis

2.3.1. Training and Test Datasets

2.3.2. Image Object Segmentation and Predictor Variables

2.3.3. Machine Learning

2.3.4. Estimation of Target Populations

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References


| Colony | Species | Colour | Size (cm) | Dominant Vegetation |
|---|---|---|---|---|
| Kanana | African Openbill *Anastomus lamelligerus* | Black | 82 | Gomoti fig *Ficus verrucolosa* |
| | African Sacred Ibis *Threskiornis aethiopicus* | White | 77 | Papyrus *Cyperus papyrus* |
| | Egret sp. *Egretta* sp.¹ | White | 64–95 | |
| | Marabou Stork *Leptoptilos crumeniferus* | Grey | 152 | |
| | Pink-backed Pelican *Pelecanus rufescens* | Grey | 128 | |
| | Yellow-billed Stork *Mycteria ibis* | White | 97 | |
| Eulimbah | Australian White Ibis *Threskiornis molucca* | White | 75 | Lignum shrubs *Duma florulenta* |
| | Straw-necked Ibis *Threskiornis spinicollis* | Grey | 70 | Common reed *Phragmites australis* |

| Colony | Target | Final count, freeware | Final count, payware | Final count, manual | Difference, freeware (%) | Difference, payware (%) |
|---|---|---|---|---|---|---|
| Kanana | Bird¹ | 2128 | 1797 | | | |
| | Egret sp.² | 587 | 605 | 578 | 1.56 | 4.67 |
| | Marabou Stork | 156 | 102 | 137 | 13.87 | −25.55 |
| | African Openbill | 725 | 681 | 2986 | −4.45³ | −17.01⁴ |
| | Pink-backed Pelican | 154 | 71 | 59 | 161.02 | 20.34 |
| | Yellow-billed Stork | 336 | 354 | 380 | −11.58 | −6.84 |
| | Total targets | 4086 | 3610 | 4140 | −1.30 | −12.80 |
| Eulimbah | Bird¹ | N/A | 1155 | | | |
| | Egg | 108 | 287 | 80 | 35.00 | 258.75 |
| | Nest | 3458 | 3390 | 2787 | 24.08 | 21.64 |
| | Straw-necked Ibis on nest | 2271 | 2590 | 3267 | −30.49 | −20.72 |
| | Straw-necked Ibis off nest | 196 | 91 | 136 | 44.12 | −33.09 |
| | White Ibis on nest | 111 | 99 | 40 | 177.50 | 147.50 |
| | Total targets | 6144 | 7612 | 6310 | −2.63 | 20.63 |
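The difference columns are consistent with the signed difference of each semi-automated count from the manual count, expressed as a percentage of the manual count: (semi-automated − manual) / manual × 100. The sketch below uses a hypothetical helper, `percent_difference`, to reproduce the Eulimbah rows and totals from the table above; note that the payware total also includes the 1155 objects classified as "Bird", which has no manual count. The footnoted African Openbill values at Kanana are not reproduced by this simple ratio.

```python
def percent_difference(auto_count, manual_count):
    """Signed difference of a semi-automated count from the manual count, in percent."""
    if manual_count == 0:
        raise ValueError("manual count must be non-zero")
    return (auto_count - manual_count) / manual_count * 100.0


# Eulimbah final counts copied from the table: (freeware, payware, manual).
EULIMBAH = {
    "Egg": (108, 287, 80),
    "Nest": (3458, 3390, 2787),
    "Straw-necked Ibis on nest": (2271, 2590, 3267),
    "Straw-necked Ibis off nest": (196, 91, 136),
    "White Ibis on nest": (111, 99, 40),
}
# "Bird" was classified only by the payware workflow and has no manual count,
# but it still contributes to the payware total (7612).
BIRD_PAYWARE = 1155

free_total = sum(free for free, _, _ in EULIMBAH.values())
pay_total = sum(pay for _, pay, _ in EULIMBAH.values()) + BIRD_PAYWARE
manual_total = sum(manual for _, _, manual in EULIMBAH.values())

for target, (free, pay, manual) in EULIMBAH.items():
    print(f"{target}: freeware {percent_difference(free, manual):.2f}%, "
          f"payware {percent_difference(pay, manual):.2f}%")
print(f"Total targets: freeware {percent_difference(free_total, manual_total):.2f}%, "
      f"payware {percent_difference(pay_total, manual_total):.2f}%")
```

Because the manual count is the denominator, small manual counts (for example, 40 White Ibis on nest) turn modest absolute differences into very large percentages.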

| Colony and software | Accuracy measure | Initial | Secondary | Final |
|---|---|---|---|---|
| Kanana Freeware | Target versus background accuracy | 0.99 | 0.99 | 0.91 |
| | Between-target detection accuracy | 0.88 | 0.88 | 0.99 |
| Kanana Payware | Target versus background accuracy | 0.99 | 0.99 | 0.90 |
| | Between-target detection accuracy | 0.57 | 0.82 | 0.99 |
| Eulimbah Freeware | Target versus background accuracy | 0.98 | N/A¹ | 0.98 |
| | Between-target detection accuracy | 0.99 | N/A | 0.98 |
| Eulimbah Payware | Target versus background accuracy | 0.99 | 0.99 | 0.93 |
| | Between-target detection accuracy | 0.88 | 0.93 | 0.98 |

Kanana Freeware

| | Background | Bird | Egret sp. | Marabou Stork | African Openbill | Pink-backed Pelican | Yellow-billed Stork |
|---|---|---|---|---|---|---|---|
| Background | 3310 | 14 | 0 | 0 | 0 | 0 | 0 |
| Egret sp.ᵃ | 0 | 0 | 11 | 0 | 0 | 0 | 0 |
| Marabou Stork | 0 | 6 | 0 | 5 | 0 | 0 | 0 |
| African Openbill | 14 | 11 | 0 | 0 | 7 | 0 | 0 |
| Pink-backed Pelican | 0 | 0 | 0 | 0 | 0 | 7 | 0 |
| Yellow-billed Stork | 0 | 1 | 2 | 0 | 0 | 1 | 10 |

| | Background | Bird | Egret sp. | Marabou Stork | African Openbill | Pink-backed Pelican | Yellow-billed Stork |
|---|---|---|---|---|---|---|---|
| Background | 2 | 10 | 1 | 0 | 0 | 0 | 0 |
| Egret sp.ᵃ | 0 | 0 | 50 | 0 | 0 | 0 | 2 |
| Marabou Stork | 0 | 4 | 0 | 49 | 0 | 0 | 0 |
| African Openbill | 3 | 12 | 0 | 0 | 126 | 0 | 0 |
| Pink-backed Pelican | 1 | 0 | 1 | 0 | 0 | 28 | 0 |
| Yellow-billed Stork | 0 | 0 | 2 | 0 | 0 | 0 | 66 |

Kanana Payware

| | Background | Bird | Egret sp. | Marabou Stork | African Openbill | Pink-backed Pelican | Yellow-billed Stork |
|---|---|---|---|---|---|---|---|
| Background | 3310 | 14 | 0 | 0 | 0 | 0 | 0 |
| Egret sp.ᵃ | 0 | 0 | 11 | 0 | 0 | 0 | 0 |
| Marabou Stork | 0 | 6 | 0 | 5 | 0 | 0 | 0 |
| African Openbill | 14 | 11 | 0 | 0 | 7 | 0 | 0 |
| Pink-backed Pelican | 0 | 0 | 0 | 0 | 0 | 7 | 0 |
| Yellow-billed Stork | 0 | 1 | 2 | 0 | 0 | 1 | 10 |

| | Background | Bird | Egret sp. | Marabou Stork | African Openbill | Pink-backed Pelican | Yellow-billed Stork |
|---|---|---|---|---|---|---|---|
| Background | 2 | 10 | 1 | 0 | 0 | 0 | 0 |
| Egret sp.ᵃ | 0 | 0 | 50 | 0 | 0 | 0 | 2 |
| Marabou Stork | 0 | 4 | 0 | 49 | 0 | 0 | 0 |
| African Openbill | 3 | 12 | 0 | 0 | 126 | 0 | 0 |
| Pink-backed Pelican | 1 | 0 | 1 | 0 | 0 | 28 | 0 |
| Yellow-billed Stork | 0 | 0 | 2 | 0 | 0 | 0 | 66 |

Eulimbah Freeware

| | Background | Bird¹ | Egg | Nest | Straw-necked Ibis on nest | Straw-necked Ibis off nest | White Ibis on nest |
|---|---|---|---|---|---|---|---|
| Background | 366 | N/A | 0 | 1 | 0 | 0 | 0 |
| Egg | 2 | N/A | 19 | 3 | 0 | 0 | 0 |
| Nest | 2 | N/A | 0 | 194 | 1 | 0 | 0 |
| Straw-necked Ibis on nest | 4 | N/A | 0 | 3 | 162 | 0 | 0 |
| Straw-necked Ibis off nest | 0 | N/A | 0 | 0 | 1 | 21 | 0 |
| White Ibis on nest | 0 | N/A | 0 | 1 | 0 | 0 | 19 |

Eulimbah Payware

| | Background | Bird | Egg | Nest | Straw-necked Ibis on nest | Straw-necked Ibis off nest | White Ibis on nest |
|---|---|---|---|---|---|---|---|
| Background | 1243 | 0 | 0 | 2 | 2 | 0 | 0 |
| Egg | 0 | 1 | 3 | 1 | 0 | 0 | 0 |
| Nest | 4 | 1 | 0 | 31 | 0 | 0 | 1 |
| Straw-necked Ibis on nest | 1 | 1 | 0 | 2 | 28 | 1 | 0 |
| Straw-necked Ibis off nest | 1 | 1 | 0 | 0 | 0 | 2 | 0 |
| White Ibis on nest | 0 | 0 | 0 | 0 | 0 | 0 | 4 |

| | Background | Bird | Egg | Nest | Straw-necked Ibis on nest | Straw-necked Ibis off nest | White Ibis on nest |
|---|---|---|---|---|---|---|---|
| Background | 1 | 2 | 0 | 2 | 0 | 0 | 0 |
| Egg | 1 | 0 | 22 | 1 | 0 | 0 | 0 |
| Nest | 0 | 0 | 0 | 31 | 0 | 0 | 0 |
| Straw-necked Ibis on nest | 3 | 2 | 0 | 0 | 111 | 0 | 0 |
| Straw-necked Ibis off nest | 0 | 3 | 0 | 0 | 0 | 19 | 0 |
| White Ibis on nest | 0 | 0 | 0 | 1 | 0 | 0 | 19 |
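Accuracy summaries such as those in the accuracy table can be derived from confusion matrices like the ones above, but this section does not state exactly how the target-versus-background and between-target values were computed. The sketch below therefore uses assumed, illustrative definitions on the Eulimbah Freeware matrix: rows are taken as reference classes and columns as predictions, the all-N/A "Bird" column is dropped, "target versus background" collapses every non-background class into one target class, and "between-target" ignores the background row and column. The function names are hypothetical.

```python
"""Illustrative accuracy summaries for the Eulimbah Freeware confusion matrix.

Assumptions (not stated in the source): rows are reference classes, columns
are predicted classes, and the all-N/A "Bird" column can be dropped.
"""

CLASSES = [
    "Background",
    "Egg",
    "Nest",
    "Straw-necked Ibis on nest",
    "Straw-necked Ibis off nest",
    "White Ibis on nest",
]

# Counts copied from the Eulimbah Freeware matrix, with the "Bird" column removed.
MATRIX = [
    [366, 0, 1, 0, 0, 0],
    [2, 19, 3, 0, 0, 0],
    [2, 0, 194, 1, 0, 0],
    [4, 0, 3, 162, 0, 0],
    [0, 0, 0, 1, 21, 0],
    [0, 0, 1, 0, 0, 19],
]


def overall_accuracy(matrix):
    """Share of all objects that fall on the diagonal (correctly classified)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def target_vs_background(matrix, background=0):
    """Collapse to a 2x2 background/target matrix and return its accuracy."""
    binary = [[0, 0], [0, 0]]
    for i, row in enumerate(matrix):
        for j, count in enumerate(row):
            binary[int(i != background)][int(j != background)] += count
    return overall_accuracy(binary)


def between_target(matrix, background=0):
    """Accuracy among target classes only, ignoring the background row and column."""
    keep = [k for k in range(len(matrix)) if k != background]
    sub = [[matrix[i][j] for j in keep] for i in keep]
    return overall_accuracy(sub)


if __name__ == "__main__":
    print(f"overall accuracy:        {overall_accuracy(MATRIX):.3f}")
    print(f"target vs background:    {target_vs_background(MATRIX):.3f}")
    print(f"between-target accuracy: {between_target(MATRIX):.3f}")
    # Row-wise share on the diagonal (recall, if rows are reference classes).
    for k, (name, row) in enumerate(zip(CLASSES, MATRIX)):
        print(f"  {name}: {row[k] / sum(row):.3f}")
```

Under these assumptions, 781 of the 799 objects in this matrix lie on the diagonal (overall accuracy of about 0.98). The other two summaries are illustrative and need not match the accuracy table exactly.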

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Francis, R.J.; Lyons, M.B.; Kingsford, R.T.; Brandis, K.J. Counting Mixed Breeding Aggregations of Animal Species Using Drones: Lessons from Waterbirds on Semi-Automation. Remote Sens. 2020, 12, 1185. https://doi.org/10.3390/rs12071185