1. Introduction

Monitoring the RGMs and detecting any changes to the Earth’s surface is important in updating the spatial reference system [

1]. This is especially of high interest in areas that are affected by activities such as salt mining, gas, and oil extraction, as well as fossil fuel storage. In this research, we focus on the modeling RGMs. The area under study is located in the region of Lower Saxony in Germany.

Ground movement detection on a large scale depends on the measurement approach, as well as the modeling technique. One necessary step is to mathematically model the measurements as a surface, therefore allowing the user to predict the changes to the Earth’s surface at any position where measurements could be unavailable. The modeling and spatial representation of such data come with different challenges. These types of data are usually contaminated with noise and outliers and the main challenges include the irregular distribution of data and the high variability of the precision of the measurements.

From the aspect of the measurement approach, there are different methods that can be applied to track the changes through repeated point-wise measurements. Conventional surveying approaches, such as levelling, the Global Navigation Satellite System (GNSS), or total station will result in a limited number of observations. Although the resulted observations are considered precise, they have low spatial density and are usually restricted to known geodetic stations. Additionally, these approaches are typically expensive, time-consuming, and usually labor-intensive [

2,

3,

4]. The disadvantage of sparse measurements can be mitigated by using satellite-based remote-sensing methods that can provide observations on a larger scale. The advanced methods of Interferometric Synthetic Aperture Radar (InSAR), particularly the Persistent Scatterer Interferometry (PSI), are able to detect and quantify the ground deformation in the range of millimeters per year [mm/year] in many application fields [

5,

6,

7,

8]. In the current study, PSI observations are used to model RGM specifically in the area of Hanover in Lower Saxony, Germany.

The PSI observations are prone to outliers. There are observations that might deviate from the global or local distribution of the whole data set. Hawkins [

9] defines an outlier as “an observation that deviates so much from other observations as to arouse suspicions that it was generated by a different mechanism”. The PSI observations show high spatial variability due to different movement behavior of neighboring scatterers. These variations might represent only an anomaly related to individual object movements, that does not necessarily follow the global ground movement in the region. To accurately model the true RGM, detecting and removing these outliers is an important step. There are a variety of methods, which have been developed to detect outliers specifically in the field of statistics [

9,

10]. According to Papadimitriou et al. [

11], they are mainly categorized into distance-based, density-based, clustering-based, depth-based and distribution-based methods. Distance-based methods were first introduced by Knorr et al. [

12], and applied by, for example, Shen et al. [

13], which defines an outlier as a point from which a certain portion of the neighbouring data has a distance more than a specific threshold. However, such a method can lead to problems when it comes to non-homogeneous distributed data [

14]. Breunig et al. [

14] proposed a density-based approach, which is based on the Local Outlier Factor (LOF). This factor could be derived by analyzing the data distribution density. In clustering-based approaches, those data which are not assigned to any cluster are labeled as outliers [

15]. Clustering for detecting outliers have been used in different applications, which deal with point clouds, such as laser scanning data and sonar data [

16,

17]. Distribution-based approaches focus on a standard distribution model (e.g., Gaussian) and identify outliers as those points that deviate from this distribution [

9,

10]. Finally, the depth-based approaches concentrate on computational geometry, and the data are divided into different layers, wherein shallow layers are more probable to contain outliers [

18,

19].

In detecting anomalies for spatial data, one should consider an important characteristic of these data types, which is spatial correlation. Tobler [

20] expresses this as: “Everything is related to everything else, but near things are more related than distant things”. Algorithms designed to deal with spatial data can be categorized into graphic approaches [

21,

22] and quantitative tests [

23,

24]. The methods of Scatterplot [

23] and Moran Scatterplot [

24] can be mentioned, which work with quantitative tests in detecting anomalies. In the direction of quantitative testing, Liu et al. [

25] used a local adoptive statistical analysis to detect outliers, which was tested on SAR data. Lu et al. [

26] proposed several statistical outlier detection algorithms, and similarly, Chen et al. [

27] introduced a robust median algorithm to detect spatial outliers.

In this paper, a data adaptive algorithm is proposed to process the data and identify the outliers. The anomalies are detected based on the deviation of the observations to a fitted model in a hierarchical approach. The algorithm considers local behavior of the data while globally testing the deviations of the observations to the model.

The other aspect in detecting RGMs is the modeling technique, which is mainly the problem of finding the underlying function in the data set as a continuous surface that best describes the behavior of the data. This makes it possible to propagate information from the positions where information is available to new positions where no data exists. Despite a flurry of activity in this area, scattered data interpolation remains a difficult and computationally expensive problem. Mostly, the developed approaches do not allow different data distributions or remain computationally inefficient [

28,

29]. In modeling such data, one needs to consider the fact that it contains both deterministic and stochastic parts [

30]. Deterministic approaches mainly focus on the trend in the data, for example, traditional polynomial surfaces and free-form surfaces, such as Bézier, B-splines, and non-uniform rational B-splines [

31] (see Bureick et al. [

32]). The stochastic methods model the stochastic part of the data based on the spatial correlation among the data sets. The Least Squares Collocation [

33], Gaussian Processes [

18] and Kriging [

34] are examples of such stochastic approaches.

In practice, for large data sets with high variability and large spatial gaps, it is challenging to choose a suitable approach. Mohammadivojdan et al. [

35] modeled such a dataset by using both ordinary Kriging and Multilevel B-splines Approximation (MBA) methods. Ordinary Kriging is a powerful stochastic method that uses variograms to model the correlation among data points; however, this approach has several drawbacks. Firstly, as a stochastic method, this method best models the stochastic part of the data; therefore, in order to have a reliable estimation, the trend in the data should be modeled separately. Additionally, this method is computationally expensive, especially for large data sets. In this study, an approach based on HB-splines was adopted to model the PSI observations. Forsey and Bartels were the first to introduce a method for HB-splines refinement to interpolate a grid of data [

36,

37,

38]. Lee et al. [

29] proposed a similar approach that is also able to approximate scattered data. The suggested MBA method generates a series of bicubic B-splines functions based on a coarse to fine control lattice hierarchy. The approximation at each level is improved by a correction term from the next level, and the sum of all the levels forms the final approximation. Compared to Ordinary Kriging, the deterministic method of MBA does not require a separate model for estimating the trend, takes less computational time, and shows robust behavior against data gaps. At the same time, the disadvantage of the MBA approach lies in model selection and choosing an optimal control lattice hierarchy [

35].

It is of high importance to also model the uncertainty of the approximated RGM. Therefore, based on the modeled movement, a user should be able to predict deformation at any position and have a measure of quality for the prediction. The uncertainty in the model can be due to different reasons, namely noise and data gaps. The approximated model based on MBA in each level is derived based on least squares minimization. Therefore, based on the precision of the observations, an uncertainty measure could be derived mathematically for the approximated model. However, the final model in MBA consists of more than one level. Because each level is derived from the previous one, a highly mathematical correlation exists between different levels. This makes the error propagation and the consideration in an appropriate stochastic model a challenging process. Consequently, mathematically modeling the uncertainty for the Multilevel method is not optimal, and for large data sets the process is not computationally practical.

In order to solve this issue, a non-parametric approach based on the bootstrapping method is adopted to approximate the sampling distribution of the estimated RGM. Bootstrapping is a computer-intensive method, which can be used to obtain the sampling distribution of an estimate. It was first introduced by Efron et al. [

39,

40]. The method is based on intensive resampling from a limited existing sample and generating new samples. Using the information from all samples, one can derive the bootstrap sampling distribution. The standard error or confidence interval of an estimate can be derived based on the bootstrap sampling distribution. However, in terms of PSI observations and approximation surfaces, this non-parametric approach provides the uncertainty of the approximated surface. The uncertainty will mainly represent the quality of the model, reflecting the general noise and data gaps.

The rest of the paper is organized as follows:

Section 2 gives an overview of the data set that is used in this paper.

Section 3 provides a detailed explanation of the implemented methodologies, including the basics of the surface-based modeling method and an overview of the implemented bootstrapping approach. In

Section 4, the computational results of applying the above-mentioned methodologies on the data set of interest are analysed and evaluated. The main outputs of the research, along with the strengths and limitations of the methods, are discussed in

Section 5.

Section 6 concludes and provides a discussion on the characteristics of the developed method, and possible opportunities are presented.

2. Data

Deformation of the Earth’s surface can be caused by various geological processes or anthropogenic measures. Due to its high temporal and spatial resolution, radar interferometry provides valuable data for detecting areas influenced by ground movements. The analyzed motions in the area of Hanover are based on SAR data from the Sentinel-1 mission, which is part of the European Space Agency (ESA) Copernicus Earth observation program [

41]. The identical radar satellites Sentinel-1A and Sentinel-1B were respectively launched in April 2014 and April 2016, and offer a revisiting time of 6 days on a shared orbit plane. The radar satellites operating in C-band have a spatial resolution of

in Interferometric Swath mode and scan the Earth’s surface over a width of approximately 250 km. The data set for this paper provided by the BGR was generated by using PSI-WAP (Wide-Area-Product) processing [

42], and contains 319226 PSI velocities. To mitigate possible large-scale errors, such as residual orbital or atmospheric errors, the PSI-WAP analysis includes independent velocities from GNSS reference stations for calibration. In the color-coded points in

Figure 1, the area with ground movement caused by salt mining in the west of Hanover near Wunstorf is clearly visible. Besides this, the clustering characteristic of PSI information in urban areas and the data gaps in rural regions become apparent. The presented data material originates from an ascending satellite orbit, which covers an acquisition period from 13.10.2014 to 01.04.2018. Overall, the PSI processing contains 138 SAR images, from which the acquisition of 20.09.2016 was selected as a master scene. The derived velocities were determined in the line of sight (LOS) of the satellite and projected vertically by assuming no horizontal displacement. Horizontal displacements, especially in local ground motion areas, may lead to systematic errors of derived height changes if neglected. To separate the LOS-movement into vertical and horizontal components, a combined solution of data from ascending and descending radar satellites is necessary [

43], which is out of the scope of the current paper.

The provided PSI information is corrupted by a high level of measurement errors. The measurement errors can be divided into two parts: noise and outliers. The noise in the data expresses the precision of the measured samples. By considering prior knowledge about the performance of the measurement system, this can be considered in the surface modeling process.

The second type of errors, outliers, are those observations that do not follow the general behavior of the neighboring points. The existing outliers can be caused by various influencing factors, such as insufficient state modeling of the unknowns in PSI processing. In addition, very local displacements (e.g., subsidence of new buildings) can also lead to anomalies, which in practice do not represent the ground motions in wider areas. Before any further processing and interpretation of the PSI velocities, the outliers have to be eliminated from the data. Those outliers which are due to very local displacements in a short period of time can usually be detected via a temporal outlier detection process [

1] (

Section 2.1). The rest of the spatial anomalies are to be detected in spatial outlier detection, which is the main focus in this research (

Section 3.1).

2.1. Temporal Outlier Detection

The existing outliers in the time series of the scatters can be caused by local displacements in a short period of time. Hence, the time series of the height changes of the persistent scatterer were analyzed first, since they show varying quality levels. In order to identify time series with high measurement noise, a temporal outlier detection approach with subsequent elimination is adopted [

1]. For this purpose, the time series of the PSI points are de-trended (by using a linear regression model) and the remaining residuals serve as a measure for the variability. If the standard deviation of the de-trended time series exceeds the defined experimental threshold of 6 mm, the scatterer is considered as an outlier and excluded from further processing.

Even after temporal filtering, the PSI velocities still show high spatial variability due to different movement behaviors of neighboring scatterers. Therefore, to remove such high-frequency outliers from the data set, a spatial outlier filtering has to be performed. Details about the adapted method is explained in

Section 3.1.

5. Discussion

The proposed spatial outlier detection algorithm shows compatible results to the study by Brockmeyer et al. [

1]. Since the ground truth behind the distribution of the outliers in the real data is not known, it is not possible to evaluate these results more comprehensively. The algorithm not only considers both local and global behavior of the data, but the iterative process of the algorithm also helps to detect the anomalies more precisely. By fitting a surface to the data in each iteration, the nature of the data which represents a surface is taken into account. This makes the approach especially suitable for these kinds of applications. The performance of the algorithm will be improved by having some prior knowledge of the data set. For example, information regarding the minimum noise level and rough expected RGM would help to decide on the complexity of the models for the first and last iterations.

The MBA approach showed computational efficiency and numerical stability in dealing with the real data set. Therefore, it is possible to choose a very complex model with a high number of control points and many levels; however, the approximation of the data based on the MBA approach is very sensitive to complexity of the chosen model. To avoid over- or under-fitting the data, an appropriate control lattice hierarchy could be selected based on the type of dataset and the prior information about the general ground behavior of the region.

The calculated confidence bounds describe the quality of the model very well. The effect of data gaps and noise is directly reflected on the range of the CIs. In this research, the CIs are determined based on the quantiles; however, an improved interval or the ’bias-corrected-

’ could also be calculated [

47].

The range of the CIs is also influenced by the complexity of the model. Increasing the complexity increases the uncertainty range of the approximation. This is particularly important when there is a risk of over- or under-fitting the data or, in other words, the model selection problem. The information from the CIs and standard deviations of the bootstraps can be helpful in finding the most appropriate model for a given data set. This could lead to a solution to solve the model selection problem in similar situations. In the present work, the initial model selected is based on the prior information of the global movement in the region. The model selection concept is not the focus of the current paper.

In practice, the output of this process will help to detect areas affected by movement. By considering the additional information on the uncertainty of the model (CIs), the user can optimally pinpoint areas where new measurements by a more accurate geodetic technique are necessary to update the spatial reference system. Having a reliable mathematical model not only helps to better understand the general behavior of the data, but also provides the opportunity to keep track of any changes of the surface more efficiently, for example by statistical testing. Additionally, the outputs of the outlier detection algorithm can be used to detect the movements of individual objects. These anomalies might represent very local changes that do not affect modeling RGM. However, this information can be of high interest in monitoring infrastructure or other monitoring applications.

6. Conclusions

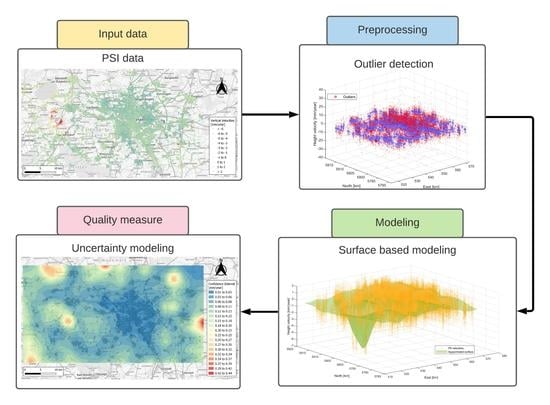

In this paper, processing and modeling PSI observations related to the area of Hanover, Germany were discussed. The goal is to precisely model RGM as a continuous surface that enables the user to predict movements at positions where no measurements are available. The contribution of the current paper consists of three main parts: pre-processing of data, modeling and CIs for the model.

For pre-processing the data, a data-adaptive outlier detection algorithm is proposed. The process considers both global and local behavior of the data. The algorithm is an iterative process in which a model is fitted to the data and in each iteration, the largest deviation to the model is globally detected. The proposed algorithm is tested in a MC simulation for different reference data sets. Results show promising performance in detecting outliers with an accuracy of 0.95 and -score of at least 0.95 for data sets containing up to 10% of outliers. Moreover, the algorithm detects around 5% of outliers in the PSI observations. The proposed methodology is not adopted for outliers of more than 10%, which is due to the criteria for recognizing outliers within the algorithm. This aspect could be improved by optimizing criterion selection, which was not within the scope of the current paper. However, in the next steps, we plan to develop an approach to find the optimal criteria based on individual data sets. For the real data set, 5% of outliers is detected.

The modeled RGM, based on MBA, shows mostly small movements in the area. A large movement area is also detected with up to –6 [mm/year]. The method helps to overcome the challenges in modeling PSI observations, which are mainly the large number of observations and irregular distribution of points. The method is computationally efficient and can numerically handle data gaps. However, it is difficult to model the correlation between different levels, and especially due to the large amount of data, parametrical modeling of uncertainty is not practical. Therefore, the uncertainty of the model is derived from the non-parametric method of bootstrapping. As a result, a CI is estimated for the approximated surface. The CI for the whole area has a range between 0.01–0.4 [mm/year].

The derived CIs reflect the sources of uncertainty in the model. An important source is caused by data gaps in the PSI observation. Additionally, high local variations or noise of the data affect the confidence bands. If the chosen model is not suitable for the data set, the range of the CIs will also increase, which in turn leads to a higher level of uncertainty in the model. Therefore, model selection is an essential step, which directly affects the confidence bands. In the future, we will investigate ways of using the information about the CIs to solve the model selection problem.

Overall, we provided a pipeline for modeling RGMs based on PSI observations including a series of steps. The steps have been designed to consider the nature of the data and the general movement they represent. Although the PSI data provide a large amount of information, the data are contaminated by high levels of noise and outliers besides the irregular distribution of the data, which is challenging to model. The main steps of preprocessing, modeling and quality assurance are done by temporal outlier detection, spatial outlier detection, MBA and bootstrapping methods, respectively.

The combination of a mathematical model of the RGM and the quality measure of the model (CIs) helps the user in identifying the critical locations for updating the spatial reference system. The output of this research could be of high interest for monitoring purposes. Having a mathematical model of the RGM and tracking any changes to the model in time will give the possibility of statistically testing any significant changes to the region in a systematic way. The detected outliers in the spatial outlier detection step may carry important information of very local changes to important infrastructures or buildings.