Combining Spectral and Texture Features of UAV Images for the Remote Estimation of Rice LAI throughout the Entire Growing Season

Abstract

1. Introduction

2. Materials and Methods

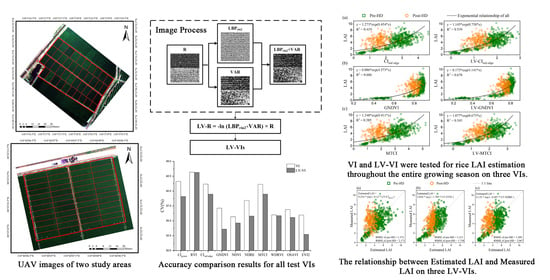

2.1. Study Area

2.2. LAI Sampling and Determination of Heading Date

2.3. Reflectance and Vegetation Indices from UAV Image

2.4. Texture Measurements
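
Section 3.2.1 names two texture measures, LBP (local binary pattern) and VAR. As a rough illustration of what these operators compute, the sketch below follows the basic definitions of Ojala et al. cited in the references, evaluated on a square 3×3 neighbourhood of a single pixel. The window size, the neighbour ordering, the toy image patch, and the function name `lbp_and_var` are assumptions made for this example; the settings actually used in the study are not stated here.

```python
from typing import List, Tuple

# Offsets of the 8 neighbours in a 3x3 window, clockwise from the top-left.
# The ordering is an assumption; it only changes which bit each neighbour sets.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_and_var(img: List[List[float]], r: int, c: int) -> Tuple[int, float]:
    """Return the basic 8-neighbour LBP code and the local variance (VAR) at (r, c)."""
    center = img[r][c]
    values = [img[r + dr][c + dc] for dr, dc in NEIGHBOURS]
    # LBP: threshold every neighbour against the centre pixel and pack the bits.
    code = sum(1 << p for p, g in enumerate(values) if g >= center)
    # VAR: variance of the neighbour grey values, a measure of local contrast.
    mean = sum(values) / len(values)
    var = sum((g - mean) ** 2 for g in values) / len(values)
    return code, var


# Hypothetical 3x3 grey-level patch (illustrative values only).
patch = [
    [5, 9, 1],
    [4, 6, 7],
    [2, 3, 8],
]
code, var = lbp_and_var(patch, 1, 1)
print(code, var)
```

LBP encodes the spatial pattern of brighter and darker neighbours but discards contrast, while VAR keeps contrast but discards the pattern, which is why the two are commonly used together.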

2.5. Algorithm Development for LAI Estimation

3. Results

3.1. Relationships of VI vs. Rice LAI throughout the Entire Growing Season

3.2. Rice LAI Estimation Combined with Texture Features

3.2.1. Variation of LBP and VAR in Pre-Heading Stages and Post-Heading Stages

3.2.2. Rice LAI Estimation Based on Remotely Sensed VI and LV-VI

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- Sesma, A.; Osbourn, A.E. The rice leaf blast pathogen undergoes developmental processes typical of root-infecting fungi. Nature 2004, 431, 582–586.

- Yuan, L. Development of hybrid rice to ensure food security. Rice Sci. 2014, 21, 1–2.

- Xu, X.Q.; Lu, J.S.; Zhang, N.; Yang, T.C.; He, J.Y.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Inversion of rice canopy chlorophyll content and leaf area index based on coupling of radiative transfer and Bayesian network models. ISPRS J. Photogramm. Remote Sens. 2019, 150, 185–196.

- Watson, D.J. Comparative physiological studies on the growth of field crops: I. Variation in net assimilation rate and leaf area between species and varieties, and within and between years. Ann. Bot. 1947, 11, 41–76.

- Yan, G.; Hu, R.; Luo, J.; Weiss, M.; Jiang, H.; Mu, X.; Xie, D.; Zhang, W. Review of indirect optical measurements of leaf area index: Recent advances, challenges, and perspectives. Agric. For. Meteorol. 2019, 265, 390–411.

- Weiss, M.; Jacob, F.; Duveiller, G. Remote sensing for agricultural applications: A meta-review. Remote Sens. Environ. 2020, 236, 111402.

- Goude, M.; Nilsson, U.; Holmström, E. Comparing direct and indirect leaf area measurements for Scots pine and Norway spruce plantations in Sweden. Eur. J. For. Res. 2019, 138, 1033–1047.

- Qiao, K.; Zhu, W.; Xie, Z.; Li, P. Estimating the seasonal dynamics of the leaf area index using piecewise LAI-VI relationships based on phenophases. Remote Sens. 2019, 11, 689.

- Liu, X.; Jin, J.; Herbert, S.J.; Zhang, Q.; Wang, G. Yield components, dry matter, LAI and LAD of soybeans in Northeast China. Field Crops Res. 2005, 93, 85–93.

- Zhou, X.; Zheng, H.B.; Xu, X.Q.; He, J.Y.; Ge, X.K.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. Remote Sens. 2017, 130, 246–255.

- Fang, H.; Baret, F.; Plummer, S.; Schaepman-Strub, G. An overview of global leaf area index (LAI): Methods, products, validation, and applications. Rev. Geophys. 2019, 57, 739–799.

- Asner, G.P.; Braswell, B.H.; Schimel, D.S.; Wessman, C.A. Ecological research needs from multiangle remote sensing data. Remote Sens. Environ. 1998, 63, 155–165.

- Zheng, G.; Moskal, L.M. Retrieving leaf area index (LAI) using remote sensing: Theories, methods and sensors. Sensors 2009, 9, 2719–2745.

- Boussetta, S.; Balsamo, G.; Beljaars, A.; Kral, T.; Jarlan, L. Impact of a satellite-derived leaf area index monthly climatology in a global numerical weather prediction model. Int. J. Remote Sens. 2012, 34, 3520–3542.

- Breda, N.J. Ground-based measurements of leaf area index: A review of methods, instruments and current controversies. J. Exp. Bot. 2003, 54, 2403–2417.

- Casanova, D.; Epema, G.F.; Goudriaan, J. Monitoring rice reflectance at field level for estimating biomass and LAI. Field Crops Res. 1998, 55, 83–92.

- Yao, X.; Wang, N.; Liu, Y.; Cheng, T.; Tian, Y.; Chen, Q.; Zhu, Y. Estimation of wheat LAI at middle to high levels using unmanned aerial vehicle narrowband multispectral imagery. Remote Sens. 2017, 9, 1304.

- Wang, L.; Chang, Q.; Yang, J.; Zhang, X.; Li, F. Estimation of paddy rice leaf area index using machine learning methods based on hyperspectral data from multi-year experiments. PLoS ONE 2018, 13, e0207624.

- Dong, T.; Liu, J.; Shang, J.; Qian, B.; Ma, B.; Kovacs, J.M.; Walters, D.; Jiao, X.; Geng, X.; Shi, Y. Assessment of red-edge vegetation indices for crop leaf area index estimation. Remote Sens. Environ. 2019, 222, 133–143.

- Darvishzadeh, R.; Skidmore, A.; Schlerf, M.; Atzberger, C. Inversion of a radiative transfer model for estimating vegetation LAI and chlorophyll in a heterogeneous grassland. Remote Sens. Environ. 2008, 112, 2592–2604.

- Richter, K.; Atzberger, C.; Vuolo, F.; D’Urso, G. Evaluation of Sentinel-2 spectral sampling for radiative transfer model based LAI estimation of wheat, sugar beet, and maize. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2011, 4, 458–464.

- Atzberger, C.; Richter, K. Spatially constrained inversion of radiative transfer models for improved LAI mapping from future Sentinel-2 imagery. Remote Sens. Environ. 2012, 120, 208–218.

- Campos-Taberner, M.; García-Haro, F.J.; Camps-Valls, G.; Grau-Muedra, G.; Nutini, F.; Crema, A.; Boschetti, M. Multitemporal and multiresolution leaf area index retrieval for operational local rice crop monitoring. Remote Sens. Environ. 2016, 187, 102–118.

- Li, D.; Chen, J.M.; Zhang, X.; Yan, Y.; Zhu, J.; Zheng, H.; Zhou, K.; Yao, X.; Tian, Y.; Zhu, Y.; et al. Improved estimation of leaf chlorophyll content of row crops from canopy reflectance spectra through minimizing canopy structural effects and optimizing off-noon observation time. Remote Sens. Environ. 2020, 248, 111985.

- Jay, S.; Maupas, F.; Bendoula, R.; Gorretta, N. Retrieving LAI, chlorophyll and nitrogen contents in sugar beet crops from multi-angular optical remote sensing: Comparison of vegetation indices and PROSAIL inversion for field phenotyping. Field Crops Res. 2017, 210, 33–46.

- Liu, J.; Pattey, E.; Jégo, G. Assessment of vegetation indices for regional crop green LAI estimation from Landsat images over multiple growing seasons. Remote Sens. Environ. 2012, 123, 347–358.

- Houborg, R.; Anderson, M.; Daughtry, C. Utility of an image-based canopy reflectance modeling tool for remote estimation of LAI and leaf chlorophyll content at the field scale. Remote Sens. Environ. 2009, 113, 259–274.

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the great plains with ERTS. In NASA SP-351 Third ERTS-1 Symposium; Fraden, S.C., Marcanti, E.P., Becker, M.A., Eds.; Scientific and Technical Information Office, National Aeronautics and Space Administration: Washington, DC, USA, 1974; pp. 309–317.

- Viña, A.; Gitelson, A.A.; Nguy-Robertson, A.L.; Peng, Y. Comparison of different vegetation indices for the remote assessment of green leaf area index of crops. Remote Sens. Environ. 2011, 115, 3468–3478.

- Yoshida, S. Fundamentals of Rice Crop Science; International Rice Research Institute: Los Baños, Philippines, 1981; pp. 1–61.

- Moldenhauer, K.; Counce, P.; Hardke, J. Rice Growth and Development. In Arkansas Rice Production Handbook; Hardke, J.T., Ed.; University of Arkansas Division of Agriculture Cooperative Extension Service: Little Rock, AR, USA, 2013; pp. 9–20.

- He, J.; Zhang, N.; Su, X.; Lu, J.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.; Tian, Y. Estimating leaf area index with a new vegetation index considering the influence of rice panicles. Remote Sens. 2019, 11, 1809.

- Sakamoto, T.; Shibayama, M.; Kimura, A.; Takada, E. Assessment of digital camera-derived vegetation indices in quantitative monitoring of seasonal rice growth. ISPRS J. Photogramm. Remote Sens. 2011, 66, 872–882.

- Wang, Y.; Zhang, K.; Tang, C.; Cao, Q.; Tian, Y.; Zhu, Y.; Cao, W.; Liu, X. Estimation of rice growth parameters based on linear mixed-effect model using multispectral images from fixed-wing unmanned aerial vehicles. Remote Sens. 2019, 11, 1371.

- Li, S.; Yuan, F.; Ata-Ul-Karim, S.T.; Zheng, H.; Cheng, T.; Liu, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cao, Q. Combining color indices and textures of UAV-based digital imagery for rice LAI estimation. Remote Sens. 2019, 11, 1793.

- Ma, Y.; Jiang, Q.; Wu, X.; Zhu, R.; Gong, Y.; Peng, Y.; Duan, B.; Fang, S. Monitoring hybrid rice phenology at initial heading stage based on low-altitude remote sensing data. Remote Sens. 2020, 13, 86.

- Duan, B.; Liu, Y.; Gong, Y.; Peng, Y.; Wu, X.; Zhu, R.; Fang, S. Remote estimation of rice LAI based on Fourier spectrum texture from UAV image. Plant Methods 2019, 15, 124.

- Zhang, C.; Xie, Z. Combining object-based texture measures with a neural network for vegetation mapping in the Everglades from hyperspectral imagery. Remote Sens. Environ. 2012, 124, 310–320.

- Lu, D. Aboveground biomass estimation using Landsat TM data in the Brazilian Amazon. Int. J. Remote Sens. 2007, 26, 2509–2525.

- Zhou, J.; Guo, R.; Sun, M.; Di, T.T.; Wang, S.; Zhai, J.; Zhao, Z. The effects of GLCM parameters on LAI estimation using texture values from Quickbird satellite imagery. Sci. Rep. 2017, 7.

- Ojala, T.; Pietikainen, M.; Harwood, D. A comparative study of texture measures with classification based on feature distributions. Pattern Recogn. 1996, 29, 51–59.

- Song, X.; Chen, Y.; Feng, Z.; Hu, G.; Zhang, T.; Wu, X. Collaborative representation based face classification exploiting block weighted LBP and analysis dictionary learning. Pattern Recogn. 2019, 88, 127–138.

- Liu, L.; Fieguth, P.; Guo, Y.; Wang, X.; Pietikäinen, M. Local binary features for texture classification: Taxonomy and experimental study. Pattern Recogn. 2017, 62, 135–160.

- Heikkilä, M.; Pietikäinen, M.; Schmid, C. Description of interest regions with local binary patterns. Pattern Recogn. 2009, 42, 425–436.

- Guo, Z.; Zhang, L.; Zhang, D. A completed modeling of local binary pattern operator for texture classification. IEEE Trans. Image Process. 2010, 19, 1657–1663.

- Ji, L.; Ren, Y.; Liu, G.; Pu, X. Training-based gradient LBP feature models for multiresolution texture classification. IEEE Trans. Cybern. 2018, 48, 2683–2696.

- Garg, M.; Dhiman, G. A novel content-based image retrieval approach for classification using GLCM features and texture fused LBP variants. Neural Comput. Appl. 2020.

- Ma, Z.; Ding, Y.; Li, B.; Yuan, X. Deep CNNs with robust LBP guiding pooling for face recognition. Sensors 2018, 18, 3876.

- Karanwal, S.; Diwakar, M. OD-LBP: Orthogonal difference-local binary pattern for face recognition. Digit. Signal Process. 2021, 110, 102948.

- Nanni, L.; Lumini, A.; Brahnam, S. Local binary patterns variants as texture descriptors for medical image analysis. Artif. Intell. Med. 2010, 49, 117–125.

- Yurdakul, O.C.; Subathra, M.S.P.; George, S.T. Detection of Parkinson’s disease from gait using neighborhood representation local binary patterns. Biomed. Signal Process. 2020, 62, 102070.

- Yazid, M.; Abdur Rahman, M. Variable step dynamic threshold local binary pattern for classification of atrial fibrillation. Artif. Intell. Med. 2020, 108, 101932.

- Vilbig, J.M.; Sagan, V.; Bodine, C. Archaeological surveying with airborne LiDAR and UAV photogrammetry: A comparative analysis at Cahokia Mounds. J. Archaeol. Sci. Rep. 2020, 33, 102509.

- Hamouchene, I.; Aouat, S.; Lacheheb, H. Texture Segmentation and Matching Using LBP Operator and GLCM Matrix. In Intelligent Systems for Science and Information. Studies in Computational Intelligence; Chen, L., Kapoor, S., Bhatia, R., Eds.; Springer: Cham, Switzerland, 2014; Volume 542.

- Peuna, A.; Thevenot, J.; Saarakkala, S.; Nieminen, M.T.; Lammentausta, E. Machine learning classification on texture analyzed T2 maps of osteoarthritic cartilage: Oulu knee osteoarthritis study. Osteoarthr. Cartil. 2021, 29, 859–869.

- Ojala, T.; Pietikäinen, M.; Mäenpää, T. Gray Scale and Rotation Invariant Texture Classification with Local Binary Patterns. In Proceedings of the 6th European Conference on Computer Vision, Dublin, Ireland, 26 June–1 July 2000; pp. 404–420.

- Guo, Z.; Zhang, L.; Zhang, D. Rotation invariant texture classification using LBP variance (LBPV) with global matching. Pattern Recogn. 2010, 43, 706–719.

- Wang, F.-m.; Huang, J.-f.; Tang, Y.-l.; Wang, X.-z. New vegetation index and its application in estimating leaf area index of rice. Rice Sci. 2007, 14, 195–203.

- Jiang, Q.; Fang, S.; Peng, Y.; Gong, Y.; Zhu, R.; Wu, X.; Ma, Y.; Duan, B.; Liu, J. UAV-based biomass estimation for rice-combining spectral, TIN-based structural and meteorological features. Remote Sens. 2019, 11, 890.

- Duan, B.; Fang, S.; Zhu, R.; Wu, X.; Wang, S.; Gong, Y.; Peng, Y. Remote estimation of rice yield with unmanned aerial vehicle (UAV) data and spectral mixture analysis. Front. Plant Sci. 2019, 10, 204.

- Zheng, H.; Cheng, T.; Zhou, M.; Li, D.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Improved estimation of rice aboveground biomass combining textural and spectral analysis of UAV imagery. Precis. Agric. 2018, 20, 611–629.

- Yuan, N.; Gong, Y.; Fang, S.; Liu, Y.; Duan, B.; Yang, K.; Wu, X.; Zhu, R. UAV remote sensing estimation of rice yield based on adaptive spectral endmembers and bilinear mixing model. Remote Sens. 2021, 13, 2190.

- Zheng, H.; Cheng, T.; Li, D.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Combining unmanned aerial vehicle (UAV)-based multispectral imagery and ground-based hyperspectral data for plant nitrogen concentration estimation in rice. Front. Plant Sci. 2018, 9, 936.

- Smith, G.M.; Milton, E.J. The use of the empirical line method to calibrate remotely sensed data to reflectance. Int. J. Remote Sens. 2010, 20, 2653–2662.

- Laliberte, A.S.; Goforth, M.A.; Steele, C.M.; Rango, A. Multispectral Remote Sensing from Unmanned Aircraft: Image Processing Workflows and Applications for Rangeland Environments. Remote Sens. 2011, 3, 2529–2551.

- Baugh, W.M.; Groeneveld, D.P. Empirical proof of the empirical line. Int. J. Remote Sens. 2007, 29, 665–672.

- Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282.

- Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring the Vernal Advancement and Retrogradation (Greenwave Effect) of Natural Vegetation; NASA/GSFC Type III Final Report; NASA/GSFC: Greenbelt, MD, USA, 1974.

- Fitzgerald, G.; Rodriguez, D.; O’Leary, G. Measuring and predicting canopy nitrogen nutrition in wheat using a spectral index—The canopy chlorophyll content index (CCCI). Field Crops Res. 2010, 116, 318–324.

- Dash, J.; Curran, P.J. The MERIS terrestrial chlorophyll index. Int. J. Remote Sens. 2004, 25, 5403–5413.

- Gitelson, A.A. Wide dynamic range vegetation index for remote quantification of biophysical characteristics of vegetation. J. Plant Physiol. 2004, 161, 165–173.

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

- Jiang, Z.; Huete, A.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845.

- Wang, A.; Wang, S.; Lucieer, A. Segmentation of multispectral high-resolution satellite imagery based on integrated feature distributions. Int. J. Remote Sens. 2010, 31, 1471–1483.

- Lucieer, A.; Stein, A.; Fisher, P. Multivariate texture-based segmentation of remotely sensed imagery for extraction of objects and their uncertainty. Int. J. Remote Sens. 2005, 26, 2917–2936.

- Kohavi, R. A Study of Cross-Validation and Bootstrap for Accuracy Estimation and Model Selection. In Proceedings of the International Joint Conference on Artificial Intelligence, Montreal, QC, Canada, 20–25 August 1995; pp. 1137–1145.

- Nguy-Robertson, A.; Gitelson, A.; Peng, Y.; Viña, A.; Arkebauer, T.; Rundquist, D. Green leaf area index estimation in maize and soybean: Combining vegetation indices to achieve maximal sensitivity. Agron. J. 2012, 104, 1336–1347.

- Kira, O.; Nguy-Robertson, A.L.; Arkebauer, T.J.; Linker, R.; Gitelson, A.A. Toward generic models for green LAI estimation in maize and soybean: Satellite observations. Remote Sens. 2017, 9, 318.

- Asrola, M.; Papilob, P.; Gunawan, F.E. Support Vector Machine with K-fold Validation to Improve the Industry’s Sustainability Performance Classification. Procedia Comput. Sci. 2021, 179, 854–862.

- Peng, Y.; Zhu, T.; Li, Y.; Dai, C.; Fang, S.; Gong, Y.; Wu, X.; Zhu, R.; Liu, K. Remote prediction of yield based on LAI estimation in oilseed rape under different planting methods and nitrogen fertilizer applications. Agric. For. Meteorol. 2019, 271, 116–125.

- Baret, F.; Guyot, G. Potentials and limits of vegetation indices for LAI and APAR assessment. Remote Sens. Environ. 1991, 35, 161–173.

- Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of winter-wheat above-ground biomass based on UAV ultrahigh ground-resolution image textures and vegetation indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244.

- Peng, Y.; Nguy-Robertson, A.; Arkebauer, T.; Gitelson, A. Assessment of canopy chlorophyll content retrieval in maize and soybean: Implications of hysteresis on the development of generic algorithms. Remote Sens. 2017, 9, 226.

- Reza, M.N.; Na, I.S.; Baek, S.W.; Lee, K.-H. Rice yield estimation based on K-means clustering with graph-cut segmentation using low-altitude UAV images. Biosyst. Eng. 2019, 177, 109–121.

- Zha, H.; Miao, Y.; Wang, T.; Li, Y.; Zhang, J.; Sun, W.; Feng, Z.; Kusnierek, K. Improving unmanned aerial vehicle remote sensing-based rice nitrogen nutrition index prediction with machine learning. Remote Sens. 2020, 12, 215.

- Bannari, A.; Morin, D.; Bonn, F.; Huete, A.R. A review of vegetation indices. Remote Sens. Rev. 1995, 13, 95–120.

| Growth Stage | Exp. 1 (Hainan): UAV Images | Exp. 1 (Hainan): LAI Sampling | Exp. 1 (Hainan): DAT | Exp. 2 (Ezhou): UAV Images | Exp. 2 (Ezhou): LAI Sampling | Exp. 2 (Ezhou): DAT |
|---|---|---|---|---|---|---|
| Tillering | - | - | - | 26 June 2019 | 26 June 2019 | 17 |
| | - | - | - | 2 July 2019 | 2 July 2019 | 23 |
| | 2 February 2018 | 4 February 2018 | 27 | 6 July 2019 | 6 July 2019 | 27 |
| | - | - | - | 14 July 2019 | 16 July 2019 | 37 |
| | - | - | - | 22 July 2019 | 21 July 2019 | 42 |
| | 26 February 2018 | 25 February 2018 | 48 | 27 July 2019 | 26 July 2019 | 47 |
| Jointing | - | - | - | 1 August 2019 | 1 August 2019 | 53 |
| | 11 March 2018 | 9 March 2018 | 60 | 6 August 2019 | 6 August 2019 | 58 |
| Booting and heading | - | - | - | 11 August 2019 | 11 August 2019 | 63 |
| | 18 March 2018 | 19 March 2018 | 70 | 16 August 2019 | 17 August 2019 | 69 |
| Ripening | - | - | - | 22 August 2019 | 21 August 2019 | 73 |
| | - | - | - | 29 August 2019 | 26 August 2019 | 78 |
| | 1 April 2018 | 31 March 2018 | 82 | 3 September 2019 | 2 September 2019 | 85 |
| | 15 April 2018 | 17 April 2018 | 99 | - | - | - |

| Center Wavelength (nm) | Band Width (nm) | Center Wavelength (nm) | Band Width (nm) |
|---|---|---|---|
| 490 | 10 | 700 | 10 |
| 520 | 10 | 720 | 10 |
| 550 | 10 | 800 | 10 |
| 570 | 10 | 850 | 10 |
| 670 | 10 | 900 | 20 |
| 680 | 10 | 950 | 40 |

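| Category | VI | Formula | Reference |
|---|---|---|---|
| Ratio Indices | Green Chlorophyll Index (CIgreen) | $\rho_{NIR}/\rho_{green} - 1$ | [67] |
| | Ratio Vegetation Index (RVI) | $\rho_{NIR}/\rho_{red}$ | [28] |
| | Red-edge Chlorophyll Index (CIred edge) | $\rho_{NIR}/\rho_{red\,edge} - 1$ | [67] |
| Normalized Indices | Green Normalized Difference Vegetation Index (GNDVI) | $(\rho_{NIR} - \rho_{green})/(\rho_{NIR} + \rho_{green})$ | [68] |
| | Normalized Difference Vegetation Index (NDVI) | $(\rho_{NIR} - \rho_{red})/(\rho_{NIR} + \rho_{red})$ | [69] |
| | Normalized Difference Red-edge Vegetation Index (NDRE) | $(\rho_{NIR} - \rho_{red\,edge})/(\rho_{NIR} + \rho_{red\,edge})$ | [70] |
| Modified Indices | MERIS Terrestrial Chlorophyll Index (MTCI) | $(\rho_{NIR} - \rho_{red\,edge})/(\rho_{red\,edge} - \rho_{red})$ | [71] |
| | Wide Dynamic Range Vegetation Index (WDRVI) | $(\alpha\rho_{NIR} - \rho_{red})/(\alpha\rho_{NIR} + \rho_{red})$ | [72] |
| | Optimized Soil-Adjusted Vegetation Index (OSAVI) | $(1 + 0.16)(\rho_{NIR} - \rho_{red})/(\rho_{NIR} + \rho_{red} + 0.16)$ | [73] |
| | Two-band Enhanced Vegetation Index (EVI2) | $2.5(\rho_{NIR} - \rho_{red})/(\rho_{NIR} + 2.4\rho_{red} + 1)$ | [74] |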
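
As a minimal sketch, the ten indices named in the table above can be computed from band reflectances using their standard definitions from the cited literature. The function name `vegetation_indices`, the sample reflectance values, and the default WDRVI weighting coefficient `alpha = 0.2` are assumptions for illustration; which of the sensor's channels serve as the green, red, red-edge, and NIR bands is also not stated here and is left to the caller.

```python
from typing import Dict


def vegetation_indices(nir: float, red: float, green: float, red_edge: float,
                       alpha: float = 0.2) -> Dict[str, float]:
    """Compute ten common vegetation indices from surface reflectances in [0, 1].

    alpha is the WDRVI weighting coefficient; 0.2 is an assumed placeholder,
    not a value taken from the source document.
    """
    return {
        # Ratio indices
        "CIgreen": nir / green - 1.0,
        "RVI": nir / red,
        "CIred edge": nir / red_edge - 1.0,
        # Normalized indices
        "GNDVI": (nir - green) / (nir + green),
        "NDVI": (nir - red) / (nir + red),
        "NDRE": (nir - red_edge) / (nir + red_edge),
        # Modified indices
        "MTCI": (nir - red_edge) / (red_edge - red),
        "WDRVI": (alpha * nir - red) / (alpha * nir + red),
        "OSAVI": (1 + 0.16) * (nir - red) / (nir + red + 0.16),
        "EVI2": 2.5 * (nir - red) / (nir + 2.4 * red + 1.0),
    }


# Hypothetical reflectances for a dense green canopy (illustrative only).
vi = vegetation_indices(nir=0.45, red=0.05, green=0.09, red_edge=0.25)
for name, value in vi.items():
    print(f"{name:>10s}: {value:.3f}")
```

The same expressions apply unchanged to per-pixel arrays if the scalar inputs are replaced by array types that support elementwise arithmetic.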

| VI | MTCI | CIred edge | CIgreen | RVI | NDRE | OSAVI | GNDVI | NDVI | WDRVI | EVI2 |
|---|---|---|---|---|---|---|---|---|---|---|
| R² | 0.385 | 0.419 | 0.426 | 0.43 | 0.525 | 0.58 | 0.606 | 0.618 | 0.645 | 0.704 |

| Index | VI: R² | VI: RMSE | LV-VI: R² | LV-VI: RMSE |
|---|---|---|---|---|
| CIgreen | 0.400 | 1.738 | 0.552 | 1.633 |
| RVI | 0.368 | 1.804 | 0.422 | 1.802 |
| CIred edge | 0.394 | 1.720 | 0.527 | 1.648 |
| GNDVI | 0.592 | 1.551 | 0.644 | 1.403 |
| NDVI | 0.574 | 1.490 | 0.633 | 1.446 |
| NDRE | 0.485 | 1.605 | 0.617 | 1.495 |
| MTCI | 0.373 | 1.719 | 0.539 | 1.649 |
| WDRVI | 0.608 | 1.504 | 0.643 | 1.498 |
| OSAVI | 0.553 | 1.541 | 0.631 | 1.485 |
| EVI2 | 0.675 | 1.503 | 0.719 | 1.367 |
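
To make the comparison in the table above easier to read, the short sketch below computes, for each index, the absolute gain in R² and the relative reduction in RMSE when moving from the VI column to the LV-VI column. The numbers are copied from the table; the helper name `improvement` and the choice of summary statistics are assumptions made for this illustration, not part of the original analysis.

```python
# ((R2, RMSE) for VI, (R2, RMSE) for LV-VI), copied from the table above.
results = {
    "CIgreen":    ((0.400, 1.738), (0.552, 1.633)),
    "RVI":        ((0.368, 1.804), (0.422, 1.802)),
    "CIred edge": ((0.394, 1.720), (0.527, 1.648)),
    "GNDVI":      ((0.592, 1.551), (0.644, 1.403)),
    "NDVI":       ((0.574, 1.490), (0.633, 1.446)),
    "NDRE":       ((0.485, 1.605), (0.617, 1.495)),
    "MTCI":       ((0.373, 1.719), (0.539, 1.649)),
    "WDRVI":      ((0.608, 1.504), (0.643, 1.498)),
    "OSAVI":      ((0.553, 1.541), (0.631, 1.485)),
    "EVI2":       ((0.675, 1.503), (0.719, 1.367)),
}


def improvement(vi_stats, lv_stats):
    """Return (absolute R2 gain, relative RMSE reduction in percent)."""
    (r2_vi, rmse_vi), (r2_lv, rmse_lv) = vi_stats, lv_stats
    return r2_lv - r2_vi, 100.0 * (rmse_vi - rmse_lv) / rmse_vi


gains = {name: improvement(*stats) for name, stats in results.items()}

# Index with the highest LV-VI R2, and index that gains the most R2.
best_model = max(results, key=lambda n: results[n][1][0])
largest_gain = max(gains, key=lambda n: gains[n][0])

for name, (d_r2, d_rmse) in gains.items():
    print(f"{name:>10s}: dR2 = {d_r2:+.3f}, RMSE reduction = {d_rmse:4.1f}%")
print("highest LV-VI R2:", best_model)
print("largest R2 gain:", largest_gain)
```

Every index in the table shows a higher R² and a lower RMSE in the LV-VI column, although the size of the change varies considerably between indices.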

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, K.; Gong, Y.; Fang, S.; Duan, B.; Yuan, N.; Peng, Y.; Wu, X.; Zhu, R. Combining Spectral and Texture Features of UAV Images for the Remote Estimation of Rice LAI throughout the Entire Growing Season. Remote Sens. 2021, 13, 3001. https://doi.org/10.3390/rs13153001
