1. Introduction

Coastal zones are the shores of seas or oceans. Today, nearly half of the world’s population lives in coastal regions where multiple activities are developed [1]. Over the last century, coastal zones throughout the world have undergone major changes related to a significant influx of the population. Coveted, densely populated, and exploited by human societies, coastal zones are therefore subject to significant pressures that generate territorial dynamics and changes in land cover/land use (LCLU). LCLU is always influenced by human actions and environmental features and processes, and it mediates the interactions of these two factors. This means that land use changes are primarily due to human actions, which are associated with economic development, technology, environmental change, and especially population growth, which usually proceeds at a rate parallel to that of land use change [2,3]. However, traditional monitoring methods require direct observations in the field; they are usually not only ineffective, expensive, time-consuming, and labor intensive but also limited to the local scale. Hence, remote sensing with analysis techniques is highly recommended, and there has been an increasing demand for LCLU studies since the first launch of Earth observation satellites in 1972 with Landsat-1. Since that time, the monitoring and mapping of LCLUs over large areas and in a consistent manner has been made possible with Earth observation (EO) data, and detection of these changes by EO data is necessary for the better management of territory and resources. Moreover, each new generation of satellite equipment increases the resolution of sensors that collect high spatial resolution data for LCLU mapping and monitoring [4]. Since high-resolution satellite images are now available, land cover change mapping and monitoring at the landscape or local scale have developed rapidly [5,6,7].

Several national and international organizations have produced regional land change maps that represent a location on a single date (e.g., CORINE Land Cover 2000 in Europe), with Landsat observations acquired in a target year interval (e.g., ±1–3 years). Some programs repeat land cover mapping periodically (e.g., NLCD 2001/2006/2011 in the United States and CSBIO in France) to allow the observation of the changes. The local accuracy of these global or national land cover maps generated from coarse spatial resolution data is low, especially in regions with fragmented land covers [8].

At the same time, for studies at larger scales, satellite data have been used to monitor LCLU changes worldwide in various fields of research, such as mapping cropland conversions [9], monitoring urbanization and its impacts [10,11,12], monitoring deforestation [13,14,15,16,17], evaluating the environment [18,19,20] and biodiversity losses, and examining the influence of LCLU on climate change [21]. Nonetheless, all types of land use might lead to detrimental impacts and effects in many fields: for example, the abandonment of agricultural land without restoration is linked to a specific set of problems, including landscape degradation and an increased risk of erosion [4]. These irreversible impacts of LCLU change have significantly increased in recent decades, so mapping and monitoring LCLU is very important as the first step in the study and management of this phenomenon.

In recent years, given the importance of LCLU changes and the increasing availability of open-access archived multitemporal datasets, many methods for analyzing and mapping LCLU changes have been developed. The diversity of algorithms for studying LCLU changes was also determined by the diversity of remote sensing sensor types (e.g., multispectral, hyperspectral, and SAR). Among the most commonly used satellite images in change detection (CD) studies are multispectral images due to the diversity of the types of sensors used to collect the data and the high temporal resolution of datasets for this type of study. For example, Wang et al. 2018 [22] conducted a study in a coastal area of Dongguan City, China, using SPOT-5 images acquired in 2005 and 2010. In this study, a scale self-adapting segmentation (SSAS) approach based on the exponential sampling of a scale parameter and the location of the local maximum of a weighted local variance was proposed to address the scale selection problem when segmenting images constrained by LCLU for detecting changes. Tran et al. 2015 [23] conducted a study in coastal areas of the Mekong Delta on changes in LCLU between 1973 and 2011 from Landsat and SPOT images. The supervised maximum likelihood classification algorithm was demonstrated to provide the best results from remotely sensed data when each class had a Gaussian distribution. Guan et al. 2020 [24] studied a CD and classification algorithm for urban expansion processes in Tianjin (a coastal city in China) based on a Landsat time series from 1985 to 2018. They applied the c-factor approach with the Ross Thick-LiSparse-R model to correct the bi-directional reflectance distribution function (BRDF) effect for each Landsat image and calculated a spatial line density feature for improving the CD and the classification. Dou and Chen 2017 [25] conducted a study in Shenzhen, a coastal city in China, from Landsat images using C4.5-based AdaBoost, and a hierarchical classification method was developed to extract specific classes with high accuracy by combining a specific number of base-classifier decisions. According to this study, the landscape of Shenzhen city has been profoundly changed by prominent urban expansion.

In addition, in recent decades, remote sensing techniques have progressed, and many machine learning methods have been developed for LCLU change studies, including support vector machines (SVMs), random forests (RFs), and convolutional neural networks (CNNs). Nonparametric machine learning algorithms such as SVM and RF are well-known for their optimal classification accuracies in land cover classification applications [26,27,28]. These algorithms have significant advantages and similar abilities in classifying multitemporal and multisensor data, including high-dimensional datasets and improved overall accuracy [29,30]. The accurate and timely detection of changes is the most important aspect of this process. Moreover, CNN, a more recently developed but well-represented deep learning method, allows the rapid and effective analysis and classification of LCLUs and has proven a suitable and reliable method for accurate CD in complex scenes. Although it is more recent, many studies have made use of this method. Wang et al., 2020 [31] proposed a new coarse-to-fine deep learning-based land-use CD method. In this study, several CNN models well-trained with a new scene classification dataset were able to provide accurate pixel-level range CD results with a high detection accuracy and reflect the changes in LCLU in detail. In another study, Han et al., 2020 [32] proposed a weighted Dempster-Shafer theory fusion method. This method achieved reliable CD results with high accuracy using only two very high-resolution multitemporal images by generating object-based CD through combining multiple pixel-based CDs.

At the same time, in the Pays de Brest, of which the Crozon Peninsula is part, a category of LCLU has been studied through shallow machine learning algorithms. Niculescu et al. 2018 [33] and Niculescu et al. 2020 [34] applied the rotation forest, canonical correlation forest, and random forest (RF) algorithms with satisfactory results for the classification of the different categories of land cover (vegetation) of the peninsula, as well as the summer and winter crops, from the synergy of optical and radar data from the Sentinel satellites.

LCLU changes in coastal areas have been studied with machine learning algorithms in different environments. Munoz et al. 2021 [35] analyzed the coastal wetland dynamics associated with urbanization, sea level rise, and hurricane impacts in the Mobile Bay watershed since 1984. They developed a land cover classification model with CNNs and a data fusion (DF) framework. The classification model achieved the highest overall accuracy (0.93) and f1-scores in the woody (0.90) and emergent wetland classes (0.99) when those datasets were fused into the framework.

More methodological work on the application of CNNs for CD was conducted by Jing et al. 2020 [36]. In this study, a CD method was proposed that combines a multiscale simple linear iterative clustering-convolutional neural network (SLIC-CNN) with stacked convolutional auto encoder (SCAE) features to improve the CD capabilities with HR satellite images. This method uses the self-learning SCAE architecture as the feature extractor to integrate multiscale, spectral, geometric, textural and deep structural features to enhance the characteristics of ground objects in images.

Machine learning methods were combined with Object-based Image Analysis (OBIA) techniques by Jozdani et al., 2019 [37] for urban LCLU classification. The multi-layer perceptron model led to the most accurate classification results in this study. However, it is also important to note that GB/XGB and SVM produced highly accurate classification results, demonstrating the versatility of these ML algorithms.

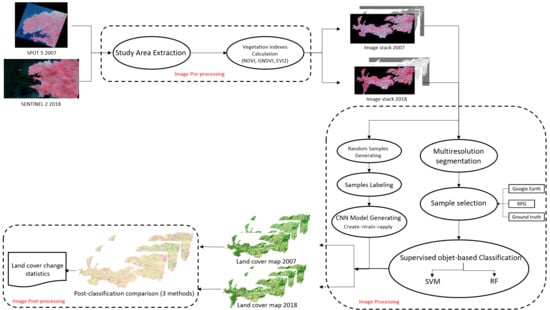

In this work, we aimed to study multiannual changes of LCLU in the Crozon Peninsula, an area that has mainly been marked by conversion between three types of LCLU: cropland, urban, and vegetation, in recent years, especially from 2007 to 2018. The challenge of this research was to deal with multiannual changes of a coastal area with different shapes and patterns by combining machine learning methods with PCC. To improve the CD capabilities using high-resolution satellite images, we implemented three remote sensing machine learning algorithms: SVM, RF combined with GEOBIA techniques, and CNN with SPOT 5 and Sentinel 2 data from 2007 and 2018, all effective and valid data sources. An evaluation of these three advanced machine-learning algorithms for image classification in terms of the overall accuracy (OA), producer’s accuracy (PA), user’s accuracy (UA), and confidence interval was conducted to more precisely detect the type of multiannual change.

2. Study Area

The study area, the Crozon Peninsula canton south of the Landerneau-Daoulas canton, is located on the west coast of France in the Pays de Brest, Department of Finistère and the region of Brittany (Figure 1).

It covers a land area of 365.4 km² that extends between latitudes 48°10′04″ N and 48°21′28″ N and longitudes 4°02′44″ W and 4°38′37″ W. The Crozon Peninsula is a sedimentary site with contrasting topography and contours that separates the Bay of Brest from the Bay of Douarnenez. The region is a mosaic of cliffs, dunes, moors, peat bogs, and coastal wetlands. The peninsula thus presents phytocenetic, faunistic, and landscape interests. The population of the study area is 29,893; this makes the population density approximately 81.6 per km². The topography of the Crozon Peninsula is mostly dominated by plains, except for hills in the east and northeast, and the elevation of the area ranges between 0 m and 300 m. Climatically, the study area is classified as type Cfb (temperate oceanic climate) according to the Köppen climate classification. On average, the Crozon Peninsula receives 1208 mm of precipitation per year, and the annual average temperature is 12.2 °C. The land cover is characterized by forest, shrubs, and grasslands, which are mostly in the west, as well as urban areas, cropland (mainly corn and wheat), and meadow.

Traditionally, the majority of local people practice agricultural or related activities in the Finistère Department, in which 57% of the department’s surface is devoted to agricultural use. However, the French National Defense provides more than half of the employment in the Crozon Peninsula; hence, other activity sectors (e.g., agriculture, industry, construction and commerce) are proportionally less important.

Nevertheless, the land cover was in sharp transition in our study area between 2008 and 2018, with the peninsula especially marked by growing service and commerce sectors. Therefore, the study area was chosen as a typical case for studying land cover changes.

3. Data

Operable high-quality cloud-free satellite images in this area are extremely rare due to the annual high-intensity rainfall and, hence, heavy cloud cover. Despite these limitations, three cloud-free images from two dates in 2007 and 2018 with the same scene area were acquired from either the SPOT or Sentinel platforms to study land cover changes in the study area during the summer, which is the growing season for crops (Table 1).

First, a SPOT-5 satellite image was obtained from the early summer of 2007. SPOT-5 was the fifth satellite in the SPOT series of CNES (the French space agency). It was launched in 2002 and completed its mission by the end of 2012. It provided very high spatial resolution (2.5 m in the panchromatic band and 10 m in the multispectral band) and wide-area coverage satellite images with a revisit frequency of 2 to 3 days [38]. The multispectral SPOT-5 image downloaded from the ESA was obtained by merging the 2.5 m panchromatic band and the 10 m multispectral band, so that the spatial detail of the image is identical to that observed with the panchromatic sensor (earth.esa.int).

Second, Sentinel-2 is an imaging mission that operates in the frame of the Copernicus (ex-GMES, Global Monitoring for Environment and Security) program, which is implemented by the European Commission (EC) and the European Space Agency (ESA). The twin polar-orbiting Sentinel-2 satellites (2A and 2B) continually deliver multispectral, high-resolution (10 m spatial resolution for B2, B3, B4, and B8; 20 m for B5, B6, B7, B8a, B11, and B12; and 60 m for B1, B9, and B10), wide-swath, open-access satellite imagery with a high revisit frequency (10 days for each satellite and five days combined) [39]. Two level 2A atmospheric effect-corrected Sentinel-2 images of the same date in the middle of the summer in 2018 were acquired from Theia (catalog.theia-land.fr); a mosaic was then created by combining the two images to cover the whole study area, and four spectral bands at a 10 m resolution (red, green, blue, and near-infrared) were extracted for further use.
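The mosaic and band-extraction step described above can be illustrated with a minimal sketch. It is not the processing chain used in the study: the tiles are toy nested lists, the two images are assumed to be already co-registered on the same grid, and the names `select_bands`, `mosaic`, `make_tile`, and the `NODATA` marker are hypothetical.

```python
# Sentinel-2 bands delivered at 10 m resolution, as listed in the text.
BANDS_10M = ("B2", "B3", "B4", "B8")  # blue, green, red, near-infrared

NODATA = None  # hypothetical marker for pixels outside a tile's footprint


def select_bands(tile, bands=BANDS_10M):
    """Keep only the requested bands of a tile given as {band_name: 2-D list}."""
    return {name: tile[name] for name in bands}


def mosaic(tile_a, tile_b):
    """Merge two co-registered tiles band by band.

    Where both tiles hold a valid value, tile_a takes precedence
    (an assumption made for this sketch).
    """
    merged = {}
    for name in tile_a:
        merged[name] = [
            [a if a is not NODATA else b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(tile_a[name], tile_b[name])
        ]
    return merged


def make_tile(left, right):
    """Build a toy 1 x 2 pixel tile with the same values in every band."""
    return {b: [[left, right]] for b in ("B2", "B3", "B4", "B8", "B11")}


# Two toy tiles that each cover one half of the scene.
west = make_tile(0.1, NODATA)
east = make_tile(NODATA, 0.2)

scene = mosaic(select_bands(west), select_bands(east))
```

In this toy case, `scene` holds only the four 10 m bands, and each band covers both halves of the scene. With real Level-2A products, a raster library would handle georeferencing and nodata masks.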

For the purpose of land cover identification at the sample selection step, we also used Google Earth and the RPG (graphic parcel register) maps, a French database with agricultural parcel identification, as the reference data, complemented by observation and survey in the field when necessary.

6. Discussion

6.1. LCLU Classification

In this study, three different algorithms were applied to two high spatial resolution satellite images from 2007 and 2018, which were both acquired in the growing season, to map LCLU changes in the Crozon Peninsula, a highly fragmented region. Our objective was to map different LCLUs (cropland, water, vegetation and non-vegetation, including urban land use) and then map and monitor LCLU changes between two years. Another important aspect in the application of the machine learning methods was to recognize the specific type of change when collecting samples for training.

Three classification algorithms (SVM, RF, and CNN) were used, and all of them achieved a good accuracy level, with the overall accuracy ranging from 70% to 90%, despite the complex landscape and small field size. The two machine learning methods, RF and SVM, were applied as object-based approaches, in which features other than spectral values play an important role in the classification.

The RF and SVM models both performed well for the LCLU classification; nonetheless, the CNN is clearly better suited to performing classification in our study area, as indicated by the accuracy assessments. According to the results presented in Figure 5 and the statistical evaluations of accuracy provided in Table 3, Table 4, Table 5 and Table 6, the proposed method (CNN) generally performs best regardless of the type of dataset and accuracy index. Therefore, the CNN has proven to be a feasible, reliable method with remarkable performance for precisely mapping LCLU and analyzing the changes. Our experiments have shown the superiority of the CNN over other state-of-the-art machine learning classifiers in terms of classification accuracy. However, some important considerations regarding its effectiveness are worth discussing. Previous applications of CNN models have tended to emphasize the complexity of these models compared to RF models and SVMs. Parameter tuning and optimization are often performed by cross-validation for CNN algorithms. However, in some cases, CNN models can have millions of weights to optimize at each iteration [83]. In such situations, training these models can be tedious, and manual tuning or rules of thumb have to be used in place of full cross-validation. This manual manipulation could have repercussions on the accuracy of the model. A well-known solution is transfer learning [84]: instead of a model being trained from scratch, pretrained models are retrained on the user’s classes of interest. Pretrained models allow for better accuracy [85]. In our study, the deep model was very useful for generalization.

6.2. Accuracy Assessment

In accordance with Table 4, the highest OA was obtained by applying the CNN algorithm to 2007 (83.11 ± 03.27). The RF gives the lowest OA, 70.51 ± 08.38. The SVM showed intermediate values between 77.03 ± 04.36 (2007) and 78.14 ± 06.40 (2018). Regarding the PA and UA (validated results) by class, the best results, apart from the water class, were obtained with SVM for the PA in 2018 for the non-vegetation class (99.08 ± 01.31). The worst results were always obtained with the SVM for the PA of the cropland class (with bare soil), which was 35.19 ± 06.81.
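For readers less familiar with these indices, the following minimal sketch shows how OA, PA, and UA are derived from a confusion matrix. The matrix is a toy example, not data from this study, and the confidence interval uses the normal approximation to the binomial at the 95% level, which is an assumption: the interval method behind the reported values is not described in this section. The function name `accuracy_metrics` is hypothetical.

```python
import math


def accuracy_metrics(matrix, z=1.96):
    """Compute OA, its confidence half-width, and per-class PA and UA.

    matrix[i][j] is the number of validation samples whose reference
    class is i and whose mapped class is j. Every class is assumed to
    have at least one reference sample and one mapped sample.
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[k][k] for k in range(n))

    oa = correct / total
    # Normal-approximation half-width of the interval around OA (assumption).
    half_width = z * math.sqrt(oa * (1 - oa) / total)

    row_totals = [sum(row) for row in matrix]                              # reference totals
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]  # mapped totals

    pa = [matrix[k][k] / row_totals[k] for k in range(n)]  # producer's accuracy
    ua = [matrix[k][k] / col_totals[k] for k in range(n)]  # user's accuracy
    return oa, half_width, pa, ua


# Toy 3-class confusion matrix (rows: reference, columns: mapped).
toy_matrix = [
    [40, 10, 0],
    [5, 30, 5],
    [0, 0, 10],
]

oa, half_width, pa, ua = accuracy_metrics(toy_matrix)
print(f"OA = {100 * oa:.2f} ± {100 * half_width:.2f}")
```

PA is the share of reference samples of a class that were mapped correctly (the complement of omission error), whereas UA is the share of pixels mapped to a class that truly belong to it (the complement of commission error).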

However, the lower accuracy occurred for 2018, and we deduced that the spatial resolution of the image is a crucial part of classification that can explain the differences between the SVM and RF’s overall accuracy in the different years. The RF performed better on the 2007 data with a 2.5 m spatial resolution SPOT 5 image; in contrast, SVM achieved a better accuracy in 2018 with a 10 m spatial resolution Sentinel 2 image. Among all of the classes, except for the water areas, which have a very different spectral signature than the other classes, vegetation was the best-detected class, most likely because it occupied the greatest part of the study area; therefore, it also had the largest sample dataset, since all of the samples were randomly and evenly selected in the images. Non-vegetation areas, which are mostly urban land, rocks, and sand, were relatively simple to discriminate. Cropland with bare soil was better classified than planted cropland. Misclassification largely occurred between vegetation and crops due to their spectral signature similarities, especially during the growing season, and their spatial proximity; some croplands were small and intermixed with trees or shrubs.

The choice of a good classifier is very important, but at the same time, the features extracted from the image are also important. GEOBIA techniques allow the use of hand-created features in the classification phase. The number and choice of features clearly influence the final classification. At the same time, the features of an RF and SVM are learned automatically from the input data during training. The features automatically learned by RF and SVM based on the spectral, contextual and spatial property classes increased the generalization capabilities of the models.

6.3. LCLU Changes Detection (2007–2018)

CD techniques can be grouped into two types of objectives: change enhancement and change “from-to” information extraction. In this study, the detection and direction of the changes were processed by applying PCC on a pixel-by-pixel basis through SVM, RF, and CNN classification, which gave the best performance for detecting the change classes between the series of multitemporal images. The multitemporal images were stacked together and then classified directly to detect land cover changes. This work presents a CD protocol that allows reliable PCC to account for the classification accuracies, landscape heterogeneity, and pixel sizes. However, the accuracy of the final change map depends on the quality of each individual classification [86,87,88]. Errors in the individual maps are additive in the combination (change mapping). In connection with this error question, Liu and Zhou, 2004 [89] proposed a set of rules for the probability of changes from one class to another based on field knowledge. They used these rules to separate “real changes” from possible classification errors. Thus, they determined the accuracy of trajectory changes by assessing the plausibility of the changes obtained through a PCC.
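The “from-to” logic of PCC and the way classification errors carry over into the change map can be sketched as follows. The two maps are toy examples, the function names are hypothetical, and the product rule for the expected accuracy of the change map is a common rule of thumb that assumes independent errors in the two classifications; it is not a computation reported by this study.

```python
from collections import Counter


def from_to_changes(map_t1, map_t2):
    """Count pixel transitions (class at t1, class at t2) between two
    classified maps that share the same grid."""
    transitions = Counter()
    for row_t1, row_t2 in zip(map_t1, map_t2):
        for c1, c2 in zip(row_t1, row_t2):
            transitions[(c1, c2)] += 1
    return transitions


def changed_fraction(transitions):
    """Share of pixels whose class differs between the two dates."""
    total = sum(transitions.values())
    changed = sum(n for (c1, c2), n in transitions.items() if c1 != c2)
    return changed / total


def expected_change_map_accuracy(oa_t1, oa_t2):
    """Rule-of-thumb accuracy of a PCC change map, assuming the errors
    of the two classifications are independent."""
    return oa_t1 * oa_t2


# Toy classified maps (2 x 3 pixels) for the two dates.
map_2007 = [
    ["vegetation", "vegetation", "cropland"],
    ["water", "vegetation", "non-vegetation"],
]
map_2018 = [
    ["non-vegetation", "vegetation", "cropland"],
    ["water", "cropland", "non-vegetation"],
]

transitions = from_to_changes(map_2007, map_2018)
for (c1, c2), n in sorted(transitions.items()):
    print(f"{c1} -> {c2}: {n}")
```

Under the independence assumption, two classifications with overall accuracies of 0.7703 and 0.7814 (the SVM values reported in Section 6.2) would yield a change map with an expected accuracy of roughly 0.60, which illustrates why the quality of each individual classification matters so much for PCC.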

Our classification results showed that it is possible to map land use with different algorithms and analyze land use changes between two years. First, the increase in cropland surface indicates that agricultural activities remained an important economic sector in the peninsula, and there were essentially no signs of abandoned agricultural land during the study period. Second, non-vegetation areas increased dramatically due to urbanization, especially in some coastal cities that are highly frequented by tourists, since tourism is highly developed in the peninsula. The very dense population corresponds to a high level of artificialization of the territory, which is growing faster than the national average, fueled by the construction of housing and nonresidential premises. This human concentration also implies the progression of urbanization toward the hinterlands, where the construction of housing and the arrival of new residents increased significantly. Artificialization is the main change that has affected the coastal zone of the peninsula, with preferential locations around the major urban centers and on certain coastal sectors. In 1986, the Littoral Law provided an initial regulatory response to the need to control the anarchic development of construction on the coast. Despite the regulatory protection established by this law, the changes are also important in the 100 m band nearest to the sea and then decrease as one moves away from it. One of the most significant consequences of development has been the drastic reduction of the vegetation surface. Vegetation has been removed for two main reasons: increasing agricultural activities and urban land growth. Therefore, economic development can have negative social and economic implications on the peninsula; in addition, environmental conservation and protection are required.

7. Conclusions

CD methods involve analyzing the state of a specific geographic area to identify variations from images taken at different times. With satellite remote sensing, high spatial and spectral resolution images are recorded and used to analyze the scales of changes. In this study, in order to detect multiannual change classes between the series of multitemporal images using a pixel-by-pixel PCC technique, three different well-known and frequently used algorithms, including two machine learning algorithms (SVM and RF) and one deep learning algorithm (CNN), were tested on two high spatial resolution satellite images. RF and SVM were applied with an object-based approach, which requires a segmentation step to create subpixel-level objects to avoid the error of mixed pixels since the study area was mainly covered by small fields. The inclusion of the CNN significantly improved the classification performance (5–10% increase in the overall accuracy) compared to the SVM and RF classifiers applied in our study.

Our results showed that the use of remote sensing for complex multiannual small-scale LCLU change studies was completely reliable. The study resulted in two maps that showed five different land uses (cropland, cropland with bare soil, water, vegetation and non-vegetation) in 2007 and 2018 with high accuracy. In particular, the CNN had an overall accuracy that ranged from 80 to 90%, making it the most suitable algorithm in our case, even though RF and SVM also achieved good accuracy levels.

The results may also lead to the conclusion that economic development is rapidly occurring in the peninsula, manifested as urban land and tourism growth, increasing agricultural activities, and a sharp decrease in vegetated areas. Hence, environmental protection measures will be needed in the future. In this context of change, the coastal zones of the peninsula tend to specialize socially and economically, and the maintenance of the agricultural areas, as well as the preservation of the natural areas, are both more sensitive and more complex. Moreover, it appears that the change in land use must be understood in the context of climate change, which is a factor in the aggravation of risks (e.g., flooding and coastal risks), especially in the sectors that are most subjected to urbanization pressures.

Although we observed relatively high classification accuracies, several uncertainties and limitations persisted. The first is the misclassification between vegetation and planted croplands: the very similar spectral characteristics that they share and their geographical localization lead to this confusion. Second, the two classifications were based on two images with different spatial resolutions; thus, some errors of the land use change analysis could have been induced. Third, useful cloud-free satellite images of the growing season were not easy to obtain in our study area; therefore, a series of annual mappings with more precision was not performed in the study. Hence, some recommendations can be made for further studies, such as applying more vegetation indices or using hyperspectral images to differentiate between vegetation and planted croplands or exploring the potential of synthetic-aperture radar images as a supplement to the traditional optical images on cloudy days.