Sentinel-2 Image Scene Classification: A Comparison between Sen2Cor and a Machine Learning Approach

Abstract

1. Introduction

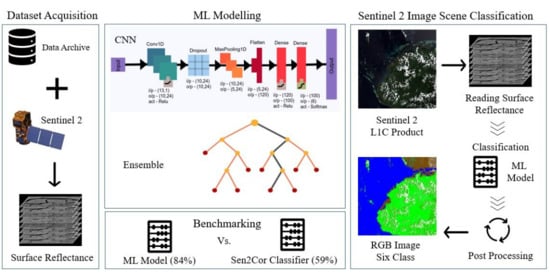

- Focusing on the problem of optical satellite image scene classification, ensemble-based and Neural Network-based ML models are proposed;

- An extended, manually labeled Sentinel-2 database is set up by adding Surface Reflectance values to a previously available dataset;

- The diversity of the formulated dataset and the ML models' sensitivity, bias, and generalization ability are tested over geographically independent L1C images;

- The ML models are benchmarked against the existing Sen2Cor package, which was developed for calibrating and classifying Sentinel-2 imagery.

2. Sen2Cor

2.1. Cloud and Snow

2.2. Vegetation

2.3. Soil and Water

2.4. Cirrus Cloud

2.5. Cloud Shadow

3. Materials and Methods

3.1. Dataset Creation

- For each product ID in the original dataset, L1C products were downloaded from the CREODIAS [39] platform;

- For each downloaded L1C product, a corresponding L2A product was generated using Sen2Cor v2.5.5. Afterwards, the Scene Classification of each L2A product was retrieved, resulting in an extended dataset for the Sen2Cor assessment;

- The downloaded L1C products were resampled to 20 m (allowing spatial analysis) and the 13 bands of imagery were retrieved, resulting in an extended dataset for the ML model.
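The resampling step can be sketched as follows. The text does not say which resampling method was used, so this is a minimal NumPy sketch under stated assumptions: the 10 m bands (B02, B03, B04, B08) are aggregated to 20 m by averaging 2 × 2 blocks, the 60 m bands (B01, B09, B10) are upsampled by nearest-neighbour repetition, and the 20 m bands are kept unchanged. The helpers `to_20m` and `stack_bands` are hypothetical; an operational pipeline would more likely use a dedicated tool such as GDAL.

```python
import numpy as np

# Native ground sampling distance (in metres) of each Sentinel-2 MSI band.
BAND_RESOLUTION = {
    "B01": 60, "B02": 10, "B03": 10, "B04": 10, "B05": 20, "B06": 20, "B07": 20,
    "B08": 10, "B8A": 20, "B09": 60, "B10": 60, "B11": 20, "B12": 20,
}


def to_20m(band: np.ndarray, resolution: int) -> np.ndarray:
    """Bring one 2-D band onto the 20 m grid (assumed resampling methods)."""
    if resolution == 20:
        return band.astype(np.float32)
    if resolution == 10:
        # Average each 2 x 2 block of 10 m pixels into one 20 m pixel.
        h, w = (band.shape[0] // 2) * 2, (band.shape[1] // 2) * 2
        blocks = band[:h, :w].reshape(h // 2, 2, w // 2, 2)
        return blocks.mean(axis=(1, 3)).astype(np.float32)
    if resolution == 60:
        # Nearest-neighbour upsampling: each 60 m pixel becomes 3 x 3 pixels at 20 m.
        return np.repeat(np.repeat(band, 3, axis=0), 3, axis=1).astype(np.float32)
    raise ValueError(f"unsupported resolution: {resolution} m")


def stack_bands(bands: dict) -> np.ndarray:
    """Stack the 13 bands (name -> 2-D array) into an (H, W, 13) cube at 20 m."""
    return np.stack([to_20m(bands[n], r) for n, r in BAND_RESOLUTION.items()], axis=-1)
```

For a full L1C tile, the 10 m (10980 × 10980), 20 m (5490 × 5490) and 60 m (1830 × 1830) rasters all end up at 5490 × 5490 pixels, so every pixel carries one value per band.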

3.1.1. Original Data

3.1.2. Extended Data

3.2. Classification Algorithms

3.2.1. Decision Tree (DT)

3.2.2. Random Forest (RF)

3.2.3. Extra Tree (ET)

3.2.4. Convolutional Neural Networks (CNNs)

3.3. Feature Analysis

3.4. Experimental Setup

- Input layer: The input to this layer is a matrix holding the values of the 13 bands;

- Convolutional-1D layer: This layer extracts features from the input. Multiple activation feature maps are obtained by convolving the output of the previous layer with the convolution kernels. In our architecture, we used a convolution kernel size of 4;

- Dropout: A random portion of the outputs is set to zero in each batch to avoid strong dependencies between adjacent layers;

- Pooling layer: This layer reduces the dimension of the feature maps and abstracts the features by summarizing neighboring values within each map. This helps to reduce overfitting and, at the same time, increases computation speed;

- Flatten layer: Here, the (5 × 24) output of the previous layer is flattened into a single vector, giving a feature space of width 120;

- Dense layer: In this layer, each neuron receives input from all the neurons in the previous layer, making it a densely connected neural network layer. The layer has a weight matrix W and a bias vector b, and it operates on the activations of the previous layer;

- Softmax activation: The softmax is a normalized exponential function, also used in multinomial logistic regression. It converts the final output vector of the CNN model into a probability distribution over the sample classes (in our case, a vector of length 6, one entry per class).
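The layer sequence above can be traced with a NumPy forward pass whose only purpose is to check the tensor shapes. Several details are assumptions rather than stated facts: 24 filters and a pooling size of 2 are inferred from the (5 × 24) flatten input (13 − 4 + 1 = 10 positions, halved to 5), while the ReLU activation and the single dense layer feeding the softmax are hypothetical. The weights are random, not trained, so this is an illustration of the shapes, not the authors' implementation.

```python
import numpy as np

N_BANDS, KERNEL, N_FILTERS, POOL, N_CLASSES = 13, 4, 24, 2, 6

rng = np.random.default_rng(0)
conv_len = N_BANDS - KERNEL + 1        # "valid" convolution: 13 - 4 + 1 = 10
pooled_len = conv_len // POOL          # 10 // 2 = 5
flat_dim = pooled_len * N_FILTERS      # 5 * 24 = 120

# Randomly initialised (untrained) parameters, for shape illustration only.
conv_w = rng.normal(scale=0.1, size=(KERNEL, 1, N_FILTERS))  # (kernel, in_ch, out_ch)
conv_b = np.zeros(N_FILTERS)
dense_w = rng.normal(scale=0.1, size=(flat_dim, N_CLASSES))  # weight matrix W
dense_b = np.zeros(N_CLASSES)                                # bias vector b


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def forward(x):
    """x: (batch, 13, 1) band values -> (batch, 6) class probabilities."""
    batch = x.shape[0]
    # Conv1D: slide the kernel along the band axis (stride 1, no padding).
    windows = np.stack([x[:, i:i + KERNEL, :] for i in range(conv_len)], axis=1)  # (b, 10, 4, 1)
    conv = np.einsum("blkc,kcf->blf", windows, conv_w) + conv_b                   # (b, 10, 24)
    conv = np.maximum(conv, 0.0)                                                   # ReLU (assumed)
    # Dropout only acts during training; at inference it is the identity.
    pooled = conv[:, :pooled_len * POOL, :].reshape(batch, pooled_len, POOL, N_FILTERS).max(axis=2)
    flat = pooled.reshape(batch, flat_dim)                                         # (b, 120)
    return softmax(flat @ dense_w + dense_b)                                       # (b, 6)
```

Calling `forward` on a batch of shape `(n, 13, 1)` returns an `(n, 6)` array whose rows sum to 1; the predicted class of each pixel is the index of the largest entry.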

4. Results

- In terms of micro-F1, CNN performs similarly to Random Forest and Extra Trees; the difference is small (almost zero), so we cannot state that CNN outperforms the others. Moreover, each algorithm performs better than the others on specific classes; for example, ET has the highest micro-F1 for the Cirrus, Cloud, and Other classes, whereas RF has the highest for Water and CNN for Snow.

- Looking at precision and recall for the Cirrus and Shadow classes, Sen2Cor has high precision but low recall. This means that Sen2Cor assigns relatively few pixels to Cirrus and Shadow (a high rate of false negatives), although most of the labels it does assign are correct (a low rate of false positives).

- Overall, the three Machine Learning algorithms generate models with similar performance, with differences ranging from 0 to 7 percentage points between the “best” and the “worst” model (for example, Cirrus has a “best” micro-F1 of 0.79 with ET and a “worst” micro-F1 of 0.72 with RF). Across classes, however, there is considerable variation: precision values are close to or above 90% for classes Snow and Shadow and below 75% for the Other class; for recall, the highest values are obtained for the classes Cloud and Other (above 80%) and the lowest for the Cirrus and Shadow classes (between 67% and 77%). Regarding micro-F1, the only class with values below 80% is Cirrus, while classes Snow and Water reach 90% or higher.

- The ML approaches outperform Sen2Cor, most markedly for the Cirrus and Snow classes, for which Sen2Cor micro-F1 values are below 50%. These low values stem from the large gap between precision and recall (for Cirrus, precision is above 90% while recall is 10%; for Snow, precision is above 85% and recall around 30%). Considering the overall micro-F1, the ML models show an increase of about 25 percentage points (from 59% to 84%) over the Sen2Cor Scene Classification algorithm.
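To make the precision/recall trade-off and the micro-averaged scores concrete, the sketch below computes them from a confusion matrix. The two-class matrix in the usage lines is hypothetical (not taken from the results): a class with few predicted pixels, almost all of them correct, gets high precision, low recall and therefore a low F1. It also illustrates why the Overall row of the results table shows identical precision, recall, and micro-F1: with exactly one label per pixel, the pooled false positives equal the pooled false negatives, so all three equal the overall accuracy. The function name is illustrative.

```python
import numpy as np


def per_class_and_micro(cm):
    """cm[i, j] = number of pixels of true class i predicted as class j."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as the class but belonging to another
    fn = cm.sum(axis=1) - tp  # belonging to the class but predicted as another
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    # Micro-averaging pools TP, FP and FN over all classes before taking the ratios.
    micro_p = tp.sum() / (tp.sum() + fp.sum())
    micro_r = tp.sum() / (tp.sum() + fn.sum())
    micro_f1 = 2 * micro_p * micro_r / (micro_p + micro_r)
    return precision, recall, f1, micro_f1


# Hypothetical example: class 0 is rarely predicted, but almost always correctly.
p, r, f1, micro_f1 = per_class_and_micro([[10, 90], [1, 899]])
# class 0: precision 0.91, recall 0.10, F1 0.18; micro-F1 0.909 (= accuracy)
```

A class that is never predicted would cause a division by zero here; scikit-learn's `precision_recall_fscore_support` handles that case through its `zero_division` parameter.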

5. Discussion

6. Conclusions

- Add more training scenes by applying image augmentation (e.g., elastic transformations [78]) to the existing training data.

- Incorporate radar information and assess its impact on Water, Shadow, Cirrus, Cloud, and Snow detection.

- Study different CNN architectures.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ML | Machine Learning |
| ESA | European Space Agency |
| MSI | MultiSpectral Instrument |
| TOA | Top-of-Atmosphere |
| BOA | Bottom-of-Atmosphere |
| SCL | Scene Classification |
| AOT | Aerosol Optical Thickness |
| WV | Water Vapour |
| MAJA | Maccs-Atcor Joint Algorithm |
| Fmask | Function of mask |
| NDVI | Normalized Difference Vegetation Index |
| NIR | Near Infra-Red |
| RF | Random Forest |
| ET | Extra Trees |
| CNN | Convolutional Neural Networks |
| NN | Neural Networks |
| GVI | Global Vegetation Index |
| NDSI | Normalized Difference Salinity Index |
| SCI | Soil Composition Index |

Appendix A. Classifying Sentinel-2 L1C Product

References

1. Mohajerani, S.; Krammer, T.A.; Saeedi, P. Cloud detection algorithm for remote sensing images using fully convolutional neural networks. arXiv 2018, arXiv:1810.05782.

2. Hashem, N.; Balakrishnan, P. Change analysis of land use/land cover and modelling urban growth in Greater Doha, Qatar. Ann. GIS 2015, 21, 233–247.

3. Rahman, A.; Kumar, S.; Fazal, S.; Siddiqui, M.A. Assessment of land use/land cover change in the North-West District of Delhi using remote sensing and GIS techniques. J. Indian Soc. Remote Sens. 2012, 40, 689–697.

4. Liou, Y.A.; Nguyen, A.K.; Li, M.H. Assessing spatiotemporal eco-environmental vulnerability by Landsat data. Ecol. Indic. 2017, 80, 52–65.

5. Nguyen, K.A.; Liou, Y.A. Mapping global eco-environment vulnerability due to human and nature disturbances. MethodsX 2019, 6, 862–875.

6. Dao, P.D.; Liou, Y.A. Object-based flood mapping and affected rice field estimation with Landsat 8 OLI and MODIS data. Remote Sens. 2015, 7, 5077–5097.

7. San, B.T. An evaluation of SVM using polygon-based random sampling in landslide susceptibility mapping: The Candir catchment area (Western Antalya, Turkey). Int. J. Appl. Earth Obs. Geoinf. 2014, 26, 399–412.

8. Sentinel-2 Mission. Available online: https://sentinel.esa.int/web/sentinel/missions/sentinel-2 (accessed on 4 February 2020).

9. European Copernicus Programme. Available online: https://www.copernicus.eu/en (accessed on 22 June 2020).

10. Frantz, D.; Haß, E.; Uhl, A.; Stoffels, J.; Hill, J. Improvement of the Fmask algorithm for Sentinel-2 images: Separating clouds from bright surfaces based on parallax effects. Remote Sens. Environ. 2018, 215, 471–481.

11. Main-Knorn, M.; Louis, J.; Hagolle, O.; Müller-Wilm, U.; Alonso, K. The Sen2Cor and MAJA cloud masks and classification products. In Proceedings of the 2nd Sentinel-2 Validation Team Meeting, ESA-ESRIN, Frascati, Rome, Italy, 29–31 January 2018; pp. 29–31.

12. Zhu, Z.; Woodcock, C.E. Object-based cloud and cloud shadow detection in Landsat imagery. Remote Sens. Environ. 2012, 118, 83–94.

13. Zhu, Z.; Woodcock, C.E. Automated cloud, cloud shadow, and snow detection in multitemporal Landsat data: An algorithm designed specifically for monitoring land cover change. Remote Sens. Environ. 2014, 152, 217–234.

14. Hagolle, O.; Huc, M.; Pascual, D.V.; Dedieu, G. A multi-temporal method for cloud detection, applied to FORMOSAT-2, VENμS, LANDSAT and SENTINEL-2 images. Remote Sens. Environ. 2010, 114, 1747–1755.

15. Petrucci, B.; Huc, M.; Feuvrier, T.; Ruffel, C.; Hagolle, O.; Lonjou, V.; Desjardins, C. MACCS: Multi-Mission Atmospheric Correction and Cloud Screening tool for high-frequency revisit data processing. In Image and Signal Processing for Remote Sensing XXI; International Society for Optics and Photonics: Bellingham, WA, USA, 2015; Volume 9643, p. 964307.

16. Moustakidis, S.; Mallinis, G.; Koutsias, N.; Theocharis, J.B.; Petridis, V. SVM-based fuzzy decision trees for classification of high spatial resolution remote sensing images. IEEE Trans. Geosci. Remote Sens. 2011, 50, 149–169.

17. Munoz-Mari, J.; Tuia, D.; Camps-Valls, G. Semisupervised classification of remote sensing images with active queries. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3751–3763.

18. Oliva, A.; Torralba, A. Modeling the shape of the scene: A holistic representation of the spatial envelope. Int. J. Comput. Vis. 2001, 42, 145–175.

19. Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

20. Hu, F.; Xia, G.S.; Hu, J.; Zhang, L. Transferring deep convolutional neural networks for the scene classification of high-resolution remote sensing imagery. Remote Sens. 2015, 7, 14680–14707.

21. Li, Z.; Shen, H.; Cheng, Q.; Liu, Y.; You, S.; He, Z. Deep learning based cloud detection for medium and high resolution remote sensing images of different sensors. ISPRS J. Photogramm. Remote Sens. 2019, 150, 197–212.

22. Zhang, C.; Sargent, I.; Pan, X.; Li, H.; Gardiner, A.; Hare, J.; Atkinson, P.M. Joint Deep Learning for land cover and land use classification. Remote Sens. Environ. 2019, 221, 173–187.

23. Baetens, L.; Desjardins, C.; Hagolle, O. Validation of Copernicus Sentinel-2 Cloud Masks Obtained from MAJA, Sen2Cor, and FMask Processors Using Reference Cloud Masks Generated with a Supervised Active Learning Procedure. Remote Sens. 2019, 11, 433.

24. Hagolle, O.; Huc, M.; Desjardins, C.; Auer, S.; Richter, R. Maja Algorithm Theoretical Basis Document. 2017. Available online: https://zenodo.org/record/1209633#.XpdnZvnQ-Cg (accessed on 4 August 2020).

25. Louis, J.; Debaecker, V.; Pflug, B.; Main-Knorn, M.; Bieniarz, J.; Mueller-Wilm, U.; Cadau, E.; Gascon, F. Sentinel-2 Sen2Cor: L2A processor for users. In Proceedings of the Living Planet Symposium 2016, Spacebooks Online, Prague, Czech Republic, 9–13 May 2016; pp. 1–8.

26. Qiu, S.; Zhu, Z.; He, B. Fmask 4.0: Improved cloud and cloud shadow detection in Landsats 4–8 and Sentinel-2 imagery. Remote Sens. Environ. 2019, 231, 111205.

27. Zou, Q.; Ni, L.; Zhang, T.; Wang, Q. Deep learning based feature selection for remote sensing scene classification. IEEE Geosci. Remote Sens. Lett. 2015, 12, 2321–2325.

28. Yang, Y.; Newsam, S. Bag-of-visual-words and spatial extensions for land-use classification. In Proceedings of the 18th SIGSPATIAL International Conference on Advances in Geographic Information Systems, San Jose, CA, USA, 2–5 November 2010; pp. 270–279.

29. Xia, G.S.; Hu, J.; Hu, F.; Shi, B.; Bai, X.; Zhong, Y.; Zhang, L.; Lu, X. AID: A benchmark data set for performance evaluation of aerial scene classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3965–3981.

30. Penatti, O.A.; Nogueira, K.; Dos Santos, J.A. Do deep features generalize from everyday objects to remote sensing and aerial scenes domains? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 44–51.

31. Helber, P.; Bischke, B.; Dengel, A.; Borth, D. Eurosat: A novel dataset and deep learning benchmark for land use and land cover classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2217–2226.

32. Zhou, W.; Newsam, S.; Li, C.; Shao, Z. PatternNet: A benchmark dataset for performance evaluation of remote sensing image retrieval. ISPRS J. Photogramm. Remote Sens. 2018, 145, 197–209.

33. Sumbul, G.; Charfuelan, M.; Demir, B.; Markl, V. Bigearthnet: A large-scale benchmark archive for remote sensing image understanding. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 5901–5904.

34. Sentinel-2 MSI—Level 2A Products Algorithm Theoretical Basis Document. Available online: https://earth.esa.int/c/document_library/get_file?folderId=349490&name=DLFE-4518.pdf (accessed on 4 February 2020).

35. Rouse, J.; Haas, R.; Schell, J.; Deering, D. Monitoring vegetation systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 351, 309.

36. Kohonen, T. Self-organized formation of topologically correct feature maps. Biol. Cybern. 1982, 43, 59–69.

37. Russell, S.J.; Norvig, P. Artificial Intelligence—A Modern Approach, 3rd ed.; Prentice Hall Press: Upper Saddle River, NJ, USA, 2010; ISBN 0136042597.

38. Mohri, M.; Rostamizadeh, A.; Talwalkar, A. Foundations of Machine Learning. Adaptive Computation and Machine Learning; MIT Press: Cambridge, MA, USA, 2012; Volume 31, p. 32.

39. CREODIAS Platform. Available online: https://creodias.eu/ (accessed on 4 August 2020).

40. Hollstein, A.; Segl, K.; Guanter, L.; Brell, M.; Enesco, M. Ready-to-use methods for the detection of clouds, cirrus, snow, shadow, water and clear sky pixels in Sentinel-2 MSI images. Remote Sens. 2016, 8, 666.

41. ESA, S.O. Resolution and Swath. Available online: https://sentinel.esa.int/web/sentinel/missions/sentinel-2/instrument-payload/resolution-and-swath (accessed on 4 August 2020).

42. Quinlan, J.R. Learning decision tree classifiers. ACM Comput. Surv. (CSUR) 1996, 28, 71–72.

43. Kuhn, M.; Johnson, K. Classification trees and rule-based models. In Applied Predictive Modeling; Springer: Berlin/Heidelberg, Germany, 2013; pp. 369–413.

44. Decision Tree. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.tree.DecisionTreeClassifier.html (accessed on 22 June 2020).

45. Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2009.

46. Al-Obeidat, F.; Al-Taani, A.T.; Belacel, N.; Feltrin, L.; Banerjee, N. A fuzzy decision tree for processing satellite images and landsat data. Procedia Comput. Sci. 2015, 52, 1192–1197.

47. Hansen, M.C.; Potapov, P.V.; Moore, R.; Hancher, M.; Turubanova, S.A.; Tyukavina, A.; Thau, D.; Stehman, S.; Goetz, S.J.; Loveland, T.R.; et al. High-resolution global maps of 21st-century forest cover change. Science 2013, 342, 850–853.

48. Wright, C.; Gallant, A. Improved wetland remote sensing in Yellowstone National Park using classification trees to combine TM imagery and ancillary environmental data. Remote Sens. Environ. 2007, 107, 582–605.

49. Ensemble Methods. Available online: https://scikit-learn.org/stable/modules/ensemble.html (accessed on 22 June 2020).

50. Random Forest. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestClassifier (accessed on 22 June 2020).

51. Elith, J.; Leathwick, J.R.; Hastie, T. A working guide to boosted regression trees. J. Anim. Ecol. 2008, 77, 802–813.

52. Extra Trees. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.ExtraTreesClassifier.html (accessed on 22 June 2020).

53. Geurts, P.; Ernst, D.; Wehenkel, L. Extremely randomized trees. Mach. Learn. 2006, 63, 3–42.

54. Liu, Y.; Zhong, Y.; Fei, F.; Zhu, Q.; Qin, Q. Scene classification based on a deep random-scale stretched convolutional neural network. Remote Sens. 2018, 10, 444.

55. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

56. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

57. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

58. Lin, M.; Chen, Q.; Yan, S. Network in network. arXiv 2013, arXiv:1312.4400.

59. Abdel-Hamid, O.; Deng, L.; Yu, D. Exploring Convolutional Neural Network Structures and Optimization Techniques for Speech recognition. In Proceedings of the Interspeech 2013, Lyon, France, 25–29 August 2013; Volume 11, pp. 73–75.

60. How to Develop 1D Convolutional Neural Network Models for Human Activity Recognition. Available online: https://machinelearningmastery.com/cnn-models-for-human-activity-recognition-time-series-classification/ (accessed on 3 June 2020).

61. Kramer, O. Scikit-learn. In Machine Learning for Evolution Strategies; Springer: Berlin/Heidelberg, Germany, 2016; pp. 45–53.

62. Feature Selection. Available online: https://scikit-learn.org/stable/modules/classes.html (accessed on 4 February 2020).

63. Feature Selection chi2. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.feature_selection.chi2.html (accessed on 4 February 2020).

64. Feature Selection mutual_info_classif. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.feature_selection.mutual_info_classif.html (accessed on 4 February 2020).

65. Feature Selection f_classif. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.feature_selection.f_classif.html (accessed on 4 February 2020).

66. Feature Selection f_regression. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.feature_selection.f_regression.html (accessed on 4 February 2020).

67. F1 Score. Available online: https://en.wikipedia.org/wiki/F1_score (accessed on 3 June 2020).

68. RandomizedSearchCV. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.model_selection.RandomizedSearchCV.html (accessed on 22 June 2020).

69. LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

70. McNemar, Q. Note on the sampling error of the difference between correlated proportions or percentages. Psychometrika 1947, 12, 153–157.

71. Bowker, A.H. A test for symmetry in contingency tables. J. Am. Stat. Assoc. 1948, 43, 572–574.

72. Lancaster, H.O.; Seneta, E. Chi-square distribution. In Encyclopedia of Biostatistics, 2nd ed.; American Cancer Society: New York, NY, USA, 2005; ISBN 9780470011812.

73. Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

74. Henrich, V.; Götze, E.; Jung, A.; Sandow, C.; Thürkow, D.; Gläßer, C. Development of an online indices database: Motivation, concept and implementation. In Proceedings of the 6th EARSeL Imaging Spectroscopy SIG Workshop Innovative Tool for Scientific and Commercial Environment Applications, Tel Aviv, Israel, 16–18 March 2009; pp. 16–18.

75. Crist, E.P.; Cicone, R.C. A Physically-Based Transformation of Thematic Mapper Data-The TM Tasseled Cap. IEEE Trans. Geosci. Remote Sens. 1984, 22, 256–263.

76. Richardson, A.D.; Duigan, S.P.; Berlyn, G.P. An evaluation of noninvasive methods to estimate foliar chlorophyll content. New Phytol. 2002, 153, 185–194.

77. Alkhaier, F. Soil Salinity Detection Using Satellite Remote Sensing. 2003. Available online: https://webapps.itc.utwente.nl/librarywww/papers_2003/msc/wrem/khaier.pdf (accessed on 16 January 2021).

78. Gabrani, M.; Tretiak, O.J. Elastic transformations. In Proceedings of the Conference Record of the Thirtieth Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 3–6 November 1996; Volume 1, pp. 501–505.

79. Raiyani, K. Ready to Use Machine Learning Approach towards Sentinel-2 Image Scene Classification. 2020. Available online: https://github.com/kraiyani/Sentinel-2-Image-Scene-Classification-A-Comparison-between-Sen2Cor-and-a-Machine-Learning-Approach (accessed on 14 January 2021).

80. GDAL/OGR contributors. GDAL/OGR Geospatial Data Abstraction Software Library; Open Source Geospatial Foundation: Chicago, IL, USA, 2020.

| No. | Class | Color |
|---|---|---|
| 0 | No Data (Missing data on projected tiles) | Black |
| 1 | Saturated or defective pixel | Red |
| 2 | Dark features / Shadows | Very dark gray |
| 3 | Cloud shadows | Dark brown |
| 4 | Vegetation | Green |
| 5 | Bare soils / deserts | Dark yellow |
| 6 | Water (dark and bright) | Blue |
| 7 | Cloud low probability | Dark gray |
| 8 | Cloud medium probability | Gray |
| 9 | Cloud high probability | White |
| 10 | Thin cirrus | Very bright blue |
| 11 | Snow or ice | Very bright pink |

| Class | Coverage | Points | Distribution (%) |
|---|---|---|---|
| Cloud | opaque clouds | 1,031,819 | 15.57 |
| Cirrus | cirrus and vapor trails | 956,623 | 14.43 |
| Snow | snow and ice | 882,763 | 13.32 |
| Shadow | clouds, cirrus, mountains, buildings | 991,393 | 14.96 |
| Water | lakes, rivers, seas | 1,071,426 | 16.16 |
| Other | remaining: crops, mountains, urban | 1,694,454 | 25.56 |
| Total | - | 6,628,478 | 100 |

| Bands\Class | Other | Water | Shadow | Cirrus | Cloud | Snow |
|---|---|---|---|---|---|---|
| B01 | 47 | 229 | 1076 | 5013 | 34,334 | 259,562 |
| B02 | 587 | 313 | 2694 | 5589 | 47,265 | 285,053 |
| B03 | 190 | 289 | 1232 | 3742 | 40,254 | 256,421 |
| B04 | 536 | 429 | 3855 | 6897 | 79,380 | 300,538 |
| B05 | 516 | 447 | 4300 | 8099 | 84,426 | 305,134 |
| B06 | 546 | 477 | 4609 | 8597 | 99,335 | 299,270 |
| B07 | 576 | 559 | 4653 | 8858 | 121,825 | 290,569 |
| B08 | 517 | 424 | 3942 | 7880 | 96,182 | 277,403 |
| B8A | 607 | 597 | 4513 | 8903 | 133,901 | 281,007 |
| B09 | 0 | 0 | 0 | 6 | 3730 | 60,674 |
| B10 | 0 | 0 | 0 | 0 | 0 | 0 |
| B11 | 597 | 0 | 1 | 0 | 10,112 | 0 |
| B12 | 43 | 0 | 0 | 0 | 671 | 0 |
| Total | 4762 (0.02%) | 3764 (0.03%) | 30,875 (0.24%) | 63,581 (0.51%) | 751,415 (5.60%) | 2,615,631 (22.79%) |
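The percentages in the Total row are consistent with dividing each column total by the number of labeled points of that class (taken from the class-distribution table) multiplied by the 13 bands. This reading is inferred from the numbers rather than stated in the text; the short check below reproduces all six percentages under that assumption.

```python
# Column totals from the band table and per-class point counts from the class-distribution table.
totals = {"Other": 4762, "Water": 3764, "Shadow": 30875,
          "Cirrus": 63581, "Cloud": 751415, "Snow": 2615631}
points = {"Other": 1694454, "Water": 1071426, "Shadow": 991393,
          "Cirrus": 956623, "Cloud": 1031819, "Snow": 882763}
N_BANDS = 13

# Assumed definition: share (%) of all (point, band) values of the class.
share = {c: round(100 * totals[c] / (points[c] * N_BANDS), 2) for c in totals}
```

Under this reading, for example, the Snow total of 2,615,631 values corresponds to about 22.79% of the 882,763 × 13 Snow band values.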

| Mapped Class | Corresponding Sen2Cor Class (Table 1) |
|---|---|
| Cloud | Cloud high probability |
| Cirrus | Thin Cirrus |
| Snow | Snow |
| Shadow | Shadow, Cloud Shadow |
| Water | Water |
| Other | No Data, Defective Pixel, Vegetation, Soil, Cloud low and medium probability |
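The class mapping above can be applied to a Sen2Cor SCL raster with a lookup table. This is a minimal sketch, assuming the SCL codes of Table 1 and a hypothetical integer encoding of the six classes in the order Other, Water, Shadow, Cirrus, Cloud, Snow; `SCL_TO_CLASS` and `map_scl` are illustrative names.

```python
import numpy as np

# Sen2Cor SCL codes (Table 1) grouped into the six classes used in this study.
SCL_TO_CLASS = {
    0: "Other",    # No Data
    1: "Other",    # Saturated or defective pixel
    2: "Shadow",   # Dark features / Shadows
    3: "Shadow",   # Cloud shadows
    4: "Other",    # Vegetation
    5: "Other",    # Bare soils / deserts
    6: "Water",    # Water
    7: "Other",    # Cloud low probability
    8: "Other",    # Cloud medium probability
    9: "Cloud",    # Cloud high probability
    10: "Cirrus",  # Thin cirrus
    11: "Snow",    # Snow or ice
}
CLASSES = ["Other", "Water", "Shadow", "Cirrus", "Cloud", "Snow"]


def map_scl(scl: np.ndarray) -> np.ndarray:
    """Convert an integer array of SCL codes (0-11) into indices into CLASSES."""
    lut = np.array([CLASSES.index(SCL_TO_CLASS[code]) for code in range(12)])
    return lut[scl]  # fancy indexing applies the lookup to every pixel at once
```

Because the lookup is a single indexing operation, it scales to a full 5490 × 5490 SCL raster without an explicit per-pixel loop.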

| Rank | Chi2 | Mutual Info. | Anova | Pearson |
|---|---|---|---|---|
| 1 | B11 | B11 | B11 | B11 |
| 2 | B12 | B01 | B12 | B12 |
| 3 | B04 | B12 | B8A | B8A |
| 4 | B8A | B02 | B07 | B07 |
| 5 | B03 | B03 | B08 | B08 |
| 6 | B07 | B04 | B03 | B03 |
| 7 | B05 | B06 | B06 | B06 |
| 8 | B02 | B05 | B01 | B01 |
| 9 | B08 | B07 | B02 | B02 |
| 10 | B06 | B8A | B04 | B04 |
| 11 | B01 | B08 | B05 | B05 |
| 12 | B09 | B09 | B09 | B09 |
| 13 | B10 | B10 | B10 | B10 |
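The four rankings can be reproduced in outline with scikit-learn's univariate scoring functions. The reference list cites `chi2`, `mutual_info_classif`, `f_classif` and `f_regression`; matching them to the Chi2, Mutual Info., Anova and Pearson columns is an assumption, as is passing the class labels to `f_regression` as a numeric target. `rank_bands` is a hypothetical helper.

```python
import numpy as np
from sklearn.feature_selection import chi2, f_classif, f_regression, mutual_info_classif

BANDS = ["B01", "B02", "B03", "B04", "B05", "B06", "B07",
         "B08", "B8A", "B09", "B10", "B11", "B12"]


def rank_bands(X, y, random_state=0):
    """Return, per scoring function, the band names ordered from highest to lowest score.

    X: (n_samples, 13) non-negative band values; y: integer class labels.
    """
    scores = {
        "Chi2": chi2(X, y)[0],                      # requires non-negative features
        "Mutual Info.": mutual_info_classif(X, y, random_state=random_state),
        "Anova": f_classif(X, y)[0],                # one-way ANOVA F statistic
        "Pearson": f_regression(X, y)[0],           # F statistic from Pearson correlation (assumed mapping)
    }
    return {name: [BANDS[i] for i in np.argsort(-s)] for name, s in scores.items()}
```

Bands that are constant in the sample (as B10 can be) yield undefined or zero scores, which in a ranking of this kind naturally places them last.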

| Class | Points | Share of Class Points in Test Set (%) |
|---|---|---|
| Other | 174,369 | 10.29 |
| Water | 117,010 | 10.92 |
| Shadow | 155,715 | 15.71 |
| Cirrus | 175,988 | 18.40 |
| Cloud | 134,315 | 13.02 |
| Snow | 154,751 | 17.53 |
| Total | 912,148 | 13.76 |

| Attribute | Description |
|---|---|
| Features | 13 (value of each band) |
| Classes | 6 (Other, Water, Shadow, Cirrus, Cloud, Snow) |
| Training set | 50 Products (5,716,330 samples) |
| Test set | 10 Products (912,148 samples) |
| Language and Library | Python and Scikit-learn |
| System Specification | Intel(R) Xeon(R) Silver 4110 CPU @ 2.10GHz |
| CNN Early Stopping | monitor = 'val_loss', mode = 'min', patience = 2 |
| CNN Model Checkpoint | monitor = 'val_acc', mode = 'max' |

| Parameter | RF | ET |
|---|---|---|
| criterion | gini | gini |
| max_depth | 20 | 20 |
| min_samples_split | 50 | 10 |
| min_samples_leaf | 1 | 1 |
| max_features | sqrt | sqrt |
| n_estimators | 242 | 279 |
| bootstrap | True | True |
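The hyperparameters above map directly onto the constructors of scikit-learn's `RandomForestClassifier` and `ExtraTreesClassifier`. This is a minimal sketch; `random_state`, `n_jobs` and the `make_models` helper are assumptions not given in the table. Note that `bootstrap=True` differs from the `ExtraTreesClassifier` default (`False`), so it is set explicitly to match the table.

```python
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier

# Settings shared by both ensembles, as listed in the hyperparameter table.
COMMON = dict(criterion="gini", max_depth=20, min_samples_leaf=1,
              max_features="sqrt", bootstrap=True)


def make_models(random_state=0, n_jobs=-1):
    """Build the RF and ET classifiers (random_state and n_jobs are assumed extras)."""
    rf = RandomForestClassifier(n_estimators=242, min_samples_split=50,
                                random_state=random_state, n_jobs=n_jobs, **COMMON)
    et = ExtraTreesClassifier(n_estimators=279, min_samples_split=10,
                              random_state=random_state, n_jobs=n_jobs, **COMMON)
    return rf, et
```

Both models are then trained with `fit(X, y)` on an `(n_samples, 13)` array of band values and the corresponding class labels.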

| Class | Precision RF | Precision ET | Precision CNN | Precision SCL | Recall RF | Recall ET | Recall CNN | Recall SCL | Micro-F1 RF | Micro-F1 ET | Micro-F1 CNN | Micro-F1 SCL | Support |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Other | 0.74 | 0.74 | 0.74 | 0.39 | 0.91 | 0.96 | 0.92 | 0.97 | 0.82 | 0.83 | 0.82 | 0.56 | 174,369 |
| Water | 0.96 | 0.93 | 0.93 | 0.84 | 0.86 | 0.87 | 0.87 | 0.83 | 0.91 | 0.90 | 0.90 | 0.84 | 117,010 |
| Shadow | 0.89 | 0.91 | 0.91 | 0.96 | 0.77 | 0.73 | 0.75 | 0.54 | 0.83 | 0.81 | 0.83 | 0.69 | 155,715 |
| Cirrus | 0.78 | 0.82 | 0.78 | 0.91 | 0.67 | 0.76 | 0.75 | 0.10 | 0.72 | 0.79 | 0.76 | 0.18 | 175,988 |
| Cloud | 0.77 | 0.81 | 0.79 | 0.62 | 0.91 | 0.90 | 0.90 | 0.94 | 0.83 | 0.86 | 0.84 | 0.75 | 134,315 |
| Snow | 0.93 | 0.94 | 0.96 | 0.86 | 0.88 | 0.86 | 0.86 | 0.31 | 0.90 | 0.90 | 0.91 | 0.46 | 154,751 |
| Overall | 0.83 | 0.84 | 0.84 | 0.59 | 0.83 | 0.84 | 0.84 | 0.59 | 0.83 | 0.84 | 0.84 | 0.59 | 912,148 |

| Class | DT | RF | ET | CNN | Sen2Cor | Support |
|---|---|---|---|---|---|---|
| Other | 63.29 | 72.3 | 74.16 | 74.43 | 64.96 | 1,694,454 (25.56%) |
| Water | 63.81 | 73.4 | 76.69 | 73.88 | 80.73 | 1,071,426 (16.16%) |
| Shadow | 53.98 | 63.96 | 61.45 | 64.63 | 50.57 | 991,393 (14.96%) |
| Cirrus | 47.58 | 56.63 | 42.97 | 51.58 | 24.08 | 956,623 (14.43%) |
| Cloud | 65.25 | 75.08 | 75.33 | 72.67 | 75.04 | 1,031,819 (15.57%) |
| Snow | 74.67 | 84.90 | 87.00 | 83.43 | 61.40 | 882,763 (13.32%) |
| Overall | 67.95 | 76.43 | 76.77 | 77.54 | 66.40 | 6,628,478 (100%) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Raiyani, K.; Gonçalves, T.; Rato, L.; Salgueiro, P.; Marques da Silva, J.R. Sentinel-2 Image Scene Classification: A Comparison between Sen2Cor and a Machine Learning Approach. Remote Sens. 2021, 13, 300. https://doi.org/10.3390/rs13020300