Camera Self-Calibration with GNSS Constrained Bundle Adjustment for Weakly Structured Long Corridor UAV Images

Abstract

1. Introduction

2. Methodology

2.1. Camera Distortion Model

2.1.1. Brown Model

2.1.2. 7th Polynomial Model

2.1.3. Legendre Model

2.1.4. Fourier Model

2.1.5. Jacobi–Fourier Model

2.2. GNSS-Aided Bundle Adjustment with Inequality Constraint

| Algorithm 1. The procedure of IBA |
|---|
| 1: |
| 2: UpdateD = 1; ; |
| 3: for (It = 0; It < ; It++) { |
| 4: if (UpdateD) { |
| 5: UpdateD = 0; |
| 6: ; ; |
| 7: ; ; |
| 8: ; |
| 9: } |
| 10: |
| 11: ; |
| 12: |
| 13: if ( { |
| 14: ; continue; |
| 15: } |
| 16: ; |
| 17: if () { |
| 18: ; |
| 19: if () break; |
| 20: ; UpdateD = 1; ; |
| 21: } else |
| 22: ; |
| 23: } |
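
Only the control flow of Algorithm 1 survives. That flow has an update flag (UpdateD), a bounded iteration loop, a `continue` when a step is rejected and a `break` on convergence, which resembles a damped Levenberg–Marquardt loop. The sketch below illustrates the underlying idea of inequality-constrained BA on a toy linear problem. It is not a reconstruction of Algorithm 1. It minimises a "GNSS" error E_g subject to a "reprojection" error bound E_c < e_t. The constraint is handled with a barrier term w / (e_t − E_c) whose weight w shrinks over outer rounds, which is a standard interior-point device. The matrices A, C, the function name `iba_barrier_lm`, the factor 1.1 used to set e_t, and the schedule for w are all assumptions made for illustration.

```python
import numpy as np


def iba_barrier_lm(x0, A, b, C, d, e_t, w0=1.0, n_outer=6, n_inner=50):
    """Minimise E_g(x) = ||C x - d||^2 subject to E_c(x) = ||A x - b||^2 < e_t.

    Toy stand-in for IBA (hypothetical): E_c plays the role of the
    reprojection error and E_g the GNSS error. The inequality is enforced by
    the barrier term w / (e_t - E_c(x)); w shrinks 10x per outer round, and
    each round runs Levenberg-Marquardt-style damped Newton steps.
    """
    def parts(x):
        rc, rg = A @ x - b, C @ x - d
        return rc, rg, rc @ rc, rg @ rg

    def cost(x, w):
        _, _, ec, eg = parts(x)
        return np.inf if ec >= e_t else w / (e_t - ec) + eg

    x = np.asarray(x0, dtype=float).copy()
    if not np.isfinite(cost(x, w0)):
        raise ValueError("x0 must satisfy E_c(x0) < e_t")
    w = w0
    for _ in range(n_outer):
        lam, f = 1e-3, cost(x, w)
        for _ in range(n_inner):
            rc, rg, ec, _eg = parts(x)
            gap = e_t - ec
            grad_c = 2.0 * A.T @ rc                      # gradient of E_c
            g = w * grad_c / gap**2 + 2.0 * C.T @ rg     # gradient of barrier cost
            H = (2.0 * w * A.T @ A / gap**2              # exact Hessian of barrier
                 + 2.0 * w * np.outer(grad_c, grad_c) / gap**3
                 + 2.0 * C.T @ C)                        # plus Hessian of E_g
            x_new = x + np.linalg.solve(H + lam * np.eye(x.size), -g)
            f_new = cost(x_new, w)
            if f_new < f:        # feasible and lower cost: accept, relax damping
                done = f - f_new < 1e-14
                x, f, lam = x_new, f_new, lam * 0.1
                if done:
                    break
            else:                # infeasible or worse: reject, damp harder
                lam *= 10.0
        w *= 0.1
    return x


# Toy usage: "GNSS" positions d are biased away from the best fit of A x = b.
rng = np.random.default_rng(0)
A = rng.normal(size=(20, 3))
x_true = np.array([1.0, -2.0, 0.5])
b = A @ x_true + 0.1 * rng.normal(size=20)
C, d = np.eye(3), x_true + np.array([0.3, -0.2, 0.1])
x_ba = np.linalg.lstsq(A, b, rcond=None)[0]   # unconstrained minimiser of E_c
e_m = np.sum((A @ x_ba - b) ** 2)             # minimum "reprojection" error
x_iba = iba_barrier_lm(x_ba, A, b, C, d, e_t=1.1 * e_m)
```

The result stays inside the bound E_c < e_t. It moves as far towards the GNSS positions as that bound allows, so the GNSS error can be reduced without letting the reprojection error grow past the threshold.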

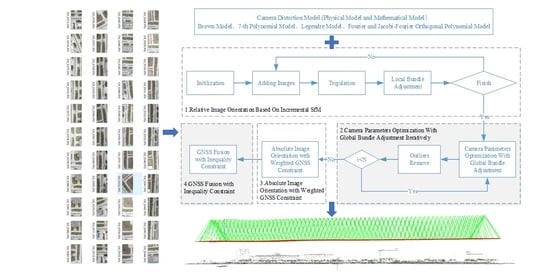

2.3. Camera Self-Calibration for Long Corridor UAV Images

- From the perspective of camera self-calibration, next-best-image selection does not consider whether the scene structure is degraded. If the seed images have a poor structure and lack height variation, the estimated camera intrinsic parameters become unstable, which may even cause the final reconstruction to fail.

- UAV images are now often tagged with high-precision GNSS positions, which can alleviate the “bowl effect” in long corridor image blocks. However, the existing incremental SfM framework for camera self-calibration does not take full advantage of GNSS information for absolute orientation.

- Inaccurately estimated distortion parameters degrade the 3D point clouds generated by dense matching. In UAV images of high-voltage transmission lines, the power lines are usually only 1–2 pixels wide. When the distortion parameters are estimated inaccurately, the reconstructed power-line point clouds are therefore noisy and scattered. Figure 3 shows the dense point clouds reconstructed by ColMap with inaccurate camera distortion parameters.

- Relative orientation of images based on the incremental SfM framework. Only local BA is performed to limit error accumulation. The focal length, principal point, and distortion parameters are kept fixed, which avoids the unstable, large variations of the distortion parameters and focal length caused by weak image network structure and scene degradation. The results of relative orientation are shown in Figure 5a.

- Global BA and iterative optimization of camera parameters. During iterative global BA and gross error elimination, the camera's intrinsic and distortion parameters are freed progressively in the order (a) distortion parameters, (b) focal length, and (c) principal point. This strategy alleviates the correlation between the focal length, principal point, and distortion parameters. In our experiments, the camera intrinsic parameters became stable after more than two iterations, so global BA is iterated three times in this paper. In each iteration, the distortion, focal length, and principal point parameters are optimized with this progressive freeing strategy, which provides better initial values for the GNSS-constrained BA.

- Traditional weighted GNSS-constrained absolute orientation. At this stage, the GNSS-constrained BA optimizes the focal length, principal point, and distortion parameters jointly as unknowns. The error equation is given in Formula (12).


- GNSS fusion based on IBA. This paper uses IBA to further fuse the GNSS observations. The main steps are as follows:
  - (a) The camera focal length, principal point, and distortion parameters are optimized jointly as unknowns during camera self-calibration.
  - (b) The initial input parameters are the solution of the weighted GNSS-constrained BA. The sum of squared reprojection errors of that solution serves as the minimum value used for the inequality constraint.
  - (c) All image projection centers and their corresponding GNSS positions are used as constraints in the global IBA, which is solved iteratively.

  The final model reconstructed with the proposed camera self-calibration strategy is shown in Figure 5b.
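
The last two steps above are weighted GNSS-constrained absolute orientation and GNSS fusion based on IBA. As a hedged illustration, their two objectives can be written in a generic form. This is a sketch under assumed notation, not a reproduction of the paper's Formula (12). The symbols are defined as follows:

- $\mathbf{u}_{ij}$ is the observed image point of object point $\mathbf{X}_j$ in image $i$.
- $\pi(\cdot)$ projects with rotation $\mathbf{R}_i$, projection center $\mathbf{C}_i$, intrinsics $\mathbf{K}$ (focal length and principal point) and distortion parameters $\boldsymbol{\delta}$.
- $\mathbf{G}_i$ is the GNSS position of image $i$.
- $\lambda$ is an assumed GNSS weight.
- $e_t$ is an assumed threshold slightly above the minimum $e_m$ of $E_c$ (for example $e_t = \gamma e_m$ with $\gamma > 1$).

$$
E_c(\mathbf{x}) = \sum_{i,j} \left\| \mathbf{u}_{ij} - \pi\left(\mathbf{K}, \boldsymbol{\delta}, \mathbf{R}_i, \mathbf{C}_i, \mathbf{X}_j\right) \right\|^2,
\qquad
E_g(\mathbf{x}) = \sum_{i} \left\| \mathbf{C}_i - \mathbf{G}_i \right\|^2
$$

$$
\text{Weighted GNSS-constrained BA:}\quad \min_{\mathbf{x}}\; E_c(\mathbf{x}) + \lambda\, E_g(\mathbf{x})
$$

$$
\text{IBA:}\quad \min_{\mathbf{x}}\; E_g(\mathbf{x}) \quad \text{subject to} \quad E_c(\mathbf{x}) \le e_t
$$

Under one reading of the IBA step, the weighted solution supplies both the starting point $\mathbf{x}$ and the reference value $e_m$. $\mathbf{K}$ and $\boldsymbol{\delta}$ remain unknowns in both stages.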

3. Results and Discussion

3.1. Test Sites and Datasets

3.2. Analysis of the Influence of Camera Distortion Models

3.3. Analysis of the Performance of Proposed Self-Calibration

3.4. Comparison with State-Of-The-Art Software

3.4.1. Bundle Adjustment without GCP

3.4.2. Bundle Adjustment with GCP

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Xiang, T.-Z.; Xia, G.-S.; Zhang, L. Mini-unmanned aerial vehicle-based remote sensing: Techniques, applications, and prospects. IEEE Geosci. Remote Sens. Mag. 2019, 7, 29–63.

- Jiang, S.; Jiang, W.; Huang, W.; Yang, L. UAV-Based Oblique Photogrammetry for Outdoor Data Acquisition and Offsite Visual Inspection of Transmission Line. Remote Sens. 2017, 9, 278.

- Jiang, S.; Jiang, W. UAV-based Oblique Photogrammetry for 3D Reconstruction of Transmission Line: Practices and Applications. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing & Spatial Information Sciences, Enschede, The Netherlands, 10–14 June 2019; pp. 401–406.

- Zhang, H.; Yang, W.; Yu, H.; Zhang, H.; Xia, G.-S. Detecting power lines in UAV images with convolutional features and structured constraints. Remote Sens. 2019, 11, 1342.

- Zhang, Y.; Yuan, X.; Li, W.; Chen, S. Automatic Power Line Inspection Using UAV Images. Remote Sens. 2017, 9, 824.

- Huang, W.; Jiang, S.; Jiang, W. A Model-Driven Method for Pylon Reconstruction from Oblique UAV Images. Sensors 2020, 20, 824.

- Triggs, B. Autocalibration from Planar Scenes. In Proceedings of the European Conference on Computer Vision, Berlin, Germany, 2–6 June 1998; pp. 89–105.

- Zhang, Z. A Flexible New Technique for Camera Calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Oniga, E.; Pfeifer, N.; Loghin, A.-M. 3D Calibration Test-Field for Digital Cameras Mounted on Unmanned Aerial Systems (UAS). Remote Sens. 2018, 10, 2017.

- Brown, D.C. Close-Range Camera Calibration. Photogramm. Eng. 1971, 37, 855–866.

- Fraser, C.S. Digital camera self-calibration. ISPRS J. Photogramm. Remote Sens. 1997, 52, 149–159.

- Luhmann, T.; Robson, S.; Kyle, S.; Harley, I.A. Close Range Photogrammetry: Principles, Techniques and Applications; Whittles Publishing: Dunbeath, Caithness, Scotland, 2006; Volume 3.

- Fitzgibbon, A.W. Simultaneous Linear Estimation of Multiple View Geometry and Lens Distortion. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001; p. I.

- Kukelova, Z.; Pajdla, T. A Minimal Solution to the Autocalibration of Radial Distortion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–7.

- Kukelova, Z.; Pajdla, T. A Minimal Solution to Radial Distortion Autocalibration. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2410–2422.

- Jiang, F.; Kuang, Y.; Solem, J.E.; Åström, K. A Minimal Solution to Relative Pose with Unknown Focal Length and Radial Distortion. In Proceedings of the Asian Conference on Computer Vision, Singapore, 1–5 November 2014; pp. 443–456.

- Kukelova, Z.; Heller, J.; Bujnak, M.; Fitzgibbon, A.; Pajdla, T. Efficient Solution to the Epipolar Geometry for Radially Distorted Cameras. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 11–18 December 2015; pp. 2309–2317.

- Ebner, H. Self calibrating block adjustment. Bildmess. Luftbildwes. 1976, 44, 128–139.

- Gruen, A. Accuracy, reliability and statistics in close-range photogrammetry. In Proceedings of the Inter-Congress Symposium of ISP Commission V, Stockholm, Sweden, 14–17 August 1978.

- Tang, R.; Fritsch, D.; Cramer, M.; Schneider, W. A Flexible Mathematical Method for Camera Calibration in Digital Aerial Photogrammetry. Photogramm. Eng. Remote Sens. 2012, 78, 1069–1077.

- Tang, R.; Fritsch, D.; Cramer, M. New rigorous and flexible Fourier self-calibration models for airborne camera calibration. ISPRS J. Photogramm. Remote Sens. 2012, 71, 76–85.

- Babapour, H.; Mokhtarzade, M.; Valadan Zoej, M.J. Self-calibration of digital aerial camera using combined orthogonal models. ISPRS J. Photogramm. Remote Sens. 2016, 117, 29–39.

- Wu, C. Critical Configurations for Radial Distortion Self-Calibration. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 28 June 2014; pp. 25–32.

- Zhou, Y.; Rupnik, E.; Meynard, C.; Thom, C.; Pierrot-Deseilligny, M. Simulation and Analysis of Photogrammetric UAV Image Blocks—Influence of Camera Calibration Error. Remote Sens. 2019, 12, 22.

- Tournadre, V.; Pierrot-Deseilligny, M.; Faure, P.H. UAV Linear Photogrammetry. In Proceedings of the International Archives of Photogrammetry, Remote Sensing and Spatial Information Sciences, La Grande Motte, France, 28 September–3 October 2015; p. 327.

- Polic, M.; Steidl, S.; Albl, C.; Kukelova, Z.; Pajdla, T. Uncertainty based camera model selection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–18 June 2020; pp. 5991–6000.

- Griffiths, D.; Burningham, H. Comparison of pre- and self-calibrated camera calibration models for UAS-derived nadir imagery for a SfM application. Prog. Phys. Geogr. Earth Environ. 2018, 43, 215–235.

- Jaud, M.; Passot, S.; Le Bivic, R.; Delacourt, C.; Grandjean, P.; Le Dantec, N. Assessing the accuracy of high resolution digital surface models computed by PhotoScan® and MicMac® in sub-optimal survey conditions. Remote Sens. 2016, 8, 465.

- Salach, A.; Bakuła, K.; Pilarska, M.; Ostrowski, W.; Górski, K.; Kurczyński, Z. Accuracy assessment of point clouds from LiDAR and dense image matching acquired using the UAV platform for DTM creation. ISPRS Int. J. Geo-Inf. 2018, 7, 342.

- Jaud, M.; Passot, S.; Allemand, P.; Le Dantec, N.; Grandjean, P.; Delacourt, C. Suggestions to limit geometric distortions in the reconstruction of linear coastal landforms by SfM photogrammetry with PhotoScan® and MicMac® for UAV surveys with restricted GCPs pattern. Drones 2019, 3, 2.

- Nahon, A.; Molina, P.; Blázquez, M.; Simeon, J.; Capo, S.; Ferrero, C. Corridor mapping of sandy coastal foredunes with UAS photogrammetry and mobile laser scanning. Remote Sens. 2019, 11, 1352.

- Ferrer-González, E.; Agüera-Vega, F.; Carvajal-Ramírez, F.; Martínez-Carricondo, P. UAV Photogrammetry Accuracy Assessment for Corridor Mapping Based on the Number and Distribution of Ground Control Points. Remote Sens. 2020, 12, 2447.

- Lhuillier, M. Incremental Fusion of Structure-from-Motion and GPS Using Constrained Bundle Adjustments. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2489–2495.

- Gopaul, N.S.; Wang, J.; Hu, B. Camera auto-calibration in GPS/INS/stereo camera integrated kinematic positioning and navigation system. J. Glob. Position. Syst. 2016, 14, 3.

- Snow, W.L.; Childers, B.A.; Shortis, M.R. The calibration of video cameras for quantitative measurements. In Proceedings of the 39th International Instrumentation Symposium, Albuquerque, NM, USA, 2–6 May 1993; pp. 103–130.

- Wang, J.; Wang, W.; Ma, Z. Hybrid-model based Camera Distortion Iterative Calibration Method. Bull. Surv. Mapp. 2019, 4, 103–106.

- Jiang, S.; Jiang, C.; Jiang, W. Efficient structure from motion for large-scale UAV images: A review and a comparison of SfM tools. ISPRS J. Photogramm. Remote Sens. 2020, 167, 230–251.

- Schonberger, J.L.; Frahm, J.M. Structure-from-Motion Revisited. In Proceedings of the IEEE Conference on Computer Vision & Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113.

| Method | Incremental SfM in ColMap | The Proposed Method |
|---|---|---|
| Local BA | Camera intrinsic parameters are freed in local BA. | Camera intrinsic parameters are fixed in local BA. |
| Global BA | Global BA is performed each time the number of registered images grows by a certain percentage; the camera intrinsic parameters are freed in global BA. | Global BA is performed after all images are registered; distortion parameters, focal length, and principal point are freed progressively over the global BA iterations. |
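
The table above compares a progressive freeing strategy: distortion parameters, then focal length, then principal point, within each of the three global BA iterations. This strategy can be expressed as a schedule of parameter masks. The code below is a minimal sketch resting on the following assumptions:

- The 8-entry intrinsic layout uses Brown-style k1, k2, k3, p1, p2 terms.
- Freeing is cumulative, so each stage keeps the previously freed blocks free.
- The names `BLOCKS`, `ORDER`, `staged_schedule`, `free_mask` and `apply_step` are hypothetical and come from neither the paper nor ColMap.

```python
import numpy as np

# Hypothetical intrinsic vector layout (an assumption, Brown-style distortion):
# index 0: focal length f; 1-2: principal point (cx, cy);
# 3-7: distortion (k1, k2, k3, p1, p2).
BLOCKS = {
    "distortion": [3, 4, 5, 6, 7],
    "focal": [0],
    "principal_point": [1, 2],
}
ORDER = ["distortion", "focal", "principal_point"]  # stages (a), (b), (c)


def staged_schedule(n_global_iters=3):
    """Yield (iteration, freed blocks); blocks are freed cumulatively in ORDER."""
    for it in range(n_global_iters):
        for k in range(1, len(ORDER) + 1):
            yield it, tuple(ORDER[:k])


def free_mask(freed, n_params=8):
    """Boolean mask selecting the intrinsic entries a BA stage may update."""
    mask = np.zeros(n_params, dtype=bool)
    for block in freed:
        mask[BLOCKS[block]] = True
    return mask


def apply_step(intrinsics, step, mask):
    """Apply a solver step to the freed entries only; fixed entries are untouched."""
    out = np.array(intrinsics, dtype=float)
    out[mask] += step[mask]
    return out


if __name__ == "__main__":
    for it, freed in staged_schedule():
        print(it, freed, free_mask(freed).astype(int))
```

In a real bundle adjustment the same effect would normally come from holding the fixed parameters constant in the solver rather than from masking a step. The schedule itself is the part this sketch is meant to make explicit.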

| Method | IBA in [33] | The Proposed Method |
|---|---|---|
| Applied stage | IBA is applied in local BA. | IBA is applied in global BA. |
| Initial parameters | The initial parameters input to the optimization are the results of local BA and the most recent GNSS location. | The initial parameters input to the optimization are the results of weighted GNSS-constrained BA and all GNSS locations. |
| Camera intrinsic parameters | Kept fixed | Freed |

| Test Site | Shape | Camera Model | Mean X (m) | Mean Y (m) | Mean Z (m) | SD X (m) | SD Y (m) | SD Z (m) | RMSE X (m) | RMSE Y (m) | RMSE Z (m) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Test Site 1 | Rectangle | Brown | 0.004 | −0.001 | 0.174 | 0.011 | 0.036 | 0.037 | 0.012 | 0.036 | 0.178 |
| | | Poly7 | 0.008 | 0.018 | 2.456 | 0.018 | 0.050 | 0.032 | 0.020 | 0.053 | 2.456 |
| | | Legendre | −0.006 | −0.002 | 0.829 | 0.026 | 0.082 | 0.032 | 0.027 | 0.082 | 0.830 |
| | | Fourier | 0.001 | 0.000 | 0.755 | 0.013 | 0.054 | 0.032 | 0.013 | 0.054 | 0.755 |
| | | Jacobi–Four | −0.001 | 0.003 | 0.481 | 0.020 | 0.040 | 0.032 | 0.020 | 0.040 | 0.482 |
| | S-Shaped | Brown | 0.011 | 0.002 | −0.348 | 0.014 | 0.018 | 0.022 | 0.018 | 0.018 | 0.349 |
| | | Poly7 | 0.004 | 0.001 | 0.084 | 0.012 | 0.028 | 0.025 | 0.012 | 0.028 | 0.088 |
| | | Legendre | 0.003 | −0.000 | −0.061 | 0.012 | 0.030 | 0.026 | 0.012 | 0.030 | 0.066 |
| | | Fourier | 0.010 | 0.007 | 0.054 | 0.013 | 0.013 | 0.018 | 0.016 | 0.015 | 0.057 |
| | | Jacobi–Four | 0.019 | 0.007 | 0.031 | 0.020 | 0.014 | 0.022 | 0.028 | 0.015 | 0.038 |
| Test Site 2 | Rectangle | Brown | −0.018 | 0.028 | 0.753 | 0.030 | 0.025 | 0.036 | 0.035 | 0.037 | 0.754 |
| | | Poly7 | 0.001 | 0.014 | 0.240 | 0.022 | 0.032 | 0.028 | 0.022 | 0.035 | 0.242 |
| | | Legendre | −0.018 | 0.027 | 0.223 | 0.033 | 0.027 | 0.033 | 0.038 | 0.038 | 0.226 |
| | | Fourier | 0.000 | 0.004 | 0.208 | 0.022 | 0.035 | 0.028 | 0.022 | 0.035 | 0.210 |
| | | Jacobi–Four | −0.011 | 0.024 | 0.104 | 0.023 | 0.025 | 0.029 | 0.026 | 0.035 | 0.108 |
| | S-Shaped | Brown | 0.016 | −0.006 | −1.259 | 0.012 | 0.012 | 0.041 | 0.020 | 0.013 | 1.260 |
| | | Poly7 | 0.004 | 0.003 | 0.058 | 0.016 | 0.012 | 0.018 | 0.017 | 0.012 | 0.061 |
| | | Legendre | 0.003 | 0.004 | 0.125 | 0.017 | 0.012 | 0.018 | 0.017 | 0.013 | 0.127 |
| | | Fourier | 0.005 | 0.005 | 0.098 | 0.014 | 0.012 | 0.015 | 0.013 | 0.013 | 0.099 |
| | | Jacobi–Four | 0.022 | 0.003 | −0.724 | 0.016 | 0.012 | 0.035 | 0.027 | 0.013 | 0.725 |
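
In these accuracy tables the Mean, SD and RMSE columns are linked. With the population SD, RMSE² = Mean² + SD² per axis. A large Mean Z, which is a systematic vertical offset, therefore dominates RMSE Z even when SD Z is only a few centimetres. For Test Site 1, Rectangle, Brown the values are Mean Z 0.174 m, SD Z 0.037 m and RMSE Z 0.178 m. The sketch below computes the three statistics from check-point residuals. That the paper uses the population SD (ddof=0) is an assumption, the function name is hypothetical, and the residuals are synthetic.

```python
import numpy as np


def checkpoint_stats(residuals):
    """Per-axis Mean, SD and RMSE of check-point residuals.

    residuals: array of shape (n_points, 3) with X, Y, Z differences in metres.
    SD is the population standard deviation (ddof=0), an assumption.
    """
    r = np.asarray(residuals, dtype=float)
    mean = r.mean(axis=0)
    sd = r.std(axis=0)                      # ddof=0
    rmse = np.sqrt((r ** 2).mean(axis=0))
    return mean, sd, rmse


# Synthetic residuals with a systematic Z offset (not data from the paper).
rng = np.random.default_rng(1)
res = rng.normal(loc=[0.0, 0.0, 0.17], scale=[0.01, 0.04, 0.04], size=(30, 3))
mean, sd, rmse = checkpoint_stats(res)
print("Mean", mean.round(3), "SD", sd.round(3), "RMSE", rmse.round(3))

# Cross-check one table row (Test Site 1, Rectangle, Brown, Z):
# sqrt(0.174**2 + 0.037**2) is about 0.1779, consistent with the reported 0.178.
print(round(float(np.hypot(0.174, 0.037)), 3))
```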

| Test Site | Shape | Method | Mean X (m) | Mean Y (m) | Mean Z (m) | SD X (m) | SD Y (m) | SD Z (m) | RMSE X (m) | RMSE Y (m) | RMSE Z (m) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Test Site 1 | Rectangle | ColMap | 0.006 | −0.007 | 0.574 | 0.015 | 0.050 | 0.036 | 0.016 | 0.050 | 0.575 |
| | | Ours | 0.004 | −0.001 | 0.174 | 0.011 | 0.036 | 0.037 | 0.012 | 0.036 | 0.178 |
| | S-Shaped | ColMap | 0.107 | 0.103 | 0.055 | 0.040 | 0.320 | 0.404 | 0.114 | 0.337 | 0.407 |
| | | Ours | 0.011 | 0.002 | −0.348 | 0.014 | 0.018 | 0.022 | 0.018 | 0.018 | 0.349 |
| Test Site 2 | Rectangle | ColMap | −0.038 | 0.033 | 0.239 | 0.055 | 0.026 | 0.091 | 0.067 | 0.042 | 0.256 |
| | | Ours | −0.018 | 0.028 | 0.753 | 0.030 | 0.025 | 0.036 | 0.035 | 0.037 | 0.754 |
| | S-Shaped | ColMap | 0.012 | −0.005 | −1.380 | 0.018 | 0.011 | 0.019 | 0.021 | 0.012 | 1.381 |
| | | Ours | 0.016 | −0.006 | −1.259 | 0.012 | 0.012 | 0.041 | 0.020 | 0.013 | 1.260 |

| Test Site | Shape | Software | Mean X (m) | Mean Y (m) | Mean Z (m) | SD X (m) | SD Y (m) | SD Z (m) | RMSE X (m) | RMSE Y (m) | RMSE Z (m) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Test site 1 | Rectangle | MicMac | −0.007 | −0.023 | 0.301 | 0.017 | 0.125 | 0.095 | 0.019 | 0.127 | 0.315 |
| | | Pix4d | −0.012 | −0.024 | −1.266 | 0.020 | 0.066 | 0.088 | 0.024 | 0.070 | 1.269 |
| | | Ours | −0.001 | 0.003 | 0.481 | 0.020 | 0.040 | 0.032 | 0.020 | 0.040 | 0.482 |
| | S-shaped | MicMac | −0.029 | −0.050 | −0.075 | 0.013 | 0.155 | 0.157 | 0.031 | 0.163 | 0.174 |
| | | Pix4d | 0.021 | 0.013 | 0.360 | 0.017 | 0.016 | 0.029 | 0.027 | 0.021 | 0.362 |
| | | Ours | 0.019 | 0.007 | 0.031 | 0.020 | 0.014 | 0.022 | 0.028 | 0.015 | 0.038 |
| Test site 2 | Rectangle | MicMac | −0.016 | 0.047 | 0.716 | 0.037 | 0.043 | 0.056 | 0.041 | 0.064 | 0.718 |
| | | Pix4d | 0.008 | −0.013 | −0.847 | 0.025 | 0.048 | 0.037 | 0.026 | 0.050 | 0.848 |
| | | Ours | −0.011 | 0.024 | 0.104 | 0.023 | 0.025 | 0.029 | 0.026 | 0.035 | 0.108 |
| | S-shaped | MicMac | 0.056 | −0.023 | −1.425 | 0.076 | 0.021 | 0.108 | 0.095 | 0.031 | 1.429 |
| | | Pix4d | 0.011 | 0.005 | −0.111 | 0.013 | 0.023 | 0.025 | 0.018 | 0.023 | 0.113 |
| | | Ours | 0.022 | 0.003 | −0.724 | 0.016 | 0.012 | 0.035 | 0.027 | 0.013 | 0.725 |

| Test Site | Shape | Method | Mean X (m) | Mean Y (m) | Mean Z (m) | SD X (m) | SD Y (m) | SD Z (m) | RMSE X (m) | RMSE Y (m) | RMSE Z (m) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Test site 1 | Rectangle | Brown | −0.005 | 0.003 | 0.016 | 0.011 | 0.036 | 0.040 | 0.012 | 0.036 | 0.043 |
| | | Jacobi–Four | 0.000 | 0.000 | 0.016 | 0.021 | 0.041 | 0.038 | 0.021 | 0.041 | 0.042 |
| | | MicMac | 0.020 | −0.081 | 0.169 | 0.019 | 0.200 | 0.119 | 0.027 | 0.216 | 0.207 |
| | | Pix4d | 0.018 | −0.019 | 0.018 | 0.020 | 0.073 | 0.083 | 0.027 | 0.076 | 0.085 |
| | S-shaped | Brown | −0.011 | −0.001 | −0.014 | 0.014 | 0.018 | 0.022 | 0.018 | 0.018 | 0.026 |
| | | Jacobi–Four | −0.019 | −0.004 | −0.024 | 0.017 | 0.016 | 0.019 | 0.026 | 0.016 | 0.030 |
| | | MicMac | 0.017 | −0.029 | 0.164 | 0.017 | 0.044 | 0.142 | 0.024 | 0.053 | 0.217 |
| | | Pix4d | 0.020 | 0.013 | 0.034 | 0.019 | 0.017 | 0.027 | 0.027 | 0.022 | 0.043 |
| Test site 2 | Rectangle | Brown | 0.018 | −0.027 | 0.005 | 0.030 | 0.026 | 0.029 | 0.035 | 0.038 | 0.029 |
| | | Jacobi–Four | 0.012 | −0.024 | 0.003 | 0.024 | 0.026 | 0.028 | 0.027 | 0.035 | 0.028 |
| | | MicMac | 0.154 | −0.071 | 0.016 | 0.214 | 0.067 | 0.105 | 0.264 | 0.098 | 0.106 |
| | | Pix4d | 0.005 | −0.010 | −0.023 | 0.023 | 0.048 | 0.033 | 0.024 | 0.049 | 0.040 |
| | S-shaped | Brown | −0.018 | 0.007 | 0.024 | 0.014 | 0.013 | 0.017 | 0.023 | 0.015 | 0.029 |
| | | Jacobi–Four | −0.023 | −0.002 | 0.005 | 0.018 | 0.013 | 0.019 | 0.029 | 0.013 | 0.019 |
| | | MicMac | −0.283 | 0.057 | 0.022 | 0.381 | 0.053 | 0.190 | 0.475 | 0.078 | 0.191 |
| | | Pix4d | 0.012 | 0.017 | −0.017 | 0.013 | 0.021 | 0.024 | 0.017 | 0.027 | 0.030 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, W.; Jiang, S.; Jiang, W. Camera Self-Calibration with GNSS Constrained Bundle Adjustment for Weakly Structured Long Corridor UAV Images. Remote Sens. 2021, 13, 4222. https://doi.org/10.3390/rs13214222

Huang W, Jiang S, Jiang W. Camera Self-Calibration with GNSS Constrained Bundle Adjustment for Weakly Structured Long Corridor UAV Images. Remote Sensing. 2021; 13(21):4222. https://doi.org/10.3390/rs13214222

Chicago/Turabian Style: Huang, Wei, San Jiang, and Wanshou Jiang. 2021. "Camera Self-Calibration with GNSS Constrained Bundle Adjustment for Weakly Structured Long Corridor UAV Images" Remote Sensing 13, no. 21: 4222. https://doi.org/10.3390/rs13214222

APA Style: Huang, W., Jiang, S., & Jiang, W. (2021). Camera Self-Calibration with GNSS Constrained Bundle Adjustment for Weakly Structured Long Corridor UAV Images. Remote Sensing, 13(21), 4222. https://doi.org/10.3390/rs13214222