1. Introduction

Mangroves are recognized as unique forms of vegetation in subtropical and tropical coastal zones in 118 countries and territories [1]. Mangrove vegetation provides many benefits for humans and surrounding ecosystems, including carbon storage [2], coastal protection [3], breeding grounds [4], biodiversity conservation [4], and commercial resources [5]. Research revealed that 35% and 2.1% of the global distribution of mangroves vanished during 1980–2000 [6] and 2000–2016 [7], respectively; in addition, the annual rate of mangrove loss was 0.26%–0.66% from 2000 to 2012 [8]. Because mangrove wetlands are among the few ecosystems with a large capacity for carbon storage, their mapping is essential.

The causes of mangrove loss can be both natural and anthropogenic. Human activities such as aquaculture, agriculture, hydrological pollution, port development, timber extraction, and urban development are among the anthropogenic causes of mangrove forest loss [9,10,11]. Extreme climate events [10], sea-level rise [12], and hurricanes [13] are among the natural causes. A principal factor behind biodiversity loss is ecosystem degradation [14]. Degradation can be defined as a decrease in ecosystem composition, the provision of ecosystem services, productivity, and vegetation canopy cover, any of which can fundamentally alter an ecosystem [15,16,17,18]. Hurricanes are among the natural events that have substantially contributed to mangrove degradation [13,19]. In September 2017, southwest Florida was hit by one such storm, Hurricane Irma, a Category 3 hurricane [20]. Several studies have reported on the Hurricane Irma–induced mangrove degradation in southwest Florida [20,21,22].

The mapping of mangrove degradation caused by Hurricane Irma is essential for understanding the status of mangroves in the affected area. The greatest challenge of mangrove mapping is dealing with the damaged condition of mangrove forests and their wide distribution within a location. Remote sensing satellite imagery is advantageous because it offers a large observation scale and time-series data. Thus, it has been widely applied to mangrove mapping using traditional extraction methods or machine learning classification methods such as pixel-based and object-based classification. The large spatial coverage of remote sensing data accommodates the wide distribution of mangrove areas, so researchers can map mangroves without directly visiting every site. Remote sensing data also provide multispectral bands that carry additional information about the characteristics of mangrove objects. Combining machine learning or deep learning with remote sensing data can increase effectiveness and reduce cost compared with manual digitization techniques.

In recent years, several algorithms have been proposed for mangrove mapping. For example, Kamal et al. used an object-based algorithm for multiscale mangrove composition mapping and achieved overall accuracies of around 82–94% [23]. Diniz et al. used the random forest (RF) algorithm for mangrove mapping in the Brazilian coastal zone over a period of three decades and achieved overall accuracies of around 80–87% [24]. Moreover, Mondal et al. evaluated the accuracy of the RF and classification and regression tree (CART) algorithms for mangrove mapping in South Africa and achieved overall accuracies of 93.44% and 92.18%, respectively [25]. Chen also used the RF algorithm for mangrove mapping in Dongzhaigang, China, with Sentinel-2 imagery and achieved an overall accuracy of 90.47% [26]. Furthermore, some algorithms have been used for mangrove degradation mapping. For example, Lee et al. applied the RF algorithm with an ecological conceptual model for mapping mangrove degradation in Rakhine State, Myanmar (where degradation was mostly caused by anthropogenic disturbances) and Shark River in southwest Florida (where degradation was caused by a hurricane). The user’s accuracy rates of the model in classifying degraded mangroves in Myanmar and Shark River were 69.3% and 73.9%, respectively [22]. McCarthy et al. applied decision tree (DT), neural network (NN), and support vector machine (SVM) classifiers for mapping mangrove degradation in areas affected by Hurricane Irma by using high-resolution satellite imagery. They reported user’s accuracy rates for degraded mangroves of 62% and 56% for the DT classifier, 75% for the NN classifier, and 59% for the SVM classifier [20,21]. Although the above studies obtained acceptable user’s accuracy rates for mangrove degradation mapping with specific algorithms, there is room to improve the accuracy with more suitable techniques.

Recently, deep learning (DL) has been extensively applied to remote sensing satellite imagery [27,28,29]. As an important derivative of machine learning with multiple processing layers, it can increase model performance and precision [30]. There are three types of DL algorithms for assessing satellite imagery: patch-based convolutional neural networks (CNNs), semantic segmentation or fully convolutional networks (FCNs), and object detection algorithms [27,29]. Patch-based CNN and FCN algorithms have been used for mangrove classification using satellite imagery [31,32,33]. Hosseiny et al. proposed a spatio–temporal patch-based CNN, called WetNet, for wetland classification by using an LSTM layer in one of their architectures [34]. In 2014, a study introduced the FCN, which uses an encoder for feature extraction and a decoder to restore the input resolution by using deconvolutional or upsampling layers [35]. FCN has been widely used for pixel-based classification [29]. A study reported that an FCN algorithm applied to Landsat imagery produced favorable results in mangrove mapping over large-scale areas [31]. FCN algorithms have been improved with advancements in CNN architectures (e.g., AlexNet [36], VGG [37], ResNet [38], and DenseNet [39]) [29]. State-of-the-art FCN algorithms (e.g., U-Net [40], LinkNet [41], Me-Net [32], FC-DenseNet [42], and Feature Pyramid Network (FPN) [43]) have recently drawn attention.

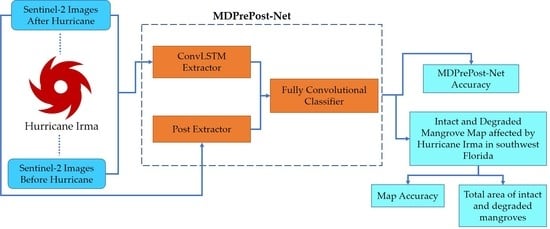

As mentioned above, hurricanes are among the natural hazards that cause mangrove degradation in affected areas. This motivated us to exploit the relationship between satellite images acquired before and after a hurricane to better identify the mangrove area degraded by Hurricane Irma. Thus, in this study, an end-to-end spatial–spectral–temporal FCN framework referred to as MDPrePost-Net is proposed to improve the classification accuracy of mangrove mapping. MDPrePost-Net consists of two main sub-models: a pre-post deep feature extractor and an FCN classifier. Many previous studies have successfully applied convolutional LSTM (ConvLSTM) and FCN in a range of applications, such as precipitation nowcasting [44], analyzing various types of input images for Side-Looking Airborne Radar (SLAR) imagery [45], and video frame sequences [46,47]. Therefore, in this study, the ConvLSTM is mainly used to extract spatial–spectral–temporal relationships from pre and post image data in the proposed MDPrePost-Net.

Sentinel-2 is a freely available passive remote sensing system that covers the entire planet and has 13 bands with different spatial resolutions (10, 20, and 60 m). Many studies have successfully applied machine learning and deep learning algorithms to Sentinel-2 imagery for mangrove mapping [25,26,32]. Therefore, the proposed MDPrePost-Net uses Sentinel-2 imagery acquired before and after the hurricane as the input data. Moreover, some spectral indices are also used in this study to improve model performance. In summary, this study has two aims: (1) to propose an end-to-end fully convolutional network, referred to as MDPrePost-Net, that considers satellite imagery before and after hurricanes for mangrove degradation classification; and (2) to use MDPrePost-Net to produce a mangrove degradation map of the southwest Florida coastal zone affected by Hurricane Irma.

The rest of this paper is organized as follows: the materials and methods are described in Section 2. The results for mangrove degradation mapping from MDPrePost-Net in southwest Florida are presented in Section 3. Our findings are discussed in Section 4. Finally, the conclusions are drawn in Section 5.

3. Results

This section presents the experimental results of MDPrePost-Net (Section 3.1). The MDPrePost-Net results are compared with existing FCN architectures in Section 3.2. We investigated the effects of adding the NDVI, CMRI, NDMI, and MMRI to the input data and found that the added spectral indices improved the accuracy (Section 3.3). Finally, we applied the trained MDPrePost-Net to the entire study area to produce a map of intact and degraded mangroves and calculated the map’s accuracy; the map shows good kappa, producer’s, user’s, and overall accuracy scores (Section 3.4).

3.1. MDPrePost-Net Results

MDPrePost-Net was trained with an early stopping scheme to avoid overfitting, meaning that training stopped automatically once the validation loss no longer improved. The accuracy and loss curves for the training and validation data are shown in Figure 7. The training and validation loss decreased steadily, and the training and validation IoU score and F1-Score increased steadily until training was completed (292 total epochs). This result suggests that MDPrePost-Net learned the relationship between the input and target data for the three-class mangrove degradation classification.
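The early stopping rule described above can be sketched in a few lines. This is a minimal illustration only: the `patience` and `min_delta` settings are hypothetical, since the values used for MDPrePost-Net are not given here, and the function works on a plain list of validation losses rather than a real training loop.

```python
def early_stopping_epoch(val_losses, patience=10, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    patience and min_delta are hypothetical settings, not values from the paper.
    Epochs are counted from 1.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            # Validation loss improved: remember it and reset the counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                # No improvement for `patience` consecutive epochs: stop.
                return epoch, best_epoch
    # The loss kept improving (or patience never ran out) until the last epoch.
    return len(val_losses), best_epoch
```

In practice the model weights from `best_epoch` would typically be restored after stopping, so that the evaluated model is the one with the lowest validation loss.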

The trained MDPrePost-Net was evaluated on a testing dataset that is separate from the training data. The IoU score, F1-Score, and class accuracy for the non-mangrove, intact mangrove, and degraded mangrove classes are shown in Table 8. Overall, the non-mangrove class achieved better accuracy than the intact and degraded mangrove classes, which indicates that the two mangrove classes are harder to classify. Nevertheless, the accuracy of the intact and degraded mangrove classes is also high (>95%), with IoU scores of 96.47% and 96.82%, respectively. These results indicate that MDPrePost-Net identifies the three classes in this study with high accuracy.
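For readers less familiar with the two segmentation metrics used above, the following sketch computes the per-class IoU and F1-Score from a pixel-level confusion matrix. The matrix layout (rows are true classes, columns are predicted classes) and the example counts in the usage note are assumptions for illustration and are not taken from Table 8.

```python
def per_class_iou_f1(confusion):
    """Per-class (IoU, F1) pairs from a square confusion matrix.

    confusion[i][j] = number of pixels of true class i predicted as class j
    (an assumed layout for this sketch).
    """
    n = len(confusion)
    scores = []
    for k in range(n):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                        # class k pixels that were missed
        fp = sum(confusion[i][k] for i in range(n)) - tp   # pixels wrongly labelled as k
        iou_den = tp + fp + fn
        f1_den = 2 * tp + fp + fn
        iou = tp / iou_den if iou_den else 0.0
        f1 = 2 * tp / f1_den if f1_den else 0.0
        scores.append((iou, f1))
    return scores
```

With made-up counts such as `[[90, 5, 5], [4, 80, 16], [6, 10, 84]]`, the first class has IoU = 90/110 and F1 = 0.9. For a single class the two metrics are linked by F1 = 2·IoU / (1 + IoU), which is why the F1-Score is never lower than the IoU for the same prediction.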

The effects of the amount of training data are tabulated in Table 9. We performed experiments using 25%, 50%, 75%, and 100% of the training data to demonstrate how the amount of input data affects the accuracy and IoU score. The overall accuracy and IoU scores increase gradually as the amount of input data increases. Relative to using all the training data, the mean IoU decreased by 9.11% when only 25% of the training data were used, and by 5.01% and 2.32% when 50% and 75% were used, respectively. This result indicates that the amount of input data affects the accuracy scores: the fewer input data used, the lower the accuracy.

To evaluate the effectiveness of each part of the pre-post deep feature extractor, ablation studies were conducted. We removed certain parts of the first sub-model of MDPrePost-Net to understand the contribution of each extractor part to the overall architecture, as measured by accuracy. The classification results after removing the ConvLSTM extractor or the post extractor from the proposed model are shown in Table 10. The average accuracy is the mean of the mean IoU, overall F1-Score, and overall accuracy. The results show that the ConvLSTM extractor is the most important and effective part of the spatial–spectral–temporal deep feature extraction sub-model. When the ConvLSTM extractor was removed from the network, the average accuracy decreased by 1.13% and the mean IoU decreased by 2.05%. This finding indicates that the spatial–spectral–temporal features extracted by the ConvLSTM part, with pre-post data as the input, are important for classifying mangrove degradation caused by Hurricane Irma.
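As a small worked example of the average accuracy defined above, the sketch below averages the three metrics. The input values are the mean IoU, F1-Score, and overall accuracy that the paper reports for the full model (97.70%, 98.83%, and 99.71%); the function name is hypothetical.

```python
def average_accuracy(mean_iou, overall_f1, overall_accuracy):
    """Mean of the three summary metrics, all given in percent."""
    return (mean_iou + overall_f1 + overall_accuracy) / 3.0


# Metrics reported in the paper for the full MDPrePost-Net.
full_model_average = average_accuracy(97.70, 98.83, 99.71)
```

Under this definition the full model's average accuracy comes to roughly 98.75%.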

The mean IoU decreased by 0.6% when the post extractor part was removed, and the IoU of the intact and degraded mangrove classes decreased by 0.85%–0.90%. Based on the accuracy metrics, the post extractor part is less influential than the ConvLSTM part. Nevertheless, adding the post extractor to the network increased the average accuracy and the IoU for each class. This result demonstrates the effectiveness of the two feature extractors in the proposed MDPrePost-Net.

3.2. Comparison with Existing Architecture

The classification result from the proposed model was compared with several well-known FCN architectures, namely FC-DenseNet [42], U-Net [40], LinkNet [41], and FPN [43]. We used the same input data and the same random seed to train the existing FCN architectures. Because these architectures cannot handle time-series data, we stacked the pre-post image data from $(2 \times R \times C \times D_{10})$ into $(R \times C \times D_{20})$ and fed the stacked data to them. A visual comparison of a small part of the MDPrePost-Net result and the results of the existing FCN architectures is presented in Figure 8. Based on the visual analysis, the proposed model achieved better classification than the existing FCN architectures. The classified map from MDPrePost-Net clearly distinguishes each class (non-mangrove, intact mangrove, and degraded mangrove), with only minor misclassification.
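The stacking step used for the baseline architectures can be illustrated with NumPy. This is a sketch under two assumptions that the text does not state: the temporal axis comes first (index 0 is the pre-hurricane image, index 1 the post-hurricane image), and the pre-image bands are placed before the post-image bands in the stacked result.

```python
import numpy as np


def stack_pre_post(pair):
    """Turn a (2, R, C, D) pre/post pair into a single (R, C, 2*D) image.

    Assumes pair[0] is the pre-hurricane image and pair[1] the post-hurricane
    image; the band order in the output (pre first, then post) is also an
    assumption of this sketch.
    """
    pair = np.asarray(pair)
    if pair.ndim != 4 or pair.shape[0] != 2:
        raise ValueError("expected an array of shape (2, R, C, D)")
    pre, post = pair[0], pair[1]
    # Concatenate along the band axis so a 2D FCN sees 2*D channels.
    return np.concatenate([pre, post], axis=-1)
```

For a pair with D = 10 bands per date, the output has 20 channels, which matches the change from $D_{10}$ to $D_{20}$ described above. The temporal ordering is then only implicit in the channel order, which is one plausible reason a dedicated temporal extractor could help.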

FC-DenseNet103 with a growth rate (K) of 32 and U-Net both produced good visual results, but some areas of the degraded mangrove class are misclassified in the top section. In the LinkNet results, the intact and degraded mangrove classes are better classified in the top section, but many areas are misclassified from the center to the bottom section. In the FPN results, the classified map is very general and misses many important details. In general, the existing FCN architectures can clearly distinguish non-mangrove and mangrove objects, but they misclassify more intact and degraded mangrove areas than MDPrePost-Net. This result indicates that the spatial–spectral–temporal features extracted by MDPrePost-Net are very important for distinguishing intact and degraded mangrove objects.

The detailed quantitative comparison, including IoU score, training time, and total parameters, is shown in Table 11. The proposed MDPrePost-Net outperforms the existing FCN architectures in terms of mean IoU and the IoU score for each class. The proposed model has more parameters and a longer training time than LinkNet and FPN, but this is acceptable given the higher accuracy and a reasonable total training time of about one hour. LinkNet has a very fast training time and few parameters because it was designed for real-time semantic segmentation.

In general, almost all the existing architectures achieved a high mean IoU of more than 90%; only FPN achieved a mean IoU lower than 90%. All the existing FCN architectures achieved a very high IoU score for the non-mangrove class (>98%), which is consistent with the visual analysis showing that they can clearly distinguish mangrove and non-mangrove objects. FC-DenseNet103 (K = 32) ranked second in terms of mean IoU, but its IoU for the intact mangrove class was still lower than 90%, and it had the longest training time and the largest number of parameters. The training time of U-Net is almost the same as that of the proposed MDPrePost-Net, but U-Net has IoU scores lower than 90% for the intact and degraded mangrove classes. Based on these results, MDPrePost-Net outperforms the compared FCN architectures in classifying mangrove degradation caused by Hurricane Irma using temporal satellite data.

3.3. Effects of Vegetation and Mangrove Indices on the Results

This section demonstrates the effect of the different total number of input bands in the MDPrePost-Net. In the first experiment, we used only true-color images or the blue, green, and red bands of Sentinel-2 as the input data. A true-color image is widely used for DL-based image classification. In the second experiment, we used true-color images with the NIR band (blue, green, red, NIR). In the third experiment, we used true-color images with the NIR and SWIR bands (blue, green, red, NIR, SWIR1, and SWIR2). The third experiment was conducted to study the effects of including the SWIR bands in the classification of intact and degraded mangroves because the SWIR band is sensitive to wet objects. The last experiment was conducted using the original input data to prove that the 10 input bands (including the NDVI, CMRI, NDMI, and MMRI) could improve the accuracy of the classification of mangroves and degraded mangroves.
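To make the 10-band input concrete, the sketch below derives the four indices from six reflectance bands and stacks everything into one array. The NDVI formula is standard. The CMRI, NDMI, and MMRI formulas are the definitions commonly cited in the mangrove literature and are assumed here, not confirmed by this section: CMRI = NDVI − NDWI, NDMI (read as a mangrove index, not a moisture index) = (SWIR2 − Green)/(SWIR2 + Green), and MMRI = (|MNDWI| − |NDVI|)/(|MNDWI| + |NDVI|). The dictionary keys and function names are hypothetical.

```python
import numpy as np


def normalized_difference(a, b):
    """(a - b) / (a + b), returning 0 where the denominator is 0."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = a + b
    out = np.zeros_like(denom)
    np.divide(a - b, denom, out=out, where=denom != 0)
    return out


def stack_bands_with_indices(bands):
    """Build an (R, C, 10) array from six (R, C) reflectance bands.

    bands: dict with keys 'blue', 'green', 'red', 'nir', 'swir1', 'swir2'
    (hypothetical names). Index formulas other than NDVI are assumptions.
    """
    green, red, nir = bands["green"], bands["red"], bands["nir"]
    swir1, swir2 = bands["swir1"], bands["swir2"]

    ndvi = normalized_difference(nir, red)
    ndwi = normalized_difference(green, nir)      # helper for CMRI
    mndwi = normalized_difference(green, swir1)   # helper for MMRI
    cmri = ndvi - ndwi                            # assumed definition
    ndmi = normalized_difference(swir2, green)    # assumed definition
    mmri = normalized_difference(np.abs(mndwi), np.abs(ndvi))  # assumed definition

    layers = [bands["blue"], green, red, nir, swir1, swir2, ndvi, cmri, ndmi, mmri]
    return np.stack([np.asarray(layer, dtype=float) for layer in layers], axis=-1)
```

The four experiments described above then correspond to feeding the first 3, 4, 6, or all 10 channels of this array to the network.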

Table 12 presents the accuracy assessment results (i.e., mean IoU, overall F1, OA, and average accuracy) for the three classes (non-mangroves, mangroves, and degraded mangroves) as well as the IoU result for each class. For the first experiment using RGB data, the mean IoU was 95.54%, the IoU value for the mangrove class was 93.13%, and the IoU value for the degraded mangrove class was 93.76%. This finding shows that the proposed MDPrePost-Net model has satisfactory accuracy for the true-color Sentinel-2 images. For the second and third experiments, the addition of the NIR, SWIR1, and SWIR2 bands improved the accuracy value. Specifically, the average accuracy increased by approximately 0.57%, the IoU value for the intact mangrove class increased by 1.69%, and the IoU value for the degraded mangrove class increased by approximately 1.37%. For the final experiment involving the 10 input bands, the results revealed that the added vegetation and mangrove indices considerably improved the classification accuracy.

3.4. Mangrove Degradation Map in Southwest Florida

The second goal of this study is to map intact/healthy and degraded mangrove forests along the southwest Florida coastal zone effectively and efficiently, because this coastal zone is long and wide. An effective, efficient, and accurate method is needed to assess the impact of Hurricane Irma on the mangrove forest area. The trained MDPrePost-Net was used to produce a map of non-mangrove, intact/healthy mangrove, and degraded mangrove areas along the southwest Florida coastal zone.

We used a confusion matrix to calculate the map accuracy based on 1500 reference point samples: 500 samples each for the non-mangrove, intact/healthy mangrove, and degraded mangrove classes (Table 13). The overall accuracy, user’s accuracy, producer’s accuracy, and kappa score were calculated from the confusion matrix. The overall accuracy is 0.9733. The user’s and producer’s accuracies are 0.998 and 0.978 for the non-mangrove class, 0.9680 and 0.9622 for the intact mangrove class, and 0.9540 and 0.9794 for the degraded mangrove class, respectively. The kappa score is 0.960. Based on these map accuracy results, MDPrePost-Net performed very well in mapping the intact and degraded mangroves affected by Hurricane Irma across the large study area.
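The four map accuracy measures follow directly from the confusion matrix, as the sketch below shows. The example matrix is not copied from Table 13. Its diagonal and its row and column totals were back-calculated from the accuracies reported above, assuming 500 samples per mapped class (the user's accuracies are exact multiples of 1/500), and its off-diagonal cells are just one of several fills consistent with those totals.

```python
def map_accuracy(matrix):
    """Overall accuracy, user's/producer's accuracies, and Cohen's kappa.

    matrix[i][j] = samples mapped as class i whose reference class is j
    (rows are map classes, columns are reference classes).
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    diag = [matrix[k][k] for k in range(n)]
    row_sums = [sum(row) for row in matrix]
    col_sums = [sum(matrix[i][k] for i in range(n)) for k in range(n)]

    overall = sum(diag) / total
    users = [diag[k] / row_sums[k] for k in range(n)]        # correct / mapped as k
    producers = [diag[k] / col_sums[k] for k in range(n)]    # correct / reference k
    # Agreement expected by chance, from the row and column marginals.
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (overall - expected) / (1 - expected)
    return overall, users, producers, kappa


# Illustrative matrix (non-mangrove, intact, degraded); off-diagonals are assumed.
example_matrix = [
    [499, 1, 0],
    [6, 484, 10],
    [5, 18, 477],
]
overall, users, producers, kappa = map_accuracy(example_matrix)
```

With this matrix the overall accuracy is 1460/1500 ≈ 0.9733 and the chance agreement is exactly 1/3, so kappa = (0.9733 − 0.3333)/(1 − 0.3333) = 0.960, in line with the reported values.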

The map of non-mangrove, intact mangrove, and degraded mangrove areas along the southwest Florida coastal zone is shown in Figure 9. Based on the map, almost the entire mangrove forest directly adjacent to the shoreline was degraded. The degraded mangrove areas include severely degraded and slightly degraded mangroves. The purple box on the map marks the zoomed-in areas, which show severely degraded mangroves. In these zoomed-in areas, our model clearly distinguishes intact/healthy mangrove, degraded mangrove, and non-mangrove areas. The map shows many degraded mangrove areas in the lower north and northwest of the study area. The map also reveals a spatial pattern: most of the intact mangrove areas lie behind the degraded mangrove areas, which suggests that the mangroves in front shielded them from Hurricane Irma. The mangrove areas bordering the land are not degraded. These results suggest that mangroves can protect the coastline from hurricanes.

4. Discussion

Ecosystem degradation is a principal factor behind biodiversity loss [14]. Mapping mangrove degradation is very important for understanding the status of mangroves because mangroves provide many benefits [2,3,4,5]. In September 2017, southwest Florida was hit by Hurricane Irma (a Category 3 hurricane), which degraded mangrove forests [20]. With advances in satellite technology and computer science, remote sensing imagery has been widely used for mangrove mapping with object-based classification [23], the RF algorithm [24,26], the CART algorithm [25], Capsules-U-Net [31], Me-Net [32], and convolutional neural networks [33]. Some previous studies applied machine learning algorithms to satellite imagery to map the mangrove degradation caused by Hurricane Irma in southwest Florida [20,21,22]. This study proposed a deep learning model for mangrove degradation mapping with Sentinel-2 data that considers the spatial–spectral–temporal relationship between images acquired before and after the hurricane, and used it to produce a map of intact and degraded mangrove areas affected by Hurricane Irma along southwest Florida.

In this study, we consider two kinds of satellite imagery for mangrove degradation mapping: pre-images, acquired before Hurricane Irma, and post-images, acquired after Hurricane Irma. Because the degraded mangrove areas in this study were affected by Hurricane Irma, we define the degraded mangrove class as areas that are mangrove in the pre-image and degraded in the post-image. The proposed MDPrePost-Net consists of two sub-models, a pre-post deep feature extractor and an FCN classifier. MDPrePost-Net captures the relationship between the pre- and post-hurricane images by using the ConvLSTM feature extractor in the deep feature extraction sub-model. Previous studies combined ConvLSTM with semantic segmentation for precipitation nowcasting [44], for extracting spatiotemporal relationships and classifying SLAR images [45], and for extracting spatiotemporal relationships in video sequence classification [46,47]. In this study, we used the ConvLSTM part to extract the spatiotemporal relationship between the images captured before the hurricane and those captured after it. The post extractor additionally considers the spatial–spectral information of the post-hurricane images, which is more strongly correlated with the mangrove areas degraded by Hurricane Irma.

Based on the classification result, our proposed model achieved good results in terms of accuracy metrics with an acceptable training time. The proposed model has a mean IoU score, F1-Score, and overall accuracy of 97.70%, 98.83%, and 99.71%, respectively. Its total training time is about one hour (01:17). We compared our MDPrePost-Net results with those of existing FCN architectures (FC-DenseNet [42], U-Net [40], LinkNet [41], and FPN [43]), all trained on the same input data. All the existing architectures can clearly distinguish non-mangrove objects, with IoU scores of more than 90%. However, they produce more misclassified results for the intact and degraded mangrove classes, and their average IoU scores for both classes are lower than 90%. This is because the existing FCN architectures are not specifically designed to capture the spatial–spectral–temporal relationship of pre-post images. Our proposed model considers this relationship and thereby increases the IoU scores of intact and degraded mangrove objects.

A previous study revealed that the choice of input bands affects the accuracy of mangrove classification [32]. We considered the effects of vegetation and mangrove indices on the accuracy because mangroves are a unique form of vegetation with distinctive spectral characteristics, such as wet vegetation features. The SWIR band is useful for distinguishing wet objects, and some mangrove indices are designed according to the spectral characteristics of mangroves. Vegetation and mangrove indices can be derived from Sentinel-2 data. The CMRI, NDMI, and MMRI are indices with a good capability to distinguish mangrove objects [24,51,52]. In our original input data for this study, we used 10 input bands (blue, green, red, NIR, SWIR1, SWIR2, NDVI, CMRI, NDMI, and MMRI), which include vegetation and mangrove indices. Our results in Section 3.3 reveal that the mean IoU with 10 input bands increased by approximately 2.16% relative to that of the first experiment (R, G, B). The improvement was most evident in the IoU values for the intact and degraded mangrove classes: the IoU value for the intact mangrove class increased by nearly 3.34%, and that for the degraded mangrove class increased by approximately 3.06%. Based on this result, the addition of vegetation and mangrove indices increased the accuracy.

We used the proposed MDPrePost-Net with 10 input bands to produce a map of intact and degraded mangroves affected by Hurricane Irma along the southwest Florida coastal zone. The confusion matrix was used to calculate the map accuracy based on reference samples. The kappa score of our proposed model is 0.960, which falls in the "almost perfect agreement" category of the Landis and Koch classification [58].
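The Landis and Koch interpretation referred to above can be written as a simple lookup. The sketch below uses the standard published bands (below 0 poor, 0.00–0.20 slight, 0.21–0.40 fair, 0.41–0.60 moderate, 0.61–0.80 substantial, 0.81–1.00 almost perfect); the function name is hypothetical.

```python
def landis_koch_category(kappa):
    """Map a kappa score to its Landis and Koch agreement category."""
    if kappa < 0.0:
        return "poor"
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"
```

For the reported kappa of 0.960, `landis_koch_category(0.960)` returns `"almost perfect"`.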

Previous studies of mangrove degradation caused by Hurricane Irma in some areas of southwest Florida used WorldView-2 and Landsat data, which differ from the input data in this research. An ecological conceptual model with annual metrics before and after Hurricane Irma was used to calculate the annual NDVI mean, NDVI standard deviation, Normalized Difference Moisture Index (NorDMI) mean, NorDMI standard deviation, and NDWI mean from Landsat data, and the RF algorithm was then applied for intact and degraded mangrove classification [22]. Time-series WorldView-2 data (2010, 2016, 2017, and 2018) with the decision tree (DT) algorithm were used to classify damaged mangrove forests [20], while WorldView-2 data (2018) were used for mapping the damage inflicted by Hurricane Irma with SVM, DT, and neural network classifiers [21]. Our proposed method considers the spatial–spectral–temporal relationship of pre-post Sentinel-2 imagery by using a pre-post deep feature extractor.

The map accuracy of our proposed model is compared with other existing results in Table 14. Lee et al. conducted mangrove degradation research in the small area of Shark River in southwest Florida by using the RF algorithm and Landsat image data and achieved an OA of 79.1% [22]. McCarthy et al. applied several machine learning algorithms (DT, NN, and SVM) to mangrove degradation research in Rookery Bay NERR in southwest Florida using WorldView-2 imagery and achieved an OA of around 82%–85% [20,21]. The non-mangrove class values for the DT, NN, and SVM results in Table 14 were obtained by averaging the soil, upland, and water classes. Our MDPrePost-Net results reveal that the pre-post deep feature extractor significantly improved the map accuracy of the intact and degraded mangrove classes.

A total of 22% of mangrove areas were lost from spring 2016 to fall 2018 in Rookery Bay NERR, and most were affected by Hurricane Irma [20], while 97.4% of mangrove areas were degraded by Hurricane Irma in the small Shark River case-study region [22]. These two areas are part of our study area. In our study, the total mangrove area, including intact/healthy and degraded mangroves, is 153,933.36 ha, which is 6.96% less than the extent in the Global Mangrove Forest dataset for 2000 reported by Giri et al. [1]. Based on our result, 26.64% (41,008.66 ha) of the mangrove area was degraded by Hurricane Irma, and the other 73.36% (112,924.70 ha) is still intact. Our result indicates that extreme climate events [10] and hurricanes [13] can degrade mangrove forests.
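The area shares quoted above can be checked with a few lines of arithmetic. The sketch below uses only the two areas reported in the text; the function name is hypothetical.

```python
def area_shares(degraded_ha, intact_ha):
    """Return (total_ha, degraded_percent, intact_percent)."""
    total_ha = degraded_ha + intact_ha
    degraded_pct = 100.0 * degraded_ha / total_ha
    intact_pct = 100.0 * intact_ha / total_ha
    return total_ha, degraded_pct, intact_pct


# Areas reported in the paper, in hectares.
total_ha, degraded_pct, intact_pct = area_shares(41008.66, 112924.70)
```

The two areas sum to the reported total of 153,933.36 ha, and the shares round to 26.64% and 73.36%, so the reported figures are internally consistent.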

Our estimates of the total intact and degraded mangrove areas are affected by cloud cover in some of the Sentinel-2 data that we used. The acquisition time of the Sentinel-2 data also affects the estimated total and degraded mangrove areas because degraded mangroves can recover over time. McCarthy et al. reported that a total of 1.73 km² of mangroves had recovered in their study area 6 months after Hurricane Irma [20]. The latest Sentinel-2 data used in this research were acquired in March 2018, 6 months after Hurricane Irma. These data were selected based on the percentage of cloud cover to minimize discrepancies in the results. The recovery time of mangroves degraded by hurricanes deserves more attention in future research.

5. Conclusions

In September 2017, Hurricane Irma hit the coastal zone of southwest Florida and caused mangrove degradation. This study proposed an end-to-end deep learning network, referred to as MDPrePost-Net, that exploits the spatial–spectral–temporal relationship between images acquired before and after the hurricane. The proposed model consists of two sub-models, a pre-post feature extractor and an FCN classifier. We used pre and post Sentinel-2 images to extract the spatial–spectral–temporal relationship of intact and degraded mangroves affected by Hurricane Irma with the ConvLSTM feature extractor, and used the post Sentinel-2 image to extract spatial–spectral features of the mangrove condition after the hurricane with a post feature extractor. The extracted features were then fed into an FCN classifier. A total of 10 input bands were used in this study (blue, green, red, NIR, SWIR-1, SWIR-2, NDVI, CMRI, NDMI, and MMRI). Our results reveal that adding vegetation and mangrove indices improves the accuracy of the intact and degraded mangrove classes.

The experimental results demonstrate that the proposed model achieved better accuracy metrics than the existing FCN architectures (FC-DenseNet, U-Net, LinkNet, and FPN). The proposed model also has an acceptable training time (01:17, or about 16 seconds per epoch). In addition, the proposed model achieved a mean IoU, F1-Score, and OA of 97.70%, 98.83%, and 99.71%, respectively. We used the proposed model to produce a map of intact/healthy and degraded mangroves affected by Hurricane Irma in 2017 along the southwest Florida coastal zone. The overall map accuracy for the non-mangrove, intact/healthy mangrove, and degraded mangrove classes is 0.9733, with a kappa score of 0.960. This study found that 26.64% (41,008.66 ha) of the mangrove area was degraded by Hurricane Irma in September 2017, and the other 73.36% (112,924.70 ha) is still intact.