Advanced Fully Convolutional Networks for Agricultural Field Boundary Detection

Abstract

1. Introduction

2. Proposed Method

2.1. U-Net Architecture

2.2. Residual U-Net (ResU-Net) Architecture

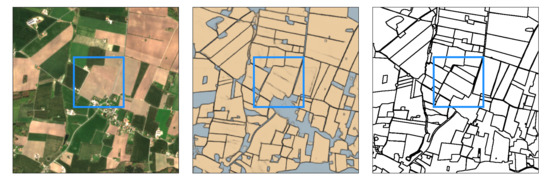

2.3. Study Area and Dataset

2.4. Network Training

- A set of 10 neural networks is randomly generated from a set of allowed hyperparameters (unit level, conv layer, input size, and filter size for each layer);

- Each network is trained for 1000 epochs and its performance on the validation set is evaluated. This large number of epochs was chosen so that each network can reach its best possible performance; to prevent overfitting, the best-performing set of weights is saved for each network;

- The worst-performing networks are discarded, while the better-performing ones are paired up as "parents". "Child" networks are then created that randomly inherit hyperparameter values from their parents to form the next generation of networks, while the worst-performing values "die out";

- There is also a small probability of random mutation in the child networks: each hyperparameter value has a small chance of changing at random;

- This process is repeated for 10 generations, leaving only the best-performing network architectures.
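The steps above can be sketched as a small genetic search. This is a minimal illustration, not the authors' implementation: the allowed values in `SEARCH_SPACE`, the number of survivors (`keep`), the mutation rate, and the `toy_fitness` function are all assumptions made for the example. In the procedure described above, the fitness of a network would be its best validation score over the 1000 training epochs.

```python
import random

# Hypothetical search space: the text names the hyperparameters,
# but the allowed values listed here are assumptions.
SEARCH_SPACE = {
    "unit_level": [8, 10, 12],
    "conv_layers": [15, 19, 23],
    "input_size": [256, 512, 1024],
    "base_filters": [8, 16, 32],
}


def random_network(rng):
    """Draw one network description at random from the search space."""
    return {name: rng.choice(values) for name, values in SEARCH_SPACE.items()}


def crossover(parent_a, parent_b, rng):
    """Child inherits each hyperparameter from one of its two parents."""
    return {name: rng.choice([parent_a[name], parent_b[name]]) for name in SEARCH_SPACE}


def mutate(network, rng, rate=0.05):
    """With a small probability (assumed 5%), resample a hyperparameter."""
    return {
        name: rng.choice(SEARCH_SPACE[name]) if rng.random() < rate else value
        for name, value in network.items()
    }


def evolve(fitness, population_size=10, generations=10, keep=4, seed=0):
    """Keep the `keep` best networks each generation and breed the rest."""
    rng = random.Random(seed)
    population = [random_network(rng) for _ in range(population_size)]
    for _ in range(generations):
        # In practice fitness means a full training run, so cache the scores.
        ranked = sorted(population, key=fitness, reverse=True)
        parents = ranked[:keep]  # the worst-performing networks are discarded
        children = []
        while len(parents) + len(children) < population_size:
            parent_a, parent_b = rng.sample(parents, 2)
            children.append(mutate(crossover(parent_a, parent_b, rng), rng))
        population = parents + children
    return max(population, key=fitness)


def toy_fitness(network):
    """Stand-in for 'train and score on the validation set' (illustrative only)."""
    return -abs(network["input_size"] - 512) - abs(network["unit_level"] - 10)


best = evolve(toy_fitness)
print(best)
```

Because the surviving parents are carried over unchanged, the best score in the population never decreases from one generation to the next in this sketch.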

2.5. Accuracy Assessment

3. Experimental Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability

Acknowledgments

Conflicts of Interest


| Model Name (Unit Level, Conv Layer, Input Size) | F1 Score | Jaccard Coefficient |
|---|---|---|
| ResU-Net (8, 15, 256 × 256) | 0.87 | 0.74 |
| ResU-Net (10, 19, 512 × 512) | 0.94 | 0.87 |
| ResU-Net (12, 23, 1024 × 1024) | 0.91 | 0.79 |
| U-Net (8, 15, 256 × 256) | 0.89 | 0.76 |
| U-Net (10, 19, 512 × 512) | 0.92 | 0.83 |
| U-Net (12, 23, 1024 × 1024) | 0.83 | 0.72 |
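For readers unfamiliar with the two metrics in the table, the sketch below computes them from their standard definitions for a single class treated as a binary mask: F1 = 2TP / (2TP + FP + FN) and Jaccard = TP / (TP + FP + FN). How the reported scores were aggregated over classes and images is not stated in this section, so the function name `f1_and_jaccard` and the tiny example masks are illustrative assumptions only.

```python
def f1_and_jaccard(pred, truth):
    """F1 score and Jaccard coefficient for two binary masks (nested lists of 0/1)."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1  # boundary pixel correctly detected
            elif p and not t:
                fp += 1  # false alarm
            elif t and not p:
                fn += 1  # missed boundary pixel
    denom = tp + fp + fn
    if denom == 0:
        # Both masks are empty: treat as perfect agreement (a convention, assumed here).
        return 1.0, 1.0
    f1 = 2 * tp / (2 * tp + fp + fn)
    jaccard = tp / denom
    return f1, jaccard


# Illustrative 2 x 3 masks: 2 true positives, 1 false positive, 1 false negative.
pred = [[1, 1, 0],
        [0, 1, 0]]
truth = [[1, 0, 0],
         [0, 1, 1]]
f1, jaccard = f1_and_jaccard(pred, truth)
print(round(f1, 3), jaccard)  # 0.667 0.5
```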

|  | Unit Level | Conv Layer | Stride | Output Size |
|---|---|---|---|---|
| Input |  |  |  | 512 × 512 × 4 |
| Encoding | Level 1 | Conv 1 | 1 | 512 × 512 × 16 |
| Encoding | Level 1 | Conv 2 | 1 | 512 × 512 × 16 |
| Encoding | Level 2 | Conv 3 | 2 | 256 × 256 × 32 |
| Encoding | Level 2 | Conv 4 | 1 | 256 × 256 × 32 |
| Encoding | Level 3 | Conv 5 | 2 | 128 × 128 × 64 |
| Encoding | Level 3 | Conv 6 | 1 | 128 × 128 × 64 |
| Encoding | Level 4 | Conv 7 | 2 | 64 × 64 × 128 |
| Encoding | Level 4 | Conv 8 | 1 | 64 × 64 × 128 |
| Bridge | Level 5 | Conv 9 | 2 | 32 × 32 × 256 |
| Bridge | Level 5 | Conv 10 | 1 | 32 × 32 × 256 |
| Decoding | Level 6 | Conv 11 | 1 | 64 × 64 × 128 |
| Decoding | Level 6 | Conv 12 | 1 | 64 × 64 × 128 |
| Decoding | Level 7 | Conv 13 | 1 | 128 × 128 × 64 |
| Decoding | Level 7 | Conv 14 | 1 | 128 × 128 × 64 |
| Decoding | Level 8 | Conv 15 | 1 | 256 × 256 × 32 |
| Decoding | Level 8 | Conv 16 | 1 | 256 × 256 × 32 |
| Decoding | Level 9 | Conv 17 | 1 | 512 × 512 × 16 |
| Decoding | Level 9 | Conv 18 | 1 | 512 × 512 × 16 |
| Output | Level 10 | Conv 19 | 1 | 512 × 512 × 3 |
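The output sizes in this table follow a simple pattern: each stride-2 convolution halves the spatial size while the number of filters doubles, and each decoding level doubles the spatial size while the number of filters halves. The sketch below regenerates the rows from that rule. The function `resunet_shapes` and its parameters are hypothetical names for this example, and it assumes that upsampling by a factor of 2 happens before the first convolution of each decoding level, which the table implies but does not state.

```python
def resunet_shapes(input_size=512, base_filters=16, depth=4, out_channels=3):
    """Return (level, conv, stride, (height, width, channels)) rows."""
    rows = []
    size, filters = input_size, base_filters
    level, conv = 1, 1

    def add_level(first_stride):
        # Each level holds two convolutions; only the first may downsample.
        nonlocal level, conv
        for stride in (first_stride, 1):
            rows.append((f"Level {level}", f"Conv {conv}", stride, (size, size, filters)))
            conv += 1
        level += 1

    add_level(1)  # Level 1 keeps the input resolution
    for _ in range(depth):  # remaining encoding levels plus the bridge
        size //= 2
        filters *= 2
        add_level(2)
    for _ in range(depth):  # decoding levels (assumed: upsample x2, then convolve)
        size *= 2
        filters //= 2
        add_level(1)
    # Final convolution maps to the number of output classes.
    rows.append((f"Level {level}", f"Conv {conv}", 1, (size, size, out_channels)))
    return rows


rows = resunet_shapes()
for row in rows:
    print(row)
```

With the default arguments this yields 19 convolutions across 10 levels, matching the (10, 19, 512 × 512) configuration listed in the table.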

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Taravat, A.; Wagner, M.P.; Bonifacio, R.; Petit, D. Advanced Fully Convolutional Networks for Agricultural Field Boundary Detection. Remote Sens. 2021, 13, 722. https://doi.org/10.3390/rs13040722