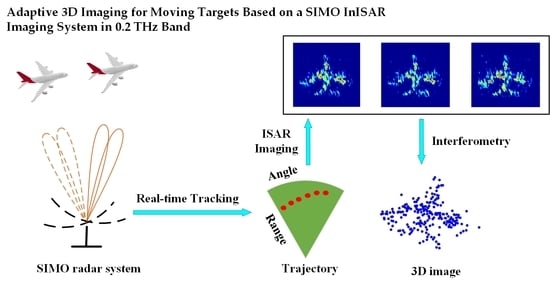

Adaptive 3D Imaging for Moving Targets Based on a SIMO InISAR Imaging System in 0.2 THz Band

Abstract

1. Introduction

2. Architecture of the Terahertz SIMO InISAR Imaging System

2.1. The Architecture of the SIMO Antenna Array

2.2. FMCW Signal Model and De-chirp Processing

3. Adaptive Tracking of the Moving Targets Using Multiple Beams

3.1. SIMO Signal Model

3.2. Target Locating with Phase Difference of Multiple Beams

4. Three-dimensional Imaging of Moving Targets with InISAR Technology

4.1. ISAR Imaging with Combined Motion Compensation

4.2. Image Registration

4.3. Interferometric Imaging

5. Experiments

5.1. Experiment Set-up

5.2. Target Tracking and Imaging

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Input: Raw echoes received from Rx1–Rx4 in real time. |
|---|
| Step 1: Synthesize the virtual sum signal by accumulating the echoes from the four receiving channels, and extract the echoes from Rx1, Rx2, and Rx4. |
| Step 2: Perform range compression to produce the HRRPs for the virtual sum channel and for receiving channels Rx1, Rx2, and Rx4. |
| Step 3: Conduct target detection on the virtual sum HRRP to find the range cells in which scatterers are located. The range of each detected scatterer is obtained at the same time. |
| Step 4: Extract the complex response of each scatterer in the HRRPs of Rx1, Rx2, and Rx4 according to its range cell number. Then extract the phase response differences for the two receiver pairs Rx1 & Rx2 and Rx1 & Rx4. |
| Step 5: For each scatterer, following equation (14), calculate its azimuth deviation angle from the phase response difference of Rx1 & Rx2, and its elevation deviation angle from the phase response difference of Rx1 & Rx4. |
| Step 6: Determine the coordinates of each scatterer from the range and angle information obtained in Step 3 and Step 5. Then synthesize the target geometric center to locate the target. |
| Step 7: Perform Kalman filtering to obtain the tracking result, from which the relative deviation of the target from the antenna axis can be determined. |
| Step 8: Adjust the antenna pointing direction according to the relative deviation. |
| Output: Real-time tracking of the moving target. |
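Steps 1 to 6 of the tracking procedure above can be sketched for a single chirp as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. The echoes are assumed to be already de-chirped, so range compression reduces to an FFT. Detection is a plain relative threshold. The geometric center is taken as an unweighted mean. Equation (14) is not reproduced in this section, so the standard far-field relation sin θ = λΔφ / (2πd) for a single transmitter and one receiver pair stands in for it. The function name `locate_scatterers`, its arguments, and the synthetic receiver layout are all hypothetical.

```python
import numpy as np

def locate_scatterers(echoes, wavelength, baseline, range_axis, threshold_db=-10.0):
    """Steps 1-6 for one chirp; `echoes` maps 'rx1'..'rx4' to de-chirped samples."""
    # Step 1: virtual sum channel from all four receivers.
    virtual_sum = echoes["rx1"] + echoes["rx2"] + echoes["rx3"] + echoes["rx4"]
    # Step 2: range compression (an FFT, assuming de-chirped FMCW data).
    hrrp = {k: np.fft.fft(echoes[k]) for k in ("rx1", "rx2", "rx4")}
    power = np.abs(np.fft.fft(virtual_sum)) ** 2
    # Step 3: relative-threshold detection on the virtual sum HRRP (assumed detector).
    cells = np.flatnonzero(power >= power.max() * 10.0 ** (threshold_db / 10.0))
    # Step 4: phase differences of the receiver pairs Rx1 & Rx2 and Rx1 & Rx4.
    dphi_az = np.angle(hrrp["rx1"][cells] * np.conj(hrrp["rx2"][cells]))
    dphi_el = np.angle(hrrp["rx1"][cells] * np.conj(hrrp["rx4"][cells]))
    # Step 5: assumed far-field relation sin(theta) = wavelength * dphi / (2 pi d).
    scale = wavelength / (2.0 * np.pi * baseline)
    u = np.clip(scale * dphi_az, -1.0, 1.0)  # sine of azimuth deviation
    v = np.clip(scale * dphi_el, -1.0, 1.0)  # sine of elevation deviation
    # Step 6: scatterer coordinates and their unweighted geometric center.
    r = range_axis[cells]
    w = np.sqrt(np.clip(1.0 - u**2 - v**2, 0.0, None))
    xyz = np.column_stack([r * u, r * v, r * w])
    return {"azimuth": np.arcsin(u), "elevation": np.arcsin(v),
            "range": r, "xyz": xyz, "center": xyz.mean(axis=0)}

# Synthetic check: one scatterer in range cell 450, 3 deg azimuth, -2 deg elevation.
n, wavelength, baseline = 4096, 1.5e-3, 5e-3
az_true, el_true = np.deg2rad(3.0), np.deg2rad(-2.0)
beat = np.exp(2j * np.pi * 450 * np.arange(n) / n)
a = 2 * np.pi * baseline * np.sin(az_true) / wavelength  # Rx1-Rx2 phase difference
b = 2 * np.pi * baseline * np.sin(el_true) / wavelength  # Rx1-Rx4 phase difference
echoes = {"rx1": beat,
          "rx2": beat * np.exp(-1j * a),
          "rx3": beat * np.exp(-1j * (a + b)),
          "rx4": beat * np.exp(-1j * b)}
result = locate_scatterers(echoes, wavelength, baseline,
                           range_axis=0.01 * np.arange(n))  # assumed 1 cm range cells
print(np.rad2deg(result["azimuth"]), np.rad2deg(result["elevation"]), result["center"])
```

The center returned here would feed the Kalman filter of Step 7. The phase differences are only unambiguous while |Δφ| < π, which limits the usable deviation angle for a given baseline.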

| Input: Raw echoes recorded from Rx1–Rx4 during the imaging windows. |
|---|
| Step 1: Synthesize the virtual sum signal by accumulating the echoes from the four receiving channels, and extract the echoes from Rx1, Rx2, and Rx4. |
| Step 2: Estimate the target velocities by analyzing the tracked trajectory. Then form the phase histories for the corresponding channels according to equation (23) and compensate them in the raw echoes to accomplish image registration. |
| Step 3: Perform range compression to obtain the HRRPs of the virtual sum channel and of receiving channels Rx1, Rx2, and Rx4. |
| Step 4: Estimate the range migration during the imaging window based on the virtual sum HRRPs. Then perform combined envelope alignment by applying the same range migration compensation to the range profiles of Rx1, Rx2, and Rx4. |
| Step 5: Estimate the phase error history along slow time based on the aligned virtual sum HRRPs. Then perform combined phase correction by applying the same phase error compensation to the aligned range profiles of Rx1, Rx2, and Rx4. |
| Step 6: Conduct cross-range compression to obtain the ISAR images of the virtual sum channel and of receiving channels Rx1, Rx2, and Rx4. |
| Step 7: Perform interferometry on the ISAR images of Rx1 and Rx2 to obtain the scatterer coordinates in the horizontal direction according to equations (28) and (30). Likewise, perform interferometry on the ISAR images of Rx1 and Rx4 to obtain the scatterer coordinates in the vertical direction according to equations (29) and (31). |
| Step 8: Determine the scatterer coordinates in the range direction to acquire the 3D coordinates. |
| Output: 3D InISAR image. |
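The idea behind the combined compensation in Steps 4 and 5 is that one set of corrections, estimated on the virtual sum channel, is applied unchanged to every receiving channel, so the inter-channel phase needed for interferometry survives. The sketch below illustrates Steps 1 and 3 to 7 under loud assumptions: envelope alignment uses a simple circular cross-correlation against the first pulse, and phase correction uses the dominant range cell, both as stand-ins for whatever estimators the authors actually use. Step 2 (registration with equation (23)) and the conversion of phase to coordinates (equations (28) to (31)) are omitted because those equations are not reproduced in this section. The function name `inisar_interferograms` and the synthetic data are hypothetical.

```python
import numpy as np

def inisar_interferograms(raw, threshold_db=-20.0):
    """raw maps 'rx1'..'rx4' to de-chirped echoes shaped (pulses, fast-time samples)."""
    # Step 1: virtual sum channel plus the three channels used for interferometry.
    data = {k: raw[k] for k in ("rx1", "rx2", "rx4")}
    data["sum"] = raw["rx1"] + raw["rx2"] + raw["rx3"] + raw["rx4"]
    # Step 3: range compression along fast time.
    hrrp = {k: np.fft.fft(v, axis=1) for k, v in data.items()}
    # Step 4: shifts estimated on the sum channel only (correlation stand-in) ...
    env = np.abs(hrrp["sum"])
    corr = np.fft.ifft(np.fft.fft(env[0])[None, :] * np.conj(np.fft.fft(env, axis=1)),
                       axis=1).real
    shifts = np.argmax(corr, axis=1)
    # ... and applied identically to every channel.
    hrrp = {k: np.stack([np.roll(row, s) for row, s in zip(v, shifts)])
            for k, v in hrrp.items()}
    # Step 5: phase error from the dominant cell of the sum channel (stand-in),
    # again applied identically to every channel.
    cell = np.argmax(np.sum(np.abs(hrrp["sum"]) ** 2, axis=0))
    correction = np.exp(-1j * np.angle(hrrp["sum"][:, cell]))[:, None]
    # Step 6: cross-range compression along slow time.
    image = {k: np.fft.fftshift(np.fft.fft(v * correction, axis=0), axes=0)
             for k, v in hrrp.items()}
    # Step 7: interferometric phases at strong pixels of the sum image.
    power = np.abs(image["sum"]) ** 2
    mask = power >= power.max() * 10.0 ** (threshold_db / 10.0)
    dphi_h = np.where(mask, np.angle(image["rx1"] * np.conj(image["rx2"])), np.nan)
    dphi_v = np.where(mask, np.angle(image["rx1"] * np.conj(image["rx4"])), np.nan)
    return image, mask, dphi_h, dphi_v

# Synthetic check: one scatterer with range walk and a random common phase error.
rng = np.random.default_rng(0)
n_pulse, n_fast = 64, 128
t = np.arange(n_fast)
cells = 40 + np.arange(n_pulse) // 16                 # walks from cell 40 to cell 43
phase_err = rng.uniform(-np.pi, np.pi, n_pulse)       # identical in all channels
rx1 = np.exp(2j * np.pi * cells[:, None] * t[None, :] / n_fast + 1j * phase_err[:, None])
d_h, d_v = 0.8, -0.5                                  # true interferometric phases, rad
raw = {"rx1": rx1, "rx2": rx1 * np.exp(-1j * d_h),
       "rx3": rx1 * np.exp(-1j * (d_h + d_v)), "rx4": rx1 * np.exp(-1j * d_v)}
image, mask, dphi_h, dphi_v = inisar_interferograms(raw)
peak = np.unravel_index(np.argmax(np.abs(image["sum"])), mask.shape)
print(peak, dphi_h[peak], dphi_v[peak])
```

Had the range walk or phase error been estimated separately per channel, each channel would pick up its own residual phase and the interferograms would be biased. Estimating once on the sum channel avoids that.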

| Parameter | Symbol | Value |
|---|---|---|
| Carrier frequency | | 0.2 THz |
| Bandwidth | | 15 GHz |
| Chirp period | | 4 ms |
| IF sampling frequency | | 1.024 MHz |
| Baseline length | | 5 mm |
| Target size | --- | 25 cm × 20 cm |
| Target range | --- | 4.5 m |
| Target velocity | | (0.052, 0, 0) m/s |
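As a rough cross-check of the parameters above, a few derived quantities can be computed with textbook FMCW and interferometry relations. These are back-of-the-envelope figures, not values reported by the authors. The sketch assumes that the IF signal is sampled over the full chirp period, that the chirp is linear, and that the receiver-pair phase difference follows the one-way relation Δφ = 2πd sin θ / λ. All variable names are illustrative.

```python
import math

C = 299_792_458.0  # speed of light, m/s

# Values taken from the parameter table.
carrier_hz = 0.2e12
bandwidth_hz = 15e9
chirp_period_s = 4e-3
if_sampling_hz = 1.024e6
baseline_m = 5e-3
target_range_m = 4.5

wavelength_m = C / carrier_hz                     # about 1.5 mm
range_resolution_m = C / (2 * bandwidth_hz)       # about 1 cm
chirp_rate_hz_per_s = bandwidth_hz / chirp_period_s
# Samples per chirp, assuming the whole chirp period is sampled.
samples_per_chirp = round(if_sampling_hz * chirp_period_s)
# De-chirped beat frequency of a target at the listed range.
beat_hz = 2 * chirp_rate_hz_per_s * target_range_m / C
# Largest deviation angle with an unambiguous phase difference (|dphi| < pi),
# under the assumed one-way relation above.
max_angle_deg = math.degrees(math.asin(wavelength_m / (2 * baseline_m)))

print(f"wavelength        {wavelength_m * 1e3:.3f} mm")
print(f"range resolution  {range_resolution_m * 1e3:.2f} mm")
print(f"samples per chirp {samples_per_chirp}")
print(f"beat frequency    {beat_hz / 1e3:.1f} kHz")
print(f"unambiguous angle +/-{max_angle_deg:.2f} deg")
```

Under these assumptions the beat frequency at the listed target range sits well below half the IF sampling frequency, and the 5 mm baseline keeps the interferometric phase unambiguous only within a few degrees of the antenna axis, which is consistent with steering the antenna to follow the target.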

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, H.; Li, C.; Wu, S.; Zheng, S.; Fang, G. Adaptive 3D Imaging for Moving Targets Based on a SIMO InISAR Imaging System in 0.2 THz Band. Remote Sens. 2021, 13, 782. https://doi.org/10.3390/rs13040782
