Hand Gestures Recognition Using Radar Sensors for Human-Computer-Interaction: A Review

Abstract

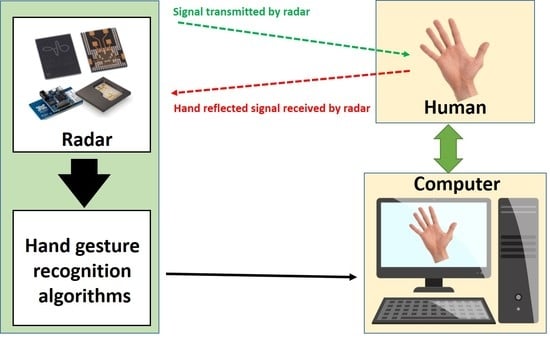

1. Introduction

1.1. Understanding Human Gestures

1.2. Hand-Gesture Based HCI Design

- Hand-gesture movement acquisition, where one of the available radar technologies is chosen;

- Pre-processing the received signal, which involves pre-filtering followed by data formatting that depends on the classifier used in the final step. For example, 1D, 2D and 3D deep convolutional neural networks (DCNNs) require input data in a 1D, 2D or 3D shape, respectively;

- Hand-gesture classification, the final step, which is similar to any other classification problem: the input data are classified using a suitable classifier.
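
The three steps above can be sketched as a minimal Python pipeline. This is an illustrative sketch under stated assumptions, not the method of any reviewed study: the radar frames are simulated, the pre-filter is a simple mean (static-clutter) subtraction, and the helper names `prefilter` and `format_for_classifier` are hypothetical. It shows how the same pre-filtered data can be reshaped into 1D, 2D or 3D inputs depending on the classifier chosen in the last step.

```python
import numpy as np

def prefilter(frames):
    """Step 2a (pre-filtering): remove static clutter by subtracting the mean frame."""
    return frames - frames.mean(axis=0, keepdims=True)

def format_for_classifier(frames, dims):
    """Step 2b (data formatting): shape slow-time x fast-time data for a 1D, 2D or 3D classifier."""
    mag = np.abs(frames)
    if dims == 1:
        # 1D input: one summed amplitude value per slow-time frame
        return mag.sum(axis=1)
    if dims == 2:
        # 2D input: single-channel image (slow time x fast time)
        return mag
    if dims == 3:
        # 3D input: normalised image replicated into three RGB-like channels (an assumption)
        img = mag / (mag.max() + 1e-12)
        return np.stack([img, img, img], axis=-1)
    raise ValueError("dims must be 1, 2 or 3")

# Step 1 (acquisition) is simulated: 100 slow-time frames x 64 fast-time samples,
# with a constant offset standing in for static clutter.
rng = np.random.default_rng(0)
raw = rng.normal(size=(100, 64)) + 5.0

clean = prefilter(raw)
x1 = format_for_classifier(clean, 1)  # shape (100,)
x2 = format_for_classifier(clean, 2)  # shape (100, 64)
x3 = format_for_classifier(clean, 3)  # shape (100, 64, 3)
# Step 3 would pass x1, x2 or x3 to a 1D, 2D or 3D classifier, respectively.
```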

1.3. Main Contribution and Scope of Article

- We provide the first comprehensive review of the available radar-based HGR systems;

- We discuss the available radar technologies to clarify their similarities and differences. All aspects of HGR, including data acquisition, data representation, data pre-processing and classification, are explained in detail;

- We explain the data-representation techniques for radar-recorded hand gestures used with 1D, 2D and 3D classifiers and, based on these representations, discuss the available HGR algorithms in detail;

- Application-oriented HGR research works are also presented;

- Finally, several trends and survey analyses are included.

2. Hand-Gesture Signal Acquisition through Radar

- Pulsed Radar;

- Continuous-Wave (CW) Radar.
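
As a brief illustration of how the two radar families sense a hand, the sketch below evaluates the standard textbook relations: a pulsed radar measures range from the echo's round-trip delay τ as R = cτ/2; a CW radar senses the hand's radial velocity v through the Doppler shift f_d = 2vf_c/c; and an FMCW radar (a CW variant) maps the beat frequency f_b to range through the chirp slope S = B/T_c, giving R = cf_b/(2S). The function names and example values (2 ns delay, 24 GHz carrier, 4 GHz bandwidth swept over an assumed 1 ms chirp) are chosen for illustration only.

```python
C = 299_792_458.0  # speed of light (m/s)

def pulsed_range(delay_s):
    """Pulsed radar: the echo travels to the hand and back, so R = c * tau / 2."""
    return C * delay_s / 2.0

def doppler_shift(velocity_mps, carrier_hz):
    """CW radar: radial hand velocity v shifts the carrier by f_d = 2 * v * f_c / c."""
    return 2.0 * velocity_mps * carrier_hz / C

def fmcw_range(beat_hz, bandwidth_hz, chirp_s):
    """FMCW radar: with chirp slope S = B / T_c, a beat frequency f_b gives R = c * f_b / (2 * S)."""
    slope = bandwidth_hz / chirp_s
    return C * beat_hz / (2.0 * slope)

print(f"{pulsed_range(2e-9):.3f} m")           # 2 ns round trip -> about 0.300 m
print(f"{doppler_shift(0.5, 24e9):.1f} Hz")     # 0.5 m/s at 24 GHz -> about 80.1 Hz
print(f"{fmcw_range(10e3, 4e9, 1e-3):.3f} m")   # 10 kHz beat, 4 GHz over 1 ms -> about 0.375 m
```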

2.1. Pulsed Radars

2.2. CW Radars

2.2.1. SFCW Radar

2.2.2. FMCW Radar

3. Hand-Gesture Radar Signal Representation

- Time-Amplitude: the time-varying amplitude of the received signal is exploited to extract a hand-gesture motion profile. In this case, the signal is one-dimensional (1-D), as shown in Figure 7a. For gesture recognition, such signals have been used as input to deep-learning classifiers such as the 1-D CNN in [51], where several American Sign Language (ASL) gestures were classified. This representation can also be used to build simple signal-processing-based classifiers [62];

- Time-Range: the time-varying distance of the hand, extracted from the received signal, is used to classify hand gestures. While a gesture is performed, the variation in the hand's distance is recorded over time to obtain a 1-D [30] or 2-D [12] signal. For example, the authors of [38] used the 2-D Time-Range representation shown in Figure 7c. For gesture recognition, 2-D and 3-D Time-Range signals have been used to drive 2-D [13,20] and 3-D [12,38] CNNs;

- Time-Doppler (frequency/speed): the time-varying Doppler shift is used to extract hand-gesture features; for example, the authors of [54] used the change in Doppler frequency over time as input to a CNN for gesture classification.
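
The Time-Amplitude and Time-Doppler representations above can be derived from the complex (I/Q) baseband output of a CW radar. The sketch below makes simplifying assumptions: the hand echo is simulated as a single +125 Hz Doppler tone sampled at 1 kHz, the Time-Doppler map is computed with a hand-written short-time Fourier transform (Hann window, 64-sample frames, 16-sample hop), and the function names are hypothetical. Real systems typically add filtering and normalisation before such a map is fed to a CNN.

```python
import numpy as np

def time_amplitude(iq):
    """1D Time-Amplitude profile: magnitude of the complex baseband signal."""
    return np.abs(iq)

def time_doppler(iq, win=64, hop=16):
    """2D Time-Doppler map (dB) from a short-time Fourier transform.

    Rows are Doppler bins (fftshift-ed so 0 Hz is centred); columns are time frames.
    """
    window = np.hanning(win)
    n_frames = 1 + (len(iq) - win) // hop
    frames = np.stack([iq[i * hop:i * hop + win] * window for i in range(n_frames)], axis=1)
    spectrum = np.fft.fftshift(np.fft.fft(frames, axis=0), axes=0)
    return 20 * np.log10(np.abs(spectrum) + 1e-12)

# Simulated hand echo: a +125 Hz Doppler tone sampled at 1 kHz for 1 s (assumed values).
fs = 1000.0
t = np.arange(1000) / fs
iq = np.exp(2j * np.pi * 125.0 * t)

amp = time_amplitude(iq)   # shape (1000,)
td = time_doppler(iq)      # shape (64, 59)
doppler_axis = np.fft.fftshift(np.fft.fftfreq(64, d=1 / fs))
peak_hz = doppler_axis[td[:, 0].argmax()]  # strongest Doppler bin in the first frame
```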

4. HGR Algorithms

4.1. HGR Algorithms for Pulsed Radar

4.2. HGR through CW Radar

4.2.1. HGR through SFCW (Doppler) Radar

4.2.2. HGR Algorithms for FMCW

5. Summary

5.1. Quantitative Analysis

5.2. Nature of Used Hand Gestures and Application Domain Analysis

5.3. Real-Time HGR Examples

5.4. Security and Privacy Analysis of Radar-Based HGR System

5.5. Commercially Available Radars for HGR

5.6. Ongoing Trends, Limitations and Future Directions

- Continuous-wave radars (FMCW and Doppler radar) are the most widely used radars for HGR;

- All the research presented in this paper used a single hand for gesture recognition; the detection of gestures performed by two hands simultaneously has yet to be explored;

- The most commonly used machine-learning algorithms for gesture classification are k-nearest neighbours (kNN), support vector machines (SVM), CNNs and long short-term memory (LSTM) networks;

- For pulsed radar, raw-data-driven deep-learning algorithms are mostly used for HGR. In comparison to CW radars, less work has been done on feature extraction;

- For FMCW and SFCW radars, various feature-extraction techniques exist. In contrast, for pulsed radar, we observed that most studies used deep-learning approaches, and hand-crafted features for classification are often not considered. A strong set of features for pulsed UWB radars is still needed. All the machine-learning-based classifiers used supervised learning only;

- Experimentation is normally performed in a controlled lab environment, and scalability to outdoor spaces, large crowds and other indoor spaces needs to be tested. Real-time implementation is another challenge, particularly for deep-learning-based algorithms, and several studies performed offline testing only. The gesture set is usually limited to 12–15 gestures, and each gesture is classified separately; the classification of gesture sequences and of continuous gestures remains an open issue;

- The Soli radar has been used in smartphones and smartwatches. However, most studies did not suggest any strategy for making gesture-recognition radars interoperable with other appliances;

- Researchers have trained their algorithms using supervised machine learning only; unsupervised machine-learning algorithms have great potential for future gesture-recognition systems;

- The security of radar-based HGR devices has yet to be explored.
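
Regarding the potential of unsupervised learning, the comparison table of pulsed-radar studies lists one clustering-based approach, k-means [47]. A plain k-means sketch (Lloyd's iterations with a deterministic farthest-point initialisation) that groups unlabelled gesture feature vectors is shown below; the 2D features are synthetic, the function name is hypothetical, and this is not the exact procedure of [47].

```python
import numpy as np

def kmeans(x, k, n_iter=100):
    """Lloyd's k-means with a deterministic farthest-point initialisation.

    Returns (labels, centroids) for the rows of x.
    """
    x = np.asarray(x, float)
    # Initialisation: start from the first sample, then repeatedly add the
    # sample farthest from all centroids chosen so far
    centroids = [x[0]]
    for _ in range(1, k):
        d = np.min([np.linalg.norm(x - c, axis=1) for c in centroids], axis=0)
        centroids.append(x[d.argmax()])
    centroids = np.array(centroids)
    for _ in range(n_iter):
        # Assignment step: nearest centroid for every sample
        dist = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=-1)
        labels = dist.argmin(axis=1)
        # Update step: mean of each cluster (keep the old centroid if a cluster is empty)
        new = np.array([x[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
                        for j in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    return labels, centroids

# Synthetic, unlabelled 2D gesture features forming two groups (illustrative only).
rng = np.random.default_rng(1)
features = np.vstack([rng.normal(0.0, 0.1, (20, 2)), rng.normal(5.0, 0.1, (20, 2))])
labels, centroids = kmeans(features, k=2)
```

The farthest-point initialisation is used here only to make the toy example deterministic; k-means++ or multiple random restarts are common alternatives.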

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- [1] Laurel, B.; Mountford, S.J. The Art of Human-Computer Interface Design; Addison-Wesley Longman Publishing Co. Inc.: Boston, MA, USA, 1990.

- [2] Yeo, H.-S.; Lee, B.-G.; Lim, H. Hand tracking and gesture recognition system for human-computer interaction using low-cost hardware. Multimed. Tools Appl. 2015, 74, 2687–2715.

- [3] Pisa, S.; Chicarella, S.; Pittella, E.; Piuzzi, E.; Testa, O.; Cicchetti, R. A Double-Sideband Continuous-Wave Radar Sensor for Carotid Wall Movement Detection. IEEE Sens. J. 2018, 18, 8162–8171.

- [4] Nanzer, J.A. A review of microwave wireless techniques for human presence detection and classification. IEEE Trans. Microw. Theory Tech. 2017, 65, 1780–1794.

- [5] Kang, S.; Lee, Y.; Lim, Y.-H.; Park, H.-K.; Cho, S.H. Validation of noncontact cardiorespiratory monitoring using impulse-radio ultra-wideband radar against nocturnal polysomnography. Sleep Breath. 2019, 24, 1–8.

- [6] Putzig, N.E.; Smith, I.B.; Perry, M.R.; Foss, F.J., II; Campbell, B.A.; Phillips, R.J.; Seu, R. Three-dimensional radar imaging of structures and craters in the Martian polar caps. Icarus 2018, 308, 138–147.

- [7] Choi, J.W.; Quan, X.; Cho, S.H. Bi-directional passing people counting system based on IR-UWB radar sensors. IEEE Internet Things J. 2017, 5, 512–522.

- [8] Santra, A.; Ulaganathan, R.V.; Finke, T. Short-range millimetric-wave radar system for occupancy sensing application. IEEE Sens. Lett. 2018, 2, 1–4.

- [9] Leem, S.K.; Khan, F.; Cho, S.H. Detecting Mid-air Gestures for Digit Writing with Radio Sensors and a CNN. IEEE Trans. Instrum. Meas. 2019, 69, 1066–1081.

- [10] Li, G.; Zhang, S.; Fioranelli, F.; Griffiths, H. Effect of sparsity-aware time–frequency analysis on dynamic hand gesture classification with radar micro-Doppler signatures. IET Radar Sonar Navig. 2018, 12, 815–820.

- [11] Ahmed, S.; Khan, F.; Ghaffar, A.; Hussain, F.; Cho, S.H. Finger-counting-based gesture recognition within cars using impulse radar with convolutional neural network. Sensors 2019, 19, 1429.

- [12] Ahmed, S.; Cho, S.H. Hand Gesture Recognition Using an IR-UWB Radar with an Inception Module-Based Classifier. Sensors 2020, 20, 564.

- [13] Ghaffar, A.; Khan, F.; Cho, S.H. Hand pointing gestures based digital menu board implementation using IR-UWB transceivers. IEEE Access 2019, 7, 58148–58157.

- [14] Skaria, S.; Al-Hourani, A.; Lech, M.; Evans, R.J. Hand-gesture recognition using two-antenna Doppler radar with deep convolutional neural networks. IEEE Sens. J. 2019, 19, 3041–3048.

- [15] Zhang, Z.; Tian, Z.; Zhou, M. Latern: Dynamic continuous hand gesture recognition using FMCW radar sensor. IEEE Sens. J. 2018, 18, 3278–3289.

- [16] Choi, J.-W.; Ryu, S.-J.; Kim, J.-H. Short-range radar based real-time hand gesture recognition using LSTM encoder. IEEE Access 2019, 7, 33610–33618.

- [17] Lien, J.; Gillian, N.; Karagozler, M.E.; Amihood, P.; Schwesig, C.; Olson, E.; Raja, H.; Poupyrev, I. Soli: Ubiquitous gesture sensing with millimeter wave radar. ACM Trans. Graph. 2016, 35, 1–19.

- [18] Liu, C.; Li, Y.; Ao, D.; Tian, H. Spectrum-Based Hand Gesture Recognition Using Millimeter-Wave Radar Parameter Measurements. IEEE Access 2019, 7, 79147–79158.

- [19] Wang, Y.; Wang, S.; Zhou, M.; Jiang, Q.; Tian, Z. TS-I3D based hand gesture recognition method with radar sensor. IEEE Access 2019, 7, 22902–22913.

- [20] Khan, F.; Leem, S.K.; Cho, S.H. In-Air Continuous Writing Using UWB Impulse Radar Sensors. IEEE Access 2020, 8, 99302–99311.

- [21] Miller, E.; Li, Z.; Mentis, H.; Park, A.; Zhu, T.; Banerjee, N. RadSense: Enabling one hand and no hands interaction for sterile manipulation of medical images using Doppler radar. Smart Health 2020, 15, 100089.

- [22] Thi Phuoc Van, N.; Tang, L.; Demir, V.; Hasan, S.F.; Duc Minh, N.; Mukhopadhyay, S. Microwave Radar Sensing Systems for Search and Rescue Purposes. Sensors 2019, 19, 2879.

- [23] Mitra, S.; Acharya, T. Gesture recognition: A survey. IEEE Trans. Syst. Man Cybern. Part C 2007, 37, 311–324.

- [24] Hogan, K. Can’t Get through: Eight Barriers to Communication; Pelican Publishing: New Orleans, LA, USA, 2003.

- [25] Rautaray, S.S.; Agrawal, A. Vision based hand gesture recognition for human computer interaction: A survey. Artif. Intell. Rev. 2015, 43, 1–54.

- [26] Wachs, J.P.; Stern, H.I.; Edan, Y.; Gillam, M.; Handler, J.; Feied, C.; Smith, M. A gesture-based tool for sterile browsing of radiology images. J. Am. Med. Inform. Assoc. 2008, 15, 321–323.

- [27] Joseph, J.; Divya, D. Hand Gesture Interface for Smart Operation Theatre Lighting. Int. J. Eng. Technol. 2018, 7, 20–23.

- [28] Hotson, G.; McMullen, D.P.; Fifer, M.S.; Johannes, M.S.; Katyal, K.D.; Para, M.P.; Armiger, R.; Anderson, W.S.; Thakor, N.V.; Wester, B.A. Individual finger control of a modular prosthetic limb using high-density electrocorticography in a human subject. J. Neural Eng. 2016, 13, 026017.

- [29] Nakanishi, Y.; Yanagisawa, T.; Shin, D.; Chen, C.; Kambara, H.; Yoshimura, N.; Fukuma, R.; Kishima, H.; Hirata, M.; Koike, Y. Decoding fingertip trajectory from electrocorticographic signals in humans. Neurosci. Res. 2014, 85, 20–27.

- [30] Zheng, C.; Hu, T.; Qiao, S.; Sun, Y.; Huangfu, J.; Ran, L. Doppler bio-signal detection based time-domain hand gesture recognition. In Proceedings of the 2013 IEEE MTT-S International Microwave Workshop Series on RF and Wireless Technologies for Biomedical and Healthcare Applications (IMWS-BIO), Singapore, 9–13 December 2013.

- [31] Kim, Y.; Ling, H. Human activity classification based on micro-Doppler signatures using a support vector machine. IEEE Trans. Geosci. Remote Sens. 2009, 47, 1328–1337.

- [32] Li, C.; Peng, Z.; Huang, T.-Y.; Fan, T.; Wang, F.-K.; Horng, T.-S.; Munoz-Ferreras, J.-M.; Gomez-Garcia, R.; Ran, L.; Lin, J. A review on recent progress of portable short-range noncontact microwave radar systems. IEEE Trans. Microw. Theory Tech. 2017, 65, 1692–1706.

- [33] Pramudita, A.A. Time and Frequency Domain Feature Extraction Method of Doppler Radar for Hand Gesture Based Human to Machine Interface. Prog. Electromagn. Res. 2020, 98, 83–96.

- [34] Rao, S.; Ahmad, A.; Roh, J.C.; Bharadwaj, S. 77GHz single chip radar sensor enables automotive body and chassis applications. Tex. Instrum. 2017. Available online: http://www.ti.com/lit/wp/spry315/spry315.pdf (accessed on 24 August 2020).

- [35] Islam, M.T.; Nirjon, S. Wi-Fringe: Leveraging Text Semantics in WiFi CSI-Based Device-Free Named Gesture Recognition. arXiv 2019, arXiv:1908.06803.

- [36] Pu, Q.; Gupta, S.; Gollakota, S.; Patel, S. Whole-home gesture recognition using wireless signals. In Proceedings of the 19th Annual International Conference on Mobile Computing & Networking, Miami, FL, USA, 30 September–4 October 2013.

- [37] Arbabian, A.; Callender, S.; Kang, S.; Rangwala, M.; Niknejad, A.M. A 94 GHz mm-wave-to-baseband pulsed-radar transceiver with applications in imaging and gesture recognition. IEEE J. Solid-State Circuits 2013, 48, 1055–1071.

- [38] Fhager, L.O.; Heunisch, S.; Dahlberg, H.; Evertsson, A.; Wernersson, L.-E. Pulsed Millimeter Wave Radar for Hand Gesture Sensing and Classification. IEEE Sens. Lett. 2019, 3, 1–4.

- [39] Heunisch, S.; Fhager, L.O.; Wernersson, L.-E. Millimeter-wave pulse radar scattering measurements on the human hand. IEEE Antennas Wirel. Propag. Lett. 2019, 18, 1377–1380.

- [40] Fan, T.; Ma, C.; Gu, Z.; Lv, Q.; Chen, J.; Ye, D.; Huangfu, J.; Sun, Y.; Li, C.; Ran, L. Wireless hand gesture recognition based on continuous-wave Doppler radar sensors. IEEE Trans. Microw. Theory Tech. 2016, 64, 4012–4020.

- [41] Huang, S.-T.; Tseng, C.-H. Hand-gesture sensing Doppler radar with metamaterial-based leaky-wave antennas. In Proceedings of the 2017 IEEE MTT-S International Conference on Microwaves for Intelligent Mobility (ICMIM), Nagoya, Aichi, Japan, 19–21 March 2017.

- [42] Ryu, S.-J.; Suh, J.-S.; Baek, S.-H.; Hong, S.; Kim, J.-H. Feature-based hand gesture recognition using an FMCW radar and its temporal feature analysis. IEEE Sens. J. 2018, 18, 7593–7602.

- [43] Dekker, B.; Jacobs, S.; Kossen, A.; Kruithof, M.; Huizing, A.; Geurts, M. Gesture recognition with a low power FMCW radar and a deep convolutional neural network. In Proceedings of the 2017 European Radar Conference (EURAD), Nuremberg, Germany, 10–13 October 2017.

- [44] Hazra, S.; Santra, A. Robust gesture recognition using millimetric-wave radar system. IEEE Sens. Lett. 2018, 2, 1–4.

- [45] Molchanov, P.; Gupta, S.; Kim, K.; Pulli, K. Short-range FMCW monopulse radar for hand-gesture sensing. In Proceedings of the 2015 IEEE Radar Conference, Johannesburg, South Africa, 27–30 October 2015.

- [46] Peng, Z.; Li, C.; Muñoz-Ferreras, J.-M.; Gómez-García, R. An FMCW radar sensor for human gesture recognition in the presence of multiple targets. In Proceedings of the 2017 First IEEE MTT-S International Microwave Bio Conference (IMBIOC), Gothenburg, Sweden, 15–17 May 2017.

- [47] Khan, F.; Leem, S.K.; Cho, S.H. Hand-based gesture recognition for vehicular applications using IR-UWB radar. Sensors 2017, 17, 833.

- [48] Khan, F.; Cho, S.H. Hand based Gesture Recognition inside a car through IR-UWB Radar. Korean Soc. Electron. Eng. 2017, 154–157. Available online: https://repository.hanyang.ac.kr/handle/20.500.11754/106113 (accessed on 24 August 2020).

- [49] Ren, N.; Quan, X.; Cho, S.H. Algorithm for gesture recognition using an IR-UWB radar sensor. J. Comput. Commun. 2016, 4, 95–100.

- [50] Park, J.; Cho, S.H. IR-UWB radar sensor for human gesture recognition by using machine learning. In Proceedings of the 2016 IEEE 18th International Conference on High Performance Computing and Communications; IEEE 14th International Conference on Smart City; IEEE 2nd International Conference on Data Science and Systems (HPCC/SmartCity/DSS), Sydney, Australia, 12–14 December 2016.

- [51] Kim, S.Y.; Han, H.G.; Kim, J.W.; Lee, S.; Kim, T.W. A hand gesture recognition sensor using reflected impulses. IEEE Sens. J. 2017, 17, 2975–2976.

- [52] Khan, F.; Leem, S.K.; Cho, S.H. Algorithm for Fingers Counting Gestures Using IR-UWB Radar Sensor. Available online: https://www.researchgate.net/publication/323726266_Algorithm_for_fingers_counting_gestures_using_IR-UWB_radar_sensor (accessed on 28 August 2020).

- [53] Leem, S.K.; Khan, F.; Cho, S.H. Remote Authentication Using an Ultra-Wideband Radio Frequency Transceiver. In Proceedings of the 2020 IEEE 17th Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 10–13 January 2020.

- [54] Kim, Y.; Toomajian, B. Hand gesture recognition using micro-Doppler signatures with convolutional neural network. IEEE Access 2016, 4, 7125–7130.

- [55] Sang, Y.; Shi, L.; Liu, Y. Micro hand gesture recognition system using ultrasonic active sensing. IEEE Access 2018, 6, 49339–49347.

- [56] Kim, Y.; Toomajian, B. Application of Doppler radar for the recognition of hand gestures using optimized deep convolutional neural networks. In Proceedings of the 2017 11th European Conference on Antennas and Propagation (EUCAP), Paris, France, 19–24 March 2017.

- [57] Yu, M.; Kim, N.; Jung, Y.; Lee, S. A Frame Detection Method for Real-Time Hand Gesture Recognition Systems Using CW-Radar. Sensors 2020, 20, 2321.

- [58] Amin, M.G.; Zeng, Z.; Shan, T. Hand gesture recognition based on radar micro-Doppler signature envelopes. In Proceedings of the 2019 IEEE Radar Conference (RadarConf), Boston, MA, USA, 22–26 April 2019.

- [59] Li, G.; Zhang, R.; Ritchie, M.; Griffiths, H. Sparsity-based dynamic hand gesture recognition using micro-Doppler signatures. In Proceedings of the 2017 IEEE Radar Conference (RadarConf), Seattle, WA, USA, 8–12 May 2017.

- [60] Li, G.; Zhang, R.; Ritchie, M.; Griffiths, H. Sparsity-driven micro-Doppler feature extraction for dynamic hand gesture recognition. IEEE Trans. Aerosp. Electron. Syst. 2017, 54, 655–665.

- [61] Zhang, S.; Li, G.; Ritchie, M.; Fioranelli, F.; Griffiths, H. Dynamic hand gesture classification based on radar micro-Doppler signatures. In Proceedings of the 2016 CIE International Conference on Radar (RADAR), Guangzhou, China, 10–13 October 2016.

- [62] Gao, X.; Xu, J.; Rahman, A.; Yavari, E.; Lee, A.; Lubecke, V.; Boric-Lubecke, O. Barcode based hand gesture classification using AC coupled quadrature Doppler radar. In Proceedings of the 2016 IEEE MTT-S International Microwave Symposium (IMS), San Francisco, CA, USA, 22–27 May 2016.

- [63] Wan, Q.; Li, Y.; Li, C.; Pal, R. Gesture recognition for smart home applications using portable radar sensors. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014.

- [64] Sakamoto, T.; Gao, X.; Yavari, E.; Rahman, A.; Boric-Lubecke, O.; Lubecke, V.M. Radar-based hand gesture recognition using IQ echo plot and convolutional neural network. In Proceedings of the 2017 IEEE Conference on Antenna Measurements & Applications (CAMA), Tsukuba, Japan, 4–6 December 2017.

- [65] Sakamoto, T.; Gao, X.; Yavari, E.; Rahman, A.; Boric-Lubecke, O.; Lubecke, V.M. Hand gesture recognition using a radar echo I–Q plot and a convolutional neural network. IEEE Sens. Lett. 2018, 2, 1–4.

- [66] Wang, Z.; Li, G.; Yang, L. Dynamic Hand Gesture Recognition Based on Micro-Doppler Radar Signatures Using Hidden Gauss-Markov Models. IEEE Geosci. Remote Sens. Lett. 2020, 18, 291–295.

- [67] Klinefelter, E.; Nanzer, J.A. Interferometric radar for spatially-persistent gesture recognition in human-computer interaction. In Proceedings of the 2019 IEEE Radar Conference (RadarConf), Boston, MA, USA, 22–26 April 2019.

- [68] Li, K.; Jin, Y.; Akram, M.W.; Han, R.; Chen, J. Facial expression recognition with convolutional neural networks via a new face cropping and rotation strategy. Vis. Comput. 2020, 36, 391–404.

- [69] Zhang, Z.; Tian, Z.; Zhou, M.; Liu, Y. Application of FMCW radar for dynamic continuous hand gesture recognition. In Proceedings of the 11th EAI International Conference on Mobile Multimedia Communications, Qingdao, China, 21–22 June 2018.

- [70] Suh, J.S.; Ryu, S.; Han, B.; Choi, J.; Kim, J.-H.; Hong, S. 24 GHz FMCW radar system for real-time hand gesture recognition using LSTM. In Proceedings of the 2018 Asia-Pacific Microwave Conference (APMC), Kyoto, Japan, 6–9 November 2018.

- [71] Wang, S.; Song, J.; Lien, J.; Poupyrev, I.; Hilliges, O. Interacting with soli: Exploring fine-grained dynamic gesture recognition in the radio-frequency spectrum. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology, Tokyo, Japan, 16–19 October 2016.

- [72] Malysa, G.; Wang, D.; Netsch, L.; Ali, M. Hidden Markov model-based gesture recognition with FMCW radar. In Proceedings of the 2016 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Washington, DC, USA, 7–9 December 2016.

- [73] Molchanov, P.; Gupta, S.; Kim, K.; Pulli, K. Multi-sensor system for driver’s hand-gesture recognition. In Proceedings of the 2015 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Ljubljana, Slovenia, 4–8 May 2015.

- [74] Sun, Y.; Fei, T.; Schliep, F.; Pohl, N. Gesture classification with handcrafted micro-Doppler features using a FMCW radar. In Proceedings of the 2018 IEEE MTT-S International Conference on Microwaves for Intelligent Mobility (ICMIM), Munich, Germany, 15–17 April 2018.

- [75] Yeo, H.-S.; Flamich, G.; Schrempf, P.; Harris-Birtill, D.; Quigley, A. Radarcat: Radar categorization for input & interaction. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology, Tokyo, Japan, 16–19 October 2016.

- [76] Gupta, S.; Morris, D.; Patel, S.; Tan, D. Soundwave: Using the doppler effect to sense gestures. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012.

- [77] Lazaro, A.; Girbau, D.; Villarino, R. Techniques for clutter suppression in the presence of body movements during the detection of respiratory activity through UWB radars. Sensors 2014, 14, 2595–2618.

| Comparison Criterion | Wearable Systems (Gloves, Wristbands, etc.) | Wireless Systems (Radar and Camera) |
|---|---|---|
| Health-related issues | May cause discomfort, as users are always required to wear gloves or other sensors | Wireless sensors do not cause skin-related issues |
| Sensing/operational range | Usually long, if wireless data transfer is supported | Operates in a confined environment; line of sight is usually required between the hand and the sensor |
| Usage convenience | Less convenient for HCI: users are always required to wear a sensor | Users are not required to wear any sensor |
| Simultaneous recognition of multiple users/hands within a small area | Can sense gestures from different users simultaneously at one location | At one location, recognition capability is often limited to a specific number of users/hands |
| Sensitivity to background conditions (such as noise) | Often less sensitive to ambient conditions | More sensitive than wearable devices |
| Device theft issues | Can be lost or forgotten | No such concerns, since the sensor is usually embedded in a device or installed at a fixed location |

| Research Focus | Pulsed Radar | Single-Frequency Continuous-Wave (SFCW) Radar | Frequency-Modulated Continuous-Wave (FMCW) Radar | Radar-Like Hardware (SONAR, etc.) |
|---|---|---|---|---|
| Hardware design | [37,38,39] | [40,41] | [17,34,42,43,44,45,46] | N/A |
| Algorithm design | [9,11,12,13,20,47,48,49,50,51,52,53,54,55,56] | [14,21,30,31,33,57,58,59,60,61,62,63,64,65,66,67] | [15,16,18,19,68,69,70,71,72,73,74,75] | [27,35,36,76] |

| Study and Year | Data Representation and Data Dimensions | Algorithmic Details | Frequency | No. of Gestures | Distance Between Hand and Sensor | Participants and Samples Per Gesture | Number of Radars |
|---|---|---|---|---|---|---|---|
| Arbabian et al. [37] (2013) | N/A | Hardware only, no algorithm proposed | 94 GHz | N/A | Not mentioned | Tested hand tracking only | 1 |
| Park and Cho [50] (2016) | Time–Range (2D) | SVM | 7.29 GHz | 5 | 0–1 m | 1, 500 | 1 |
| Ren et al. [49] (2016) | Time–Amplitude (1D) | Conditional statements | 6.8 GHz | 6 | 1 m | 1, 50 | 1 |
| Khan and Cho [48] (2017) | Time–Range (2D) | Neural network | 6.8 GHz | 6 | Not specified | 1, 10 s (samples not specified) | 1 |
| Kim and Toomajian [54] (2016) | Time–Doppler (3D-RGB) | DCNN | 5.8 GHz | 10 | 0.1 m | 1, 500 | 1 |
| Khan et al. [47] (2017) | Time–Range (2D matrix) | Unsupervised clustering (k-means) | 6.8 GHz | 5 | ~1 m | 3, 50 | 1 |
| Kim et al. [51] (2017) | Time–Amplitude (1D) | 1D CNN | Not mentioned | 6 | 0.15 m | 5, 81 | 1 |
| Kim and Toomajian [56] (2017) | Time–Doppler (3D-RGB) | DCNN | 5.8 GHz | 7 | 0.1 m | 1, 25 | 1 |
| Sang et al. [55] (2018) | Range–Doppler image features (2D; greyscale image constructed from data) | HMM | 300 kHz (active sensing) | 7 | Not specified | 9, 50 | 1 |
| Ahmed et al. [11] (2019) | Time–Range (2D; greyscale image constructed from data) | Deep learning | 7.29 GHz | 5 | 0.45 m | 3, 100 | 1 |
| Fhager et al. [38] (2019) | Time–Range envelope (1D) | DCNN | 60 GHz | 3 | 0.10–0.30 m | 2, 180 | 1 |
| Heunisch et al. [39] (2019) | Range–RCS (1D) | Observing backscattered waves | 60 GHz | 3 | 0.25 m | Not specified, 1000 | 1 |
| Ghaffar et al. [13] (2019) | Time–Range (2D; greyscale image constructed from data) | Multiclass SVM | 7.29 GHz | 9 | Less than 0.5 m | 4, 100 | 4 |
| Leem et al. [9] (2019) | Time–Range (2D; greyscale image constructed from data) | CNN | 7.29 GHz | 10 | 0–1 m | 5, 400 | 3 |
| Ahmed and Cho [12] (2020) | Time–Range (3D-RGB data) | GoogLeNet framework | 7.29 GHz | 8 | 3–8 m | 3, 100 | 1 & 2 |
| Leem et al. [53] (2020) | Time–Range (2D; greyscale image constructed from data) | DCNN | 7.29 GHz | Drawing gesture | Not specified | 5, not specified | 4 |
| Khan et al. [20] (2020) | Time–Range (2D; greyscale image constructed from data) | CNN | 7.29 GHz | Digit writing | 0–1 m | 3, 300 | 4 |
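
Most pulsed-radar studies in the table above convert the received frames into a Time–Range greyscale image. A minimal sketch of that conversion is shown below, assuming frames arranged as slow time × fast time (one column per range bin) and using a simple exponential-average background subtraction for clutter removal. The forgetting factor `alpha = 0.9`, the function name `time_range_image` and the synthetic data are assumptions, not values or code from the reviewed studies.

```python
import numpy as np

def time_range_image(frames, alpha=0.9):
    """Time-Range greyscale image from pulsed-radar frames.

    frames: array (slow_time, fast_time); each row is one received frame and each
    column one range bin. alpha: forgetting factor of the running clutter estimate
    (an assumed value).
    """
    frames = np.asarray(frames, dtype=float)
    background = frames[0].copy()
    clutter_free = np.empty_like(frames)
    for k, frame in enumerate(frames):
        # Exponential-average background update, then subtract it from the current frame
        background = alpha * background + (1.0 - alpha) * frame
        clutter_free[k] = frame - background
    mag = np.abs(clutter_free)
    # Scale to 8-bit greyscale (0-255) so the result can be used as an image input
    return (255 * mag / (mag.max() + 1e-12)).astype(np.uint8)

# Synthetic data: a static reflector at range bin 20 and a hand that moves
# one range bin further away every 5 frames, starting at bin 40.
frames = np.zeros((200, 128))
frames[:, 20] = 10.0
for k in range(200):
    frames[k, 40 + k // 5] += 1.0

img = time_range_image(frames)  # shape (200, 128), dtype uint8
```

The static reflector is removed entirely, while the moving hand leaves a diagonal trace whose brightest pixel in each row follows the hand's current range bin.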

| Study and Year | Data Representation and Data Dimensions | Algorithmic Details | Frequency | No. of Gestures | Distance Between Hand and Sensor | Participants and Total Samples Per Gesture | Number of Radars |
|---|---|---|---|---|---|---|---|
| Kim et al. [31] (2009) | Time–Frequency (3D; radar signal passed through an STFT) | SVM | 2.4 GHz | 7 (including activities) | 2–8 m | 12, not specified | 1 |
| Zheng et al. [30] (2013) | Time–Range (1D; hand motion vector) | Differentiate and cross-multiply | N/A | Not applicable | 0–1 m (tracking) | Not applicable (hand tracked) | 2 and 3 |
| Wan et al. [63] (2014) | Time–Amplitude (1D) | kNN (k = 3) | 2.4 GHz | 3 | Up to 2 m | 1, 20 | 1 |
| Fan et al. [40] (2016) | Positioning (2D; motion imaging) | Arcsine algorithm, 2D motion-imaging algorithm | 5.8 GHz | 2 | 0–0.2 m | No algorithm trained | 1 (with multiple antennas) |
| Gao et al. [62] (2016) | Time–Amplitude (1D; barcode generated from the zero-crossing rate) | Time-domain zero-crossing | 2.4 GHz | 8 | 1.5 m, 0.76 m | Measured for 60 s to generate the barcode | 1 |
| Zhang et al. [61] (2016) | Time–Doppler frequency (2D) | SVM | 9.8 GHz | 4 | 0.3 m | 1, 50 | 1 |
| Huang et al. [41] (2017) | Time–Amplitude (1D) | Range–Doppler map (RDM) | 5.1, 5.8, 6.5 GHz | 2 | 0.2 m | Not applicable (hand tracking) | 1 |
| Li et al. [60] (2018) | Time–Doppler (2D) | NN classifier (with modified Hausdorff distance) | 25 GHz | 4 | 0.3 m | 3, 60 | 1 |
| Sakamoto et al. [64] (2017) | Image of the in-phase and quadrature (I/Q) signal trajectory (2D) | CNN | 2.4 GHz | 6 | 1.2 m | 1, 29 | 1 |
| Sakamoto et al. [65] (2018) | Image of the in-phase and quadrature (I/Q) signal trajectory (2D) | CNN | 2.4 GHz | 6 | 1.2 m | 1, 29 | 1 |
| Amin et al. [58] (2019) | Time–Doppler frequency (3D RGB image) | kNN (k = 1) | 25 GHz | 15 | 0.2 m | 4, 5 | 1 |
| Skaria et al. [14] (2019) | Time–Doppler (2D image) | DCNN | 24 GHz | 14 | 0.1–0.3 m | 1, 250 | 1 |
| Klinefelter and Nanzer [67] (2019) | Time–Frequency (2D; frequency analysis) | Angular velocity of hand motions | 16.9 GHz | 5 | 0.2 m | Not applicable | 1 |
| Miller et al. [21] (2020) | Time–Amplitude (1D) | kNN (k = 10) | 25 GHz | 5 | Less than 0.5 m | 5, continuous data | 1 |
| Yu et al. [57] (2020) | Time–Doppler (3D RGB image) | DCNN | 24 GHz | 6 | 0.3 m | 4, 100 | 1 |
| Wang et al. [66] (2020) | Time–Doppler (2D) | Hidden Gauss–Markov model | 25 GHz | 4 | 0.3 m | 5, 20 | 1 |
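
Several CW-radar studies in the table above rely on nearest-neighbour classification: kNN with k = 3 [63], k = 1 [58] and k = 10 [21], and an NN classifier with the modified Hausdorff distance [60]. The sketch below shows a generic kNN with a pluggable distance, together with the standard modified Hausdorff distance between two point sets (the larger of the two directed mean nearest-point distances). The feature vectors, labels, templates and function names are toy assumptions; the features actually used in those studies are not reproduced here.

```python
from collections import Counter

import numpy as np

def euclidean(a, b):
    """Euclidean distance between two feature vectors."""
    return float(np.linalg.norm(np.asarray(a, float) - np.asarray(b, float)))

def modified_hausdorff(a, b):
    """Modified Hausdorff distance between point sets a and b (one point per row)."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)  # pairwise distances
    # Mean nearest-point distance in each direction; keep the larger one
    return float(max(d.min(axis=1).mean(), d.min(axis=0).mean()))

def knn_predict(train_x, train_y, query, k=3, dist=euclidean):
    """Majority vote among the k training samples closest to the query."""
    dists = [dist(query, x) for x in train_x]
    nearest = np.argsort(dists)[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]

# Toy 2D feature vectors for two gesture classes (illustrative values only).
train_x = [[0.1, 0.2], [0.2, 0.1], [0.15, 0.15], [1.0, 1.1], [1.1, 0.9], [0.9, 1.0]]
train_y = ["swipe", "swipe", "swipe", "push", "push", "push"]
label = knn_predict(train_x, train_y, [0.95, 1.0], k=3)

# Nearest-neighbour (k = 1) matching of a trajectory against point-set templates with MHD.
templates = [[[0, 0], [1, 0], [2, 0]], [[0, 0], [0, 1], [0, 2]]]
names = ["horizontal", "vertical"]
query = [[0, 0.1], [1, 0.1], [2, 0.1]]
match = knn_predict(templates, names, query, k=1, dist=modified_hausdorff)
```

Passing the distance as a parameter lets the same voting logic serve both fixed-length feature vectors and variable-size point sets such as extracted Doppler-signature contours.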

| Study and Year | Data Representation and Data Dimensions | Algorithmic Details | Frequency and BW | No. of Gestures | Distance Between Hand and Sensor | Participants and Samples Per Gesture | Number of Radars |
|---|---|---|---|---|---|---|---|
| Molchanov et al. (NVIDIA) [45] (2015) | Time–Doppler (2D) | Energy estimation | 4 GHz | Not specified | Not specified | Hand tracking performed | 1 (and 1 depth sensor) |
| Molchanov et al. (NVIDIA) [73] (2015) | Time–Doppler (2D) | DCNN | 4 GHz | 10 | 0–0.5 m | 3, 1714 (total samples in dataset) | 1 |
| Lien et al. (Google) [17] (2016) | Range–Doppler, Time–Range and Time–Doppler (1D and 2D representations) | Random forest | 60 GHz | 4 (several tracking tasks also performed) | Limited to 0.3 m [71] | 5, 1000 | 1 |
| Malysa et al. [72] (2016) | Time–Doppler, Time–Velocity (2D) | HMM | 77 GHz | 6 | Not specified | 2, 100 | 1 |
| Wang et al. [71] (2016) | Range–Doppler (3D RGB image) | CNN/RNN | 60 GHz | 11 | 0.3 m | 10, specified 251 | 1 |
| Yeo et al. [75] (2016) | Range–Amplitude (1D) | Random forest | 60 GHz | Not applicable | 0–0.3 m | Hand tracked | 1 |
| Dekker et al. [43] (2017) | Time–Doppler velocity (3D RGB image) | CNN | 24 GHz | 3 |  | 3, 1000 samples in total | 1 |
| Peng et al. [46] (2017) | Range–Doppler frequency (3D RGB image) | No classification performed | 5.8 GHz | 3 | Not specified | 2, not applicable | 1 |
| Rao et al. (TI) [34] (2017) | Range–Doppler velocity (3D RGB image) | Potential use demonstrated only | 77 GHz | 1 (car trunk door opening) | 0.5 m | Not specified | 1 |
| Li and Ritchie [59] (2017) | Time–Doppler frequency (3D RGB image) | Naïve Bayes, kernel estimators, NN, SVM | 25 GHz | 4 | 0.3 m | 3, 20 | 1 |
| Hazra and Santra [44] (2018) | Range–Doppler (3D RGB image) | DCNN | 60 GHz | 5 | Not specified | 10, 150 (5 other individuals later used for testing) | 1 |
| Ryu et al. [42] (2018) | Range–Doppler (2D FFT) | QEA | 25 GHz | 7 | 0–0.5 m | Not specified, 15 | 1 |
| Suh et al. [70] (2018) | Range–Doppler (2D greyscale image) | Machine learning | 24 GHz | 7 | 0.2–0.4 m | 2, 120 (140 additional samples for testing) | 1 |
| Sun et al. [74] (2018) | Time–Doppler frequency (2D; features extracted from an image) | kNN | 77 GHz | 7 (performed by a car driver) | Not specified | 6, 50 | 1 |
| Zhang et al. [15] (2018) | Time–Range (3D RGB image) | Deep learning (DCNN) | 24 GHz | 8 | 1.5, 2 and 3 m | 4, 100 | 1 |
| Zhang et al. [69] (2018) | Time–Range (3D RGB image) | Deep learning (DCNN) | 24 GHz | 8 | Not specified | 80 s (as mentioned by the authors) | 1 |
| Choi et al. [16] (2019) | Range–Doppler (1D motion profiles generated from the 3D range–Doppler map) | LSTM encoder | 77–81 GHz | 10 | Not specified | 10, 20 | 1 |
| Liu et al. [18] (2019) | Time–Range and Time–Velocity (2D) | Signal-processing-based technique | 77–81 GHz | 6 | Not specified | 869 samples in total | 1 |
| Wang et al. [19] (2019) | Range–Doppler velocity | Deep learning | 77–79 GHz | 10 | Not specified | Not specified, 400 | 1 |
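
Several FMCW studies in the table above rely on range–Doppler maps, e.g. Ryu et al. [42] (2D FFT). Such a map is commonly computed with two FFTs over one frame of beat-signal samples: along fast time (within a chirp) for range and along slow time (across chirps) for Doppler. The sketch below assumes the frame is already arranged as chirps × samples; the Hann windowing, the function name and the synthetic target (range bin 10, Doppler bin +4) are illustrative assumptions rather than the processing chain of any particular study.

```python
import numpy as np

def range_doppler_map(beat):
    """Range-Doppler map (dB) from one FMCW frame.

    beat: complex beat samples arranged as (n_chirps, n_samples_per_chirp),
    i.e. slow time x fast time. Output axis 0 = Doppler bins (zero velocity
    centred), axis 1 = range bins.
    """
    beat = np.asarray(beat)
    n_chirps, n_samples = beat.shape
    # Hann windows along both axes reduce sidelobe leakage
    win = np.outer(np.hanning(n_chirps), np.hanning(n_samples))
    range_fft = np.fft.fft(beat * win, axis=1)                       # fast time -> range
    rd = np.fft.fftshift(np.fft.fft(range_fft, axis=0), axes=0)      # slow time -> Doppler
    return 20 * np.log10(np.abs(rd) + 1e-12)

# Synthetic frame: 32 chirps x 64 samples, one target in range bin 10 whose phase
# advances by +4 Doppler bins across the chirps (assumed values).
m = np.arange(32)[:, None]
n = np.arange(64)[None, :]
beat = np.exp(2j * np.pi * (10 * n / 64 + 4 * m / 32))

rdm = range_doppler_map(beat)
doppler_idx, range_idx = np.unravel_index(rdm.argmax(), rdm.shape)
```

Because the Doppler axis is fftshift-ed, the simulated Doppler bin is read relative to the centre row: `doppler_idx - 16` recovers the +4 bin, while `range_idx` is 10.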

| Study | Implemented Application(s) |
|---|---|
| [9] | In-air digit-writing virtual keyboard using multiple UWB impulse radars |
| [75] | Digital painting |
| [17] | Scroll and dial implementation using finger sliding and rotation |
| [11] | Finger-counting-based HCI to control devices inside a car |
| [73] | Multisensory HGR system providing HCI to assist drivers |
| [48] | HGR inside a car using pulsed radar, intended for vehicular applications |
| [36,63] | Smart-home applications |

| Radar Hardware | Company | Studies |
|---|---|---|
| Bumblebee Doppler radar | Samraksh Co. Ltd., Dublin, OH 43017, USA | [54,56] |
| Xethru X2 | Novelda, Gjerdrums vei 8, 0484 Oslo, Norway | [47] |
| NVA6100 (Novelda) | Novelda, Gjerdrums vei 8, 0484 Oslo, Norway | [49] |
| NVA6201 (Novelda) | Novelda, Gjerdrums vei 8, 0484 Oslo, Norway | [51] |
| MA300D1-1 (transducer only) | Murata Manufacturing, Nagaokakyo-shi, Kyoto 617-8555, Japan | [55] |
| X4 (Novelda, Norway) | Novelda, Gjerdrums vei 8, 0484 Oslo, Norway | [9,12,13,20,53] |
| MAX2829 (transceiver only) | Maxim Integrated, CA 95134, USA | [40] |
| Ancortek SDR-kit 2500B | Ancortek Radars, Fairfax, VA 22030, USA | [66] |
| BGT23MTR12 | Infineon Technologies, Neubiberg, Germany | [45] |
| BGT24MRT122 | Infineon Technologies, Neubiberg, Germany | [15] |
| 77 GHz FMCW TI | Texas Instruments (TI), Dallas, TX 75243, USA | [34,72] |
| Soli | Google and Infineon Technologies, Neubiberg, Germany | [71,75] |
| BGT60TR24 | Infineon Technologies, Neubiberg, Germany | [44] |
| TI’s AWR1642 | Texas Instruments (TI), Dallas, TX 75243, USA | [19] |
| Acconeer Pulsed coherent radar | Acconeer AB, Lund, Sweden | [38] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ahmed, S.; Kallu, K.D.; Ahmed, S.; Cho, S.H. Hand Gestures Recognition Using Radar Sensors for Human-Computer-Interaction: A Review. Remote Sens. 2021, 13, 527. https://doi.org/10.3390/rs13030527