1. Introduction

With the onset of human-induced climate change, wildfires are growing in frequency and intensity. Wildfires in recent years have reached unprecedented levels in a number of countries, including the United States [

1], Australia [

2,

3], Portugal [

4] and Canada [

5]. Over the past five years, Aotearoa New Zealand (NZ) has experienced some of the largest and most destructive wildfires in its history [

6,

7,

8]. The destructive nature of these fires appears to be linked to an increase in the frequency of fires at the rural–urban interface, posing a greater threat to human life and property [

6,

9]. Shrubby environments comprise a considerable amount of the natural vegetation in heavily populated areas of many countries, including NZ [

10] and shrub encroachment into other ecosystems poses a higher risk of wildfire [

10,

11]. Shrub encroachment is increasing globally for various reasons including climate change [

12,

13] and changes in grazing or agricultural practices [

14,

15], and as a response to the suppression of indigenous fire management practices [

16]. As wildfires continue to grow in intensity and frequency, it is critical that scalable methods are developed in order to better measure the environmental variables that can be used to model, manage and suppress wildfires.

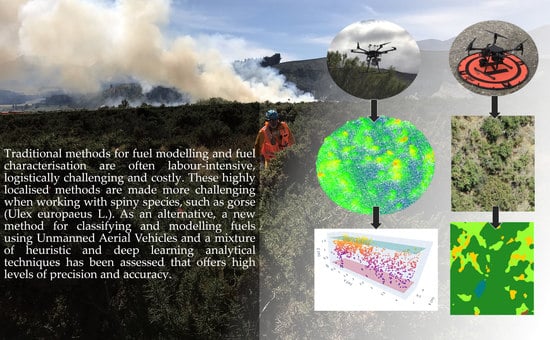

Vegetation management is in need of practical, accurate and scalable methods for vegetation inventory, including forest mensuration [

17,

18], fuel type mapping [

19], risk evaluation for wildfire management [

20,

21] and habitat monitoring [

22]. Traditional methods that are used for mapping fuel types and fuel properties based on field data collection can be costly and challenging [

23,

24]. Traditional methods can yield precise measurements for cover and biomass; however, they require labour-intensive and logistically challenging field work to collect data in a highly localized manner, which is not easily scalable [

22]. This is especially true for vegetation communities consisting of spiny species that grow in dense masses, such as gorse (

Ulex europaeus L.), offering additional challenges and hazards for field crews.

As an alternative to field-based fuel assessments, remote sensing offers multiple benefits, most notably the ability to capture objective, cost-efficient measurements over large, often isolated areas [

25]. Remote sensing technologies have been demonstrated to be an effective method for estimating vegetation metrics such as biomass, structural properties and fuel loadings [

23,

26,

27,

28]. Spectral and spatial information can be retrieved from passive sensors mounted on airborne and spaceborne platforms [

23]. Structural information can be retrieved from active sensors such as airborne laser scanning (ALS) or synthetic aperture radar (SAR) [

29]. Arguably the best remote-sensing methods for retrieving vegetation structural information are ALS and terrestrial laser scanning (TLS) [

30]. Although many studies have used ALS to predict forest fuel metrics [

28,

29,

31,

32,

33,

34,

35,

36,

37], comparatively few have applied this technology in shrublands [

38,

39,

40,

41,

42] and even fewer attempted to predict shrub fuel metrics [

43]. The difficulty in applying ALS to describe vegetation properties in shrub environments lies in the structural homogeneity of low heights and relatively uniform canopy surface common to shrubland vegetation [

23,

44]. ALS is arguably more suited for characterising forest canopy structures than for characterizing low lying vegetation, such as understory or shrubland vegetation, owing to the low density of returns and the obstruction caused by taller canopy structures [

45].

An alternative method of laser scanning that creates point clouds with a higher definition of vegetation surfaces is TLS. This method utilises a ground-based static laser scanner to create point clouds from a much closer range, leading to a greater point density, and smaller laser footprint. In combination, these features of TLS result in a more accurate representation of vegetation structure [

46,

47]. TLS has been used for a variety of forestry-related applications, including tree height measurement [

48], biomass estimation [

49], and extensively for forest mensuration and tree stem characterization [

50]. This technology has been proven to be highly effective for the characterization of shrub environments without destructive sampling [

46,

51,

52]. There is a discrete body of research building up around the application of TLS to fuel modelling [

53,

54,

55]. Some of these published studies are focused on the efficacy of TLS for fuel load characterization in shrub ecosystems [

45,

56,

57,

58,

59]. Although previous studies have observed good correlations between field and TLS measurements for cover and biomass [

22,

39,

51], a common issue with TLS data is the presence of “shadows” in the point cloud. S Due to the stationary nature of the TLS system, shadows are caused when objects in the captured scene are occluded by other objects [

22].

With the miniaturisation of airborne laser scanners, unmanned aerial vehicles (UAVs) are now a viable alternative for characterizing increasingly large areas with laser scanning technology [

30]. UAV laser scanning (ULS) has been shown to be a highly effective tool for characterizing tree height, stem diameters and other individual tree metrics in forest environments [

30,

60,

61]. As ULS has a higher point density and smaller laser footprint than ALS, ULS has the potential to accurately characterize more homogeneous environments, such as shrublands, by providing an enhanced definition of vegetation architecture [

46]. It has been proposed that ULS could provide a useful tool to fill the void between the high-density yet small area coverage of TLS and the low-density, wide area coverage of ALS [

57]. To date, very few studies have focused on the characterization of shrub environments using ULS [

42,

46,

62]. Even fewer of these studies have focused on fuel modelling [

36], and only one has used ULS to characterise shrub fuel [

63].

As the type of fuel has a strong influence on fire behaviour [

64], it is useful not only to characterise the fuel load but also to classify the fuel type. Previous studies have found that ALS alone is not effective for characterising the vegetation canopy of low vegetation [

65]. Even at higher laser return densities, the structure of completely different vegetation types could look very similar in a laser-scanned point cloud. The utility of lidar for fuel characterisation has been supplemented through the addition of multispectral imagery from fixed-wing aircraft [

65,

66] and satellites [

28,

35].

Recent advances in the field of machine learning have led to the development of highly versatile deep learning models for the detection and classification of objects in images [

67]. When combined with visual-spectrum UAV imagery, deep learning algorithms can be used to undertake fine-grained detection and classification for a wide range of vegetation-related applications. These applications include pest–plant detection [

68,

69], tree seedling detection [

70] and the semantic segmentation of different vegetation types [

71,

72,

73]. Semantic segmentation is the process of automatically assigning each pixel in the image to a specific class. The segmentation can either be single class, also referred to as binary segmentation, or multi-class segmentation, where the model can be trained to distinguish between multiple categories at the same time. Very little research has focused on deep learning semantic segmentation of UAV imagery for the classification of fuel types. Using high-resolution UAV orthomosaics, our objectives were to address this knowledge gap by classifying fuel components within a gorse scrub environment down to individual species and characterising their health status by discriminating live and dead vegetation.

The aims of this study were (1) to assess whether UAV orthomosaics combined with semantic segmentation through deep learning can be used to characterise fuel classes, (2) to assess whether metrics derived from ULS and UAV orthomosaic data can be used to accurately determine fuel load, and (3) to evaluate ULS as a viable alternative to non-destructive field measurements.

2. Materials and Methods

2.1. Study Site

Data for this study were collected as part of a large, multi-year fire research programme in Aotearoa New Zealand (NZ). The aim of this wider programme was to conduct heavily instrumented experimental field burns in a range of different vegetation types [

74,

75] from cereal crop stubble to shrubland vegetation and wilding conifer forests. The site for the shrubland burn experiments was located at ~345 m above sea level, between the base of Mount Hutt and the Rakaia River in Canterbury in the South Island of NZ (43°24′31″S, 171°34′01′′E;

Figure 1). The terrain was flat with a slight NW to SE gradient of ~2 m, with thin soils over river rock. At this site, shrub vegetation has colonised a fluvial deposition alongside a meandering braided river. The Rakaia river site has been extensively colonised by gorse (

Ulex europaeus L.), and to a lesser extent by the native shrub species matagouri (

Discaria toumatou Raoul, matakoura or tūmatakuru in te reo Maori), dog rose (

Rosa canina), various species of lupin (

Lupinus spp.) and several species of native and exotic grasses. The shrub layer within the study site ranged from 0.2 to 2.0 m tall, with the site exhibiting heterogeneity in both height and density.

The study site sits within a cool wet hill climate with annual rainfall between 750–1500 mm and prevailing north-westerly winds [

76]. Meteorological conditions were recorded on a temporary weather station that was installed on the site for the duration of the study. The mean daily temperature on the site during the study period ranged from 12.5 °C to 23.8 °C, with the mean daily relative humidity ranging between 53% and 83% and the mean daily wind speed ranging between 3.5 m/s and 11.9 m/s. No precipitation was observed during the study period. More detailed information on the ambient conditions of the site for the study period can be found in

Appendix A,

Table A1.

2.2. Field Sampling and Non-Destructive Measurements

Six 200 m × 200 m burn blocks were established across the site. These blocks were separated from each other by 10 m wide fire breaks, which were bulldozed and graded to prevent fire escape into the neighbouring shrubland. Within each of the six burn blocks, three 4 × 1 m fuel sampling quadrats were established (

Figure 2). The quadrats were positioned so that the vegetation samples were representative of the range of vegetation across the burn blocks. This was determined by analysing previously sampled transects across the burn area. Each sampling quadrat was divided into four 1 × 1 m “sub-quadrats” aligned in a north to south orientation (

Figure 2b). The method follows the methodology laid out in [

24], with non-destructive fuel measurements being made within each sub-quadrat. The four sub-quadrats method maximises the variability in vegetation cover, which can be dominated by a single shrub in the larger 4 × 1 m quadrats. Once established, average height and cover measurements were determined for each of the vegetation components in the overstory, understory and litter strata present within the overall quadrat. Non-destructive sampling took place between the 10 and 14 February 2020.

Heights for each of the vegetation strata were determined as the average height of the tallest and shortest clumps of fuel. Heights for isolated shrubs that clearly did not belong to the main fuel strata were disregarded. Overstory height (OsHt) for each quadrat was then calculated as the mean of the overstory measurements from each of the sub-quadrats. Percentage cover for each of the strata was visually estimated within each sub-quadrat, and overstory cover (OsCo) was calculated from a combination of the percentage cover of the woody overstory species such as gorse, matagouri and rose.

2.3. Destructive Sampling

Following the methodology described in [

24], all vegetative material for each fuel component within each 4 × 1 m quadrat was destructively sampled, starting with the overstory layer and finishing with the litter layer. The vegetative material was separated by species, sorted as either live or dead based on colour and general appearance, and split into four groups based on diameter (≤0.49, 0.5–0.99, 1.0–2.99 and ≥3.0 cm) as described in [

77]. Destructive sampling took place between 11 and 20 February 2020.

The wet weight of each fuel component was measured in the field using a hanging scale suspended from a 2 m tall metal frame, which provided a measurement of fresh biomass. Total above ground biomass (TAGB; kg/m

2) was determined by drying samples in an oven at 105 °C for 24 h or 65 °C for 48 h for the heavier fuels >1 cm, thus removing all moisture. Depending on the amount of material in each fuel component, either the entire sample or a sub-sample of it was taken to the lab for drying. Above ground available fuel (AGAF) was determined using the smallest components of the elevated fuel, plus understory vegetation and litter. The elevated fuel component in the AGAF estimates included all (100%) of the dead and 81% of live foliage (i.e., gorse and matagouri) less than 0.5 cm, as per [

24].

A total of eighteen 4 × 1 m plots were measured across the site; however, due to an outbreak of COVID-19, two of the samples were not able to be processed in time and were therefore discarded from the sample. The results from the destructive and non-destructive field measurements showed a wide range in quantitative and structural properties (

Table 1), with maximum vegetation height ranging from 0.74 to 2.15 m and biomass ranging from 1.68 to 12.7 kg/m

2.

2.4. UAV Data Acquisition

Prior to flight, an extensive network of ground control points (GCPs) was set up across the site to geolocate the UAV data for lidar and Structure from Motion (SfM) captures. GCPs were surveyed prior to the data capture using a handheld Trimble Geo7X GPS unit (Trimble Inc., Sunnyvale, CA, USA) combined with a Trimble Zephyr Model 2 external aerial (Trimble Inc., Sunnyvale, CA, USA). To attain a GPS fix at the centre of each point, an average was taken from a minimum of 180 point fixes over approximately 3 min.

Nine GCPs were established across the site to increase the accuracy of the lidar, with minimum, maximum and average RMSE values of 0.03 m and 0.28 m and 0.15 m, respectively. An additional point was surveyed in the centre of the area of interest utilising the same method as described above to be used as the base station location for the UAV lidar system. Surveying of this point collected 600 points averaged over 10 min, and the resulting RMSE for this point was 0.18 m.

For the photogrammetry, a further 32 GCPs were established across the site. In total, 41 GCPs were installed with a maximum spacing of ~100 m between GCPs (

Figure 1), which resulted in a vertical RMSE for the photogrammetric data of less than 0.1 m [

78]. The distribution of the points was planned to reduce potential errors in the photogrammetry, such as bowl effect [

79]. Point fixes for photogrammetric GCPs were obtained from the ULS-derived digital terrain model (DTM) to reduce survey time. High-contrast photogrammetric plates were deployed to increase their visibility in the point cloud.

The ULS dataset capture was carried out using a LidarUSA Snoopy V-series system (Fagerman Technologies, INC., Somerville, AL, USA), with an integrated Riegl MiniVUX-1 UAV scanner (Riegl, Horn, Austria). The LidarUSA system, hereafter referred to as the MiniVUX, was carried by a DJI Matrice 600 Pro UAV (DJI Ltd., Shenzhen, China). A Zenmuse X4s (DJI Ltd., Shenzhen, China) 1-inch 20 MP RGB camera mounted on a DJI Matrice 210 (DJI Ltd., Shenzhen, China) was used to capture all SfM data.

Flight planning for the ULS capture was undertaken using UgCS software version 3.4 (SPH Engineering, Riga, Latvia). This software can program adaptive banking turns into the flight path, thereby reducing the accumulation of error in the scanner’s IMU position during flights. For the photogrammetry flights, the Map Pilot (Drones Made Easy, San Diego, CA, USA) application was utilised as it has in-built terrain-following functionality that ensured an even flight altitude and, therefore, even ground sample distance (GSD) across images. SfM datasets were captured before and after destructive sampling to map the vegetation across the site and to enable accurate annotation of plot locations in the GIS (see

Section 2.6). Plot-level SfM datasets were captured at a higher resolution to evaluate the full potential of a deep learning approach. Flight parameters and data resolution statistics for the various captured datasets are given in

Table 2.

Following Dandois et al. [

80], UAV data were captured under clear sky conditions, during periods of high solar angle, on days with wind speeds lower than 20 km/h, thereby minimising blur and shadow in the imagery. Whole site operations were planned with a ground sample distance (GSD) of ~3.5 cm, a speed that kept motion blur between less than 1 × the GSD (recommendations in the literature vary between <0.5 and <1.5 times the GSD [

81,

82]) and a minimum forward and side overlap of 80% [

80]. For the high resolution photogrammetric flights, side and forward overlap was increased to 85% to reduce potential artefacts in the outputs resulting from the complex structure of the vegetation [

83]. Site-level SfM datasets were captured on 6 February and 1 March 2020, with plot-level SfM datasets captured on 7 February 2020.

For the lidar flights, a line spacing of 30 m and a speed of 5 m/s were selected to create a very high density point cloud, to ensure maximum coverage of the vegetation. In line with flight parameters used in a previous study, which reported a correlation and accuracy of R

2 = 0.99 and RMSE = 0.15 m for tree height [

60], a flight height of 50 m AGL was selected. This also maintains a small laser beam footprint (80 × 25 mm at 50 m) and, therefore, lowers the error with a reported accuracy for the MiniVUX of 0.015 m at 50 m [

84]. The ULS dataset was captured on 7 February 2020.

2.5. Raw Data Processing

2.5.1. ULS

To generate a point cloud in the universal LAS format, raw lidar data were retrieved from the sensor and converted from the sensor’s native LidarUSA format in two processing steps. First, the Inertial Explorer Xpress software version 8.90 (NovAtel Inc., Calgary, AB, Canada) was used to post-process the raw trajectory data from the GNSS rover of the LidarUSA system with the post-processed kinematic (PPK) GPS data from the CHCX900B base station (CHC Navigation, Shanghai, China). PPK processing increases the accuracy of the trajectory data. The ScanLook Point Cloud Export (ScanLook PC) software version 1.0.230 (Fagerman Technologies INC., Somerville, AL, USA) was then utilised to combine the post-processed trajectory data with the raw sensor data from the MiniVUX. ScanLook PC was also used to apply the boresight calibrations and lever arm offsets that are unique to the sensor and how it was mounted on the craft. This process removed inherent errors associated with mismatched data from within or between flight lines. The resulting point cloud was then output in the universal LAS format. LAS was preferable to the LAZ format as, being uncompressed, it could be read faster and therefore reduced the processing time and aided in parallelisation during the next processing steps.

2.5.2. Orthomosaic

Pix4Dmapper (Pix4D) software version 4.7.5 (Pix4D, Lausanne, Switzerland) was used to process the UAV imagery into orthomosaics. The image processing in Pix4D followed three general stages: (1) initial processing; (2) point cloud mesh generation; and (3) DSM (digital surface model), orthomosaic and index generation. Upon completion of stage 1, 3D GCPs were added to the project for enhanced spatial reference. In order to create a good orthomosaic in stage 3, a high-quality point cloud must first be created in stage 2; therefore, an optimisation trial was carried out to fine-tune processing parameters in Pix4D. Optimal settings were chosen based on the accuracy of canopy heights produced, when compared with the ULS point cloud. Consequently, the following settings were used in this study: geometrically verified matching enabled, the minimum number of matches set to 6 (stage 2) and image scale set to 1/2 (stage 2). Point clouds were then exported in universal LAS format, and orthomosaics were exported in GeoTIFF format.

2.6. Point Cloud Processing and Metric Extraction

Following the creation of the raw point cloud data, two software were used to process the data and to derive the spatial outputs. First, the point cloud was tiled and basic noise filtering was applied using LasTools version 210,418 [

85]. The output tiles from LasTools were then imported into the R statistical software version 4.0.4 [

86] and processed using a data processing pipeline developed using the lidR package [

87]. Ground points were classified and a DTM with a resolution of 1 m was derived from these points. The point cloud was then height-normalised using this DTM; more noise filtering was applied to remove spurious points; and finally, a pit-free canopy height model (CHM) with a resolution of 0.25 m was derived.

An additional UAV flight was carried out between the completion of destructive sampling and burning to ensure a good match between field and spatial data. Prior to this second flight, the outline of each sampling plot was painted on the ground using high-visibility road marker paint so that they would be easily identified in the imagery (

Figure 2c). After the orthomosaic was processed, the vegetation plots were manually digitised from the orthomosaic using Global Mapper 22.1 (Blue Marble Geographics, Hallowell, ME, USA), and a shapefile was created for the plot locations. An example of a digitised plot can be seen in

Figure 2b.

To derive fuel metrics for the vegetation plots, the plot shapefile was used to segregate the filtered and de-noised point cloud into individual point clouds for each plot. In total, 66 metrics were extracted (

Table 3), including 47 metrics for structure and intensity of the returns from the “stdmetrics” function in the lidR package, ten voxel metrics that were calculated after the plots were voxelised using lidR, six variations of mean top height (MTH) calculated using different methods, and three metrics for leaf area density (LAD) calculated using the leafR package [

88]. The derived metrics were then used as an input for statistical modelling.

2.7. Deep Learning Multi-Class Segmentation and Evaluation

Previous research indicates that percentage cover of different fuel types can be a useful metric for estimating fuel load [

24]. In order to test the efficacy of deriving this information from UAV data, a model for fuel type classification had to be built. First, the major fuel components within the area of interest were chosen to give best representation. It was not realistic to characterise some fuel components from the UAV data, such as litter depth or the height and cover of different herbaceous species. Consequently, we decided to select five of the most common fuel components that were representative of the other vegetation present on the site. For this study, five fuel components were chosen: live gorse, dead gorse, grass, matagouri and bare earth. These fuel components were chosen largely due to their dominance in the area and to assess whether deep learning could distinguish between a species in a live or dead state. Gorse and matagouri are also spiny species, making field measurement both time consuming and hazardous. A training dataset was then created by extracting 244~1000 × 1000 pixel (~56.25 m

2) tiles from the ~0.75 cm plot-level orthomosaics. Semantic image annotation of each tile was then carried out, according to our class definitions of five vegetation types. To ensure pixel-wise annotation was manageable, if a region contained a small / insignificant patch of pixels belonging to one class inside another class then all pixels within the region were assigned to the majority class.

The annotated images were used to train a convolutional neural network, using deep learning, to classify the five fuel components within each plot on the orthomosaics. The dataset of 244 images was randomly split into a ratio of 70:15:15 for training, validation and testing sets, respectively. Images were downsized to 864 × 864 pixels to match the architecture of the convolutional neural network. State-of-the-art segmentation network DeepLabV3Plus [

90] was chosen as the network architecture, with a ResNet-50 encoder [

91]. The network was pretrained on the ImageNet dataset [

92] to help account for the relatively small dataset size. Images were all normalized according to the data normalization function specific to ResNet-50 trained on ImageNet. To improve model generalization, data augmentation was applied to the training images including horizontal flip, random crop and padding. The network was trained for 500 epochs with a learning rate of 0.0001, reduced to 0.00001 after 80 epochs. A batch size of 3 was used which was the maximum possible using our NVIDIA RTX 2080 GPU with 8GB RAM and a relatively large image input size (864 × 864). The Adam optimizer method was used as the model optimizer and Dice-Loss was used as the loss function, which are both commonly used for multi-class semantic segmentation tasks. The Segmentation Models Pytorch python library [

93], which utilizes PyTorch [

94], was used to train and evaluate the network.

Two additional predictor variables were derived from the segmentation results generated from the deep learning model. Percentage cover (PCo_f) and overstory cover (OsCo_UAV), were calculated for each fuel class within plot area (

Table 4).

The UAV imagery semantic segmentation literature does not appear to use a common set of metrics; therefore, we have computed four of the key metrics. The main evaluation metrics used were F1 score, precision, recall and intersection over union (IoU). These were computed in the Scikit-learn library [

95] in Python as average-weighted across classes and as per-class measures of segmentation accuracy, which take into account the class imbalances. F1 score, precision and recall were calculated as follows:

where TP = true positives, FP = false positives and FN = false negatives.

The IoU metric is based on the Jaccard Index, which can be defined as the ratio of the intersection and union between the predicted and ground truth segmentation areas. The Jaccard Index is used for gauging the similarity and diversity of sample sets and can be expressed as follows:

where

B represents the predicted segmentation and

A represents the ground truth segmentation.

IoU can be calculated based on the Jaccard Index and expresses the number of overlapping pixels between the predicted and ground truth segmentations, represented as a number between 0 and 1:

2.8. Models of Total above Ground Biomass and Above Ground Available Fuel

All analyses were undertaken using the R statistical software [

86]. Models of TAGB and AGAF were created using multiple regression from the predictor variables described in

Table 3 and

Table 4. Predictor variables included in the regression model were identified and introduced into the model one at a time, starting with the predictor variable that was most strongly related to fuel load. Residual values from this model were then regressed against the remainder of the predictor variables to identify the next most strongly related variable, and this process was repeated until all important and significant variables were included in the model. For the final model, the degree of multicollinearity between independent variables was assessed using the variance inflation factor (VIF), with values of less than 10, indicating that multicollinearity is within acceptable bounds. Following [

24], additional models of TAGB and AGAF were created using field-measured variables for overstory height (OsHt) and overstory cover (OsCo), with data for these two independent variables obtained from field and UAV-derived methodologies.

Correlation and precision were calculated using the coefficient of determination (R

2) and root mean square error (RMSE) using the following equations:

where field measurements are represented by

, predicted measurements are represented by

, the average of the observed values is

and the sample size is represented by

. The relative RMSE (RMSE%) was calculated as RMSE% = 100 (RMSE/

).