Combining Deep Learning with Single-Spectrum UV Imaging for Rapid Detection of HNSs Spills

Abstract

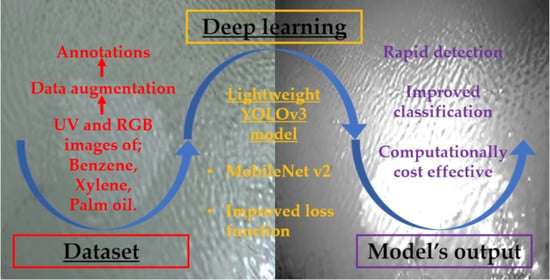

1. Introduction

- The loss function was updated by adding the generalized intersection over union (GIoU) for bounding-box regression, and k-means clustering was applied to regenerate anchor boxes suited to the dataset, improving detection accuracy.

- Finally, the lightweight YOLOv3 was trained and tested on the HNSs dataset, and its detection performance on UV and RGB images was compared to validate the applicability of the proposed approach.

2. Methodology

2.1. HNSs Image Dataset

2.1.1. Image Acquisition

2.1.2. Data Augmentation
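
The dataset table lists 387 UV training images before augmentation and 958 after (468 and 1096 for RGB), but the transforms themselves are not described here. As a minimal sketch, assuming a box-aware horizontal flip is one of them, the helper below (the name `hflip_with_boxes` is hypothetical) mirrors a single-channel image and remaps its bounding boxes so the labels stay aligned with the flipped spill.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), pixel-edge coordinates


def hflip_with_boxes(image: List[List[int]], boxes: List[Box]) -> Tuple[List[List[int]], List[Box]]:
    """Mirror a single-channel image left-right and remap its bounding boxes.

    Hypothetical augmentation step: the paper's actual transforms are not listed here.
    """
    width = len(image[0])
    flipped = [row[::-1] for row in image]
    # A box covering [x_min, x_max] lands on [width - x_max, width - x_min]; y is unchanged.
    new_boxes = [(width - x2, y1, width - x1, y2) for (x1, y1, x2, y2) in boxes]
    return flipped, new_boxes
```

Applying the flip twice returns the original image and boxes, which is a quick sanity check for any geometric augmentation that must keep annotations consistent.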

2.2. DCNN Model for HNSs Spill Detection

2.2.1. Improved Loss Function
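
The Introduction states that GIoU was added to the loss for bounding-box regression. The sketch below follows the standard GIoU definition, GIoU = IoU − |C \ (A ∪ B)| / |C|, where C is the smallest box enclosing both boxes, and the usual loss 1 − GIoU. How this term is weighted against the other YOLOv3 loss components is not given here, so only the box term is shown.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def giou(a: Box, b: Box) -> float:
    """Generalized IoU: IoU minus the share of enclosing box C not covered by A and B."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    # Intersection area (zero when the boxes do not overlap)
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    iou = inter / union
    # Smallest axis-aligned box C that encloses both A and B
    area_c = (max(ax2, bx2) - min(ax1, bx1)) * (max(ay2, by2) - min(ay1, by1))
    return iou - (area_c - union) / area_c


def giou_loss(pred: Box, target: Box) -> float:
    """Box regression loss in [0, 2): zero for a perfect match."""
    return 1.0 - giou(pred, target)
```

Unlike a plain 1 − IoU loss, which is constant (and gives no gradient) whenever the predicted and true boxes do not overlap, the enclosing-box penalty keeps decreasing as a disjoint prediction moves toward the target.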

2.2.2. Anchor Box Generation
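
The Introduction states that k-means clustering was used to regenerate the anchor boxes. A common way to do this for YOLO detectors clusters the labeled (width, height) pairs with the distance d = 1 − IoU, so that large and small boxes are treated fairly. The sketch below assumes that distance, a mean centroid update, and a deterministic area-based initialization; the function names are hypothetical. YOLOv3 itself uses nine anchors (three per output scale), but the number used in this work is not stated here.

```python
from typing import List, Tuple

WH = Tuple[float, float]  # (width, height) of a labeled box


def wh_iou(a: WH, b: WH) -> float:
    """IoU of two boxes aligned at a common corner, so only their sizes matter."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def kmeans_anchors(sizes: List[WH], k: int, iters: int = 100) -> List[WH]:
    """Cluster box sizes with distance 1 - IoU and return k anchors sorted by area."""
    ordered = sorted(sizes, key=lambda s: s[0] * s[1])
    # Deterministic init: pick k boxes spread across the area-sorted list
    centers = [ordered[i * len(ordered) // k] for i in range(k)]
    for _ in range(iters):
        clusters: List[List[WH]] = [[] for _ in range(k)]
        for s in sizes:
            # Minimizing 1 - IoU is the same as maximizing IoU
            best = max(range(k), key=lambda j: wh_iou(s, centers[j]))
            clusters[best].append(s)
        new_centers = [
            (sum(w for w, _ in c) / len(c), sum(h for _, h in c) / len(c)) if c else centers[j]
            for j, c in enumerate(clusters)
        ]
        if new_centers == centers:  # converged
            break
        centers = new_centers
    return sorted(centers, key=lambda s: s[0] * s[1])
```

For example, `kmeans_anchors([(10, 10), (11, 9), (9, 11), (100, 50), (98, 52), (102, 48)], 2)` separates the small and elongated boxes into two anchors, `(10.0, 10.0)` and `(100.0, 50.0)`.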

3. Experimentation

3.1. Model Training

3.2. Evaluation Protocols

4. Detection Results of the Proposed Model

4.1. Spill Location Detection

4.2. Evaluation Based on Precision and Recall
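
Per-class average precision (AP) summarizes the precision-recall trade-off, with a detection counted as a true positive when it matches a ground-truth spill at the IoU threshold of 0.5 listed in the training parameters. The interpolation scheme is not stated here; the sketch below assumes the PASCAL VOC-style all-point variant (area under the monotone precision envelope). The function name is hypothetical.

```python
from typing import List


def average_precision(scores: List[float], is_tp: List[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class.

    scores: confidence of each detection; is_tp: whether that detection matched an
    unclaimed ground-truth box with IoU >= 0.5; num_gt: number of ground-truth boxes.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    precisions: List[float] = []
    recalls: List[float] = []
    for i in order:  # walk detections from most to least confident
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt)
    # Precision envelope: each point takes the best precision found at any higher recall
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas under the envelope wherever recall increases
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```

With three detections ranked TP, FP, TP against two ground-truth spills, recall reaches 0.5 at precision 1 and 1.0 at precision 2/3, giving AP = 0.5 × 1 + 0.5 × 2/3 ≈ 0.833.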

4.3. Evaluation Based on Multiscale Resolution

4.4. Sample HNSs Spill Classification

5. Discussion

- Overfitting caused by the small size of the dataset, which means the detection model may not generalize well to unseen features in test images.

- The influence of ambient conditions, which may cause detection errors. This problem can be mitigated by enhancing the generalization capability of the detection model with additional training images.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. YOLOv3
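
YOLOv3 (Redmon and Farhadi, in the reference list) predicts four offsets (t_x, t_y, t_w, t_h) per anchor and grid cell and decodes them as b_x = σ(t_x) + c_x, b_y = σ(t_y) + c_y, b_w = p_w e^(t_w), b_h = p_h e^(t_h), where (c_x, c_y) is the cell offset and (p_w, p_h) the anchor size. The sketch below implements this decoding; multiplying the center by the stride to obtain pixels, and giving anchors in pixels, are assumptions about units, and `decode_box` is a hypothetical name.

```python
import math
from typing import Tuple


def decode_box(tx: float, ty: float, tw: float, th: float,
               cx: int, cy: int, pw: float, ph: float, stride: int) -> Tuple[float, float, float, float]:
    """Turn raw YOLOv3 offsets into a box center and size in pixels."""
    def sigmoid(v: float) -> float:
        return 1.0 / (1.0 + math.exp(-v))

    # The sigmoid keeps the predicted center inside its grid cell
    bx = (sigmoid(tx) + cx) * stride
    by = (sigmoid(ty) + cy) * stride
    # Width and height scale the anchor (prior) size exponentially
    bw = pw * math.exp(tw)
    bh = ph * math.exp(th)
    return bx, by, bw, bh
```

With all offsets at zero, the box sits at the center of its cell and takes exactly the anchor's size, which is why well-chosen anchors make the regression easier.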

References

1. Harold, P.D.; De Souza, A.S.; Louchart, P.; Russell, D.; Brunt, H. Development of a risk-based prioritization methodology to inform public health emergency planning and preparedness in case of accidental spill at sea of hazardous and noxious substances (HNS). Environ. Int. 2014, 72, 157–163.
2. Michel, G.; Siemiatycki, J.; Désy, M.; Krewski, D. Associations between several sites of cancer and occupational exposure to benzene, toluene, xylene, and styrene: Results of a case-control study in Montreal. Am. J. Ind. Med. 1998, 34, 144–156.
3. Häkkinen, J.M.; Posti, A.I. Review of maritime accidents involving chemicals–special focus on the Baltic Sea. TransNav Int. J. Mar. Navig. Saf. Sea Transp. 2014, 8, 295–305.
4. Cunha, I.; Oliveira, H.; Neuparth, T.; Torres, T.; Santos, M.M. Fate, behaviour and weathering of priority HNS in the marine environment: An online tool. Mar. Pollut. Bull. 2016, 111, 330–338.
5. Cunha, I.; Moreira, S.; Santos, M.M. Review on hazardous and noxious substances (HNS) involved in marine spill incidents: An online database. J. Hazard. Mater. 2015, 285, 509–516.
6. Kim, Y.-R.; Lee, M.; Jung, J.-Y.; Kim, T.-W.; Kim, D. Initial environmental risk assessment of hazardous and noxious substances (HNS) spill accidents to mitigate its damages. Mar. Pollut. Bull. 2019, 139, 205–213.
7. Kirby, M.F.; Law, R.J. Accidental spills at sea–risk, impact, mitigation and the need for coordinated post-incident monitoring. Mar. Pollut. Bull. 2010, 60, 797–803.
8. Neuparth, T.; Moreira, S.; Santos, M.M.; Reis-Henriques, M.A. Review of oil and HNS accidental spills in Europe: Identifying major environmental monitoring gaps and drawing priorities. Mar. Pollut. Bull. 2012, 64, 1085–1095.
9. Yim, U.H.; Kim, M.; Ha, S.Y.; Kim, S.; Shim, W.J. Oil spill environmental forensics: The Hebei Spirit oil spill case. Environ. Sci. Technol. 2012, 46, 6431–6437.
10. Koeber, R.; Bayona, J.M.; Niessner, R. Determination of benzo[a]pyrene diones in air particulate matter with liquid chromatography mass spectrometry. Environ. Sci. Technol. 1999, 33, 1552–1558.
11. Li, C.-W.; Benjamin, M.M.; Korshin, G.V. Use of UV spectroscopy to characterize the reaction between NOM and free chlorine. Environ. Sci. Technol. 2000, 34, 2570–2575.
12. Hilmi, A.; Luong, J.H.T. Micromachined electrophoresis chips with electrochemical detectors for analysis of explosive compounds in soil and groundwater. Environ. Sci. Technol. 2000, 34, 3046–3050.
13. Alpers, W.; Holt, B.; Zeng, K. Oil spill detection by imaging radars: Challenges and pitfalls. Remote Sens. Environ. 2017, 201, 133–147.
14. Zhao, J.; Temimi, M.; Ghedira, H.; Hu, C. Exploring the potential of optical remote sensing for oil spill detection in shallow coastal waters: A case study in the Arabian Gulf. Opt. Express 2014, 22, 13755–13772.
15. Taravat, A.; Frate, F.D. Development of band ratioing algorithms and neural networks to detection of oil spills using Landsat ETM+ data. EURASIP J. Adv. Signal Process. 2012, 1, 1–8.
16. Al-Ruzouq, R.; Gibril, M.B.A.; Shanableh, A.; Kais, A.; Hamed, O.; Al-Mansoori, S.; Khalil, M.A. Sensors, features, and machine learning for oil spill detection and monitoring: A review. Remote Sens. 2020, 12, 3338.
17. Park, J.-J.; Park, K.-A.; Foucher, P.-Y.; Deliot, P.; Floch, S.L.; Kim, T.-S.; Oh, S.; Lee, M. Hazardous Noxious Substance Detection Based on Ground Experiment and Hyperspectral Remote Sensing. Remote Sens. 2021, 13, 318.
18. Huang, H.; Liu, S.; Wang, C.; Xia, K.; Zhang, D.; Wang, H.; Zhan, S.; Huang, H.; He, S.; Liu, C.; et al. On-site visualized classification of transparent hazards and noxious substances on a water surface by multispectral techniques. Appl. Opt. 2019, 58, 4458–4466.
19. Zhan, S.; Wang, C.; Liu, S.; Xia, K.; Huang, H.; Li, X.; Liu, C.; Xu, R. Floating xylene spill segmentation from ultraviolet images via target enhancement. Remote Sens. 2019, 11, 1142.
20. Han, Y.; Hong, B.-W. Deep learning based on Fourier convolutional neural network incorporating random kernels. Electronics 2021, 10, 2004.
21. Choi, J.; Kim, Y. Time-aware learning framework for over-the-top consumer classification based on machine- and deep-learning capabilities. Appl. Sci. 2020, 10, 8476.
22. Rew, J.; Park, S.; Cho, Y.; Jung, S.; Hwang, E. Animal movement prediction based on predictive recurrent neural network. Sensors 2019, 19, 4411.
23. Song, H.; Mehdi, S.R.; Zhang, Y.; Shentu, Y.; Wan, Q.; Wang, W.; Raza, K.; Huang, H. Development of coral investigation system based on semantic segmentation of single-channel images. Sensors 2021, 21, 1848.
24. Huang, H.; Wang, C.; Liu, S.; Sun, Z.; Zhang, D.; Liu, C.; Jiang, Y.; Zhan, S.; Zhang, H.; Xu, R. Single spectral imagery and faster R-CNN to identify hazardous and noxious substances spills. Environ. Pollut. 2020, 258, 113688.
25. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.
26. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.
27. Guo, H.; Wu, D.; An, J. Discrimination of oil slicks and lookalikes in polarimetric SAR images using CNN. Sensors 2017, 17, 1837.
28. Nieto-Hidalgo, M.; Gallego, A.-J.; Gil, P.; Pertusa, A. Two-stage convolutional neural network for ship and spill detection using SLAR images. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5217–5230.
29. Guo, H.; Wei, G.; An, J. Dark spot detection in SAR images of oil spill using SegNet. Appl. Sci. 2018, 8, 2670.
30. Liu, B.; Li, Y.; Li, G.; Liu, A. A spectral feature based convolutional neural network for classification of sea surface oil spill. ISPRS Int. J. Geo-Inf. 2019, 8, 160.
31. Krestenitis, M.; Orfanidis, G.; Ioannidis, K.; Avgerinakis, K.; Vrochidis, S.; Kompatsiaris, I. Oil spill identification from satellite images using deep neural networks. Remote Sens. 2019, 11, 1762.
32. Yang, J.-F.; Wan, J.-H.; Ma, Y.; Zhang, J.; Hu, Y.-B.; Jiang, Z.-C. Oil spill hyperspectral remote sensing detection based on DCNN with multiscale features. J. Coast. Res. 2019, 90, 332–339.
33. Zeng, K.; Wang, Y. A deep convolutional neural network for oil spill detection from spaceborne SAR images. Remote Sens. 2020, 12, 1015.
34. Song, D.; Zhen, Z.; Wang, B.; Li, X.; Gao, L.; Wang, N.; Xie, T.; Zhang, T. A novel marine oil spillage identification scheme based on convolution neural network feature extraction from fully polarimetric SAR imagery. IEEE Access 2020, 8, 59801–59820.
35. Chen, Y.; Li, Y.; Wang, J. An end-to-end oil-spill monitoring method for multisensory satellite images based on deep semantic segmentation. Sensors 2020, 20, 725.
36. Yekeen, S.T.; Balogun, A.-L.; Yusof, K.B.W. A novel deep learning instance segmentation model for automated marine oil spill detection. ISPRS J. Photogramm. Remote Sens. 2020, 167, 190–200.
37. Tzutalin. LabelImg. Git code (2015). Available online: https://github.com/tzutalin/labelImg (accessed on 19 January 2022).
38. Rew, J.; Cho, Y.; Moon, J.; Hwang, E. Habitat Suitability Estimation Using a Two-Stage Ensemble Approach. Remote Sens. 2020, 12, 1475.
39. Zhao, H.; Zhou, Y.; Zhang, L.; Peng, Y.; Hu, X.; Peng, H.; Cai, X. Mixed YOLOv3-LITE: A lightweight real-time object detection method. Sensors 2020, 20, 1861.

| Year | Task | DCNN Architecture | Image Dataset | Reference |
|---|---|---|---|---|
| 2017 | Pixel-based spill classification | CNN with multiple convolution and pooling layers | Radarsat-2 (SAR images) | [27] |
| 2018 | Object (spill) detection | Two-stage CNN | SAR images | [28] |
| 2018 | Semantic segmentation | SegNet | Radarsat-2 (SAR images) | [29] |
| 2019 | Pixel-based spill classification | One-dimensional CNN | AVIRIS | [30] |
| 2019 | Semantic segmentation | DeepLabv3 | Sentinel-1 (SAR images) | [31] |
| 2019 | Object (spill) detection | Multiscale-feature DCNN | Airborne hyperspectral images | [32] |
| 2020 | Pixel-based spill classification | VGG-16 | ERS-1,2, COSMO-SkyMed, ENVISAT (SAR images) | [33] |
| 2020 | Pixel-based spill classification | CNN + SVM | Radarsat-2 (SAR images) | [34] |
| 2020 | Semantic segmentation | DeepLab + fully connected conditional random field | QuickBird, Google Earth, and WorldView | [35] |
| 2020 | Instance segmentation | Mask R-CNN | Sentinel-1 (SAR images) | [36] |

| Imaging Model | Spilled Chemical | Images Captured: Freshwater Lake | Images Captured: Canal | Images Captured: Artificial Pool | Training Images (No Augmentation) | Training Images (With Augmentation) | Testing Images |
|---|---|---|---|---|---|---|---|
| UV imaging | Benzene | 16 | 29 | 16 | 387 | 958 | 60 |
| UV imaging | Xylene | 11 | 28 | 31 | | | |
| UV imaging | Palm oil | 53 | 168 | 35 | | | |
| RGB imaging | Benzene | 9 | 37 | – | 468 | 1096 | 60 |
| RGB imaging | Xylene | 14 | 40 | – | | | |
| RGB imaging | Palm oil | 63 | 305 | – | | | |

The training and testing totals apply to each imaging modality as a whole (all three chemicals combined); for example, the UV location counts sum to 387 and the RGB counts to 468.

| Model Training Parameter | Value |
|---|---|
| Learning rate | 1 × 10⁻⁴ and 1 × 10⁻⁶ |
| Total training epochs | 300 for the baseline model, 450 for the lightweight YOLOv3 |
| Batch size | 4 and 6 |
| Image size | 320 × 320 to 608 × 608 |
| IoU threshold | 0.5 |
| Average decay | 0.995 |
| Gradient optimizer | Adam |
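
The table lists two learning rates, 1 × 10⁻⁴ and 1 × 10⁻⁶, without stating how training moves between them; they could be separate training stages or the endpoints of a decay schedule. Assuming the latter, one common choice is cosine decay from the initial to the final value, sketched below over the 450 epochs listed for the lightweight YOLOv3. The schedule shape and the name `cosine_lr` are assumptions, not the authors' confirmed setup.

```python
import math


def cosine_lr(step: int, total_steps: int, lr_init: float = 1e-4, lr_end: float = 1e-6) -> float:
    """Cosine-anneal from lr_init at step 0 to lr_end at total_steps (held afterwards)."""
    progress = min(step, total_steps) / total_steps
    return lr_end + 0.5 * (lr_init - lr_end) * (1.0 + math.cos(math.pi * progress))


# Learning rate at a few epochs of an assumed 450-epoch run
for epoch in (0, 225, 450):
    print(epoch, cosine_lr(epoch, 450))
```

A cosine curve decays slowly at first and near the end, which tends to be gentler on a small dataset than an abrupt step from 1 × 10⁻⁴ to 1 × 10⁻⁶.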

| Image Size | UV AP: Benzene (%) | UV AP: Xylene (%) | UV AP: Palm Oil (%) | RGB AP: Benzene (%) | RGB AP: Xylene (%) | RGB AP: Palm Oil (%) | UV mAP (%) | RGB mAP (%) | Avg. Detection Time (ms) | FPS |
|---|---|---|---|---|---|---|---|---|---|---|
| 320 × 320 | 54.76 | 54.39 | 90.70 | 52.03 | 56.43 | 85.07 | 75.25 | 70.02 | 8.20 | 120 |
| 352 × 352 | 58.19 | 58.79 | 93.07 | 49.46 | 78.23 | 90.93 | 72.45 | 70.20 | 8.56 | 117 |
| 384 × 384 | 58.31 | 59.40 | 93.27 | 55.55 | 61.27 | 92.08 | 69.15 | 68.44 | 8.93 | 111 |
| 416 × 416 | 67.39 | 67.96 | 94.50 | 64.08 | 57.85 | 93.77 | 69.83 | 66.97 | 10.14 | 98 |
| 448 × 448 | 68.65 | 75.43 | 94.79 | 69.67 | 57.28 | 94.27 | 74.94 | 69.37 | 10.96 | 91 |
| 480 × 480 | 69.52 | 69.32 | 94.63 | 70.33 | 79.94 | 94.78 | 76.62 | 69.05 | 11.52 | 86 |
| 512 × 512 | 74.51 | 61.53 | 95.32 | 70.89 | 77.92 | 94.85 | 77.27 | 68.16 | 12.91 | 77 |
| 544 × 544 | 76.56 | 71.35 | 95.17 | 70.53 | 80.76 | 91.67 | 79.62 | 69.62 | 14.07 | 70 |
| 576 × 576 | 81.96 | 66.78 | 94.87 | 72.29 | 68.39 | 91.01 | 83.05 | 74.04 | 15.49 | 64 |
| 608 × 608 | 85.48 | 76.34 | 95.32 | 76.24 | 74.36 | 91.49 | 86.13 | 80.60 | 17.78 | 57 |
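
The input sizes in this table step by 32 pixels from 320 to 608, matching YOLOv3's largest downsampling stride of 32. Assuming the lightweight model keeps the standard three YOLOv3 output strides (8, 16, 32) and three anchors per grid cell, the sketch below counts the candidate boxes produced at each size; the growth is consistent with the rising detection times in the table. The helper names are hypothetical.

```python
from typing import List

STRIDES = (8, 16, 32)  # standard YOLOv3 output strides; assumed unchanged in the lightweight model


def input_sizes(smallest: int = 320, largest: int = 608, step: int = 32) -> List[int]:
    """Square input sizes evaluated in the table (multiples of the largest stride)."""
    return list(range(smallest, largest + 1, step))


def grid_shapes(size: int) -> List[int]:
    """Side length of each output grid for a square input of `size` pixels."""
    if size % max(STRIDES):
        raise ValueError("input size must be a multiple of 32")
    return [size // s for s in STRIDES]


def num_predictions(size: int, anchors_per_cell: int = 3) -> int:
    """Candidate boxes produced before confidence filtering and NMS."""
    return sum(g * g for g in grid_shapes(size)) * anchors_per_cell
```

Under these assumptions, a 320 × 320 input yields 6,300 candidate boxes and a 608 × 608 input yields 22,743, so the larger inputs that raise AP also give the post-processing stage more boxes to filter.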

| Characteristic | Proposed Model | YOLOv3 Baseline | Faster R-CNN (Authors of [24]) |
|---|---|---|---|
| mAP (UV) | 86.89% | 81.13% | 86.46% |
| mAP (RGB) | 72.40% | 66.94% | 66.73% |
| Parameters (millions) | 31 | 61 | – |
| FPS | 57 | 23 | 5 |
| Average detection time (s) | 0.0119 | 0.0316 | 0.607 |
| Single checkpoint size (MB) | 107.6 | 985.1 | – |
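
The table reports roughly half the parameters for the proposed model (31 million versus 61 million). The reference list cites MobileNetV2 (Sandler et al.), whose blocks are built on depthwise separable convolutions; assuming the lightweight design draws on such layers, the arithmetic below illustrates why they are cheaper than standard convolutions. Bias terms are ignored, and the layer sizes in the example are illustrative, not taken from the paper.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a depthwise k x k conv followed by a pointwise 1 x 1 conv (biases ignored)."""
    depthwise = k * k * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out  # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Illustrative 3 x 3 layer mapping 256 channels to 256 channels
print(standard_conv_params(3, 256, 256), depthwise_separable_params(3, 256, 256))
```

For this example layer, the standard convolution needs 589,824 weights and the separable version 67,840, about 8.7 times fewer; whole-network savings are smaller because not every layer is replaced.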

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mehdi, S.R.; Raza, K.; Huang, H.; Naqvi, R.A.; Ali, A.; Song, H. Combining Deep Learning with Single-Spectrum UV Imaging for Rapid Detection of HNSs Spills. Remote Sens. 2022, 14, 576. https://doi.org/10.3390/rs14030576
