The Evaluation of Color Spaces for Large Woody Debris Detection in Rivers Using XGBoost Algorithm

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Field Investigations

2.3. Image Modeling

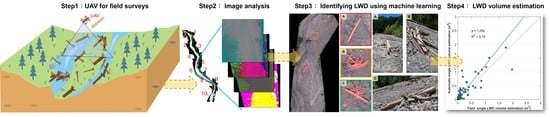

2.4. LWD Identification Process

2.5. Block Division of LWD Identification

2.6. Estimating the Volume of a Single LWD

2.7. Color Space Model Conversion

2.8. XGBoost Model

2.9. Model Evaluation

3. Results

3.1. Training and Test Data Selection

3.2. Filtering the Factors of the Color Space Model

3.3. LWD Identification

3.4. The Single LWD Volume Estimation Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Group | R | G | B | Y | Cb | Cr | H | S | V | nR | nG | nB | l* | a* | b* |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 56.8 | 34.9 | 11.0 | ||||||||||||
| 2 | 1.5 | 1.0 | 1.6 | ||||||||||||
| 3 | 1.5 | 1.2 | 1.3 | ||||||||||||
| 4 | 7.7 | 5.4 | 2.0 | ||||||||||||
| 5 | 1.4 | 5.1 | 4.4 | ||||||||||||
| 6 | 303.1 | 4.9 | 2.2 | 2.3 | 5.9 | 301.1 | |||||||||
| 7 | 464.1 | 7.7 | 5.9 | 2.4 | 16.2 | 446.0 | 28.8 | 28.7 | 11.2 | ||||||
| 8 | 1060.6 | 310.3 | 106.7 | 2.8 | 22.5 | 563.5 | 55.6 | 52.6 | 18.0 | 1142.7 | 441.9 | 809.9 | |||
| 9 | 2.0 | 5.8 | 2.4 | 9.7 | 10.9 | 4.6 | |||||||||
| 10 | 2.7 | 17.7 | 496.9 | 46.5 | 38.0 | 13.3 | 509.7 | 35.5 | 26.2 | ||||||
| 11 | 31.3 | 25.1 | 10.2 | 2.2 | 18.5 | 17.0 | |||||||||
| 12 | 2.6 | 6.9 | 288.9 | 288.3 | 8.2 | 8.6 | |||||||||
| 13 | 2.4 | 5.6 | 4.8 | 26.0 | 20.4 | 8.6 | |||||||||
| 14 | 794.7 | 191.7 | 68.8 | 763.8 | 222.1 | 406.1 | |||||||||
| 15 | 2.2 | 4.1 | 1.7 | 2.2 | 3.4 | ||||||||||
| 16 | 2.2 | 3.5 | 2.1 | 5.7 | 7.7 |

| Factor Combinations | TP | FN | FP | TN | Recall (%) | Precision (%) | F1 (%) |
|---|---|---|---|---|---|---|---|
| Y Cb Cr | 21,333 | 4817 | 9938 | 3,998,822 | 81.58 | 68.22 | 74.90 |
| H S V | 21,655 | 4495 | 12,122 | 3,996,638 | 82.81 | 64.11 | 73.46 |
| nR nG nB | 17,110 | 9040 | 8173 | 4,000,587 | 65.43 | 67.67 | 66.55 |
| l* a* b* | 21,898 | 4252 | 11,967 | 3,996,793 | 83.74 | 64.66 | 74.20 |
| Cb Cr H S | 21,624 | 4526 | 11,719 | 3,997,041 | 82.69 | 64.85 | 73.77 |
| Cb Cr H | 21,497 | 4653 | 9463 | 3,999,297 | 82.21 | 69.43 | 75.82 |
| H S V nR nB | 21,290 | 4860 | 10,976 | 3,997,784 | 81.41 | 65.98 | 73.70 |
| H S a* b* | 21,193 | 4957 | 9948 | 3,998,812 | 81.04 | 68.05 | 74.55 |
| Y Cb Cr nB | 22,017 | 4133 | 12,211 | 3,996,549 | 84.20 | 64.32 | 74.26 |
| Y Cb Cr H S | 21,970 | 4180 | 12,679 | 3,996,081 | 84.02 | 63.41 | 73.71 |
| H S V a* b* | 21,974 | 4176 | 12,287 | 3,996,473 | 84.03 | 64.14 | 74.08 |
| Cb Cr | 21,531 | 4619 | 9547 | 3,999,213 | 82.34 | 69.28 | 75.81 |
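
The recall and precision columns follow directly from the pixel counts TP, FN, and FP. As a minimal sketch (the function name `confusion_metrics` and its dictionary keys are illustrative, not taken from the paper), the snippet below recomputes them for the Cb Cr row. It also computes two candidate summary scores: F1 in its usual harmonic-mean definition, and the plain arithmetic mean of recall and precision. For this row the tabulated F1 value (75.81) coincides with the arithmetic mean, while the harmonic mean comes out near 75.25; which formula the authors intended is an open question here, so both are shown.

```python
def confusion_metrics(tp: int, fn: int, fp: int) -> dict:
    """Return recall, precision and two summary scores, all in percent.

    Illustrative helper (hypothetical name); TN is not needed for these metrics.
    """
    recall = tp / (tp + fn)      # share of true LWD pixels that were detected
    precision = tp / (tp + fp)   # share of detected pixels that are true LWD
    # Standard F1: harmonic mean of precision and recall.
    f1_harmonic = 2 * precision * recall / (precision + recall)
    # Arithmetic mean of the two, shown for comparison with the table.
    mean_pr = (precision + recall) / 2
    scores = {
        "recall": recall,
        "precision": precision,
        "f1_harmonic": f1_harmonic,
        "mean_precision_recall": mean_pr,
    }
    return {name: 100.0 * value for name, value in scores.items()}


# Pixel counts copied from the "Cb Cr" row of the table above.
row = confusion_metrics(tp=21_531, fn=4_619, fp=9_547)
for name, value in row.items():
    print(f"{name}: {value:.2f}")
```

The same function can be applied to any other row or block by substituting its TP, FN, and FP counts.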

| Block | TP | FN | FP | TN | Recall (%) | Precision (%) | F1 (%) |
|---|---|---|---|---|---|---|---|
| Block 5 | 21,531 | 4619 | 9547 | 3,999,213 | 82.34 | 69.28 | 75.81 |
| Block 6 | 13,549 | 5892 | 5216 | 2,756,964 | 69.69 | 72.20 | 70.95 |
| Block 7 | 57,569 | 7511 | 7662 | 3,025,384 | 88.46 | 88.25 | 88.36 |
| Block 8 | 14,021 | 1929 | 1391 | 3,387,310 | 87.91 | 90.97 | 89.44 |

| Block | TP | FN | FP | TN | Recall (%) | Precision (%) | F1 (%) |
|---|---|---|---|---|---|---|---|
| Block 5 | 21,455 | 4695 | 7233 | 4,001,527 | 82.05 | 74.79 | 78.42 |
| Block 6 | 13,381 | 6060 | 3266 | 2,758,914 | 68.83 | 80.38 | 74.60 |
| Block 7 | 57,385 | 7695 | 3474 | 3,029,572 | 88.18 | 94.29 | 91.23 |
| Block 8 | 14,006 | 1944 | 362 | 3,388,339 | 87.81 | 97.48 | 92.65 |

| Block | Amount | Loss | Length L (m) | Head D_H (m) | Root D_R (m) | Volume V, Field (m³) | Volume V, Manual (m³) | Volume V, Automatic (m³) |
|---|---|---|---|---|---|---|---|---|
| 1 | 9 | 3 | 2.01~4.44 | 0.06~0.35 | 0.08~0.35 | 0.56 | 1.21 | 2.22 |
| 2 | 21 | 2 | 0.80~4.69 | 0.09~0.40 | 0.05~0.60 | 1.78 | 1.50 | 2.93 |
| 3 * | 5 | 4 | 1.72 | 0.37 | 0.37 | 0.18 | 0.68 | 0.84 |
| 4 | 11 | 3 | 0.86~4.67 | 0.02~0.47 | 0.05~0.55 | 0.75 | 1.14 | 1.33 |
| 5 | 12 | 4 | 0.72~5.70 | 0.08~0.39 | 0.09~0.55 | 1.47 | 1.09 | 1.16 |
| 6 | 8 | 2 | 1.50~8.53 | 0.06~0.48 | 0.13~0.46 | 0.68 | 1.29 | 2.42 |
| 7 | 8 | 2 | 1.20~9.21 | 0.06~1.13 | 0.03~1.00 | 5.83 | 4.37 | 5.37 |
| 8 | 4 | 1 | 3.92~6.54 | 0.04~0.29 | 0.1~0.90 | 1.72 | 1.26 | 2.99 |
| 9 | 3 | 0 | 2.40~10.63 | 0.12~0.40 | 0.1~0.64 | 2.12 | 1.12 | 1.51 |
| 10 | 11 | 1 | 1.23~5.32 | 0.08~0.63 | 0.1~0.31 | 1.15 | 1.29 | 2.08 |
| 11 * | 4 | 3 | 10.2 | 0.46 | 0.27 | 1.14 | 1.25 | 1.96 |
| 12 | 9 | 2 | 1.23~4.33 | 0.08~0.40 | 0.06~0.44 | 0.67 | 0.92 | 1.11 |
| 13 | 12 | 0 | 1.93~13.57 | 0.13~0.65 | 0.12~0.60 | 4.60 | 4.83 | 6.52 |
| Total (13 blocks) | 116 | 27 | Excluding LWD not recognized successfully | | | 22.65 | 21.97 | 32.45 |
| | | | LWD not recognized successfully | | | 0.82 | 1.68 | 0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liang, M.-C.; Tfwala, S.S.; Chen, S.-C. The Evaluation of Color Spaces for Large Woody Debris Detection in Rivers Using XGBoost Algorithm. Remote Sens. 2022, 14, 998. https://doi.org/10.3390/rs14040998
