Multimodal Deep Learning for Rice Yield Prediction Using UAV-Based Multispectral Imagery and Weather Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Description of the Study Site

2.2. Field Experimentation, Sampling Procedures and Data Collection

2.3. Image Acquisition and Processing

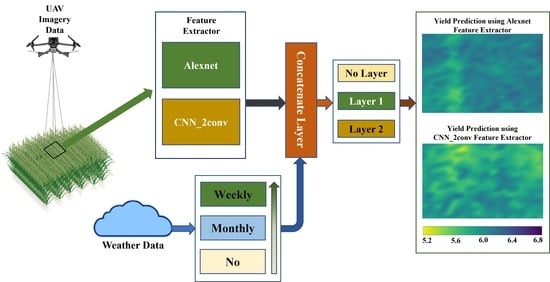

2.4. Neural Network Architectures

2.5. Training and Validation Processes

2.6. Statistical Analysis

3. Results

3.1. Yield Variations

3.2. Model Performance

3.3. Within-Field Prediction of Rice Yield

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Field ID | Prefecture | Latitude | Longitude | Environment ID | Sowing/Transplanting Date | Planting System | UAV Imagery Acquisition Date | Variety | Camera |
|---|---|---|---|---|---|---|---|---|---|
| 01 | Miyagi | 38°13′N | 140°58′E | A | 25 April 2017 | Direct Seeding | 2 August 2017 | Hitomebore | Sequoia+ |
| 02 | Miyagi | 38°13′N | 140°58′E | A | 7 May 2017 | Transplanting | 2 August 2017 | Hitomebore | Sequoia+ |
| 03 | Miyagi | 38°13′N | 140°58′E | B | 29 April 2018 | Direct Seeding | 2 August 2018 | Manamusume | Sequoia+ |
| 04 | Miyagi | 38°13′N | 140°58′E | B | 7 May 2018 | Transplanting | 2 August 2018 | Hitomebore | Sequoia+ |
| 05 | Miyagi | 38°12′N | 140°58′E | C | 4 May 2019 | Direct Seeding | 8 August 2019 | Hitomebore | Sequoia+ |
| 06 | Miyagi | 38°13′N | 140°58′E | C | 12 May 2019 | Transplanting | 8 August 2019 | Hitomebore | Sequoia+ |
| 07 | Miyagi | 38°13′N | 140°58′E | C | 16 May 2019 | Transplanting | 8 August 2019 | Hitomebore | Sequoia+ |
| 08 | Gifu | 35°38′N | 137°06′E | D | 11 May 2020 | Transplanting | 12 August 2020 | Koshihikari | Rededge Altum |
| 09 | Gifu | 35°13′N | 136°40′E | E | 11 May 2020 | Transplanting | 6 August 2020 | Koshihikari | Rededge Altum |
| 10 | Gifu | 35°14′N | 136°35′E | F | 11 May 2020 | Transplanting | 6 August 2020 | Hoshijirushi | Rededge Altum |
| 11 | Gifu | 35°15′N | 136°35′E | G | 23 May 2020 | Transplanting | 6 August 2020 | Hoshijirushi | Rededge Altum |
| 12 | Gifu | 35°15′N | 136°35′E | H | 25 April 2021 | Transplanting | 13 July 2021 | Akitakomachi | Rededge Altum |
| 13 | Gifu | 35°14′N | 136°35′E | H | 19 April 2021 | Transplanting | 13 July 2021 | Shikiyutaka | Rededge Altum |
| 14 | Gifu | 35°15′N | 136°35′E | I | 14 May 2021 | Transplanting | 11 August 2021 | Hoshijirushi | Rededge Altum |
| 15 | Gifu | 35°11′N | 136°38′E | J | 10 May 2021 | Transplanting | 11 August 2021 | Hoshijirushi | Rededge Altum |
| 16 | Gifu | 35°13′N | 136°40′E | K | 11 May 2021 | Transplanting | 11 August 2021 | Hoshijirushi | Rededge Altum |
| 17 | Gifu | 35°15′N | 136°35′E | L | 16 May 2022 | Transplanting | 9 August 2022 | Hoshijirushi | Rededge Altum |
| 18 | Gifu | 35°16′N | 136°35′E | M | 23 May 2022 | Transplanting | 9 August 2022 | Hoshijirushi | Rededge Altum |
| 19 | Gifu | 35°14′N | 136°39′E | M | 2 May 2022 | Transplanting | 9 August 2022 | Hoshijirushi | Rededge Altum |
| 20 | Kochi | 33°35′N | 133°38′E | N | 31 March 2022 | Transplanting | 1 July 2022 | Nangoku Sodachi | P4 Multispectral |
| 21 | Kochi | 33°35′N | 133°38′E | O | 4 April 2022 | Transplanting | 1 July 2022 | Yosakoi bijin | P4 Multispectral |
| 22 | Kochi | 33°35′N | 133°39′E | O | 25 May 2022 | Transplanting | 29 July 2022 | Koshihikari | P4 Multispectral |
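
The interval between sowing/transplanting and UAV image acquisition varies considerably across the fields listed above. As a minimal sketch of that arithmetic, the snippet below computes the interval in days for four rows copied from the table. The names `FIELDS`, `days_between`, and `days_to_imaging` are illustrative and not from the article, and the date format string assumes the English month names used in the table.

```python
from datetime import datetime

# (field ID, sowing/transplanting date, UAV acquisition date),
# copied from four rows of the field table above.
FIELDS = [
    ("01", "25 April 2017", "2 August 2017"),
    ("12", "25 April 2021", "13 July 2021"),
    ("20", "31 March 2022", "1 July 2022"),
    ("22", "25 May 2022", "29 July 2022"),
]

DATE_FORMAT = "%d %B %Y"  # e.g. "25 April 2017"; assumes English month names


def days_between(start: str, end: str) -> int:
    """Return the number of days from `start` to `end`."""
    delta = datetime.strptime(end, DATE_FORMAT) - datetime.strptime(start, DATE_FORMAT)
    return delta.days


# Hypothetical helper mapping: field ID -> days from planting to imaging.
days_to_imaging = {fid: days_between(sown, flown) for fid, sown, flown in FIELDS}

for fid, days in days_to_imaging.items():
    print(f"Field {fid}: {days} days from sowing/transplanting to UAV flight")
```

For these four rows the interval ranges from 65 days (Field 22) to 99 days (Field 01), so the imagery does not correspond to a single fixed number of days after planting.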

| Camera | Spectral Band Width, Green (nm) | Spectral Band Width, Red (nm) | Spectral Band Width, Near Infrared (NIR) (nm) | Field of View (H × V) | Resolution (Pixel) |
|---|---|---|---|---|---|
| Sequoia+ | 550 ± 40 | 660 ± 40 | 790 ± 40 | 62 × 249 | 1280 × 960 |
| Rededge Altum | 560 ± 27 | 668 ± 14 | 842 ± 57 | 48 × 37 | 2064 × 1544 |
| P4 Multispectral | 560 ± 16 | 650 ± 16 | 840 ± 26 | 62.7 × 62.7 | 1600 × 1300 |

| Factor | Level | RMSE (t ha−1) |
|---|---|---|
| Layer | 0 | 0.933 a |
| Layer | 1 | 0.910 a |
| Layer | 2 | 0.898 a |
| Weather | No | 0.941 a |
| Weather | Monthly | 0.917 a |
| Weather | Weekly | 0.877 b |
| Architecture | AlexNet | 0.909 a |
| Architecture | CNN_2conv | 0.910 a |

| ANOVA | p value |
|---|---|
| Layer | 0.024 |
| Weather | 0.003 |
| Architecture | n.s. |
| Layer × Weather | n.s. |
| Layer × Architecture | n.s. |
| Weather × Architecture | n.s. |

| Model No. | Architecture | Weather | Layer | Train RMSE (t ha−1) | Train RMSPE (%) | Train R² | Validation RMSE (t ha−1) | Validation RMSPE (%) | Validation R² | Test RMSE (t ha−1) | Test RMSPE (%) | Test R² | Time for Prediction (s/ha) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | AlexNet | No | 0 | 0.985 | 16 | 0.54 | 0.867 | 15 | 0.65 | 0.929 | 15 | 0.59 | 25.91 |
| 2 | AlexNet | No | 1 | 0.986 | 15 | 0.54 | 0.908 | 16 | 0.62 | 0.948 | 15 | 0.57 | 25.75 |
| 3 | AlexNet | No | 2 | 0.964 | 15 | 0.56 | 0.882 | 15 | 0.64 | 0.940 | 16 | 0.58 | 25.69 |
| 4 | AlexNet | Monthly | 0 | 0.953 | 15 | 0.57 | 0.897 | 16 | 0.63 | 0.917 | 15 | 0.60 | 25.67 |
| 5 | AlexNet | Monthly | 1 | 0.938 | 15 | 0.58 | 0.869 | 15 | 0.65 | 0.920 | 15 | 0.59 | 25.53 |
| 6 | AlexNet | Monthly | 2 | 0.905 | 15 | 0.61 | 0.862 | 15 | 0.65 | 0.897 | 15 | 0.61 | 25.82 |
| 7 | AlexNet | Weekly | 0 | 0.897 | 15 | 0.62 | 0.859 | 15 | 0.65 | 0.905 | 15 | 0.61 | 25.56 |
| 8 | AlexNet | Weekly | 1 | 0.842 | 14 | 0.66 | 0.830 | 15 | 0.68 | 0.859 | 14 | 0.65 | 25.69 |
| 9 | AlexNet | Weekly | 2 | 0.839 | 14 | 0.67 | 0.845 | 15 | 0.67 | 0.868 | 14 | 0.64 | 25.75 |
| 10 | CNN_2conv | No | 0 | 1.045 | 17 | 0.48 | 0.912 | 16 | 0.61 | 0.969 | 16 | 0.55 | 3.29 |
| 11 | CNN_2conv | No | 1 | 1.024 | 16 | 0.50 | 0.894 | 15 | 0.63 | 0.931 | 15 | 0.58 | 3.24 |
| 12 | CNN_2conv | No | 2 | 0.978 | 15 | 0.55 | 0.893 | 15 | 0.63 | 0.929 | 15 | 0.59 | 3.25 |
| 13 | CNN_2conv | Monthly | 0 | 0.998 | 16 | 0.53 | 0.916 | 16 | 0.61 | 0.970 | 16 | 0.55 | 3.32 |
| 14 | CNN_2conv | Monthly | 1 | 0.906 | 14 | 0.61 | 0.877 | 15 | 0.64 | 0.905 | 15 | 0.61 | 3.52 |
| 15 | CNN_2conv | Monthly | 2 | 0.905 | 14 | 0.61 | 0.876 | 15 | 0.64 | 0.895 | 15 | 0.62 | 3.16 |
| 16 | CNN_2conv | Weekly | 0 | 0.905 | 15 | 0.61 | 0.857 | 15 | 0.66 | 0.907 | 15 | 0.60 | 2.21 |
| 17 | CNN_2conv | Weekly | 1 | 0.833 | 13 | 0.67 | 0.834 | 14 | 0.68 | 0.864 | 14 | 0.64 | 3.20 |
| 18 | CNN_2conv | Weekly | 2 | 0.821 | 13 | 0.68 | 0.831 | 14 | 0.68 | 0.860 | 14 | 0.65 | 3.22 |
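
The per-model test RMSE values above can be averaged by factor level to compare weather inputs, layer counts, and architectures. The sketch below does this under one assumption that the text available here does not confirm: that a factor-level RMSE is the unweighted mean of test RMSE over all models sharing that level. The names `MODELS` and `marginal_means` are illustrative and not from the article.

```python
from collections import defaultdict
from statistics import mean

# (architecture, weather, layer, test RMSE in t/ha) for models 1-18,
# copied from the model performance table above.
MODELS = [
    ("AlexNet", "No", 0, 0.929), ("AlexNet", "No", 1, 0.948), ("AlexNet", "No", 2, 0.940),
    ("AlexNet", "Monthly", 0, 0.917), ("AlexNet", "Monthly", 1, 0.920), ("AlexNet", "Monthly", 2, 0.897),
    ("AlexNet", "Weekly", 0, 0.905), ("AlexNet", "Weekly", 1, 0.859), ("AlexNet", "Weekly", 2, 0.868),
    ("CNN_2conv", "No", 0, 0.969), ("CNN_2conv", "No", 1, 0.931), ("CNN_2conv", "No", 2, 0.929),
    ("CNN_2conv", "Monthly", 0, 0.970), ("CNN_2conv", "Monthly", 1, 0.905), ("CNN_2conv", "Monthly", 2, 0.895),
    ("CNN_2conv", "Weekly", 0, 0.907), ("CNN_2conv", "Weekly", 1, 0.864), ("CNN_2conv", "Weekly", 2, 0.860),
]


def marginal_means(rows, factor_index):
    """Unweighted mean test RMSE per level of one factor (assumed aggregation)."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[factor_index]].append(row[3])
    return {level: round(mean(values), 3) for level, values in groups.items()}


by_architecture = marginal_means(MODELS, 0)
by_weather = marginal_means(MODELS, 1)
by_layer = marginal_means(MODELS, 2)

print("Weather:", by_weather)
print("Layer:", by_layer)
print("Architecture:", by_architecture)
```

Under this assumption the weather-level means come out at 0.941 (No), 0.917 (Monthly), and 0.877 (Weekly) t ha−1, so weekly weather data lowers the mean test RMSE by about 0.064 t ha−1 relative to using no weather data. The layer-1 and CNN_2conv means computed this way land a few thousandths away from 0.910, so the aggregation used in the article may differ from this sketch.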

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mia, M.S.; Tanabe, R.; Habibi, L.N.; Hashimoto, N.; Homma, K.; Maki, M.; Matsui, T.; Tanaka, T.S.T. Multimodal Deep Learning for Rice Yield Prediction Using UAV-Based Multispectral Imagery and Weather Data. Remote Sens. 2023, 15, 2511. https://doi.org/10.3390/rs15102511
