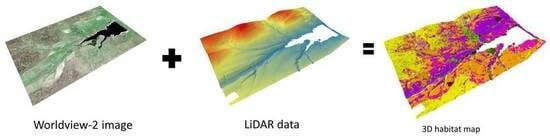

Three-Dimensional Mapping of Habitats Using Remote-Sensing Data and Machine-Learning Algorithms

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Field Data

2.3. RS Datasets

2.4. Methodology

3. Results and Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References


| Study Area | Habitat Class | Area (ha) |
|---|---|---|
| Colt Crag Reservoir | Arable | 56.28 |
| | Bare Ground | 0.22 |
| | Broadleaf Woodland | 10.79 |
| | Building | 0.92 |
| | Coniferous Woodland | 17.39 |
| | Improved Grassland | 41.26 |
| | Marshy Grassland | 4.16 |
| | Mixed Woodland | 12.10 |
| | Open Water | 10.84 |
| | Quarry | 25.01 |
| | Scrub | 0.83 |
| | Semi-improved Acid Grassland | 15.73 |
| | Semi-improved Calcareous Grassland | 10.42 |
| | Wet Modified Bog | 6.02 |
| | Total | 211.96 |
| Grassholme Reservoir | Blanket Bog | 0.52 |
| | Bracken | 0.07 |
| | Broadleaf Woodland | 0.14 |
| | Built/Hardstanding | 0.13 |
| | Coniferous Woodland | 0.56 |
| | Disturbed Ground | 0.02 |
| | Grassland | 0.31 |
| | Marshy Grassland | 0.26 |
| | Open Water | 0.07 |
| | Scrub | 0.04 |
| | Wet Modified Bog/Heath (High Calluna Cover) | 0.16 |
| | Total | 2.28 |
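The per-class areas above are strongly uneven (Arable covers roughly a quarter of the Colt Crag site, Bare Ground about 0.1%). As a minimal sketch, the snippet below re-sums the Colt Crag Reservoir values copied from the table and expresses each class as a percentage of the site total, which is one simple way to inspect class imbalance before sampling training data for a classifier. The names `COLT_CRAG_HA` and `class_shares` are hypothetical and used only for illustration. Summing the rounded per-class values gives 211.97 ha, within 0.01 ha of the reported 211.96 ha, a difference consistent with rounding.

```python
# Per-class areas in hectares for Colt Crag Reservoir, copied from the table above.
# COLT_CRAG_HA and class_shares are hypothetical names for illustration only.
COLT_CRAG_HA = {
    "Arable": 56.28,
    "Bare Ground": 0.22,
    "Broadleaf Woodland": 10.79,
    "Building": 0.92,
    "Coniferous Woodland": 17.39,
    "Improved Grassland": 41.26,
    "Marshy Grassland": 4.16,
    "Mixed Woodland": 12.10,
    "Open Water": 10.84,
    "Quarry": 25.01,
    "Scrub": 0.83,
    "Semi-improved Acid Grassland": 15.73,
    "Semi-improved Calcareous Grassland": 10.42,
    "Wet Modified Bog": 6.02,
}


def class_shares(areas: dict[str, float]) -> tuple[float, dict[str, float]]:
    """Return the summed area and each class's percentage of that sum."""
    total = sum(areas.values())
    return total, {name: 100.0 * a / total for name, a in areas.items()}


if __name__ == "__main__":
    total, shares = class_shares(COLT_CRAG_HA)
    print(f"summed area: {total:.2f} ha")
    # List classes from largest to smallest share.
    for name, pct in sorted(shares.items(), key=lambda kv: -kv[1]):
        print(f"{name:<36s}{pct:6.2f} %")
```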

| Feature Type | Source | Utilized Features |
|---|---|---|
| Spectral bands | WorldView-2 | Coastal, Blue, Green, Red, Yellow, Red Edge, Near-Infrared 1 (NIR1), Near-Infrared 2 (NIR2) |
| Ratio and spectral indices | WorldView-2 | |
| Spatial | WorldView-2 | Shape, Size |
| Gray-Level Co-occurrence Matrix (GLCM) | WorldView-2 | Mean, Variance, Contrast, Dissimilarity, Entropy, Homogeneity |
| Elevation derivatives | LiDAR | Digital Elevation Model (DEM), Digital Surface Model (DSM), Canopy Height Model (CHM), Slope, Aspect |
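The table names the texture and elevation features but not how they are computed. As a hedged illustration only, the NumPy sketch below derives a canopy height model as DSM minus DEM (assuming the DEM is bare-earth terrain), slope and aspect by central differences on a north-up grid, and the six listed GLCM measures using common Haralick-style definitions (homogeneity as 1/(1+(i-j)^2), entropy with the natural logarithm). All function names, the single horizontal pixel offset, symmetric pair counting, and the linear quantization step are assumptions; the software actually used for the study may apply different window sizes, offsets, directions, and conventions.

```python
import numpy as np


def canopy_height_model(dsm: np.ndarray, dem: np.ndarray) -> np.ndarray:
    """CHM = DSM - DEM; negative differences (noise, misregistration) are clipped to 0."""
    return np.clip(dsm - dem, 0.0, None)


def slope_aspect(dem: np.ndarray, cell_size: float) -> tuple[np.ndarray, np.ndarray]:
    """Slope and aspect in degrees from a north-up DEM with square cells.

    Row index is assumed to increase southward. Aspect is the downslope direction
    measured clockwise from north; it is NaN on perfectly flat cells.
    """
    dz_drow, dz_dx = np.gradient(dem.astype(float), cell_size)  # central differences
    dz_dnorth = -dz_drow  # rows run southward, so flip sign for a northward gradient
    slope = np.degrees(np.arctan(np.hypot(dz_dx, dz_dnorth)))
    aspect = np.degrees(np.arctan2(-dz_dx, -dz_dnorth)) % 360.0
    return slope, np.where(slope > 0, aspect, np.nan)


def quantize(band: np.ndarray, levels: int) -> np.ndarray:
    """Linearly rescale a band to integer grey levels 0..levels-1 (hypothetical helper)."""
    lo, hi = float(band.min()), float(band.max())
    if hi == lo:
        return np.zeros(band.shape, dtype=int)
    q = np.floor((band - lo) / (hi - lo) * levels).astype(int)
    return np.clip(q, 0, levels - 1)


def glcm(img: np.ndarray, levels: int, dr: int = 0, dc: int = 1) -> np.ndarray:
    """Symmetric, normalized GLCM for one non-negative pixel offset (dr, dc)."""
    rows, cols = img.shape
    ref = img[: rows - dr, : cols - dc]  # reference pixels
    nbr = img[dr:, dc:]                  # neighbours at (r + dr, c + dc)
    m = np.zeros((levels, levels), dtype=float)
    np.add.at(m, (ref.ravel(), nbr.ravel()), 1.0)
    m = m + m.T                          # count each pair in both directions
    return m / m.sum()


def glcm_features(p: np.ndarray) -> dict[str, float]:
    """The six texture measures listed in the table, from a normalized GLCM."""
    i, j = np.indices(p.shape)
    mean = float(np.sum(i * p))
    nz = p[p > 0]                        # skip zero cells so log(0) never occurs
    return {
        "mean": mean,
        "variance": float(np.sum((i - mean) ** 2 * p)),
        "contrast": float(np.sum((i - j) ** 2 * p)),
        "dissimilarity": float(np.sum(np.abs(i - j) * p)),
        "entropy": float(-np.sum(nz * np.log(nz))),
        "homogeneity": float(np.sum(p / (1.0 + (i - j) ** 2))),
    }


if __name__ == "__main__":
    # Classic 4-level test image often used to illustrate GLCM construction.
    img = np.array([[0, 0, 1, 1], [0, 0, 1, 1], [0, 2, 2, 2], [2, 2, 3, 3]])
    print(glcm_features(glcm(img, levels=4)))
```

In practice these measures are usually computed per image object or per moving window rather than over a whole scene, and averaged over several offsets (0°, 45°, 90°, 135°) to reduce directional bias.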

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Amani, M.; Foroughnia, F.; Moghimi, A.; Mahdavi, S.; Jin, S. Three-Dimensional Mapping of Habitats Using Remote-Sensing Data and Machine-Learning Algorithms. Remote Sens. 2023, 15, 4135. https://doi.org/10.3390/rs15174135
