Recognition of Severe Convective Cloud Based on the Cloud Image Prediction Sequence from FY-4A

Abstract

1. Introduction

2. Study Area and Data

2.1. Study Area

2.2. FY-4A Data

2.3. Data Preprocessing

2.3.1. Geometric Correction

2.3.2. Radiometric Calibration

2.3.3. Data Normalization

3. Method

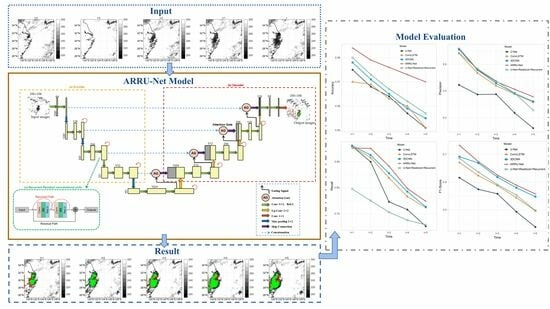

3.1. The Proposed ARRU-Net Model

3.1.1. Attention Mechanism

3.1.2. Recurrent Convolutional Block

3.1.3. Residual Connection

3.2. Severe Convective Cloud Label-Making Method

3.2.1. Analyze Spectral Features

3.2.2. Extract Texture Features

3.2.3. Image Binarization

3.2.4. Closed Operations and Intersection Operations

- (1) A closed operation (morphological closing) was applied to the preliminary severe-convection recognition results obtained from TBB9, TBB9−TBB12, and TBB12−TBB13, as shown in Figure 10.

- (2)
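
The closed and intersection operations named in this subsection can be sketched as follows. This is only an illustration under stated assumptions: the 3×3 square structuring element, the zero padding at the image border, and the mask names `tbb9_mask`, `diff_9_12_mask`, and `diff_12_13_mask` are hypothetical and are not taken from the paper. The sketch closes each binary mask (dilation followed by erosion) and keeps only the pixels flagged in all three closed masks.

```python
from typing import Iterator, List

Mask = List[List[int]]  # binary image: 1 = candidate severe-convection pixel


def window(mask: Mask, r: int, c: int) -> Iterator[int]:
    """Yield the 3x3 neighbourhood of (r, c); pixels outside the image count as 0."""
    rows, cols = len(mask), len(mask[0])
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            rr, cc = r + dr, c + dc
            yield mask[rr][cc] if 0 <= rr < rows and 0 <= cc < cols else 0


def dilate(mask: Mask) -> Mask:
    """A pixel becomes 1 if any pixel in its 3x3 neighbourhood is 1."""
    return [[int(any(window(mask, r, c))) for c in range(len(mask[0]))]
            for r in range(len(mask))]


def erode(mask: Mask) -> Mask:
    """A pixel stays 1 only if every pixel in its 3x3 neighbourhood is 1."""
    return [[int(all(window(mask, r, c))) for c in range(len(mask[0]))]
            for r in range(len(mask))]


def closing(mask: Mask) -> Mask:
    """Dilation followed by erosion; fills small holes and gaps in the mask."""
    width = len(mask[0])
    blank = [0] * (width + 2)
    # Pad by one pixel (assumed border handling) so that objects touching
    # the image edge are not eroded away, then crop the padding off again.
    padded = [blank[:]] + [[0] + list(row) + [0] for row in mask] + [blank[:]]
    closed = erode(dilate(padded))
    return [row[1:-1] for row in closed[1:-1]]


def intersect(*masks: Mask) -> Mask:
    """Keep a pixel only if it is 1 in every mask."""
    return [[int(all(values)) for values in zip(*rows)] for rows in zip(*masks)]


# Hypothetical preliminary masks; the first one contains a one-pixel hole.
tbb9_mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 0, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
diff_9_12_mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 1],
    [0, 1, 1, 1, 1],
    [0, 1, 1, 1, 1],
    [0, 0, 0, 0, 0],
]
diff_12_13_mask = [
    [0, 0, 0, 0, 0],
    [1, 1, 1, 1, 0],
    [1, 1, 1, 1, 0],
    [1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]

severe = intersect(closing(tbb9_mask),
                   closing(diff_9_12_mask),
                   closing(diff_12_13_mask))
for row in severe:
    print(row)
```

In this toy case the closing fills the hole in `tbb9_mask`, and the intersection retains only the 3×3 block that all three masks share. In practice a library routine for binary closing would likely replace the hand-written loops.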

3.3. Model Performance Evaluation Method

3.3.1. Model Performance Evaluation of Cloud Image Prediction

3.3.2. Model Performance Evaluation of Recognition of Severe Convective Cloud

4. Results

4.1. Cloud Image Prediction

4.1.1. Training

4.1.2. Comparison of Cloud Image Prediction Models

4.2. Recognition of Severe Convective Cloud Based on Cloud Image Prediction Sequence

4.2.1. Training

4.2.2. Comparison of Recognition of Severe Convective Cloud Based on the Cloud Image Prediction Sequence

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Gernowo, R.; Sasongko, P. Tropical Convective Cloud Growth Models for Hydrometeorological Disaster Mitigation in Indonesia. Glob. J. Eng. Technol. Adv. 2021, 6, 114–120.

- Gernowo, R.; Adi, K.; Yulianto, T. Convective Cloud Model for Analyzing of Heavy Rainfall of Weather Extreme at Semarang Indonesia. Adv. Sci. Lett. 2017, 23, 6593–6597.

- Sharma, N.; Kumar Varma, A.; Liu, G. Percentage Occurrence of Global Tilted Deep Convective Clouds under Strong Vertical Wind Shear. Adv. Space Res. 2022, 69, 2433–2442.

- Jamaly, M.; Kleissl, J. Robust cloud motion estimation by spatio-temporal correlation analysis of irradiance data. Sol. Energy 2018, 159, 306–317.

- Dissawa, D.M.L.H.; Ekanayake, M.P.B.; Godaliyadda, G.M.R.I.; Ekanayake, J.B.; Agalgaonkar, A.P. Cloud motion tracking for short-term on-site cloud coverage prediction. In Proceedings of the 2017 Seventeenth International Conference on Advances in ICT for Emerging Regions (ICTer), Colombo, Sri Lanka, 6–9 September 2017; pp. 1–6.

- Shakya, S.; Kumar, S. Characterising and predicting the movement of clouds using fractional-order optical flow. IET Image Process. 2019, 13, 1375–1381.

- Son, Y.; Zhang, X.; Yoon, Y.; Cho, J.; Choi, S. LSTM–GAN Based Cloud Movement Prediction in Satellite Images for PV Forecast. J. Ambient Intell. Humaniz. Comput. 2023, 14, 12373–12386.

- Xu, Z.; Du, J.; Wang, J.; Jiang, C.; Ren, Y. Satellite image prediction relying on GAN and LSTM neural networks. In Proceedings of the ICC 2019-2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–6.

- Bo, M.; Ning, Y.; Chenggang, C.; Jing, C.; Peifeng, X. Cloud Position Forecasting Based on ConvLSTM Network. In Proceedings of the 2020 5th International Conference on Power and Renewable Energy (ICPRE), Shanghai, China, 12–14 September 2020; pp. 562–565.

- Hall, T.J.; Vonder Haar, T.H. The Diurnal Cycle of West Pacific Deep Convection and Its Relation to the Spatial and Temporal Variation of Tropical MCS. J. Atmos. Sci. 1999, 56, 3401–3415.

- Fu, R.; Del Genio, A.D.; Rossow, W.B. Behavior of Deep Convection Clouds in the Tropical Pacific Deduced from ISCCP Radiances. J. Clim. 1990, 3, 1129–1152.

- Vila, D.A.; Machado, L.A.T.; Laurent, H. Forecast and Tracking of Cloud Clusters Using Satellite Infrared Imagery: Methodology and Validation. Weather Forecast. 2008, 23, 233–244.

- Mecikalski, J.R.; Bedka, K.M. Forecasting Convective Initiation by Monitoring the Evolution of Moving Cumulus in Daytime GOES Imagery. Mon. Weather Rev. 2006, 134, 49–78.

- Jirak, I.L.; Cotton, W.R.; McAnelly, R.L. Satellite and Radar Survey of Mesoscale Convective System Development. Mon. Weather Rev. 2003, 131, 2428–2449.

- Sun, L.-X.; Zhuge, X.-Y.; Wang, Y. A Contour-Based Algorithm for Automated Detection of Overshooting Tops Using Satellite Infrared Imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 497–508.

- Mitra, A.K.; Parihar, S.; Peshin, S.K.; Bhatla, R.; Singh, R.S. Monitoring of Severe Weather Events Using RGB Scheme of INSAT-3D Satellite. J. Earth Syst. Sci. 2019, 128, 36.

- Welch, R.M.; Sengupta, S.K.; Goroch, A.K.; Rabindra, P.; Rangaraj, N.; Navar, M.S. Polar Cloud and Surface Classification Using AVHRR Imagery: An Intercomparison of Methods. J. Appl. Meteorol. 1992, 31, 405–420.

- Zinner, T.; Mannstein, H.; Tafferner, A. Cb-TRAM: Tracking and Monitoring Severe Convection from Onset over Rapid Development to Mature Phase Using Multi-Channel Meteosat-8 SEVIRI Data. Meteorol. Atmos. Phys. 2008, 101, 191–210.

- Bedka, K.; Brunner, J.; Dworak, R.; Feltz, W.; Otkin, J.; Greenwald, T. Objective Satellite-Based Detection of Overshooting Tops Using Infrared Window Channel Brightness Temperature Gradients. J. Appl. Meteorol. Climatol. 2010, 49, 181–202.

- Yang, J.; Zhang, Z.; Wei, C.; Lu, F.; Guo, Q. Introducing the New Generation of Chinese Geostationary Weather Satellites, Fengyun-4. Bull. Am. Meteorol. Soc. 2017, 98, 1637–1658.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015 Conference Proceedings, Munich, Germany, 5–9 October 2015.

- Lai, S.; Xu, L.; Liu, K.; Zhao, J. Recurrent convolutional neural networks for text classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; Volume 29.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Alom, M.Z.; Yakopcic, C.; Taha, T.M.; Asari, V.K. Nuclei Segmentation with Recurrent Residual Convolutional Neural Networks Based U-Net (R2U-Net). In Proceedings of the NAECON 2018-IEEE National Aerospace and Electronics Conference, Dayton, OH, USA, 23–26 July 2018; pp. 228–233.

- Liang, M.; Hu, X. Recurrent Convolutional Neural Network for Object Recognition. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3367–3375.

- Orhan, E.; Pitkow, X. Skip Connections Eliminate Singularities. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018; Volume 24, pp. 183–243.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity Mappings in Deep Residual Networks. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 630–645.

- Jain, A.K.; Farrokhnia, F. Unsupervised Texture Segmentation Using Gabor Filters. Pattern Recognit. 1991, 24, 1167–1186.

- Knowlton, K.; Harmon, L. Computer-Produced Grey Scales. Comput. Graph. Image Process. 1972, 1, 1–20.

- Bouguet, J.Y. Pyramidal implementation of the affine Lucas Kanade feature tracker description of the algorithm. Intel Corp. 2001, 1, 4.

- Haris, M.; Shakhnarovich, G.; Ukita, N. Deep back-projection networks for super-resolution. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1664–1673.

- Zhang, J.; Yang, Z.; Jia, Z.; Bai, C. Superresolution imaging with a deep multipath network for the reconstruction of satellite cloud images. Earth Space Sci. 2021, 10, 1029–1559.

- Guibas, J.; Mardani, M.; Li, Z.; Tao, A.; Anandkumar, A.; Catanzaro, B. Adaptive Fourier neural operators: Efficient token mixers for transformers. In Proceedings of the International Conference on Learning Representations, Virtual Event, 25–29 April 2022; pp. 1–15.

- Wang, R.; Teng, D.; Yu, W. Improvement and Application of GAN Models for Time Series Image Prediction: A Case Study of Time Series Satellite Nephograms. Res. Sq. 2022, 29, 403–417.

- Zhou, J.; Xiang, J.; Huang, S. Classification and Prediction of Typhoon Levels by Satellite Cloud Pictures through GC–LSTM Deep Learning Model. Sensors 2020, 20, 5132.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Tao, R.; Zhang, Y.; Wang, L.; Cai, P.; Tan, H. Detection of precipitation cloud over the Tibet based on the improved U-Net. Comput. Mater. Contin. 2020, 5, 115–118.

- Heming, J.T.; Prates, F.; Bender, M.A.; Bowyer, R.; Cangialosi, J.; Caroff, P.; Coleman, T.; Doyle, J.D.; Dube, A.; Faure, G. Review of recent progress in tropical cyclone track forecasting and expression of uncertainties. Trop. Cyclone Res. Rev. 2019, 8, 181–218.

| Channel Type | Central Wavelength | Spectral Bandwidth | Spatial Resolution | Main Applications |
|---|---|---|---|---|
| VIS/NIR | 0.47 µm | 0.45–0.49 µm | 1 km | Aerosol, visibility |
| | 0.65 µm | 0.55–0.75 µm | 0.5 km | Fog, clouds |
| | 0.825 µm | 0.75–0.90 µm | 1 km | Aerosol, vegetation |
| Shortwave IR | 1.375 µm | 1.36–1.39 µm | 2 km | Cirrus |
| | 1.61 µm | 1.58–1.64 µm | 2 km | Cloud, snow |
| | 2.25 µm | 2.1–2.35 µm | 2 km | Cloud phase, aerosol, vegetation |
| Midwave IR | 3.75 µm | 3.5–4.0 µm | 2 km | Clouds, fire, moisture, snow |
| | 3.75 µm | 3.5–4.0 µm | 4 km | Land surface |
| Water vapor | 6.25 µm | 5.8–6.7 µm | 2 km | Upper-level WV |
| | 7.1 µm | 6.9–7.3 µm | 4 km | Midlevel WV |
| Longwave IR | 8.5 µm | 8.0–9.0 µm | 4 km | Volcanic ash, cloud-top phase |
| | 10.7 µm | 10.3–11.3 µm | 4 km | SST, LST |
| | 12.0 µm | 11.5–12.5 µm | 4 km | Clouds, low-level WV |
| | 13.5 µm | 13.2–13.8 µm | 4 km | Clouds, air temperature |

| Literature | Satellite | Model | PSNR (dB) |
|---|---|---|---|
| Bouguet [30] | / | Optical flow (LK) | 24.40 |
| Haris et al. [31] | / | DBPN | 32.36 |
| Zhang et al. [32] | Himawari-8 | SRCloudNet | 32.43 |
| Guibas et al. [33] | / | AFNO | 25.22 |
| Wang et al. [34] | FY-4A/AGRI | GAN+Mish+Huber | 31.49 |
| Our model | FY-4A/AGRI | ARRU-Net | 35.52 |
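
The table above compares models by peak signal-to-noise ratio (PSNR). As a reminder of how this metric is conventionally computed, the following minimal sketch uses the standard definition, PSNR = 10·log10(peak² / MSE). The function name `psnr`, the nested-list image layout, and the default peak value of 1.0 (which assumes images normalized to [0, 1]) are illustrative assumptions, not details taken from the paper.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[Sequence[float]],
         predicted: Sequence[Sequence[float]],
         peak: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized images.

    `peak` is the largest possible pixel value; 1.0 assumes images
    normalized to [0, 1] (an assumption made for this sketch).
    """
    if len(reference) != len(predicted):
        raise ValueError("images must have the same number of rows")
    squared_error = 0.0
    count = 0
    for ref_row, pred_row in zip(reference, predicted):
        if len(ref_row) != len(pred_row):
            raise ValueError("rows must have the same length")
        for ref, pred in zip(ref_row, pred_row):
            squared_error += (ref - pred) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# Toy example: every predicted pixel is off by 0.1, so MSE is about 0.01
# and the PSNR is about 20 dB.
observed = [[0.0, 0.5], [0.9, 0.25]]
forecast = [[0.1, 0.6], [0.8, 0.35]]
print(round(psnr(observed, forecast), 2))
```

A higher PSNR means a smaller mean squared error relative to the dynamic range, which is why larger values in the table indicate predictions closer to the observed cloud images.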

| Literature | Satellite | Model | Accuracy |
|---|---|---|---|
| Zhou et al. [35] | Himawari-8 | GCN-LSTM | 91.51% |
| Badrinarayanan et al. [36] | / | SegNet | 86.80% |
| Tao et al. [37] | Himawari-8 | GC–LSTM | 95.12% |
| Heming et al. [38] | / | CNN + SVM/LSTM | 91.67% |
| Our model | FY-4A/AGRI | ARRU-Net | 97.62% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Q.; Yin, X.; Li, Y.; Zheng, P.; Chen, M.; Xu, Q. Recognition of Severe Convective Cloud Based on the Cloud Image Prediction Sequence from FY-4A. Remote Sens. 2023, 15, 4612. https://doi.org/10.3390/rs15184612
