1. Introduction

Object detection plays a crucial role in remote sensing image (RSI) processing, enabling the automated extraction of objects of interest from RSIs, including buildings, roads, vehicles, and more. In the past, manual visual interpretation was the predominant method for acquiring geographic information. However, with the ongoing advancements in remote sensing technology and the continuous innovation of image-processing algorithms, object detection for RSIs has become one of the essential means of obtaining large-scale geographic information efficiently. Object detection in RSIs is now widely applied across diverse domains, including urban planning [1], environmental monitoring [2], agricultural production [3], and other fields, and it holds promising application prospects.

Traditional object-detection methods, such as scale-invariant feature transform (SIFT) [4], support vector machine (SVM) [5], and histogram of oriented gradients (HOG) [6], are limited by their reliance on manual feature extraction and classifier construction. With the development of deep learning, object-detection methods can automatically learn features and construct efficient classifiers, resulting in higher accuracy and faster processing speeds. For example, Edge YOLO [7] can run in real time on edge devices while maintaining high accuracy. At present, object detectors can be categorized into two main types: two-stage object detectors and one-stage object detectors. Two-stage detectors are represented by Faster R-CNN [8], Mask R-CNN [9], Cascade R-CNN [10], etc. Faster R-CNN is among the early pioneers of two-stage object detectors, distinguished by achieving both high detection accuracy and precise localization. It introduced the region proposal network (RPN) to generate object candidate regions and then performs object classification and localization on these candidate regions. Mask R-CNN builds upon Faster R-CNN and is able not only to detect object location and category, but also to generate accurate segmentation masks of objects, which makes it perform well in image segmentation tasks. Cascade R-CNN is a two-stage detector proposed for small object detection; it significantly enhances the detection accuracy of small objects by means of cascade classifiers. Compared with other two-stage object detectors, Cascade R-CNN has higher detection accuracy for small objects, but slightly decreased detection accuracy for large objects.

Although two-stage detectors excel in detection accuracy and positioning accuracy, their computation speed is relatively slow. Moreover, training a two-stage object-detection model requires substantial data, and its efficiency diminishes when faced with a high volume of objects to detect. This raises both the data acquisition cost and the time cost. One-stage object-detection models, represented by SSD [11], RetinaNet [12], and you only look once (YOLO) [13], offer high speed and are applicable to scenarios such as real-time detection. With the continuous development of algorithms, their detection accuracy has gradually improved. However, one-stage detection models suffer from low positioning accuracy and poor robustness to complex backgrounds and occlusion, and these problems may degrade object detection in remote sensing images. In 2016, Redmon et al. proposed YOLOv1 [13], which is renowned for its fast detection speed but has low positioning accuracy. In 2017, Redmon et al. introduced YOLOv2 [14], which used DarkNet-19 as its backbone. Despite achieving higher detection accuracy, it still performs poorly on small targets. In 2018, Redmon et al. introduced YOLOv3 [15], which used DarkNet-53 with residual connections to extract features from images. In 2020, Bochkovskiy et al. proposed YOLOv4 [16], which embedded the cross-stage partial (CSP) module into DarkNet-53, improving the accuracy and speed. Also in 2020, YOLOv5 was proposed with the Focus architecture, which sped up training and improved the detection accuracy. In 2022, Wang et al. proposed YOLOv7 [17], which maintained a fast running speed and small memory consumption while achieving strong detection accuracy. Although the YOLO series models have become some of the representative algorithms for object detection, single-stage object-detection methods still have certain limitations on large images such as RSIs.

In recent years, researchers have achieved notable advancements in the detection of small [18] or rotated objects [19]. Yang et al. [20] proposed the sampling fusion network (SF-Net) to optimize for dense and small targets. Fu et al. [21] constructed a semantic module and a spatial layout module and then fed both into a contextual reasoning module to integrate contextual information about objects and their relationships. Han et al. [22] proposed the rotation-equivariant detector (ReDet), which explicitly encodes rotation equivariance and rotation invariance to predict orientation accurately. Li et al. [23] proposed a model named OrientedRepPoints, together with a novel adaptive point learning quality assessment and sample allocation method, and achieved higher accuracy by precisely predicting orientation. However, while many researchers concentrate on challenges such as small object detection or the detection of rotated objects, they often overlook other common issues in RSI object detection. These issues include enhancing the detection of multi-scale objects, identifying objects concealed in shadows or with colors resembling the ground, and detecting objects with only partial features exposed in RSIs, as illustrated in Figure 1.

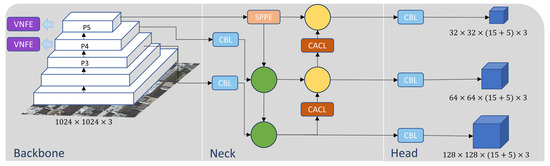

Considering the multi-faceted nature and complexity of current RSI problems, this paper proposes an enhanced detector, MHLDet, to address the issues mentioned above. Firstly, the VNFE is designed to alleviate the impact of the surroundings and obtain more robust feature information. It also concentrates more effectively on crucial regions, which addresses the issue of partial feature detection. Secondly, we propose the SPPE, which uses large receptive fields to better fuse multi-scale features and enhance the network's ability to detect targets of various scales. Finally, we introduce the CACL to preserve more feature information by minimizing the information loss during downsampling. As a result, this model holds an advantage in detecting objects with colors close to the ground and in detecting multi-scale targets. In addition, it achieves higher precision and is more lightweight than existing methods. The primary contributions of this paper are outlined as follows:

This paper proposes a novel method for object detection in RSIs, which achieves a better balance between model size and accuracy.

We propose the VNFE module for more effective feature extraction and integrate the SimAM attention mechanism into the backbone to focus on the key features. The VNFE module has fewer parameters, so it reduces the computational cost and makes the model lighter. In addition, the VNFE module can enhance the generalization ability of the detector, as it reduces the risk of overfitting while maintaining high accuracy.

This paper introduces a novel SPPE module by optimizing the SPPCSPC of YOLOv7-Tiny [17]. The SPPE is designed to enhance detection accuracy for medium- to large-sized objects while preserving the original advantages. As a result, it effectively fulfills the demands for detecting objects of various scales and addresses the application requirements.

The CACL module is designed to minimize the loss of feature information during the downsampling process in the neck network. This module can focus on feature regions with higher discrimination by performing attention calculations on the feature map and enriching the semantic information by fusing feature elements. Consequently, it improves the accuracy and robustness of the model.

The remainder of this study is structured as follows: Section 2 reviews related work on lightweight object detection algorithms and outlines the details of the proposed model. Section 3 introduces the implementation details of the experiments conducted on the SIMD and UCAS-AOD datasets and presents the results of the ablation experiments. Section 4 carries out the discussion. Finally, Section 5 provides the conclusions of the study.

3. Results

3.1. Datasets

We conducted the experiments using the SIMD [40] and UCAS-AOD [41] datasets to evaluate the effectiveness of the proposed method. Figure 6 shows some examples from the datasets used in this paper.

The SIMD dataset is a recently released remote sensing dataset characterized by its high resolution and the inclusion of multi-scale imagery. It contains 5000 high-resolution images covering 15 categories: Car, Truck, Van, Long Vehicle, Bus, Airliner, Propeller Aircraft, Trainer Aircraft, Chartered Aircraft, Fighter Aircraft, Others, Stair Truck, Pushback Truck, Helicopter, and Boat. There are a total of 45,096 instances, and the image size is 1024 × 768 pixels. We used 4000 images for training and 1000 images for validation, following the division of the original dataset.

The UCAS-AOD dataset is specifically designed for the detection of airplanes and cars. The image size is 1280 × 659 pixels, and it contains two categories: airplane and car. The objects in the images are evenly distributed in terms of their orientations. In this experiment, we removed the negative example images that did not contain any object instances and kept only the images that contained the two classes of airplane and car. The training set, validation set, and test set of the UCAS-AOD dataset were randomly divided with a ratio of 7:2:1.
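A random 7:2:1 division such as the one described for UCAS-AOD can be sketched as follows. This is a minimal illustration only: the function name `split_dataset`, the fixed seed, and the file names are assumptions, not the procedure actually used for the experiments.

```python
import random


def split_dataset(items, ratios=(7, 2, 1), seed=0):
    """Randomly split items into train/val/test subsets by integer ratios."""
    items = list(items)
    rng = random.Random(seed)  # fixed seed (an assumption) so the split is repeatable
    rng.shuffle(items)
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # any rounding remainder goes to the test set
    return train, val, test


if __name__ == "__main__":
    names = [f"img_{i:04d}.png" for i in range(1000)]  # hypothetical file names
    train, val, test = split_dataset(names)
    print(len(train), len(val), len(test))
```

Shuffling once and slicing keeps the three subsets disjoint by construction, which is the property that matters for a fair evaluation.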

3.2. Evaluation Criteria

To evaluate the performance of our proposed detector, we used several widely used evaluation metrics: the precision ($P$), recall ($R$), average precision ($AP$), and mean average precision ($mAP$). The formulas for precision and recall are as follows:

$$P = \frac{TP}{TP + FP}$$

$$R = \frac{TP}{TP + FN}$$

where $TP$ (true positive) is the number of positive samples that were correctly predicted as positive, $FP$ (false positive) is the number of negative samples that were incorrectly predicted as positive, and $FN$ (false negative) is the number of positive samples that were incorrectly predicted as negative.

$AP$ is the area under the precision–recall curve (P–R curve) and ranges from 0 to 1. $mAP$ is a commonly used comprehensive evaluation metric in object detection, which considers the precision and recall of all categories. We calculated the area under the P–R curve for each class and then averaged the $AP$ across all classes to obtain the $mAP$. The $mAP$ provides an overall evaluation of model performance and can be used to compare the strengths and weaknesses of different models.

$$AP = \int_0^1 P(R)\,dR$$

$$mAP = \frac{1}{n}\sum_{i=1}^{n} AP_i$$

where $n$ represents the total number of categories in the detection task.
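The metric definitions above can be illustrated with a short sketch. It assumes that detections have already been matched to ground truth, so each detection carries a confidence score and a true-positive flag, and it approximates the area under the P–R curve with the common all-point interpolation. The function names are illustrative and do not correspond to any specific evaluation toolkit.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from raw counts (0.0 when a denominator is zero)."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r


def average_precision(scored_hits, num_gt):
    """Area under the P-R curve for one class.

    scored_hits: list of (confidence, is_true_positive) pairs, one per detection.
    num_gt: number of ground-truth objects of this class (assumed > 0).
    """
    ranked = sorted(scored_hits, key=lambda x: x[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, hit in ranked:
        if hit:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Replace each precision by the maximum precision at any higher recall,
    # which makes the curve monotonically non-increasing.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p  # rectangle between consecutive recall values
        prev_r = r
    return ap


def mean_average_precision(ap_per_class):
    """mAP: the mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)
```

For example, three detections ranked as hit, miss, hit against two ground-truth objects give an AP of 5/6 under this interpolation.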

The intersection over union ($IoU$) is a metric used to measure the degree of overlap between the predicted bounding box ($B_p$) and the true bounding box ($B_{gt}$) in the object-detection task. It is defined as the ratio between the area of the intersection of the predicted bounding box and the true bounding box and the area of their union. The calculation formula is as follows:

$$IoU = \frac{|B_p \cap B_{gt}|}{|B_p \cup B_{gt}|}$$
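As a concrete illustration of the intersection-over-union ratio, the following sketch computes it for two axis-aligned boxes. The corner format `(x1, y1, x2, y2)` and the function name `iou` are assumptions made for the example.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2) with x1 < x2, y1 < y2."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region, clamped at zero when boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    # Two 2x2 boxes overlapping in a 1x1 square: intersection 1, union 7.
    print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```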

In addition, we followed the COCO evaluation metrics [42], including the mAP ($IoU$ threshold = 0.5), $AP_{50:95}$, and $AP_{75}$, as well as $AP_S$ (for small objects, area < $32^2$), $AP_M$ (for medium objects, $32^2$ < area < $96^2$), and $AP_L$ (for large objects, $96^2$ < area).
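The size-based grouping used by the small, medium, and large object metrics can be sketched as a simple area test on pixel dimensions. The function name `size_bucket` is illustrative, and assigning an area that falls exactly on a threshold to the larger group is a choice made here for the example, since the inequalities above are strict.

```python
SMALL_MAX_AREA = 32 ** 2   # 1024 square pixels
MEDIUM_MAX_AREA = 96 ** 2  # 9216 square pixels


def size_bucket(width, height):
    """Classify an object as small, medium, or large by its pixel area."""
    area = width * height
    if area < SMALL_MAX_AREA:
        return "small"
    if area < MEDIUM_MAX_AREA:
        return "medium"
    return "large"


if __name__ == "__main__":
    for w, h in [(20, 20), (50, 50), (100, 100)]:
        print((w, h), size_bucket(w, h))
```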

These metrics can help us understand the efficiency of the model in practical applications. Combining these evaluation metrics, we can comprehensively assess the performance of our proposed method in regard to accuracy, efficiency, and practicality and compare it with current methods.

3.3. Parameter Setting

The experimental environment was configured as follows: the computer was equipped with an NVIDIA GeForce RTX 3070 graphics card (8 GB); the CPU was an Intel Core i7-10700K; the operating system was Ubuntu 20.04.4 LTS. We used the PyTorch deep learning framework (Version 1.13.1) for model development and training, with Python 3.8 and CUDA 11.4.

The input image size was

; the batch size was four; the optimizer was stochastic gradient descent (SGD); the momentum was 0.937; the weight decay was set to 0.0005. To improve the convergence and training efficiency of the model, we performed three epochs of warm-up training with the warm-up momentum initially set to 0.8. The initial training parameters are shown in Table 2.
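The warm-up setting can be illustrated with a small schedule function. The sketch assumes that the momentum is interpolated linearly per iteration from the warm-up value to the final value, as is common in YOLO-family training scripts; the exact schedule used in the experiments is not stated here. The figure of 1000 iterations per epoch is only an example, corresponding to 4000 training images at a batch size of four.

```python
WARMUP_EPOCHS = 3
WARMUP_MOMENTUM = 0.8   # momentum at the start of warm-up
FINAL_MOMENTUM = 0.937  # momentum after warm-up


def momentum_at(iteration, iters_per_epoch):
    """SGD momentum at a given iteration, assuming a linear warm-up ramp."""
    warmup_iters = WARMUP_EPOCHS * iters_per_epoch
    if iteration >= warmup_iters:
        return FINAL_MOMENTUM
    frac = iteration / warmup_iters  # fraction of the warm-up completed
    return WARMUP_MOMENTUM + frac * (FINAL_MOMENTUM - WARMUP_MOMENTUM)


if __name__ == "__main__":
    iters_per_epoch = 4000 // 4  # example: 4000 images, batch size 4
    for it in (0, 1500, 3000, 5000):
        print(it, round(momentum_at(it, iters_per_epoch), 4))
```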

3.4. Experimental Results

3.4.1. Experimental Results on the SIMD Dataset

This paper took YOLOv7-Tiny [17] as the baseline and conducted experiments on the SIMD dataset to validate the effectiveness of our method and compare it with other one-stage detectors. The anchor-free detectors for comparison were FCOS [25], TOOD [43], PP-PicoDet-m [44], YOLOX-S [45], YOLOv6-N, YOLOv6-S [46], and YOLOv8-N [47], and the anchor-based detectors were RetinaNet [12], YOLOv5-N, YOLOv5-S [48], YOLO-HR-N [49], and YOLOv7-Tiny. We did not compare with two-stage detectors because our goal was a lightweight model for scenarios with limited computational resources; our approach therefore focused on reducing the complexity and computational overhead of the model while maintaining high performance relative to traditional two-stage models. Our experiments on the SIMD dataset used the K-Means++ [50] algorithm to generate new anchors, and the experimental data obtained with the new anchors are shown in Table 3. In addition, to display the results more directly, this paper assigned an abbreviated name (AN) to each category in the dataset, as shown in Table 4.
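Anchor generation with K-Means++ can be sketched as clustering the (width, height) pairs of the ground-truth boxes. The following is a minimal pure-Python illustration under stated assumptions: it uses squared Euclidean distance, whereas YOLO-style anchor clustering often uses an IoU-based distance, and the function name and parameters are hypothetical rather than those used in the experiments.

```python
import random


def kmeans_pp_anchors(boxes, k, iters=50, seed=0):
    """Cluster (width, height) pairs into k anchors, sorted by area."""
    rng = random.Random(seed)

    def dist2(a, b):
        return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2

    # K-Means++ seeding: each new center is sampled with probability
    # proportional to its squared distance from the nearest existing center.
    centers = [rng.choice(boxes)]
    while len(centers) < k:
        d2 = [min(dist2(b, c) for c in centers) for b in boxes]
        total = sum(d2)
        if total == 0:  # every box coincides with an existing center
            centers.append(rng.choice(boxes))
            continue
        r = rng.random() * total
        acc = 0.0
        for b, d in zip(boxes, d2):
            acc += d
            if acc > r:
                centers.append(b)
                break
        else:
            centers.append(boxes[-1])  # guard against floating-point rounding

    # Standard Lloyd iterations: assign boxes to centers, then recompute means.
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for b in boxes:
            j = min(range(k), key=lambda i: dist2(b, centers[i]))
            clusters[j].append(b)
        new_centers = []
        for c, members in zip(centers, clusters):
            if members:
                w = sum(m[0] for m in members) / len(members)
                h = sum(m[1] for m in members) / len(members)
                new_centers.append((w, h))
            else:
                new_centers.append(c)  # keep an empty cluster's center unchanged
        if new_centers == centers:
            break
        centers = new_centers
    return sorted(centers, key=lambda c: c[0] * c[1])
```

Sorting the result by area makes it straightforward to assign the smaller anchors to higher-resolution detection heads and the larger ones to lower-resolution heads.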

Table 5 shows the detection accuracy results of our detector and other detectors in various categories on the SIMD dataset. It is evident that our method achieved optimal accuracy across the majority of categories, with the mAP reaching 84.7%. As shown in the table, our mAP was 2.4% higher than YOLOv7-Tiny. Additionally, it was nearly 10% higher than YOLOv5-N.

There are 15 categories in the SIMD dataset, and our detector achieved the best accuracy in most of them. In dense scenes, such as Car (CR) and Long Vehicle (LV), MHLDet achieved the best performance. It also improved significantly over the other models on medium- to large-sized targets such as Airliner (ALR), Chartered Aircraft (CA), and Fighter Aircraft (FA). The improvement can be attributed to three factors: the proposed VNFE module and the embedded SimAM attention mechanism effectively leverage the feature information within the backbone and enhance the feature representation; the SPPE module expands the receptive fields, which favors the detection of medium- to large-sized objects; and the CACL module reduces the feature loss during downsampling. The experimental results further validated the effectiveness and robustness of the proposed method.

Table 6 shows the performance comparison of MHLDet with other one-stage methods.

On the SIMD dataset, our MHLDet detector achieved an 84.7% mAP with 5.28 M model parameters. As shown in Figure 7, the proposed method outperformed all compared one-stage methods, achieving the highest detection accuracy with fewer parameters, which makes it well suited to resource-constrained environments. Compared with the baseline, the parameters of the proposed model decreased by 12.7%, and the mAP increased by 2.4%; the $AP_{50:95}$ and $AP_{75}$ also increased by 2.9% and 2.6%, respectively. On medium- to large-sized objects, $AP_M$ and $AP_L$ increased by 2.4% and 1.3%, respectively, compared to the baseline. In particular, the proposed model achieved the highest scores in the mAP, $AP_{50:95}$, $AP_{75}$, and small-, medium-, and large-scale AP values while keeping the parameter count low. Compared with the anchor-free detectors PP-PicoDet-m, YOLOv6-N, and YOLOv8-N, which have slightly fewer parameters than MHLDet, our mAP was higher by 11.8%, 10.3%, and 3.1%, respectively. Among the anchor-based detectors, YOLOv5-N had the fewest parameters, but we were 9% and 8.9% higher in the mAP and

, respectively. In the aspect of multi-scale object detection, our

and

were 12.3% and 10.1% higher than their counterparts.

By comparing the $AP_S$, $AP_M$, and $AP_L$ of each model, we found that the gains of our model mainly came from medium objects and large objects. Although the accuracy on medium objects was the same as that of YOLOv5-S, the accuracy at the other two scales improved by 8.9% and 4.1%, respectively. Although the MHLDet model has 3.5 M more parameters than YOLOv5-N, it still qualifies as a lightweight model.

Figure 8 depicts a visual comparison of the heatmaps of the baseline and our proposed method; our method excels in predicting objects with only partial features and objects whose color is close to that of the ground surface. Specifically, the heatmap colors transition from blue to red, representing the probability of target presence increasing from low to high. Blue areas indicate the likely absence of targets, while red regions signify a high likelihood of target presence. Figure 8(b1–b3) are the results of YOLOv7-Tiny, and Figure 8(c1–c3) are the results of our proposed model. From Figure 8(b1,c1), it can be observed that our model also produced a heatmap response on the ship just below, where only a portion of the features was exposed. As shown in Figure 8(b2,c2), YOLOv7-Tiny overlooked the middle vehicle, whereas our model correctly highlighted it. From Figure 8(b3,c3), it is evident that our model provided more accurate and continuous responses for vehicles in shadow. As a result, our model can more accurately depict the distribution of the objects. The visualized detection results are shown in Figure 9.

To visually illustrate the improvement achieved by our proposed model, this paper presents the detection results for some typical scenes. Figure 9(b1–b6) are the results of YOLOv7-Tiny, and Figure 9(c1–c6) are the results of our proposed model. From Figure 9(b1,c1), our model performed significantly better than YOLOv7-Tiny in detecting small objects. From Figure 9(b2–b4,c2–c4), it can be observed that our model detected more objects whose color closely matched the ground. As shown in Figure 9(b5,c5), our model detected the object that exhibited only partial features. In addition, our model detected more of the densely arranged objects in Figure 9(b6,c6).

3.4.2. Experimental Results on the UCAS-AOD Dataset

Table 7 shows the accuracy comparison of our method with other methods on the UCAS-AOD dataset. It is evident from the table that our proposed method achieved the highest mAP among all the compared methods. Our method demonstrated a 4.6% improvement over the second-best method for small objects and a 2% improvement for large objects.

Based on the data presented in Table 7, our proposed method improved at all scales compared to other methods, and its strong performance relative to current state-of-the-art methods suggests that it is suitable for the vast majority of object-detection tasks. Figure 10 shows the visualization results on the UCAS-AOD dataset, further supporting the effectiveness of our proposed method.

3.5. Ablation Study

To verify the effectiveness of our proposed detector, we conducted ablation studies on the SIMD dataset using the same hyperparameters and parameter settings for each module to ensure a fair and unbiased comparison. We used the precision, recall, mAP, number of parameters (Params), and floating point operations (FLOPs) to verify the effectiveness of the proposed modules. Table 8 and Figure 11 show that each of our proposed modules improved on the baseline.

It can be observed from the second and third rows of Table 8 that, with the help of SimAM, the mAP improved from 82.3% to 83.6%. Furthermore, with the help of the VNFE, the mAP increased to 83.7%, and the Params and FLOPs decreased by 18% and 10.5%, respectively. Before these two components were introduced, the backbone lacked semantic information, which resulted in less prominent features. The addition of SimAM enhanced the features of the objects to be detected, while the VNFE reduced the parameters used for feature extraction, which explains why the mAP improved even as the parameter count fell.

To verify the SPPE, we replaced the original SPPCSPC with the SPPE while keeping the rest of the model unchanged. As shown in the fourth row of Table 8, the mAP improved from 82.3% to 83.7%. In the SPPE, we changed the connection mode of the max-pooling layers and increased the receptive fields from the original 5, 9, and 13 to 5, 13, and 25, so as to enhance the model's detection performance for multi-scale objects.
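The receptive fields 5, 13, and 25 are consistent with connecting stride-1 max-pooling layers of kernel sizes 5, 9, and 13 in series rather than in parallel, since each layer in a chain adds k − 1 to the receptive field. This reading is an assumption; the text states only that the connection mode was changed. The sketch below shows the arithmetic and checks it on a one-dimensional signal with hypothetical helper functions.

```python
import random


def cascaded_receptive_fields(kernel_sizes):
    """Effective receptive field after each stride-1 pooling layer applied in series."""
    fields, rf = [], 1
    for k in kernel_sizes:
        rf += k - 1  # a stride-1 layer with kernel k widens the field by k - 1
        fields.append(rf)
    return fields


def max_pool_1d(values, k):
    """Stride-1 max pooling over an odd window k, clipping the window at the borders."""
    half = k // 2
    n = len(values)
    return [max(values[max(0, i - half):min(n, i + half + 1)]) for i in range(n)]


if __name__ == "__main__":
    print(cascaded_receptive_fields([5, 9, 13]))
    rng = random.Random(0)
    signal = [rng.random() for _ in range(64)]
    serial = max_pool_1d(max_pool_1d(signal, 5), 9)  # kernel 5 followed by kernel 9
    single = max_pool_1d(signal, 13)                 # one kernel-13 pooling
    print(serial == single)
```

Under this assumption, the serial arrangement reaches a receptive field of 25 without needing a 25-wide pooling kernel.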

The validity of the CACL module can be seen from the fifth row of Table 8: the mAP increased from 82.3% to 83.4%. The CACL module effectively utilized the global information and preserved the crucial details in the feature map during downsampling, which enhanced the robustness of the model.

From the sixth and seventh rows of Table 8, it can be observed that stacking multiple modules yielded progressively larger gains, reaching 84.1% and 84.4% and surpassing the results obtained with any single module. Moreover, while the mAP continued to rise, the parameter count remained within an acceptable range.

Finally, the eighth row of Table 8 shows that applying all the improved modules together yielded a higher mAP than any individual module alone. The full model balanced precision and light weight, enabling higher accuracy even with limited computing resources.