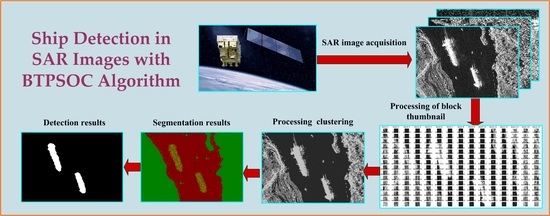

Ship Target Detection Method in Synthetic Aperture Radar Images Based on Block Thumbnail Particle Swarm Optimization Clustering

Abstract

1. Introduction

2. Relevant Theory and Work

2.1. The Fuzzy C-Means Clustering Algorithm
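As a point of reference for this section, the following is a minimal sketch of the standard fuzzy C-means (FCM) iteration in its textbook form, applied to a 1-D array of intensities. It is not taken from the article: the function name `fuzzy_c_means`, its parameters, and the default fuzzifier `m = 2` are illustrative assumptions, and the variant used by the authors may differ.

```python
import numpy as np


def fuzzy_c_means(x, n_clusters=2, m=2.0, max_iter=100, tol=1e-5, seed=0):
    """Textbook FCM on 1-D samples (hypothetical helper, not the paper's code).

    Returns (centers, u), where u[i, k] is the membership of sample i in
    cluster k and every row of u sums to 1.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float).reshape(-1, 1)            # shape (n, 1)

    # Random initial memberships, normalised per sample.
    u = rng.random((x.shape[0], n_clusters))
    u /= u.sum(axis=1, keepdims=True)

    for _ in range(max_iter):
        # Centre update: mean of the samples weighted by u^m.
        um = u ** m
        centers = (um.T @ x) / um.sum(axis=0)[:, None]       # shape (c, 1)

        # Membership update: u_ik proportional to d_ik^(-2 / (m - 1)).
        dist = np.abs(x - centers.T) + 1e-12                 # shape (n, c)
        inv = dist ** (-2.0 / (m - 1.0))
        new_u = inv / inv.sum(axis=1, keepdims=True)

        # Stop once the memberships no longer change appreciably.
        converged = np.max(np.abs(new_u - u)) < tol
        u = new_u
        if converged:
            break

    return centers.ravel(), u


# Toy data (assumed): a dark background group and a smaller bright group.
demo_rng = np.random.default_rng(1)
pixels = np.concatenate([demo_rng.normal(20, 5, 200), demo_rng.normal(200, 10, 50)])
centers, u = fuzzy_c_means(pixels, n_clusters=2)
labels = u.argmax(axis=1)   # hard labels from the largest membership
```

Taking the arg-max of the memberships, as in the last line, is one common way to turn the fuzzy partition into a hard segmentation.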

2.2. The Particle Swarm Optimization Algorithm
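For orientation, the sketch below implements the canonical global-best particle swarm optimization (PSO) update with an inertia weight `w` and learning factors `c1` and `c2`. It is a generic illustration rather than the article's algorithm: the function name `pso_minimize`, the bound handling by clipping, and the default values (`w = 0.7`, `c1 = c2 = 1.5`) are assumptions chosen for a stable toy run, not the settings studied in the article.

```python
import numpy as np


def pso_minimize(f, bounds, n_particles=30, n_iter=100,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Global-best PSO minimiser (hypothetical helper, not the paper's code)."""
    rng = np.random.default_rng(seed)
    lo, hi = np.asarray(bounds, dtype=float).T   # per-dimension lower/upper bounds
    dim = lo.size

    # Random initial positions inside the bounds, zero initial velocity.
    pos = rng.uniform(lo, hi, size=(n_particles, dim))
    vel = np.zeros((n_particles, dim))

    # Personal bests and the swarm-wide best seen so far.
    pbest = pos.copy()
    pbest_val = np.array([f(p) for p in pos])
    g = np.argmin(pbest_val)
    gbest, gbest_val = pbest[g].copy(), pbest_val[g]

    for _ in range(n_iter):
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        # Velocity: inertia + pull toward personal best + pull toward global best.
        vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
        pos = np.clip(pos + vel, lo, hi)

        val = np.array([f(p) for p in pos])
        improved = val < pbest_val
        pbest[improved] = pos[improved]
        pbest_val[improved] = val[improved]

        g = np.argmin(pbest_val)
        if pbest_val[g] < gbest_val:
            gbest, gbest_val = pbest[g].copy(), pbest_val[g]

    return gbest, gbest_val


# Toy objective (assumed): shifted sphere function with its minimum at (1, 1).
best_pos, best_val = pso_minimize(lambda p: float(np.sum((p - 1.0) ** 2)),
                                  bounds=[(-5, 5), (-5, 5)])
```

In a clustering setting, the objective `f` would typically score a candidate set of cluster centres encoded in each particle; that encoding is left out here because the article's particle representation is not reproduced in this section.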

3. Principle Description of the BTPSOC Algorithm

3.1. Generating Thumbnails

3.2. Thumbnail Pixel Segmentation and Optimization

3.2.1. Particle Representation

3.2.2. Fitness Function

3.3. Non-Thumbnail Pixel Segmentation

4. Experimental Results and Analysis

4.1. Experimental Data and Parameter Setting

4.1.1. Description of Experimental Data

4.1.2. Parameter Analysis and Setting

4.2. SAR Image Experimental Results of the SSDD Dataset

4.2.1. Evaluation Index of Experimental Results

4.2.2. Segmentation Results of Different Methods

4.2.3. Quantitative Analysis and Comparison

4.2.4. Comparison with CNN Methods

4.2.5. Comparison with the CFAR Method

4.3. Experimental Results of the SSSD Dataset Images

4.4. Complexity and Robustness Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| 10 | 20 | 30 | 40 | 50 | 60 | 70 | 80 | 90 | 100 |
|---|---|---|---|---|---|---|---|---|---|
| 0.754 | 0.762 | 0.768 | 0.771 | 0.798 | 0.851 | 0.813 | 0.810 | 0.792 | 0.782 |

| 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1.0 | 1.1 | 1.2 |
|---|---|---|---|---|---|---|---|---|
| 0.812 | 0.816 | 0.820 | 0.821 | 0.832 | 0.840 | 0.848 | 0.839 | 0.830 |

| c2 \ c1 | 0.8 | 1.2 | 1.6 | 2.0 | 2.4 |
|---|---|---|---|---|---|
| 0.8 | 0.792 | 0.795 | 0.804 | 0.803 | 0.786 |
| 1.2 | 0.798 | 0.818 | 0.816 | 0.812 | 0.801 |
| 1.6 | 0.811 | 0.813 | 0.831 | 0.824 | 0.810 |
| 2.0 | 0.804 | 0.825 | 0.831 | 0.848 | 0.825 |
| 2.4 | 0.798 | 0.810 | 0.816 | 0.812 | 0.826 |

| Algorithm | Mean (%) | Standard Deviation (%) |
|---|---|---|
| FCM | 73.52 | 3.26 |
| PSO | 72.15 | 3.54 |
| FKPFCM | 81.43 | 2.57 |
| BTPSOC | 89.51 | 1.52 |

| Methods | REC (%) | ACC (%) |
|---|---|---|
| FCN | 74.52 | 92.56 |
| U-Net | 75.15 | 91.88 |
| BTPSOC | 76.00 | 86.35 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Huang, S.; Zhang, O.; Chen, Q. Ship Target Detection Method in Synthetic Aperture Radar Images Based on Block Thumbnail Particle Swarm Optimization Clustering. Remote Sens. 2023, 15, 4972. https://doi.org/10.3390/rs15204972