A Method of Chestnut Forest Identification Based on Time Series and Key Phenology from Sentinel-2

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Data Source and Processing

2.2.1. Sentinel-2 Time Series Images

2.2.2. DEM Data

2.2.3. Field Survey Sample Data

2.2.4. Accuracy Verification Data

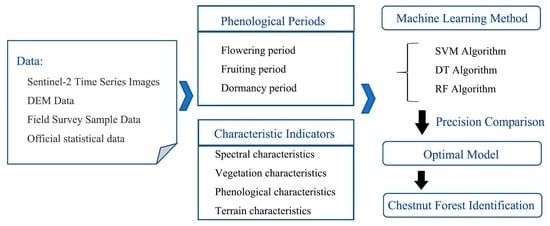

2.3. Methods

2.3.1. Feature Selection and Construction

2.3.2. Machine Learning Classification Method

2.3.3. Accuracy Evaluation

3. Results

3.1. Phenological Characteristic Construction

3.2. Comparison of Identification Methods of Chestnut Forest

3.3. Identification Result and Accuracy Evaluation

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Han, Y.; Xu, L.; Zhou, J. Current situation and trends of Chinese chestnut industry and market development. China Fruits 2021, 4, 83–88.

- Chen, J.; Wei, X.; Liu, Y.; Min, Q.; Liu, R.; Zhang, W.; Guo, C. Extraction of chestnut forest distribution based on multitemporal remote sensing observations. Remote Sens. Technol. Appl. 2020, 35, 1226–1236.

- Su, S.; Lin, L.; Deng, Y.; Lei, H. Synthetic evaluation of chestnut cultivar groups in north China. Nonwood For. Res. 2009, 27, 20–27.

- Liu, X.; Liu, F.; Zhang, J. Analysis on the layout and prospects of chestnut industry in the Beijing Tianjin Hebei region. China Fruits 2022, 2, 99–102.

- Tang, X.; Yuan, Y.; Zhang, X.; Zhang, J. Distribution and environmental factors of Castanea mollissima in soil and water loss areas in China. Bull. Soil Water Conserv. 2021, 41, 345–352.

- Wang, S.; Wang, F.; Li, Y.; Yang, X. Preliminary study on the prevention and control of soil and water loss under the chestnut forest in Miyun district. Soil Water Conserv. China 2020, 6, 64–66.

- Wei, J.; Mao, X.; Fang, B.; Bao, X.; Xu, Z. Submeter remote sensing image recognition of trees based on Landsat 8 OLI support. J. Beijing For. Univ. 2016, 38, 23–33.

- Fu, F.; Wang, X.; Wang, J.; Wang, N.; Tong, J. Tree species and age groups classification based on GF-2 image. Remote Sens. Land Resour. 2019, 31, 118–124.

- Wang, C.; Li, B.; Zhu, R.; Chang, S. Study on tree species identification by combining Sentinel-1 and JL101A images. For. Eng. 2020, 36, 40–48.

- Dong, J.; Xiao, X.; Sheldon, S.; Biradar, C.; Xie, G. Mapping tropical forests and rubber plantations in complex landscapes by integrating PALSAR and MODIS imagery. ISPRS J. Photogramm. Remote Sens. 2012, 74, 20–33.

- Cao, Y.; Chen, E.; Li, S. Estimation of provincial spatial distribution information of forest tree species (group) composition using multi-sources data. Sci. Silvae Sin. 2016, 52, 18–29.

- Yu, X.; Zhuang, D. Monitoring forest phenophases of northeast China based on MODIS NDVI data. Resour. Sci. 2006, 28, 111–117.

- Zhang, Z.; Zhang, Q.; Qiu, X.; Peng, D. Temporal stage and method selection of tree species classification based on GF-2 remote sensing image. Chin. J. Appl. Ecol. 2019, 30, 4059–4070.

- Li, D.; Ke, Y.; Gong, H.; Li, X.; Deng, Z. Urban tree species classification with machine learning classifier using worldview-2 imagery. Geogr. Geo-Inf. Sci. 2016, 32, 84–89+127.

- Verlič, A.; Đurić, N.; Kokalj, Ž.; Marsetič, A.; Simončič, P.; Oštir, K. Tree species classification using WorldView-2 satellite images and laser scanning data in a natural urban forest. Šumarski List. 2014, 138, 477–488.

- Marchetti, F.; Arbelo, M.; Moreno-Ruíz, J.A.; Hernández-Leal, P.A.; Alonso-Benito, A. Multitemporal WorldView satellites imagery for mapping chestnut trees. Conference on Remote Sensing for Agriculture, Ecosystems, and Hydrology XIX. Int. Soc. Opt. Photonics 2017, 10421, 104211Q.

- Zhang, D.; Yang, Y.; Huang, L. Extraction of soybean planting areas combining Sentinel-2 images and optimized feature model. Trans. Chin. Soc. Agric. Eng. 2021, 37, 110–119.

- Zhu, M.; Zhang, L.; Wang, N.; Lin, Y.; Zhang, L.; Wang, S.; Liu, H. Comparative study on UNVI vegetation index and performance based on Sentinel-2. Remote Sens. Technol. Appl. 2021, 36, 936–947.

- Li, Y.; Li, C.; Liu, S.; Ma, T.; Wu, M. Tree species recognition with sentinel-2a multitemporal remote sensing image. J. Northeast. For. Univ. 2021, 49, 44–47, 51.

- Gu, X.; Zhang, Y.; Sang, H.; Zhai, L.; Li, S. Research on crop classification method based on sentinel-2 time series combined vegetation index. Remote Sens. Technol. Appl. 2020, 35, 702–711.

- Jiang, J.; Zhu, W.; Qiao, K.; Jiang, Y. An identification method for mountains coniferous in Tianshan with sentinel-2 data. Remote Sens. Technol. Appl. 2021, 36, 847–856.

- Li, F.; Ren, J.; Wu, S.; Zhang, N.; Zhao, H. Effects of NDVI time series similarity on the mapping accuracy controlled by the total planting area of winter wheat. Trans. Chin. Soc. Agric. Eng. 2021, 37, 127–139.

- Lei, G.; Li, A.; Bian, J.; Zhang, Z.; Zhang, W.; Wu, B. An practical method for automatically identifying the evergreen and deciduous characteristic of forests at mountainous areas: A case study in Mt. Gongga Region. Acta Ecol. Sin. 2014, 34, 7210–7221.

- Wang, X.; Tian, J.; Li, X.; Wang, L.; Gong, H.; Chen, B.; Li, X.; Guo, J. Benefits of Google Earth Engine in remote sensing. Natl. Remote Sens. Bull. 2022, 26, 299–309.

- Li, W.; Tian, J.; Ma, Q.; Jin, X.; Yang, Z.; Yang, P. Dynamic monitoring of loess terraces based on Google Earth Engine and machine learning. J. Zhejiang AF Univ. 2021, 38, 730–736.

- Chen, B.; Xiao, X.; Li, X.; Pan, L.; Doughty, R.; Ma, J.; Dong, J.; Qin, Y.; Zhao, B.; Wu, Z.; et al. A mangrove forest map of China in 2015: Analysis of time series Landsat 7/8 and Sentinel-1A imagery in Google Earth Engine cloud computing platform. ISPRS J. Photogramm. Remote Sens. 2017, 131, 104–120.

- Ghorbanian, A.; Kakooei, M.; Amani, M.; Mahdavi, S.; Mohammadzadeh, A.; Hasanlou, M. Improved land cover map of Iran using Sentinel imagery within Google Earth Engine and a novel automatic workflow for land cover classification using migrated training samples. ISPRS J. Photogramm. Remote Sens. 2020, 167, 276–288.

- Yang, G.; Yu, W.; Yao, X.; Zheng, H.; Cao, Q.; Zhu, Y.; Cao, W.; Cheng, T. AGTOC: A novel approach to winter wheat mapping by automatic generation of training samples and one-class classification on Google Earth Engine. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102446.

- Tian, J.; Wang, L.; Yin, D.; Li, X.; Diao, C.; Gong, H.; Shi, C.; Menenti, M.; Ge, Y.; Nie, S.; et al. Development of spectral-phenological features for deep learning to understand Spartina alterniflora invasion. Remote Sens. Environ. 2020, 242, 111745.

- Ni, R.; Tian, J.; Li, X.; Yin, D.; Li, J.; Gong, H.; Zhang, J.; Zhu, L.; Wu, D. An enhanced pixel-based phenological feature for accurate paddy rice mapping with Sentinel-2 imagery in Google Earth Engine. ISPRS J. Photogramm. Remote Sens. 2021, 178, 282–296.

- Karra, K.; Kontgis, C.; Statman-Weil, Z.; Mazzariello, J.C.; Mathis, M.; Brumby, S.P. Global land use/land cover with Sentinel 2 and deep learning. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 4704–4707.

- Tucker, C. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Xu, H. A Study on Information Extraction of Water Body with the Modified Normalized Difference Water Index (MNDWI). J. Remote Sens. 2005, 9, 590–595.

- Zhi, Y.; Ni, S.; Yang, S. An effective approach to automatically extract urban land-use from TM imagery. J. Remote Sens. 2003, 7, 38–40.

- Guyot, G.; Baret, F.; Major, D. High spectral resolution: Determination of spectral shifts between the red and near infrared. ISPRS Congr. 1988, 11, 740–760.

- Gitelson, A.; Merzlyak, M. Remote estimation of chlorophyll content in higher plant leaves. Int. J. Remote Sens. 1997, 18, 2691–2697.

- Gitelson, A. Wide dynamic range vegetation index for remote quantification of biophysical characteristics of vegetation. J. Plant Physiol. 2004, 161, 165–173.

- Dash, J.; Curran, P. Evaluation of the MERIS terrestrial chlorophyll index (MTCI). Adv. Space Res. 2007, 39, 100–104.

- Huete, A. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

- Merzlyak, M.N.; Gitelson, A.A.; Chivkunova, O.B.; Rakitin, V.Y. Non-destructive optical detection of pigment changes during leaf senescence and fruit ripening. Physiol. Plant. 1999, 106, 135–141.

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.

- Chen, J.; Jönsson, P.; Tamura, M.; Gu, Z.; Matsushita, B.; Eklundh, L. A simple method for reconstructing a high-quality NDVI time-series data set based on the Savitzky-Golay filter. Remote Sens. Environ. 2004, 91, 332–344.

- Wang, Z.; Ma, Z.; Xiong, Z.; Sun, T.; Huang, Z.; Fu, D.; Chen, L.; Xie, F.; Xie, C.; Chen, S. Assessment of multi-spectral imagery and machine learning algorithms for shallow water bathymetry inversion. Trop. Geogr. 2023, 43, 1689–1700.

- Wang, K.; Zhao, J.; Zhu, G. Early estimation of winter wheat planting area in Qingyang City by decision tree and pixel unmixing methods based on GF-1 satellite data. Remote Sens. Technol. Appl. 2018, 33, 158–167.

- Lin, W.; Guo, Z.; Wang, Y. Cost analysis for a crushing-magnetic separation process applied in furniture bulky waste. Recycl. Resour. Circ. Econ. 2019, 12, 30–33.

- Li, L.; Liu, X.; Ou, J. Spatio-temporal changes and mechanism analysis of urban 3D expansion based on random forest model. Geogr. Geo-Inf. Sci. 2019, 35, 53–60.

- Liu, X.; Su, Y.; Hu, T.; Yang, Q.; Liu, B.; Deng, Y.; Tang, H.; Tang, Z.; Fang, J.; Guo, Q. Neural network guided interpolation for mapping canopy height of China’s forests by integrating GEDI and ICESat-2 data. Remote Sens. Environ. 2022, 269, 112844.

- Congalton, R. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 35–46.

- Azzari, G.; Lobell, D. Landsat-based classification in the cloud: An opportunity for a paradigm shift in land cover monitoring. Remote Sens. Environ. 2017, 202, 64–74.

| Type | Training Samples (Polygons/Pixels) | Verification Samples (Polygons/Pixels) | Total (Polygons/Pixels) |
|---|---|---|---|
| Chestnut forest land | 76/6920 | 32/2965 | 108/9885 |
| Cultivated land | 50/5031 | 21/2175 | 71/7206 |
| Deciduous forest land | 54/5815 | 23/2472 | 77/8287 |
| Evergreen forest land | 60/4327 | 26/1804 | 86/6131 |
| Water area | 27/2904 | 11/1234 | 38/4138 |
| Construction land | 42/3918 | 18/1671 | 60/5589 |

| Band | Central Wavelength (μm) | Spatial Resolution (m) |
|---|---|---|
| Band2 (Blue) | 0.490 | 10 |
| Band3 (Green) | 0.560 | 10 |
| Band4 (Red) | 0.665 | 10 |
| Band5 (Red Edge 1) | 0.705 | 20 |
| Band6 (Red Edge 2) | 0.740 | 20 |
| Band7 (Red Edge 3) | 0.783 | 20 |
| Band8 (Near-infrared) | 0.842 | 10 |
| Band8A (Red Edge 4) | 0.865 | 20 |
| Band11 (Shortwave Infrared 1) | 1.610 | 20 |
| Band12 (Shortwave Infrared 2) | 2.190 | 20 |

| Vegetation Index | Calculation Formula | Full Name |
|---|---|---|
| NDVI | NDVI = (NIR − Red)/(NIR + Red) [32] | Normalized difference vegetation index |
| MNDWI | MNDWI = (Green − SWIR)/(Green + SWIR) [33] | Modified normalized difference water index |
| NDBI | NDBI = (SWIR − NIR)/(SWIR + NIR) [34] | Normalized difference built-up index |
| REP | REP = 705 + 35 [0.5(Red + RedEdge3) − RedEdge1]/(RedEdge2 − RedEdge1) [35] | Red-edge position index |
| GNDVI | GNDVI = (NIR − Green)/(NIR + Green) [36] | Green normalized difference vegetation index |
| WDRVI | WDRVI = (0.2NIR − Red)/(0.2NIR + Red) [37] | Wide dynamic range vegetation index |
| MTCI | MTCI = (RedEdge2 − RedEdge1)/(RedEdge1 − Red) [38] | MERIS terrestrial chlorophyll index |
| SAVI | SAVI = 1.5(NIR − Red)/(NIR + Red + 0.5) [39] | Soil-adjusted vegetation index |
| PSRI | PSRI = (Red − Blue)/RedEdge2 [40] | Plant senescence reflectance index |
| EVI | EVI = 2.5(NIR − Red)/(NIR + 6Red − 7.5Blue + 1) [41] | Enhanced vegetation index |
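The index formulas in the table above can be checked on a single pixel with a minimal sketch like the following. Several things here are assumptions rather than details given in the table: NIR is taken as Band8, SWIR as Band11, reflectances are on a 0–1 scale, and REP uses the factor 35 (the 740 − 705 nm spacing between Red Edge 2 and Red Edge 1) from the usual linear-interpolation form. The function name and the sample reflectances are hypothetical.

```python
def vegetation_indices(b):
    """Return the ten indices for one pixel.

    `b` maps Sentinel-2 band names to surface reflectance on a 0-1 scale.
    Assumed band mapping (not stated in the table): NIR = B8, SWIR = B11.
    No guard against zero denominators is included in this sketch.
    """
    blue, green, red = b["B2"], b["B3"], b["B4"]
    re1, re2, re3 = b["B5"], b["B6"], b["B7"]
    nir, swir = b["B8"], b["B11"]
    return {
        "NDVI": (nir - red) / (nir + red),
        "MNDWI": (green - swir) / (green + swir),
        "NDBI": (swir - nir) / (swir + nir),
        # Factor 35 is an assumption: the standard red-edge interpolation step.
        "REP": 705 + 35 * (0.5 * (red + re3) - re1) / (re2 - re1),
        "GNDVI": (nir - green) / (nir + green),
        "WDRVI": (0.2 * nir - red) / (0.2 * nir + red),
        "MTCI": (re2 - re1) / (re1 - red),
        "SAVI": 1.5 * (nir - red) / (nir + red + 0.5),
        "PSRI": (red - blue) / re2,
        "EVI": 2.5 * (nir - red) / (nir + 6 * red - 7.5 * blue + 1),
    }


# Hypothetical reflectances for a dense green canopy pixel.
pixel = {"B2": 0.04, "B3": 0.08, "B4": 0.05, "B5": 0.10,
         "B6": 0.30, "B7": 0.40, "B8": 0.45, "B11": 0.20}

for name, value in vegetation_indices(pixel).items():
    print(f"{name:6s} {value:9.4f}")
```

On imagery, the same expressions would be applied per pixel to each composite; pixels where a denominator is zero (for example water or shadow) would need masking first.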

| Phenological Period | Indicators in Each Phenological Period | Phenological Combinations |
|---|---|---|
| Flowering period | B4, B8, B12, EVI, NDVI, PSRI, MTCI | fB4, fB8, fB12, fEVI, fNDVI, fPSRI, fMTCI |
| Fruiting period | B2, B3, B8A, MNDWI, WDRVI, REP | uB2, uB3, uB8A, uMNDWI, uWDRVI, uREP |
| Dormancy period | B5, B6, B7, B11, GNDVI, NDBI, SAVI | rB5, rB6, rB7, rB11, rGNDVI, rNDBI, rSAVI |

| Num | Characteristic Combination | Involved Indicators |
|---|---|---|
| ① | spectral characteristics + vegetation characteristics | B2, B3, B4, B5, B6, B7, B8, B8A, B11, B12, NDVI, MNDWI, NDBI, REP, GNDVI, WDRVI, MTCI, SAVI, PSRI, EVI |
| ② | spectral characteristics + vegetation characteristics + phenological characteristics | fB4, fB8, fB12, fEVI, fNDVI, fPSRI, fMTCI, uB2, uB3, uB8A, uMNDWI, uWDRVI, uREP, rB5, rB6, rB7, rB11, rGNDVI, rNDBI, rSAVI |
| ③ | spectral characteristics + vegetation characteristics + terrain characteristics | B2, B3, B4, B5, B6, B7, B8, B8A, B11, B12, NDVI, MNDWI, NDBI, REP, GNDVI, WDRVI, MTCI, SAVI, PSRI, EVI, elevation, slope, aspect |
| ④ | spectral characteristics + vegetation characteristics + phenological characteristics + terrain characteristics | fB4, fB8, fB12, fEVI, fNDVI, fPSRI, fMTCI, uB2, uB3, uB8A, uMNDWI, uWDRVI, uREP, rB5, rB6, rB7, rB11, rGNDVI, rNDBI, rSAVI, elevation, slope, aspect |
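The four characteristic combinations above follow mechanically from the band list, the index list, and the phenological assignment (prefix `f` for flowering, `u` for fruiting, `r` for dormancy). The sketch below rebuilds them as plain lists; the variable names are hypothetical, and the final check simply confirms that every band and index is assigned to exactly one phenological period, as the tables imply.

```python
BANDS = ["B2", "B3", "B4", "B5", "B6", "B7", "B8", "B8A", "B11", "B12"]
INDICES = ["NDVI", "MNDWI", "NDBI", "REP", "GNDVI",
           "WDRVI", "MTCI", "SAVI", "PSRI", "EVI"]
TERRAIN = ["elevation", "slope", "aspect"]

# Indicators kept for each key phenological period, keyed by feature prefix.
PERIODS = {
    "f": ["B4", "B8", "B12", "EVI", "NDVI", "PSRI", "MTCI"],    # flowering
    "u": ["B2", "B3", "B8A", "MNDWI", "WDRVI", "REP"],          # fruiting
    "r": ["B5", "B6", "B7", "B11", "GNDVI", "NDBI", "SAVI"],    # dormancy
}

# Phenological feature names such as "fNDVI" or "rSAVI".
PHENOLOGICAL = [prefix + name
                for prefix, names in PERIODS.items()
                for name in names]

SCHEMES = {
    1: BANDS + INDICES,
    2: PHENOLOGICAL,
    3: BANDS + INDICES + TERRAIN,
    4: PHENOLOGICAL + TERRAIN,
}

# Each band and index should appear in exactly one phenological period.
assigned = [name for names in PERIODS.values() for name in names]
assert sorted(assigned) == sorted(BANDS + INDICES)

for number, features in SCHEMES.items():
    print(number, len(features), ", ".join(features))
```

Keeping the schemes as ordered name lists makes it straightforward to select the matching columns or image bands when each combination is passed to a classifier.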

| District | Extracted Area in This Study, km² (Percentage) | Official Statistics Area in 2020, km² (Percentage) |
|---|---|---|
| Huairou | 152.60 (47.56%) | 146.67 (41.06%) |
| Miyun | 168.25 (52.44%) | 210.50 (58.94%) |
| Huairou and Miyun | 320.85 | 357.17 |
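The percentages in the table above are each district's share of the two-district total, and the gap between the extracted and official areas can be expressed as a relative difference. A small sketch of that arithmetic follows; the helper names are hypothetical, and treating the official statistics as the reference value is an assumption of this example.

```python
extracted = {"Huairou": 152.60, "Miyun": 168.25}   # km², this study
official = {"Huairou": 146.67, "Miyun": 210.50}    # km², 2020 statistics


def shares(areas):
    """Percentage of the combined area contributed by each district."""
    total = sum(areas.values())
    return {name: 100 * value / total for name, value in areas.items()}


def relative_difference(estimate, reference):
    """Signed difference of the estimate from the reference, in percent."""
    return 100 * (estimate - reference) / reference


extracted_shares = shares(extracted)
official_shares = shares(official)

for district in extracted:
    diff = relative_difference(extracted[district], official[district])
    print(f"{district}: extracted share {extracted_shares[district]:.2f}%, "
          f"official share {official_shares[district]:.2f}%, "
          f"relative difference {diff:+.2f}%")

total_diff = relative_difference(sum(extracted.values()), sum(official.values()))
print(f"Both districts: relative difference {total_diff:+.2f}%")
```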

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xiong, N.; Chen, H.; Li, R.; Su, H.; Dai, S.; Wang, J. A Method of Chestnut Forest Identification Based on Time Series and Key Phenology from Sentinel-2. Remote Sens. 2023, 15, 5374. https://doi.org/10.3390/rs15225374
