Machine Learning and Deep Learning for the Built Heritage Analysis: Laser Scanning and UAV-Based Surveying Applications on a Complex Spatial Grid Structure

Abstract

1. Introduction

- The rationalization and speeding up of prevention and maintenance operations, as well as of structural health monitoring, based on the observation and processing of surveying data [5];

- The creation of rapid prototyping techniques and the use of specific representation methods to support scholars and operators (architects, engineers, restorers, historians) in returning faithful models and information to the broader public of users and public administrations involved in the infrastructure management process [6].

Research Aim

- The application of proper data acquisition techniques to ensure the required levels of precision and reliability even in comparison to consolidated works on older constructions;

- The characterization of geometric and spatial complexity for identifying structural elements, joints, and deteriorated components that require intervention or replacement;

- The 3D component reconstruction from the scan data in the BIM environment.

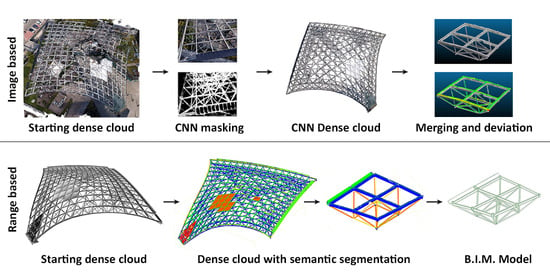

- On the one hand, for the laser scan data, a supervised ML method, the random forest (RF) algorithm, was applied to assist in the classification (by type and by size) of the 3D architectural elements making up the structure, with a multi-level and multi-scale classification approach;

- On the other hand, for the UAV-based photogrammetric processing, a DL model was leveraged for automated masking of the input images to improve and expedite the construction of a 3D dense cloud.

2. State-of-the-Art: Supervised ML and CNN-Based Classification Methods

3. Materials

3.1. La Vela Spatial Grid Structure

3.2. 3D Surveying and Data Acquisition

3.2.1. Topographic Framework

3.2.2. Global Photogrammetric Survey

3.2.3. Detailed Photogrammetric Survey

3.2.4. Range-Based Survey

3.2.5. Data Integration and Validation

4. Methods

4.1. Supervised Machine Learning for the Classification of Laser Scan Data

- The original point cloud is subsampled to provide different levels of geometric resolution;

- At each level of detail, different component classes are identified on a reduced portion of the dataset (training set), and appropriate geometric features are extracted to describe the distribution of 3D points in the point cloud. Together, these data allow for the training of an RF algorithm that returns a classification prediction (class of a 3D point) at the chosen level of resolution;

- Classification results at the lowest level of detail are back-interpolated onto the point cloud at a higher resolution to restore a higher point density. The 3D points classified as belonging to a given class constitute the basis for another classification at the highest level of detail.
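The subsample, classify, and back-interpolate loop described above can be sketched as follows. This is a minimal illustration, not the authors' implementation. The helper names (`voxel_subsample`, `back_interpolate`, `toy_classifier`) are hypothetical, the height-based rule stands in for the trained RF, and the nearest-neighbour search is brute force for readability.

```python
from collections import defaultdict
from math import dist, floor

def voxel_subsample(points, voxel):
    """Keep one representative point (the centroid) per cubic voxel of side `voxel`."""
    cells = defaultdict(list)
    for p in points:
        cells[tuple(floor(c / voxel) for c in p)].append(p)
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts)) for pts in cells.values()]

def back_interpolate(coarse_points, coarse_labels, dense_points):
    """Give every dense point the label of its nearest coarse point (brute force)."""
    labels = []
    for p in dense_points:
        nearest = min(range(len(coarse_points)), key=lambda i: dist(p, coarse_points[i]))
        labels.append(coarse_labels[nearest])
    return labels

def toy_classifier(point):
    """Placeholder for the trained RF (assumption): splits by height only."""
    return "beam" if point[2] > 0.5 else "knot"

# Six points in metres: three at z = 1.0 and three at z = 0.0, spaced 4 mm apart.
dense = [(0.000, 0.0, 1.0), (0.004, 0.0, 1.0), (0.008, 0.0, 1.0),
         (0.000, 0.0, 0.0), (0.004, 0.0, 0.0), (0.008, 0.0, 0.0)]

coarse = voxel_subsample(dense, voxel=0.01)            # coarse level, 1 cm cells
coarse_labels = [toy_classifier(p) for p in coarse]    # classify at low resolution
dense_labels = back_interpolate(coarse, coarse_labels, dense)

# Points of one class become the input of the next, finer classification level.
beams = [p for p, label in zip(dense, dense_labels) if label == "beam"]
```

In practice, the brute-force search would be replaced by a spatial index, and the placeholder rule by a classifier trained on geometric features of the annotated subset.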

- Classifying the entire point cloud at maximum resolution in a single step is very complex. It leads to excessive computational effort and long training times because of the high number of geometric features and the size of the dataset.

- The point cloud would have to be subdivided into an excessive number of semantic classes. This would lead to misclassification issues where classes of components share very similar features.

4.2. Deep Learning for Automated Masking of UAV Images Prior to Photogrammetric Processing

- Labeling. A set of ground-truth images was labeled by assigning a label to each pixel, with this operation being conducted manually on a chosen set of images. This manual masking process was performed via Agisoft Metashape software. The data were then extracted to constitute a specific folder of labeled images associated with the ground-truth images folder (Figure 13).

- Data augmentation. To improve the neural network’s ability to generalize over a larger dataset, data augmentation techniques were envisioned during training. Such techniques involve transformation operations such as translation, rotation, and reflection to generate new augmented samples from the original ones. Data augmentation reduces overfitting by avoiding training on a limited set, preventing the network from storing specific characteristics of the samples.

- Image adjustment strategies. The difference in brightness and weather conditions between one flight mission and another resulted in excessive color variability of the images. For this reason, image filters and adjustments, tuned on a single image and automatically applied to the other images of the same flight mission using Lightroom software, were used to equalize the color of the images and consequently improve the classification result on them.
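The key point of the augmentation step is that each geometric transformation must be applied identically to an image and to its label mask, so that pixels and labels stay aligned. The sketch below illustrates this with plain nested lists. The function names are hypothetical, and the choice of a 90 degree rotation and a one-pixel shift is an assumption made only to keep the example small.

```python
def reflect(grid):
    """Mirror a 2D grid left to right."""
    return [row[::-1] for row in grid]

def rotate90(grid):
    """Rotate a 2D grid by 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]

def translate(grid, dx, fill=0):
    """Shift columns right by dx (left if negative), padding with `fill`."""
    width = len(grid[0])
    dx = max(-width, min(width, dx))  # clamp so slicing stays well defined
    if dx >= 0:
        return [[fill] * dx + row[:width - dx] for row in grid]
    return [row[-dx:] + [fill] * (-dx) for row in grid]

def augment(image, mask):
    """Return the original pair plus three pairs transformed in lockstep."""
    transforms = [reflect, rotate90, lambda g: translate(g, 1)]
    return [(image, mask)] + [(t(image), t(mask)) for t in transforms]

image = [[1, 2], [3, 4]]   # toy pixel values
mask = [[0, 1], [0, 1]]    # toy labels: 1 = structure, 0 = background
pairs = augment(image, mask)
```

Each element of `pairs` is an (image, mask) tuple that can be added to the training set alongside the original sample.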

4.3. Data Fusion and Construction of the BIM Model

5. Results and Discussion

5.1. Multi-Level Classification of Laser Scan Data

- At the first level of classification (lower point cloud resolution, with a relative distance of 1 cm between points of the 3D point cloud), the different components of La Vela were divided at a macro-architectonic scale by distinguishing tubular beams, rectangular-section beams, spherical and cylindrical knots, aluminum profiles, photovoltaic panels, skylights and electrical enclosures, steel cables, gutter, roofing panels, and glazing;

- At the second level of classification (point cloud processed to a 0.5 cm resolution), subparts of the electrical and lighting system were distinguished from structural elements, while nodes were classified according to their cross section (cylindrical or spherical);

- At the third classification level (higher point cloud resolution of 0.2 cm), each mesh’s most significant structural elements were distinguished based on their size and, in particular, their diameter.

5.2. Classification of UAV Images and Improvement of the Photogrammetric Point Cloud

- (i) Images only from flight 4 (152 manual masks);

- (ii) Images from flight 4 + additional images from other flights (277 manual masks);

- (iii) The same set of training images as (ii), with the application of image adjustments for color correction.

5.3. Data Fusion and Construction of the BIM Model

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| AI | Artificial intelligence |
| BIM | Building information modeling |
| CNNs | Convolutional neural networks |
| ETFE | Ethylene-tetrafluoroethylene copolymer |
| GCPs | Ground control points |
| DL | Deep learning |
| LoR | Level of reliability |
| ML | Machine learning |
| ML-MR | Multi-Level and Multi-Resolution |
| PPK | Post-processed kinematic |
| RF | Random forest |
| RPAS | Remotely piloted aircraft system |
| TLS | Terrestrial laser scanning |
| UAV | Unmanned aerial vehicles |
| UAS | Unmanned aerial systems |
| 2D | Bi-dimensional |
| 3D | Three-dimensional |

Appendix A

Appendix B

| Tubular Beam | Diameter Derived from Surveying (cm) | Original Project Diameter (cm) | Error (cm) | Error (%) |
|---|---|---|---|---|
| 1 | 6.2 | 6.0 | 0.20 | 3% |
| 2 | 6.6 | 6.0 | 0.60 | 10% |
| 3 | 11.6 | 11.6 | 0.00 | 0% |
| 4 | 8.0 | 8.8 | 0.80 | 9% |
| 5 | 11.9 | 11.6 | 0.30 | 3% |
| 6 | 11.5 | 11.6 | 0.10 | 1% |
| 7 | 7.5 | 7.6 | 0.10 | 1% |
| 8 | 11.6 | 11.6 | 0.00 | 0% |
| 9 | 11.8 | 11.6 | 0.20 | 2% |
| 10 | 8.8 | 8.8 | 0.00 | 0% |
| 11 | 9.1 | 8.8 | 0.30 | 3% |
| 12 | 11.6 | 11.6 | 0.00 | 0% |
| 13 | 11.7 | 11.6 | 0.10 | 1% |
| 14 | 11.5 | 11.6 | 0.10 | 1% |
| 15 | 11.7 | 11.6 | 0.10 | 1% |
| 16 | 11.8 | 11.6 | 0.20 | 2% |
| 17 | 11.7 | 11.6 | 0.10 | 1% |
| 18 | 9.2 | 8.8 | 0.40 | 5% |
| 19 | 11.7 | 11.6 | 0.10 | 1% |
| 20 | 11.6 | 11.6 | 0.00 | 0% |
| 21 | 11.7 | 11.6 | 0.10 | 1% |

| Tubular Beam | Diameter Derived from Surveying (cm) | Original Project Diameter (cm) | Error (cm) | Error (%) |
|---|---|---|---|---|
| 1 | 20.0 | 20.0 | 0.0 | 0% |
| 2 | 15.6 | 15.4 | 0.2 | 1% |
| 3 | 21.3 | 22.0 | 0.7 | 3% |
| 4 | 15.8 | 15.4 | 0.4 | 3% |
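The error columns in the tables above are consistent with an absolute difference between surveyed and project diameter, expressed as a percentage of the project diameter and rounded to the nearest integer. The sketch below reproduces a few rows under that assumption. The function name `diameter_error` is hypothetical, and the rounding rule is inferred from the tabulated values rather than stated in the text.

```python
def diameter_error(surveyed_cm, project_cm):
    """Return (absolute error in cm, percentage error relative to the project diameter)."""
    abs_err = round(abs(surveyed_cm - project_cm), 2)
    pct_err = round(100 * abs_err / project_cm)
    return abs_err, pct_err

# (surveyed, project) pairs taken from the two tables above.
rows = [(6.2, 6.0), (6.6, 6.0), (8.0, 8.8), (9.2, 8.8), (21.3, 22.0), (15.8, 15.4)]
errors = [diameter_error(s, p) for s, p in rows]
```

For example, a surveyed diameter of 6.6 cm against a project diameter of 6.0 cm gives an error of 0.6 cm, or 10% of the project value.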

References

- Bevilacqua, M.G.; Caroti, G.; Piemonte, A.; Terranova, A.A. Digital Technology and Mechatronic Systems for the Architectural 3D Metric Survey. In Mechatronics for Cultural Heritage and Civil Engineering; Ottaviano, E., Pelliccio, A., Gattulli, V., Eds.; Intelligent Systems, Control and Automation: Science and Engineering; Springer International Publishing: Cham, Switzerland, 2018; Volume 92, pp. 161–180. ISBN 978-3-319-68645-5.

- Fiorucci, M.; Khoroshiltseva, M.; Pontil, M.; Traviglia, A.; Del Bue, A.; James, S. Machine Learning for Cultural Heritage: A Survey. Pattern Recognit. Lett. 2020, 133, 102–108.

- Bassier, M.; Vergauwen, M.; Van Genechten, B. Automated Classification of Heritage Buildings for As-Built BIM Using Machine Learning Techniques. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, IV-2/W2, 25–30.

- Gui, G.; Pan, H.; Lin, Z.; Li, Y.; Yuan, Z. Data-Driven Support Vector Machine with Optimization Techniques for Structural Health Monitoring and Damage Detection. KSCE J. Civ. Eng. 2017, 21, 523–534.

- Diez, A.; Khoa, N.L.D.; Makki Alamdari, M.; Wang, Y.; Chen, F.; Runcie, P. A Clustering Approach for Structural Health Monitoring on Bridges. J. Civ. Struct. Health Monit. 2016, 6, 429–445.

- Spencer, B.F.; Hoskere, V.; Narazaki, Y. Advances in Computer Vision-Based Civil Infrastructure Inspection and Monitoring. Engineering 2019, 5, 199–222.

- Ye, X.W.; Jin, T.; Yun, C.B. A Review on Deep Learning-Based Structural Health Monitoring of Civil Infrastructures. Smart Struct. Syst. 2019, 24, 567–585.

- Avci, O.; Abdeljaber, O.; Kiranyaz, S.; Hussein, M.; Gabbouj, M.; Inman, D.J. A Review of Vibration-Based Damage Detection in Civil Structures: From Traditional Methods to Machine Learning and Deep Learning Applications. Mech. Syst. Signal Process. 2021, 147, 107077.

- De Fino, M.; Galantucci, R.A.; Fatiguso, F. Mapping and Monitoring Building Decay Patterns by Photomodelling Based 3D Models. TEMA: Technol. Eng. Mater. Archit. 2019, 5, 27–35.

- Adamopoulos, E. Learning-Based Classification of Multispectral Images for Deterioration Mapping of Historic Structures. J. Build. Rehabil. 2021, 6, 41.

- Musicco, A.; Galantucci, R.A.; Bruno, S.; Verdoscia, C.; Fatiguso, F. Automatic Point Cloud Segmentation for the Detection of Alterations on Historical Buildings through an Unsupervised and Clustering-Based Machine Learning Approach. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, V-2–2021, 129–136.

- Pocobelli, D.P.; Boehm, J.; Bryan, P.; Still, J.; Grau-Bové, J. Building Information Modeling for Monitoring and Simulation Data in Heritage Buildings. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, XLII–2, 909–916.

- Croce, V.; Caroti, G.; De Luca, L.; Jacquot, K.; Piemonte, A.; Véron, P. From the Semantic Point Cloud to Heritage-Building Information Modeling: A Semiautomatic Approach Exploiting Machine Learning. Remote Sens. 2021, 13, 461.

- Croce, V.; Caroti, G.; De Luca, L.; Piemonte, A.; Véron, P. Semantic Annotations on Heritage Models: 2D/3D Approaches and Future Research Challenges. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, XLIII-B2-2020, 829–836.

- Russo, M.; Russo, V. Geometric Analysis of a Space Grid Structure by an Integrated Survey Approach. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, XLVI-2/W1-2022, 465–472.

- Rossini, G. Le Strutture Reticolari. Storia, Definizioni e Metodi Di Analisi Esempi Significativi in Architettura. Ph.D. Thesis, Sapienza Università di Roma, Rome, Italy, 2017.

- Sicignano, C. Le Strutture Tensegrali e La Loro Applicazione in Architettura. Ph.D. Thesis, Università degli Studi di Napoli Federico II, Naples, Italy, 2017.

- Wei, Y.; Liu, S.; Rao, Y.; Zhao, W.; Lu, J.; Zhou, J. NerfingMVS: Guided Optimization of Neural Radiance Fields for Indoor Multi-View Stereo. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 5590–5599.

- Liu, Y.-F.; Liu, X.-G.; Fan, J.-S.; Spencer, B.F.; Wei, X.-C.; Kong, S.-Y.; Guo, X.-H. Refined Safety Assessment of Steel Grid Structures with Crooked Tubular Members. Autom. Constr. 2019, 99, 249–264.

- Jordan-Palomar, I.; Tzortzopoulos, P.; Garc, J.; Pellicer, E. Protocol to Manage Heritage-Building Interventions Using Heritage Building Information Modelling (HBIM). Sustainability 2018, 10, 908.

- Flah, M.; Nunez, I.; Ben Chaabene, W.; Nehdi, M.L. Machine Learning Algorithms in Civil Structural Health Monitoring: A Systematic Review. Arch. Comput. Methods Eng. 2021, 28, 2621–2643.

- Barrile, V.; Meduri, G.; Bilotta, G. Laser Scanner Surveying Techniques Aiming to the Study and the Spreading of Recent Architectural Structures. In Proceedings of the 9th WSEAS International Conference on Signal, Speech and Image Processing, and 9th WSEAS International Conference on Multimedia, Internet & Video Technologies, Budapest, Hungary, 3–5 September 2009; World Scientific and Engineering Academy and Society (WSEAS): Stevens Point, WI, USA, 2009; pp. 92–95.

- Pereira, Á.; Cabaleiro, M.; Conde, B.; Sánchez-Rodríguez, A. Automatic Identification and Geometrical Modeling of Steel Rivets of Historical Structures from Lidar Data. Remote Sens. 2021, 13, 2108.

- Yang, L.; Cheng, J.C.P.; Wang, Q. Semi-Automated Generation of Parametric BIM for Steel Structures Based on Terrestrial Laser Scanning Data. Autom. Constr. 2020, 112, 103037.

- Morioka, K.; Ohtake, Y.; Suzuki, H. Reconstruction of Wire Structures from Scanned Point Clouds. In Advances in Visual Computing; Bebis, G., Boyle, R., Parvin, B., Koracin, D., Li, B., Porikli, F., Zordan, V., Klosowski, J., Coquillart, S., Luo, X., et al., Eds.; Lecture Notes in Computer Science; Springer Berlin Heidelberg: Berlin/Heidelberg, Germany, 2013; Volume 8033, pp. 427–436. ISBN 978-3-642-41913-3.

- Leonov, A.V.; Anikushkin, M.N.; Ivanov, A.V.; Ovcharov, S.V.; Bobkov, A.E.; Baturin, Y.M. Laser Scanning and 3D Modeling of the Shukhov Hyperboloid Tower in Moscow. J. Cult. Herit. 2015, 16, 551–559.

- Bernardello, R.A.; Borin, P. Form Follows Function in a Hyperboloidical Cooling Tower. Nexus Netw. J. 2022, 24, 587–601.

- Knyaz, V.A.; Kniaz, V.V.; Remondino, F.; Zheltov, S.Y.; Gruen, A. 3D Reconstruction of a Complex Grid Structure Combining UAS Images and Deep Learning. Remote Sens. 2020, 12, 3128.

- Candela, G.; Barrile, V.; Demartino, C.; Monti, G. Image-Based 3d Reconstruction of a Glubam-Steel Spatial Truss Structure Using Mini-UAV. In Modern Engineered Bamboo Structures; Xiao, Y., Li, Z., Liu, K.W., Eds.; CRC Press: Boca Raton, FL, USA, 2019; pp. 217–222. ISBN 978-0-429-43499-0.

- Achille, C.; Adami, A.; Chiarini, S.; Cremonesi, S.; Fassi, F.; Fregonese, L.; Taffurelli, L. UAV-Based Photogrammetry and Integrated Technologies for Architectural Applications—Methodological Strategies for the After-Quake Survey of Vertical Structures in Mantua (Italy). Sensors 2015, 15, 15520–15539.

- McMinn Mitchell, E. Creating a 3D Model of the Famous Budapest Chain Bridge; GIM International (Online Resource): Latina, Italy, 2022.

- Hofer, M.; Wendel, A.; Bischof, H. Line-Based 3D Reconstruction of Wiry Objects. In Proceedings of the 18th Computer Vision Winter Workshop, Hernstein, Austria, 4 February 2013.

- Shah, G.A.; Polette, A.; Pernot, J.-P.; Giannini, F.; Monti, M. Simulated Annealing-Based Fitting of CAD Models to Point Clouds of Mechanical Parts’ Assemblies. Eng. Comput. 2020, 37, 2891–2909.

- Croce, P.; Landi, F.; Puccini, B.; Martino, M.; Maneo, A. Parametric HBIM Procedure for the Structural Evaluation of Heritage Masonry Buildings. Buildings 2022, 12, 194.

- Grilli, E.; Remondino, F. Classification of 3D Digital Heritage. Remote Sens. 2019, 11, 847.

- Özdemir, E.; Remondino, F.; Golkar, A. Aerial Point Cloud Classification with Deep Learning and Machine Learning Algorithms. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-4/W18, 843–849.

- Kyriakaki-Grammatikaki, S.; Stathopoulou, E.K.; Grilli, E.; Remondino, F.; Georgopoulos, A. Geometric Primitive Extraction from Semantically Enriched Point Clouds. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, XLVI-2/W1-2022, 291–298.

- Kaiser, A.; Ybanez Zepeda, J.A.; Boubekeur, T. A Survey of Simple Geometric Primitives Detection Methods for Captured 3D Data. Comput. Graph. Forum 2019, 38, 167–196.

- Galantucci, R.A.; Fatiguso, F.; Galantucci, L.M. A Proposal for a New Standard Quantification of Damages of Cultural Heritages, Based on 3D Scanning. SCIRES-IT—Sci. Res. Inf. Technol. 2018, 8, 121–138.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Ni, H.; Lin, X.; Zhang, J. Classification of ALS Point Cloud with Improved Point Cloud Segmentation and Random Forests. Remote Sens. 2017, 9, 288.

- Weinmann, M.; Jutzi, B.; Mallet, C.; Weinmann, M. Geometric Features and Their Relevance for 3D Point Cloud Classification. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, IV-1/W1, 157–164.

- Hackel, T.; Wegner, J.D.; Schindler, K. Fast Semantic Segmentation of 3D Point Clouds with Strongly Varying Density. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 3, 177–184.

- Landes, T. Contribution à la segmentation et à la modélisation 3D du milieu urbain à partir de nuages de points. Ph.D. Thesis, Université de Strasbourg, Strasbourg, France, 2020.

- Matrone, F.; Lingua, A.; Pierdicca, R.; Malinverni, E.S.; Paolanti, M.; Grilli, E.; Remondino, F.; Murtiyoso, A.; Landes, T. A Benchmark for Large-Scale Heritage Point Cloud Semantic Segmentation. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, XLIII-B2-2020, 1419–1426.

- Matrone, F.; Grilli, E.; Martini, M.; Paolanti, M.; Pierdicca, R.; Remondino, F. Comparing Machine and Deep Learning Methods for Large 3D Heritage Semantic Segmentation. IJGI 2020, 9, 535.

- Teruggi, S.; Grilli, E.; Russo, M.; Fassi, F.; Remondino, F. A Hierarchical Machine Learning Approach for Multi-Level and Multi-Resolution 3D Point Cloud Classification. Remote Sens. 2020, 12, 2598.

| Level of Reliability | Deviation |
|---|---|
| High | ≤10 mm |
| Medium | 10 mm < x ≤ 20 mm |
| Low | >20 mm |
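The thresholds in the table above translate directly into a small lookup. The sketch below is an illustration only, and the function name `level_of_reliability` is hypothetical.

```python
def level_of_reliability(deviation_mm):
    """Map a model-to-survey deviation in millimetres to a level of reliability (LoR)."""
    if deviation_mm <= 10:
        return "High"
    if deviation_mm <= 20:
        return "Medium"
    return "Low"

# Boundary values fall in the lower band: 10 mm is High, 20 mm is Medium.
levels = {d: level_of_reliability(d) for d in (4, 10, 13, 20, 40)}
```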

| ML-MR Classification via Machine Learning | Minutes |
|---|---|
| 1. Creation of the training set | 120 |
| 2. Feature extraction and selection | 60 |
| 3. RF training | 60 |
| 4. Back interpolation | 30 |
| Total time required | 270 (4.5 h) |

| AI Masking | Minutes | Working Days * |
|---|---|---|
| 1. Creation of the training set | 9695 | 21 |
| 2. Editing in Lightroom | 80 | - |
| 3. Deep learning network training | 270 | - |
| 4. Mask rectification | 135 | - |
| Total time required | 10,180 | 21 |

| Manual masking | ≈100 working days (Manual single image annotation ≈ 35 min) |
| AI masking | ≈21 working days |
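The figures in the timing tables can be cross-checked with a few lines of arithmetic. One observation, offered as an inference rather than something the tables state: 9695 minutes equals 277 manual masks (the size of training set (ii)) at about 35 minutes per mask. The variable names below are hypothetical.

```python
MINUTES_PER_MASK = 35    # manual annotation time per image, from the table above
MANUAL_MASKS = 277       # size of training set (ii); assumed to be the set timed here

ai_masking_minutes = {
    "training set creation": MANUAL_MASKS * MINUTES_PER_MASK,
    "editing in Lightroom": 80,
    "deep learning network training": 270,
    "mask rectification": 135,
}
total_minutes = sum(ai_masking_minutes.values())

# Relative saving in working days: about 21 days with AI masking versus about 100 manually.
saving = 1 - 21 / 100
```

Under these figures the training set accounts for almost all of the AI masking time, and the overall saving is roughly 79% of the working days needed for fully manual masking.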

| Element | Deviation from Survey Data (mm) | Level of Reliability |
|---|---|---|
| Rectangular beams | 10 | High |
| Tubular beams | 4 | High |
| Spherical knots | 13 | Medium |
| Cylindrical knots | 40 | Low |
| Gutter | 15 | Medium |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Billi, D.; Croce, V.; Bevilacqua, M.G.; Caroti, G.; Pasqualetti, A.; Piemonte, A.; Russo, M. Machine Learning and Deep Learning for the Built Heritage Analysis: Laser Scanning and UAV-Based Surveying Applications on a Complex Spatial Grid Structure. Remote Sens. 2023, 15, 1961. https://doi.org/10.3390/rs15081961
